Time domain prediction-based saliency extraction method

An attention-degree (saliency) extraction technology applied in the field of video analysis. It addresses two problems: existing attention extraction methods are highly complex, and they cannot be applied to real-time video coding. The method reduces computational complexity and balances accuracy against real-time performance.

Embodiment Construction

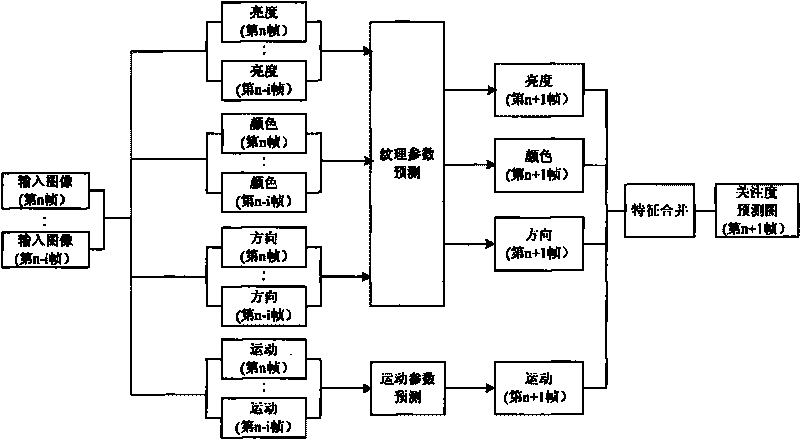

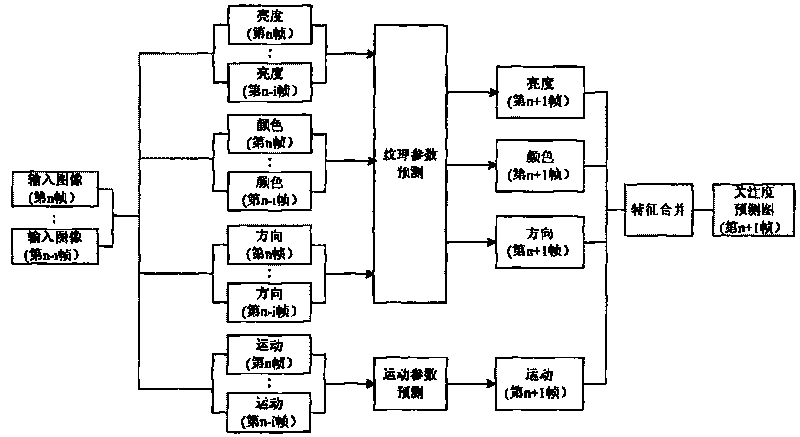

[0016] The invention discloses an attention degree extraction method based on time-domain prediction. The basic principle is to exploit the time-domain correlation of the texture visual features and motion parameter features of the attention area: the attention prediction map of the next frame is calculated from the attention maps of the current frame and at least one adjacent previous frame, which reduces the computational complexity.
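The idea of predicting the next frame's attention map from the maps of the current and previous frames can be illustrated with a minimal sketch. The visible text does not give the prediction formula, so everything here is an assumption made for illustration: the function name `predict_next_attention`, the damping parameter `alpha`, and the per-pixel linear extrapolation itself. The sketch also ignores the motion parameter features mentioned above and treats attention values as floats in [0, 1].

```python
from typing import List

# Attention map: row-major grid of attention values in [0, 1] (assumed range).
Map = List[List[float]]


def predict_next_attention(prev: Map, curr: Map, alpha: float = 0.5) -> Map:
    """Hypothetical predictor, not the patented formula.

    Extrapolates each pixel's attention along its recent trend
    (curr - prev), damped by alpha, and clamps the result to [0, 1].
    """
    if len(prev) != len(curr) or any(
        len(p_row) != len(c_row) for p_row, c_row in zip(prev, curr)
    ):
        raise ValueError("attention maps must have the same shape")

    predicted: Map = []
    for prev_row, curr_row in zip(prev, curr):
        row = []
        for p, c in zip(prev_row, curr_row):
            value = c + alpha * (c - p)  # damped linear extrapolation
            row.append(min(1.0, max(0.0, value)))  # keep within [0, 1]
        predicted.append(row)
    return predicted


if __name__ == "__main__":
    previous = [[0.0, 0.5], [1.0, 0.2]]
    current = [[0.2, 0.5], [0.8, 0.2]]
    print(predict_next_attention(previous, current))
```

The point of a predictor like this is cost: it needs one pass over two existing maps, whereas extracting a fresh attention map requires analysing the texture and motion features of the new frame.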

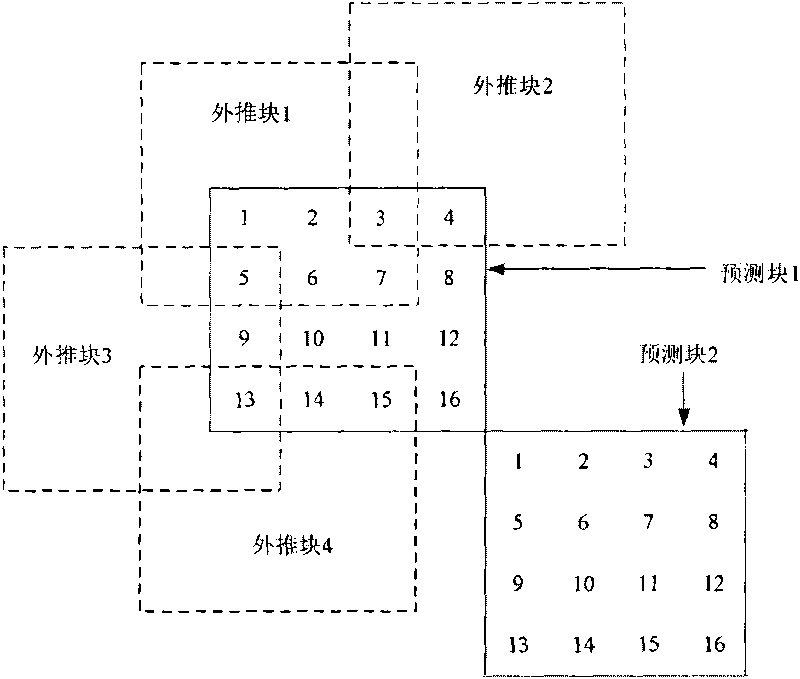

[0017] Embodiments of the invention are described below with reference to the drawings. The detailed description of the embodiments, read together with the accompanying drawings, will make the advantages and features of the present invention, as well as its implementation methods, clearer to those skilled in the art. However, the scope of the present invention is not limited to the embodiments disclosed in the specification, and the invention can also be implemented in other forms. Texture visual features may include various features, among whi...
