Motion synthesizing and editing method based on motion capture data in computer bone animation

A motion capture and skeletal animation technology applied in computing, animation production, image data processing and related fields. It addresses problems such as the difficulty of expanding motion data sets, and achieves strong expressive ability.

Embodiment Construction

[0034] The present invention is described in further detail below with reference to the accompanying drawing and an example:

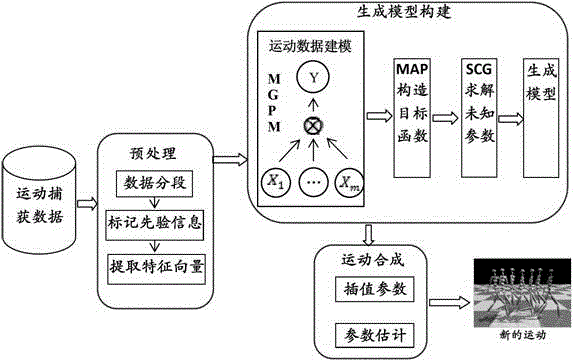

[0035] The implementation of the present invention consists of four main steps: (1) preprocessing the motion data and labeling prior information; (2) defining a random process and specifying the kernel function of each factor; (3) constructing an objective function and solving for the unknown parameters; and (4) constructing a generative model and using it to synthesize and edit motion. Figure 1 shows a schematic diagram of the overall process of the present invention.
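Step (2) assigns a kernel function to each factor, but this excerpt does not say which kernels are used or how they are combined. The sketch below assumes the random process is a Gaussian process and that each factor gets its own squared-exponential (RBF) kernel, with the per-factor kernels multiplied elementwise, the product-of-kernels construction used in multifactor Gaussian process models. The factor names (`pose`, `style`), the RBF choice, and the hyperparameters are all hypothetical illustrations, not the patent's method.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0, variance=1.0):
    """Squared-exponential kernel between row sets a (n, d) and b (m, d)."""
    a = np.atleast_2d(a)
    b = np.atleast_2d(b)
    sq_dist = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    sq_dist = np.maximum(sq_dist, 0.0)  # guard against tiny negative round-off
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)

def multifactor_kernel(factors_a, factors_b, params):
    """Hypothetical product kernel: one RBF per factor, multiplied elementwise.

    By the Schur product theorem, a product of positive semidefinite
    kernels is again positive semidefinite, so the result is a valid
    Gaussian process covariance.
    """
    k = None
    for fa, fb, p in zip(factors_a, factors_b, params):
        kf = rbf_kernel(fa, fb, **p)
        k = kf if k is None else k * kf
    return k

# Usage with two hypothetical factors for n = 5 frames.
rng = np.random.default_rng(0)
n = 5
pose = rng.normal(size=(n, 2))    # hypothetical latent pose coordinates
style = rng.normal(size=(n, 1))   # hypothetical style coordinates
params = [{"length_scale": 1.0}, {"length_scale": 0.5}]
K = multifactor_kernel([pose, style], [pose, style], params)
K += 1e-6 * np.eye(n)             # small jitter for numerical stability
```

Multiplying the per-factor kernels means two frames are strongly correlated only when they are close in every factor at once, which is one common way to keep factors such as content and style separable; other combinations (for example, sums of kernels) are equally possible under the text as given.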

[0036] Step 1: Motion data preprocessing and prior information labeling:

[0037] The first stage: motion data preprocessing:

[0038] The main task of the motion data preprocessing stage is to compute the feature vector corresponding to each frame of the motion data. Suppose the given training motion data set is $Q=\{Q_j \mid j=1,2,\ldots,J\}$, where $J$ is the total numb...
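The definition of the per-frame feature vector is cut off in this excerpt. As a minimal sketch only, assuming each clip $Q_j$ is stored as an array of shape (frames, joints, 3) holding joint positions, and assuming a hypothetical feature made of root-relative joint positions plus frame-to-frame velocities, the preprocessing pass over the training set could look like this. The array layout, the root-joint index, and the feature definition are all assumptions, not the patent's.

```python
import numpy as np

def frame_features(clip, root_index=0):
    """Hypothetical per-frame features for one clip of shape (T, n_joints, 3).

    Each frame's vector concatenates root-relative joint positions with
    their frame-to-frame velocities (the first frame's velocity is zero).
    """
    clip = np.asarray(clip, dtype=float)
    rel = clip - clip[:, root_index:root_index + 1, :]  # remove global translation
    vel = np.zeros_like(rel)
    vel[1:] = rel[1:] - rel[:-1]                        # backward difference
    t = clip.shape[0]
    return np.concatenate([rel.reshape(t, -1), vel.reshape(t, -1)], axis=1)

def preprocess(dataset):
    """Map the training set Q = [Q_1, ..., Q_J] to per-frame feature matrices."""
    return [frame_features(q) for q in dataset]

# Usage: J = 2 toy clips with 4 joints each.
rng = np.random.default_rng(1)
Q = [rng.normal(size=(10, 4, 3)), rng.normal(size=(7, 4, 3))]
features = preprocess(Q)  # one (T_j, 24) matrix per clip
```

Each clip keeps its own frame count, so the result is a list of matrices rather than one stacked array; stacking them row-wise would give one feature vector per frame across the whole data set if a later step needs that.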
