Stereoscopic video visual comfort evaluation method based on region segmentation

A stereoscopic video and region segmentation technique, applied in stereoscopic systems, television, electrical components, and similar fields, that addresses problems such as a lack of unification and helps accelerate development.

Detailed Description of the Embodiments

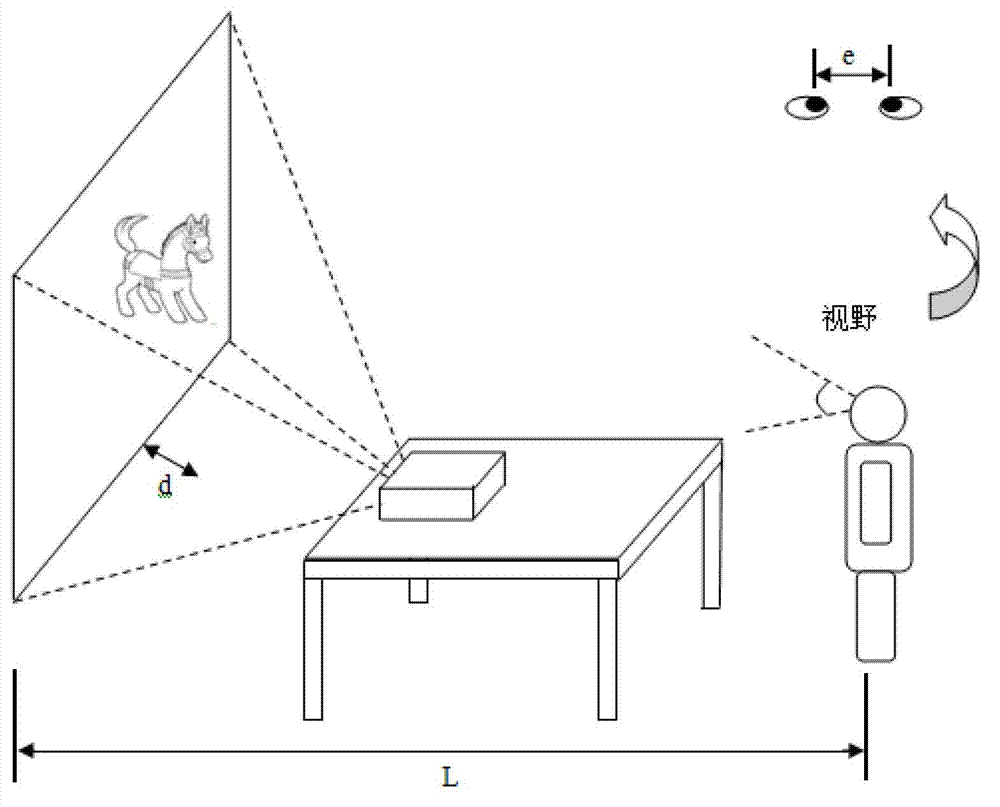

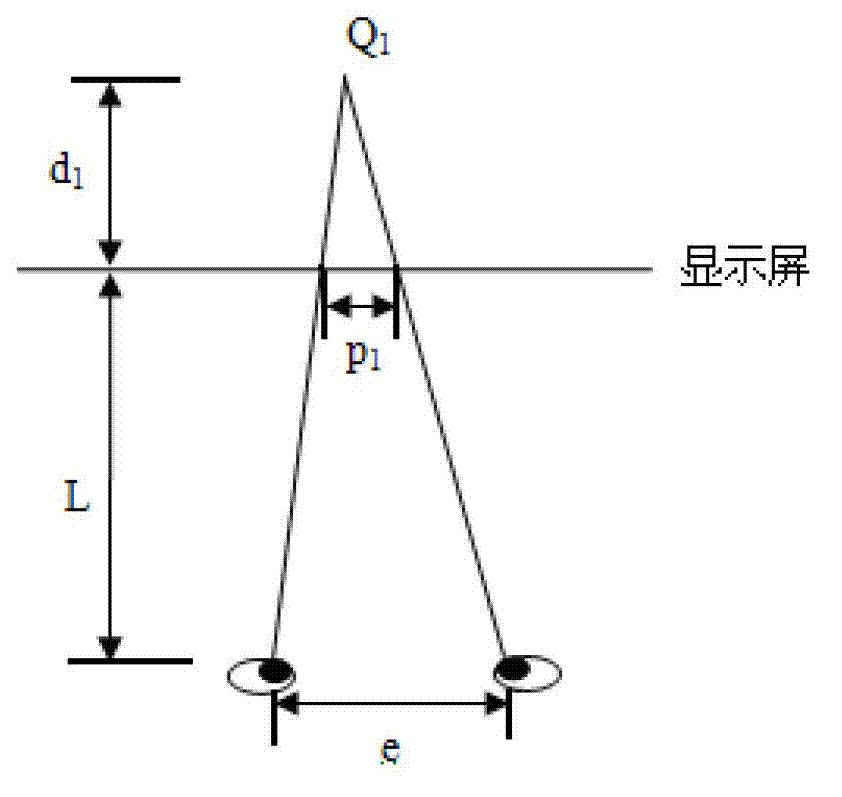

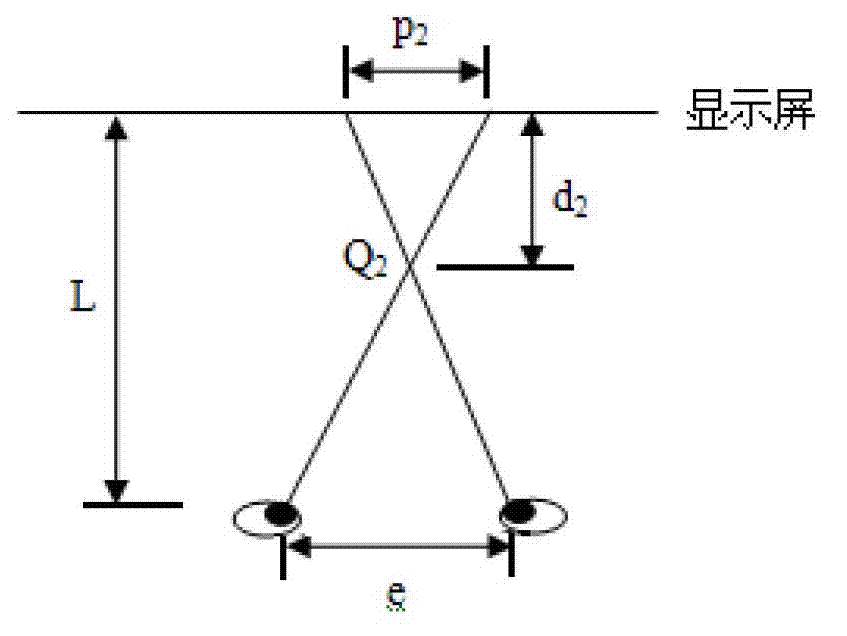

[0040] The present invention is described in further detail below with reference to the accompanying drawings. The implementation is presented in two parts: calibration of the projection equipment parameters and evaluation of stereoscopic video comfort.

[0041] 1. Calibration of projection equipment parameters

[0042] Differences in experimental equipment and experimental conditions make it difficult to guarantee the accuracy of comfort evaluation when observing stereoscopic video, so the parameters of the projection equipment must be calibrated first. To do this, the present invention runs a human subjective evaluation, compares the characteristic curves of the factors that affect the visual comfort rating (such as visual fatigue, physical discomfort, and inattention) against the subjective evaluation results, and establishes the visual comfort evaluation model i...
