Audio and video dual mode-based spoken language learning monitoring method

A dual-mode audio-video technology, applied to spoken language learning monitoring, addresses the problems of unavailable student guidance, limited teacher resources, and high labor costs. It improves learning efficiency and reduces dependence on teacher resources.


Embodiment Construction

[0013] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

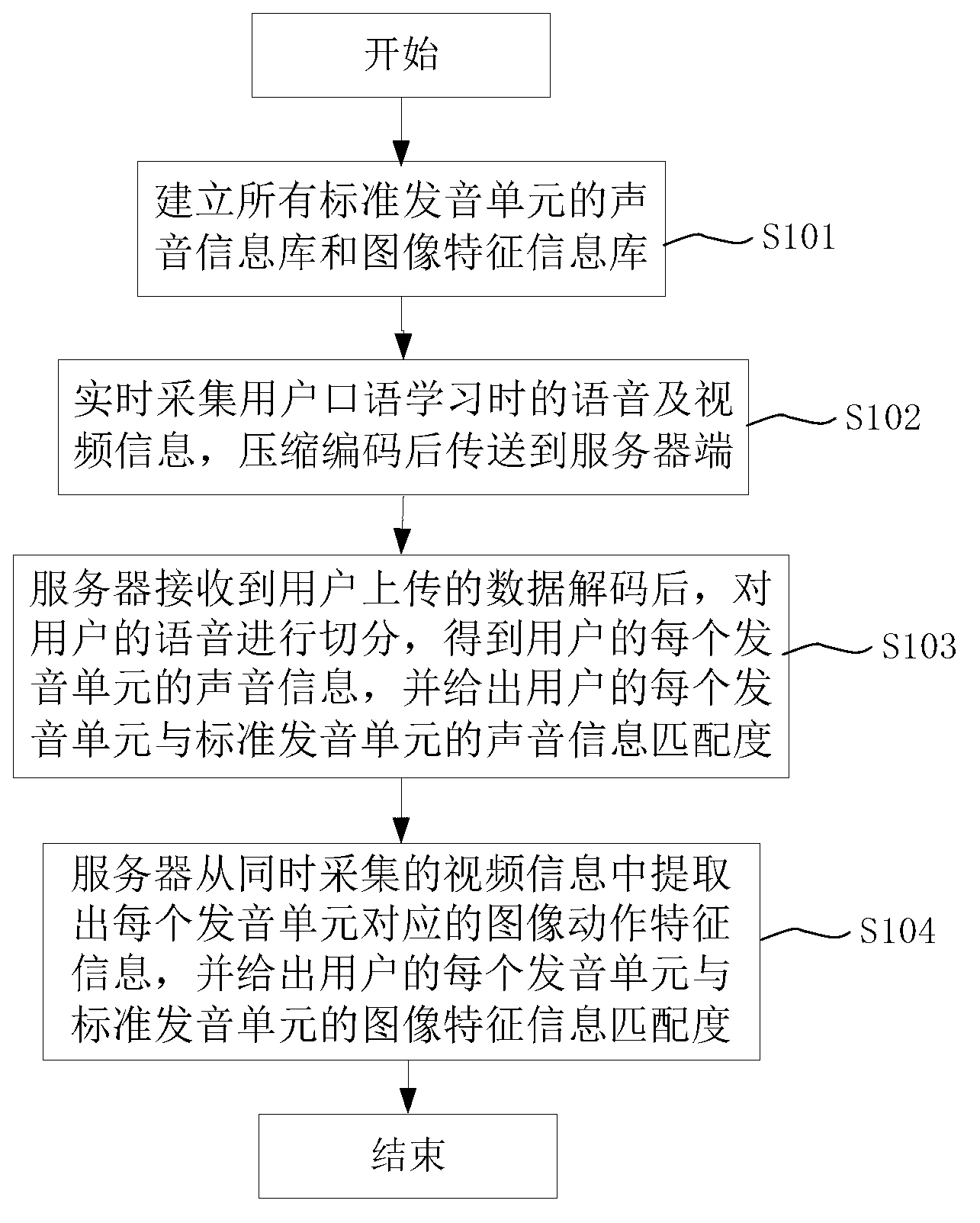

[0014] Figure 1 is a schematic diagram of the spoken language learning monitoring process based on audio and video dual modes of the present invention.

[0015] Referring to Figure 1, the spoken language learning monitoring method based on audio and video dual modes provided by the present invention comprises the following steps:

[0016] S101: Establish a sound information base and an image feature information base for all standard pronunciation units, for example using Chinese phoneme units or finer sub-phoneme units as the standard pronunciation units. The standard pronunciation models are trained on a database that covers different age groups and genders, contains pronunciation image information for all standard pronunciation units, and includes standard pronunciation annotations. The hidden Markov model is selected for the acoustic information bas...
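To illustrate how a hidden Markov model could be used to match an utterance against stored standard pronunciation units, the following is a minimal sketch and not the method of the invention itself. It assumes discrete HMMs over quantized acoustic symbols and scores an observation sequence with the forward algorithm; the unit names ("a", "i"), all model parameters, and the function names `log_forward` and `best_unit` are hypothetical and chosen only for illustration.

```python
import math


def _log(p):
    # Safe logarithm: probability 0 maps to -inf instead of raising.
    return math.log(p) if p > 0 else float("-inf")


def _logsumexp(xs):
    # Numerically stable log(sum(exp(x) for x in xs)).
    m = max(xs)
    if m == float("-inf"):
        return m
    return m + math.log(sum(math.exp(x - m) for x in xs))


def log_forward(obs, model):
    """Return log P(obs | model) for a discrete HMM (forward algorithm)."""
    start, trans, emit = model["start"], model["trans"], model["emit"]
    n = len(start)
    # alpha[i] = log P(o_1..o_t, state_t = i)
    alpha = [_log(start[i]) + _log(emit[i][obs[0]]) for i in range(n)]
    for o in obs[1:]:
        alpha = [
            _logsumexp([alpha[j] + _log(trans[j][i]) for j in range(n)])
            + _log(emit[i][o])
            for i in range(n)
        ]
    return _logsumexp(alpha)


def best_unit(obs, models):
    """Return the name of the pronunciation unit whose HMM scores obs highest."""
    return max(models, key=lambda name: log_forward(obs, models[name]))


# Hypothetical toy models: two-state left-to-right HMMs over 3 quantized symbols.
MODELS = {
    "a": {
        "start": [1.0, 0.0],
        "trans": [[0.6, 0.4], [0.0, 1.0]],
        "emit": [[0.7, 0.2, 0.1], [0.1, 0.2, 0.7]],
    },
    "i": {
        "start": [1.0, 0.0],
        "trans": [[0.6, 0.4], [0.0, 1.0]],
        "emit": [[0.1, 0.2, 0.7], [0.7, 0.2, 0.1]],
    },
}
```

Calling `best_unit([0, 0, 2, 2], MODELS)` selects the unit whose model assigns the sequence the highest likelihood. A practical system would more likely use continuous-density models over acoustic feature vectors; the discrete form is used here only to keep the sketch short.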

