Thread-aware multi-core data prefetch self-tuning method

A thread-aware data prefetching technology, applied to performance optimization of multi-core storage systems, that addresses problems affecting private cache hits, improving the hit rate and reducing contention.

Embodiment 1

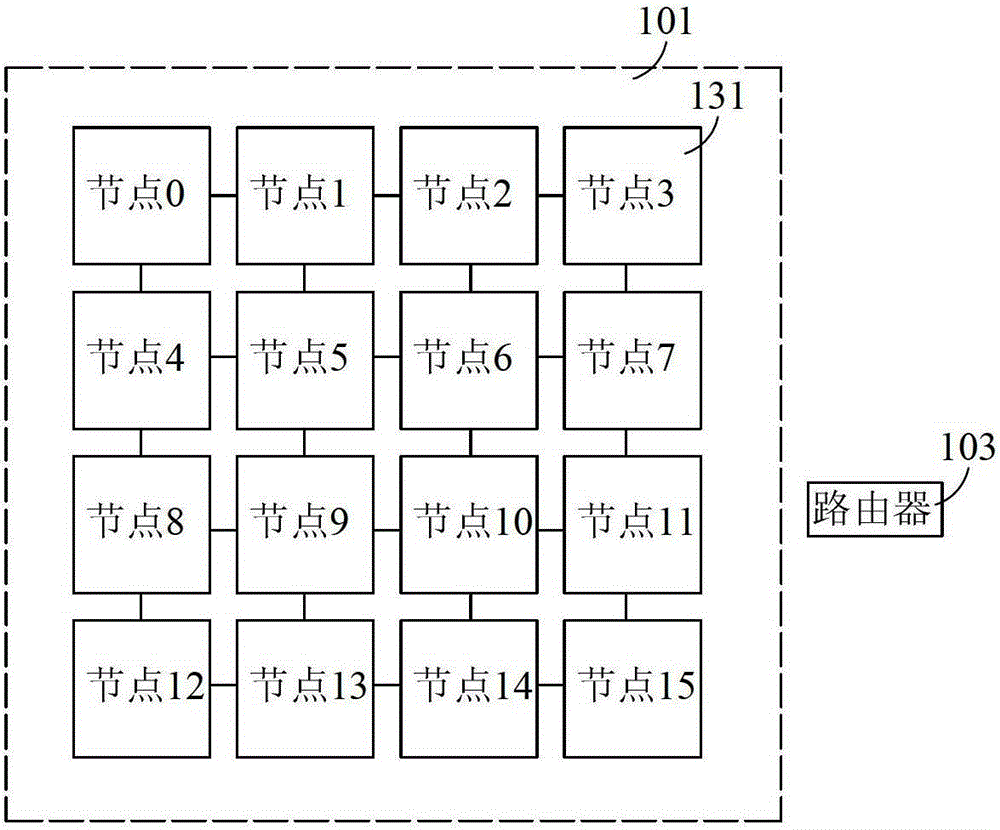

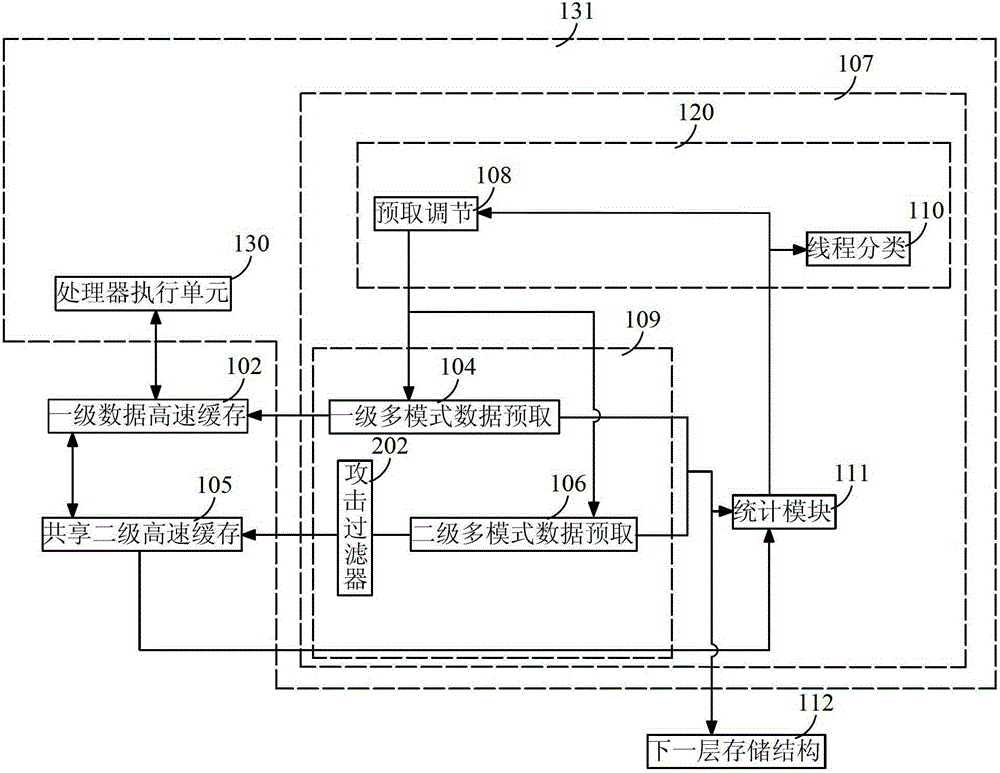

[0021] Embodiment 1: Figures 1 and 2 together illustrate a thread-aware multi-core data prefetch self-tuning method, which is carried out on a thread-aware multi-core data prefetching device. As shown in Figure 1, the device includes multiple (at least two) processors 101 and routers 103; the processors 101 are connected to one another through an on-chip interconnection network.

[0022] As shown in Figure 2, each processor 101 includes several nodes 131 (i.e., tiles), several L1 caches (the level-1 data caches 102 in Figure 2), and one L2 cache (the shared level-2 cache 105 in Figure 2). Nodes 131 and L1 caches are in one-to-one correspondence; that is, each node 131 has its own private level-1 data cache 102. All nodes 131 share the L2 cache (the shared level-2 cache 105, assumed here to be the on-chip last-level cache, LLC), and several nodes 131 (Tile), sever...
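The cache hierarchy described in paragraphs [0021] and [0022] can be modeled roughly as follows. This is only an illustrative sketch of the topology, not the patented method: the class and function names (`L1DataCache`, `SharedL2Cache`, `Node`, `Processor`, `build_processor`, `build_device`) are hypothetical, the node count per processor is an arbitrary assumption, and the routers 103 and the on-chip interconnection network are left out.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class L1DataCache:
    """Private level-1 data cache (102); owned by exactly one node."""
    owner_node_id: int


@dataclass
class SharedL2Cache:
    """Shared level-2 cache (105), assumed to be the on-chip LLC."""
    sharer_node_ids: List[int] = field(default_factory=list)


@dataclass
class Node:
    """One node 131 (tile) together with its private L1 data cache."""
    node_id: int
    l1: L1DataCache


@dataclass
class Processor:
    """Processor 101: several nodes, one private L1 each, one shared L2."""
    nodes: List[Node]
    l2: SharedL2Cache


def build_processor(num_nodes: int) -> Processor:
    # One-to-one relationship: node i owns L1 cache i.
    nodes = [Node(i, L1DataCache(owner_node_id=i)) for i in range(num_nodes)]
    # Every node in the processor shares the same L2 cache.
    l2 = SharedL2Cache(sharer_node_ids=[n.node_id for n in nodes])
    return Processor(nodes=nodes, l2=l2)


def build_device(num_processors: int, nodes_per_processor: int) -> List[Processor]:
    # The text requires multiple (at least two) processors.
    if num_processors < 2:
        raise ValueError("the device includes at least two processors")
    return [build_processor(nodes_per_processor) for _ in range(num_processors)]
```

For example, `build_device(2, 4)` yields two processors, each with four nodes that own a private L1 data cache and share one L2 cache.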
