Multi-view depth video fast coding method

A fast coding technology for depth video, applied in TV, electrical components, stereo systems, etc., that can address problems such as fewer texture details

Embodiment Construction

[0040] The present invention will be further described in detail below with reference to the embodiments of the accompanying drawings.

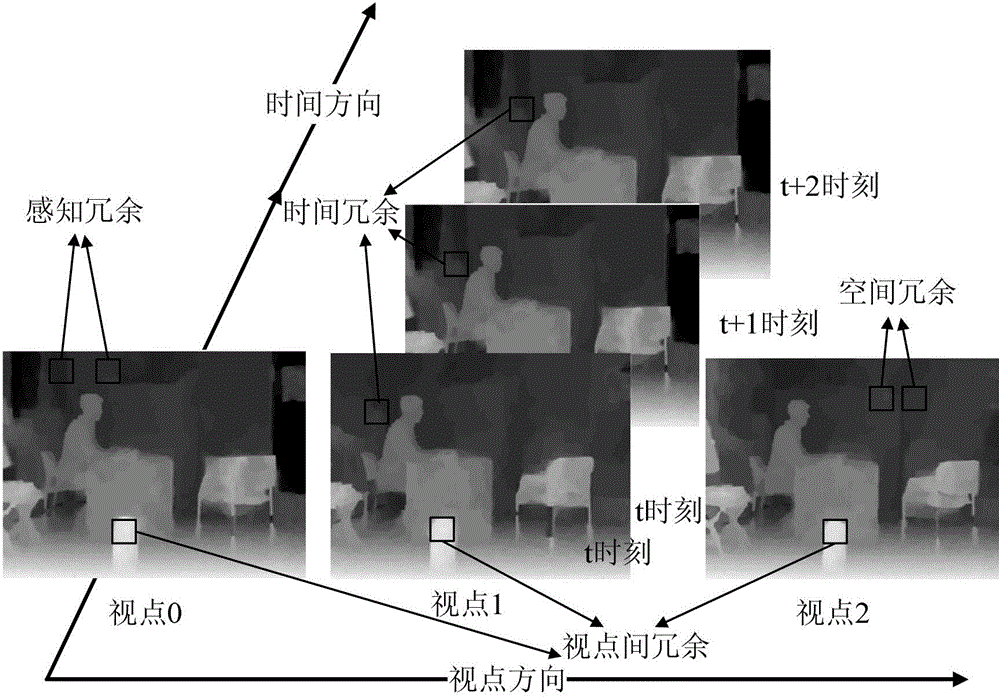

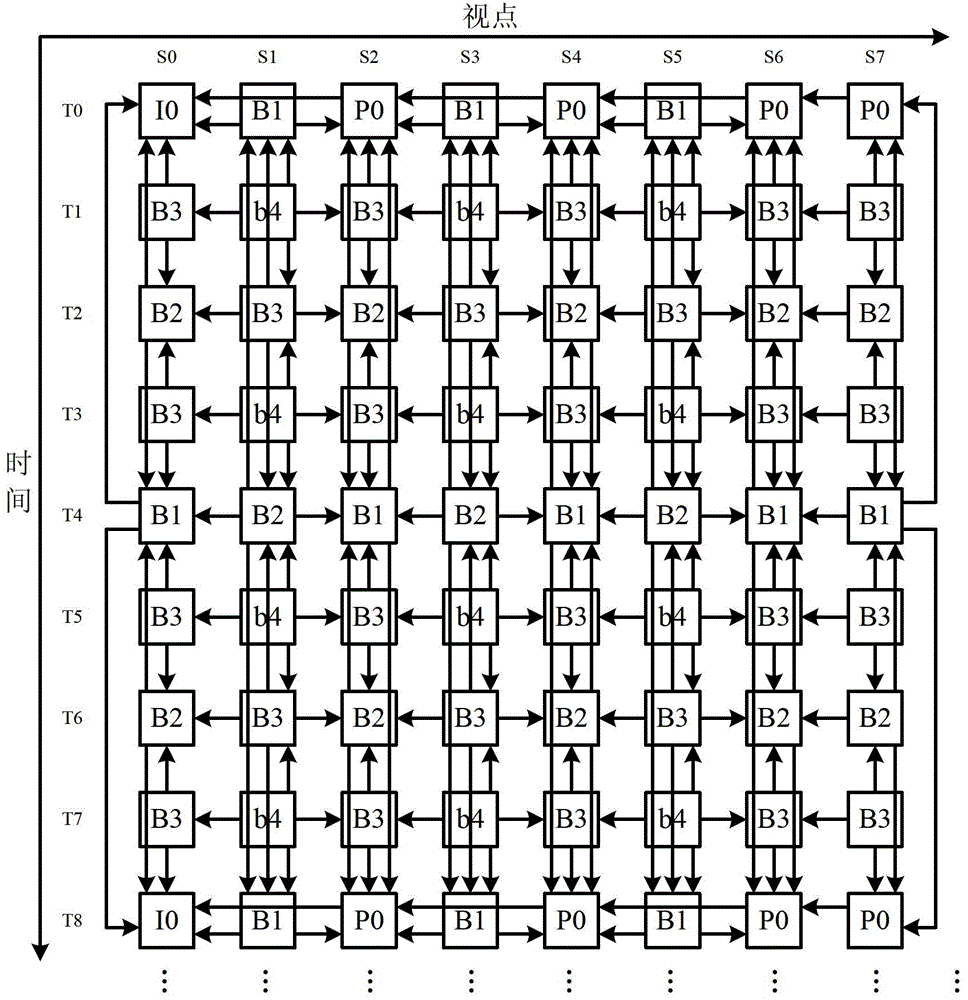

[0041] The present invention proposes a multi-view depth video fast coding method. Starting from the spatial content correlation and temporal correlation of depth video, and from the correlation between the coding modes of adjacent macroblocks, it defines a coding mode complexity factor for each macroblock. According to this factor, the depth video is divided into a simple mode area and a complex mode area, and a different fast coding mode selection method is used for each area. In the complex mode area, a relatively fine and complex search process is performed.
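The region split described in [0041] can be illustrated with a minimal sketch. This excerpt does not give the actual definition of the coding mode complexity factor, so everything specific below is an assumption made for illustration only: the mode labels, the per-mode weights in `MODE_WEIGHT`, the averaging rule in `mode_complexity_factor`, the threshold value, and the reduced candidate list for the simple mode area are all hypothetical and are not taken from the patent.

```python
from typing import Dict, List, Optional

# Hypothetical per-mode complexity weights (NOT from the patent):
# larger values stand for finer partitioning of the macroblock.
MODE_WEIGHT: Dict[str, int] = {
    "SKIP": 0,
    "INTER_16x16": 1,
    "INTER_16x8": 2,
    "INTER_8x16": 2,
    "INTER_8x8": 3,
    "INTRA": 3,
}

# Assumed candidate sets: a short list for the simple mode area,
# the full list for the complex mode area.
SIMPLE_CANDIDATES: List[str] = ["SKIP", "INTER_16x16"]
ALL_CANDIDATES: List[str] = list(MODE_WEIGHT)


def mode_complexity_factor(neighbor_modes: List[Optional[str]]) -> float:
    """Average weight of the already-coded neighbor modes (assumed rule).

    `neighbor_modes` holds the modes of spatially adjacent macroblocks and
    of the co-located macroblock in the previous frame; None marks a
    neighbor that is unavailable (e.g. at a frame border).
    """
    weights = [MODE_WEIGHT[m] for m in neighbor_modes if m is not None]
    if not weights:
        # No context available: fall back to the full search.
        return float("inf")
    return sum(weights) / len(weights)


def candidate_modes(neighbor_modes: List[Optional[str]],
                    threshold: float = 1.0) -> List[str]:
    """Return the modes to search for the current macroblock.

    A factor at or below the (hypothetical) threshold puts the macroblock
    in the simple mode area; otherwise it is in the complex mode area.
    """
    if mode_complexity_factor(neighbor_modes) <= threshold:
        return SIMPLE_CANDIDATES
    return ALL_CANDIDATES


if __name__ == "__main__":
    # Flat depth region: neighbors were coded with coarse modes.
    print(candidate_modes(["SKIP", "SKIP", "INTER_16x16"]))
    # Object boundary: neighbors needed fine partitions.
    print(candidate_modes(["INTER_8x8", "INTRA", "SKIP"]))
```

The point of the sketch is only the control flow: a cheap per-macroblock measure derived from already-coded neighbors decides whether the encoder runs a short or an exhaustive mode search.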

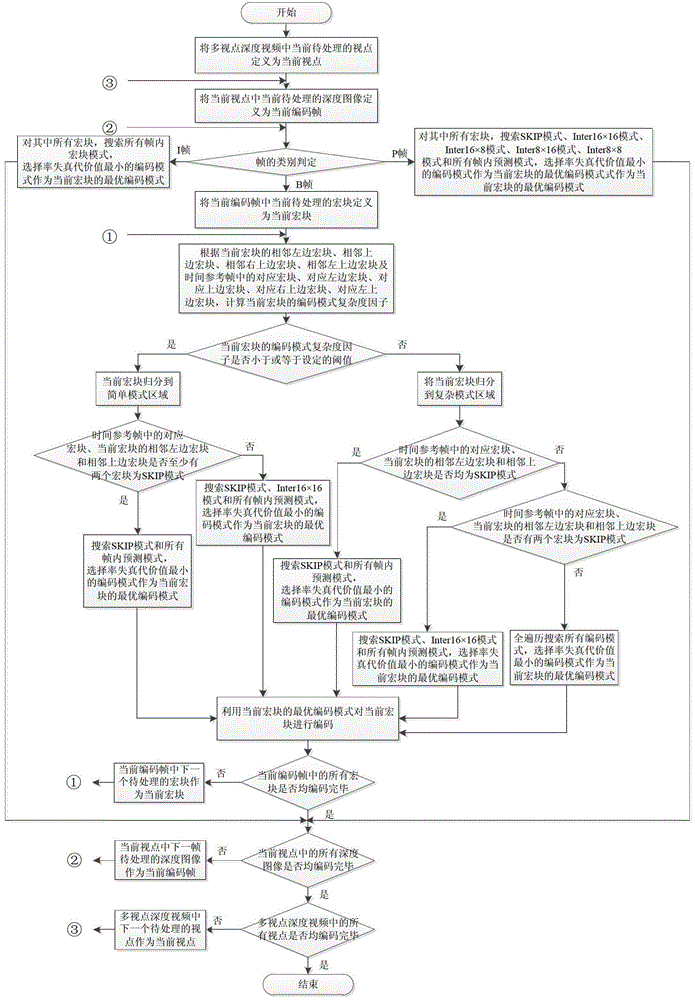

[0042] The overall implementation block diagram of the multi-view depth video fast coding method of the present invention is shown in image 3. The method specifically includes the following steps:

[0043] ① Define the current viewpoint to be encoded in the multi-view depth ...
