Human Action Recognition Method Based on Low-rank Representation

A human action recognition method based on low-rank representation, applied in the fields of computer vision and machine learning. It addresses shortcomings of existing methods, which consider only sparsity, ignore the overall structure of the data, and achieve a low recognition rate.

Embodiment Construction

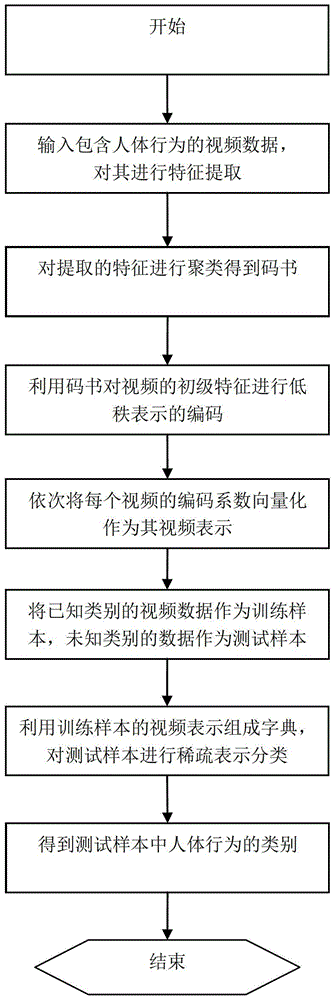

[0043] Referring to Figure 1, the present invention mainly comprises two parts: video representation and video classification. The implementation steps of these two parts are described below:

[0044] 1. Video representation

[0045] Step 1: input all videos, each containing only one kind of human behavior, and use the Cuboid detector and the Cuboid descriptor to detect and describe, respectively, the local features of the behavior in each video.

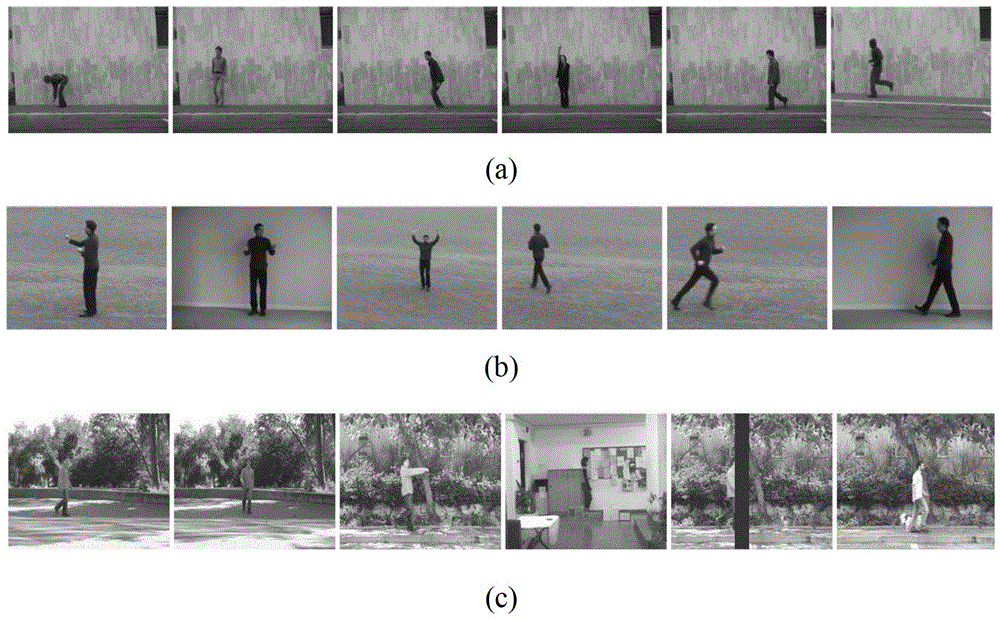

[0046] Behaviors in the videos are human actions such as walking, running, jumping, and boxing. All videos are performed by several actors, each of whom completes all actions in turn. Each video contains only one behavior by one actor.

[0047] The Cuboid detector detects local features of the video as follows: divide the video into local blocks of equal size, and calculate the response function value R of each pixel in a local block:

[0048] $R = (I * g * h_{ev})^2 + (I * g * h_{od})^2$,

[0049] Where: I represents the gray...
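The response function in [0048] can be illustrated with a minimal NumPy sketch. The text is cut off before g, h_ev and h_od are defined, so the forms used here are assumptions: g is taken to be a 2D spatial Gaussian smoothing kernel (applied separably), and h_ev, h_od a quadrature pair of 1D temporal Gabor filters, as the Cuboid detector is commonly described. The function names and the values of `sigma`, `tau` and `omega` are hypothetical and chosen only for illustration. For simplicity the sketch computes R over the whole video volume rather than block by block.

```python
import numpy as np

def gaussian_kernel_1d(sigma):
    """1D Gaussian kernel normalized to sum to 1 (radius of about 3 sigma)."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()

def gabor_pair_1d(tau, omega):
    """Assumed quadrature pair of 1D temporal Gabor filters (h_ev, h_od)."""
    radius = max(1, int(np.ceil(2 * tau)))
    t = np.arange(-radius, radius + 1, dtype=float)
    envelope = np.exp(-t**2 / tau**2)
    h_ev = -np.cos(2 * np.pi * t * omega) * envelope
    h_od = -np.sin(2 * np.pi * t * omega) * envelope
    return h_ev, h_od

def convolve_axis(volume, kernel, axis):
    """Convolve a 3D array with a 1D kernel along one axis, keeping the size.

    Assumes the array is at least as long as the kernel along that axis.
    """
    return np.apply_along_axis(
        lambda v: np.convolve(v, kernel, mode="same"), axis, volume
    )

def cuboid_response(video, sigma=2.0, tau=2.5, omega=0.25):
    """Compute R = (I*g*h_ev)^2 + (I*g*h_od)^2 for every pixel.

    video: float array of shape (T, H, W) holding gray values I.
    sigma, tau, omega: illustrative values, not taken from the source.
    """
    g = gaussian_kernel_1d(sigma)
    # Spatial smoothing I*g, applied separably along height and width.
    smoothed = convolve_axis(convolve_axis(video, g, axis=1), g, axis=2)
    # Temporal filtering with the even and odd Gabor filters.
    h_ev, h_od = gabor_pair_1d(tau, omega)
    even = convolve_axis(smoothed, h_ev, axis=0)
    odd = convolve_axis(smoothed, h_od, axis=0)
    return even**2 + odd**2

# Usage: a single pixel flickering over time in an otherwise static video.
frames = np.arange(24)
video = np.zeros((24, 16, 16))
video[:, 8, 8] = np.sin(2 * np.pi * 0.25 * frames)
R = cuboid_response(video)
```

Under these assumptions, R is largest at the flickering pixel and zero for an all-zero video. Local maxima of R would then serve as candidate interest points around which local features are described.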
