Feedback-driven adjustment system for efficient parallel execution

A technology for running platforms and tasks, applied in concurrent instruction execution, machine execution devices, program control design, etc.

Embodiment Construction

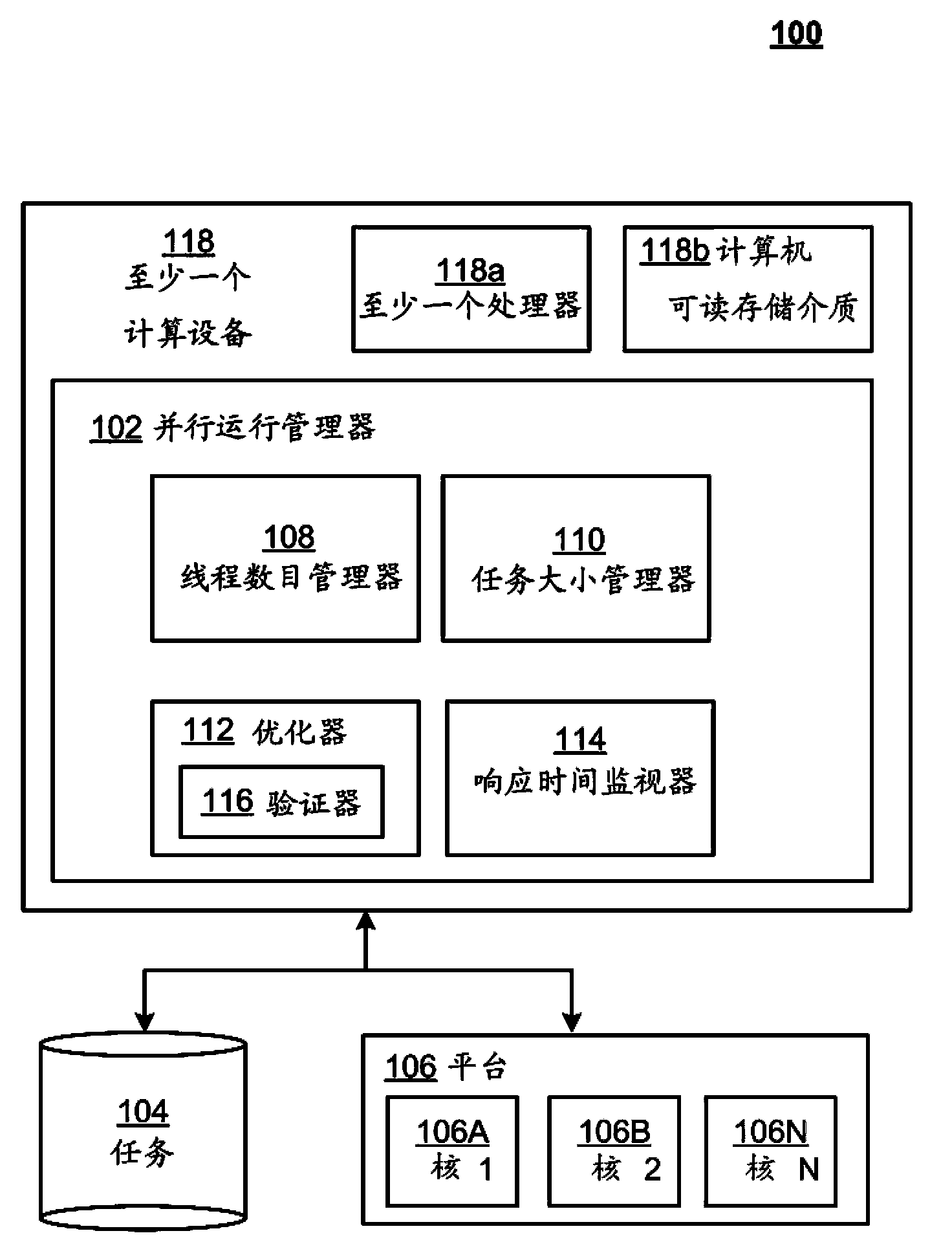

[0032] Figure 1 is a block diagram of a system 100 for feedback-driven regulation of parallel execution. In the example of Figure 1, parallel execution manager 102 may be configured to execute tasks 104 in parallel by utilizing platform 106. As shown and described, platform 106 is capable of running multiple parallel threads of execution, as illustrated in Figure 1 by processing cores 106A, 106B, . . . 106N. More specifically, as described in detail below, parallel execution manager 102 may be configured to actively manage how, and to what extent, tasks 104 are parallelized on platform 106 over time. In particular, parallel execution manager 102 may be configured to optimize parallelization in a manner that is generally agnostic to the type or nature of platform 106, without requiring extensive knowledge of platform 106 and of the associated manner and extent to which the parallelization parameters respond to the ...
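The excerpt is truncated before it describes how the feedback loop works, so the following is only a minimal sketch of the general idea, assuming a simple hill-climbing strategy: after each batch of tasks runs at some number of parallel threads, the measured throughput is fed back, and the degree of parallelism is nudged up or down based on that measurement alone, with no model of the platform. The names `FeedbackTuner` and `simulated_throughput`, and the toy throughput model that peaks at 8 threads, are hypothetical illustrations, not the method claimed in this patent.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeedbackTuner:
    """Hypothetical hill-climbing controller for the degree of parallelism.

    After a batch of tasks runs at ``level`` parallel threads, the caller
    reports the measured throughput; the tuner uses only that feedback
    (no knowledge of the platform) to choose the next level.
    """
    min_level: int = 1
    max_level: int = 64
    level: int = 1            # current number of parallel threads
    step: int = 1             # direction of the next adjustment (+1 or -1)
    best_level: int = 1       # level with the highest throughput seen so far
    best_throughput: float = float("-inf")
    _last: Optional[float] = None

    def update(self, throughput: float) -> int:
        """Record feedback for the current level and return the next level."""
        if throughput > self.best_throughput:
            self.best_throughput = throughput
            self.best_level = self.level
        # Performance dropped relative to the previous measurement:
        # the last move overshot, so reverse direction.
        if self._last is not None and throughput < self._last:
            self.step = -self.step
        self._last = throughput
        nxt = self.level + self.step
        if not self.min_level <= nxt <= self.max_level:
            self.step = -self.step  # bounce off the configured bounds
            nxt = self.level + self.step
        self.level = max(self.min_level, min(self.max_level, nxt))
        return self.level


def simulated_throughput(level: int) -> float:
    """Toy platform model (assumption): contention makes throughput peak at 8 threads."""
    return level / (1 + level * level / 64)


if __name__ == "__main__":
    tuner = FeedbackTuner(max_level=32)
    for _ in range(30):
        tuner.update(simulated_throughput(tuner.level))
    print(tuner.best_level, tuner.level)
```

Because the tuner keeps probing neighboring levels instead of locking in a value, it can follow a platform whose best thread count drifts over time, at the cost of small oscillations around the current optimum.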
