Language model training method, query method and corresponding device

A language model training method, applied in speech analysis, speech recognition, instruments, and related fields. It addresses problems such as large training corpora and slow training speed, which prevent the language model from being updated quickly and thereby affect the speech search system.

Examples

Embodiment 1

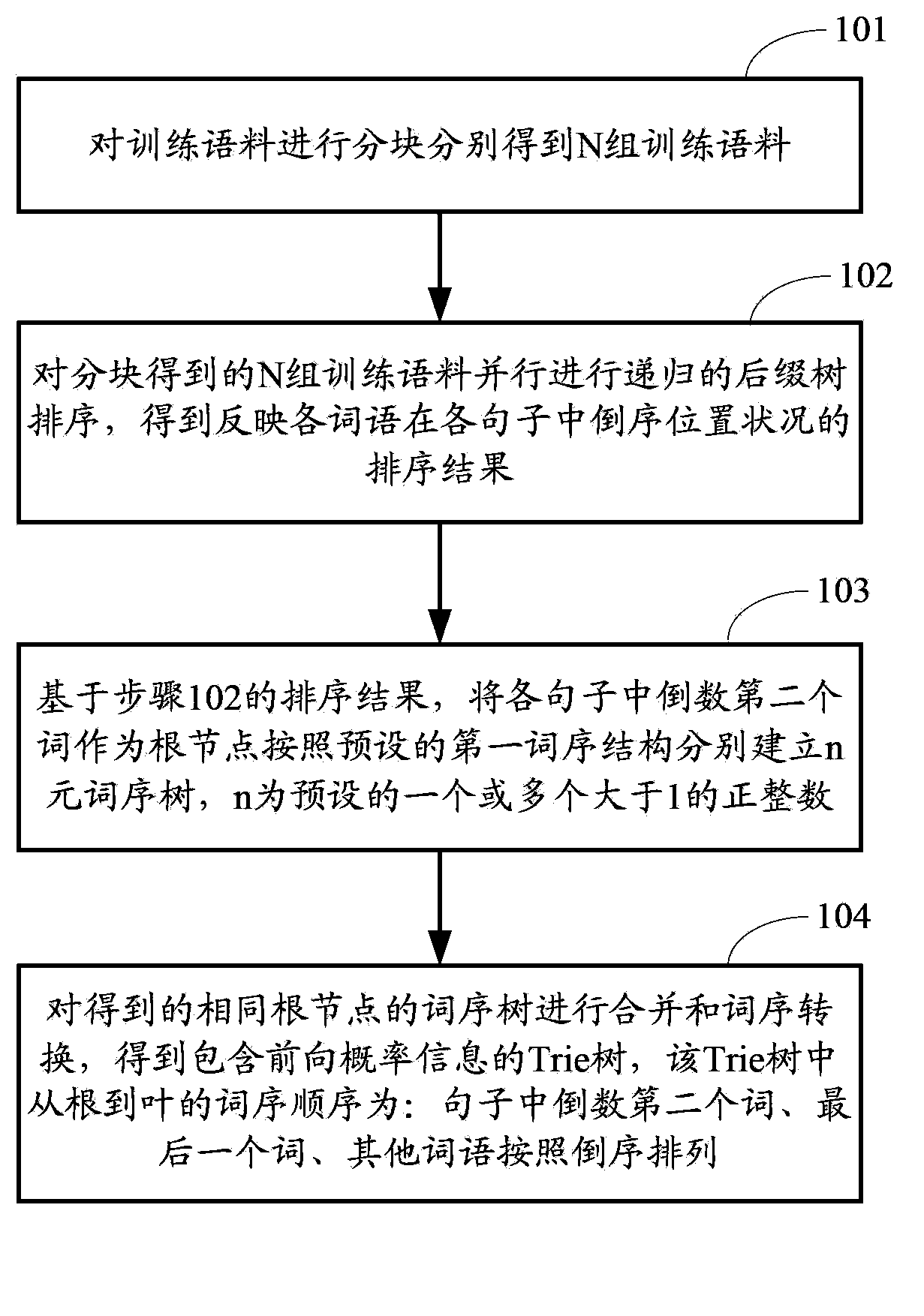

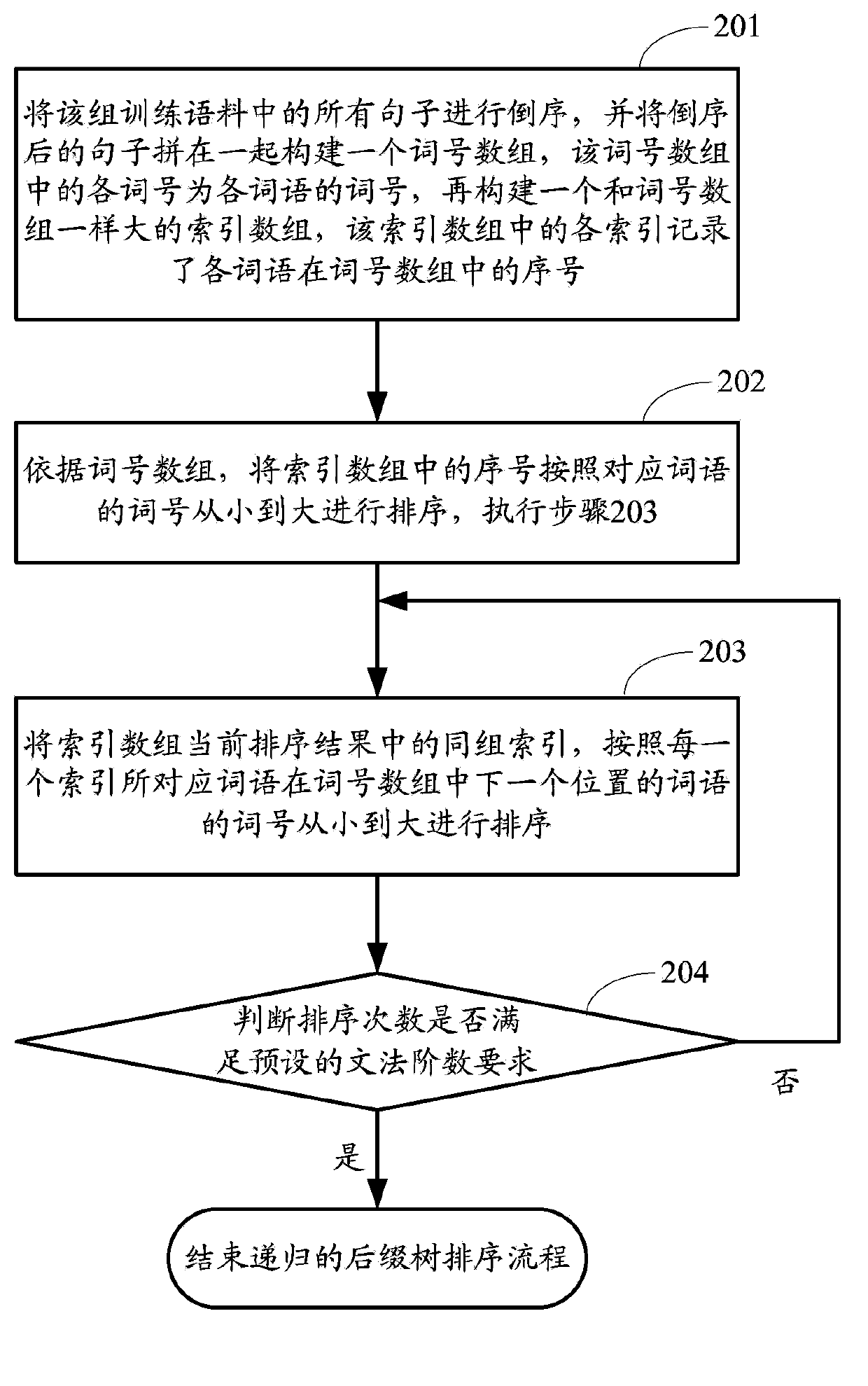

[0072] Figure 1 is a flow chart of the language model training method provided by Embodiment 1 of the present invention. As shown in Figure 1, the method includes the following steps:

[0073] Step 101: Divide the training corpus into blocks to obtain N sets of training corpus, where N is a positive integer greater than 1.

[0074] To improve the update speed of the language model, the embodiment of the present invention replaces the original serial processing of the training corpus with parallel processing. The training corpus is therefore first divided into blocks to obtain multiple sets of training corpus, so that the multiple sets can be processed in parallel.
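As a minimal sketch of Step 101, the following Python shows two possible ways to divide a corpus into N sets. The function names `split_round_robin` and `split_contiguous` are hypothetical, and treating the corpus as a list of sentences is an assumption; the source does not prescribe a particular division strategy.

```python
from typing import List, Sequence


def split_round_robin(corpus: Sequence[str], n: int) -> List[List[str]]:
    """Deal sentences out to n sets in turn (one possible strategy, assumed here)."""
    if n <= 1:
        raise ValueError("N must be an integer greater than 1")
    groups: List[List[str]] = [[] for _ in range(n)]
    for i, sentence in enumerate(corpus):
        groups[i % n].append(sentence)
    return groups


def split_contiguous(corpus: Sequence[str], n: int) -> List[List[str]]:
    """Cut the corpus into n contiguous slices of near-equal size (another assumed strategy)."""
    if n <= 1:
        raise ValueError("N must be an integer greater than 1")
    size, extra = divmod(len(corpus), n)
    groups: List[List[str]] = []
    start = 0
    for i in range(n):
        # The first `extra` slices take one additional sentence each.
        end = start + size + (1 if i < extra else 0)
        groups.append(list(corpus[start:end]))
        start = end
    return groups
```

Either function yields N sets that independent workers could then process in parallel. Round-robin tends to balance set sizes when the corpus arrives as a stream, while contiguous slices keep neighboring sentences together.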

[0075] Here, the division of the training corpus can be performed according to any strategy, as long as the training corpus can be divided into N groups. In addition, the training corpus used in this step can be the user input information of all time periods in the search text duri...

Embodiment 2

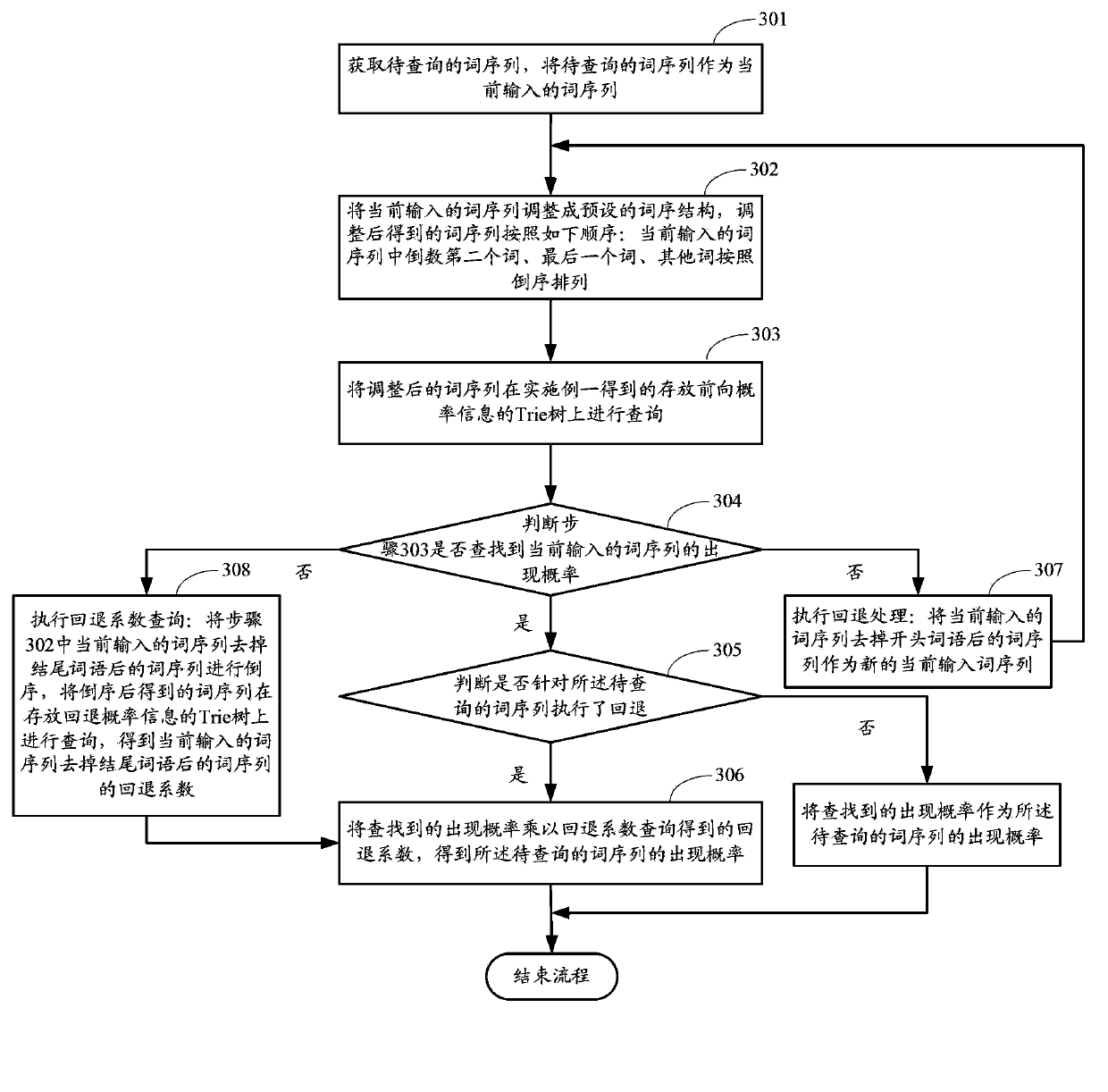

[0119] Figure 3 is a flow chart of the language model query method provided by Embodiment 2 of the present invention. As shown in Figure 3, the query method includes the following steps:

[0120] Step 301: Obtain the word sequence to be queried, and execute step 302 with the word sequence to be queried as the currently input word sequence.

[0121] Step 302: Adjust the currently input word sequence to a preset word order structure. The adjusted word sequence is ordered as follows: the penultimate word, then the last word, then the remaining words of the currently input word sequence in reverse order.

[0122] The adjustment in this step makes the input word sequence match the word order structure of the Trie tree that stores the probability information.
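The reordering in Step 302 can be illustrated with a short Python sketch. It follows one reading of the step: the penultimate word first, the last word second, then the remaining words from right to left. The function name `adjust_word_order` is hypothetical, and returning sequences shorter than two words unchanged is an assumption, since the source does not cover that case.

```python
from typing import List, Sequence


def adjust_word_order(words: Sequence[str]) -> List[str]:
    """Reorder w1..wn as w(n-1), wn, w(n-2), ..., w1 (one reading of Step 302)."""
    if len(words) < 2:
        # Assumption: nothing to reorder for empty or single-word input.
        return list(words)
    # Penultimate word, last word, then everything before them in reverse.
    return [words[-2], words[-1]] + list(reversed(words[:-2]))
```

For example, `adjust_word_order(["w1", "w2", "w3", "w4"])` returns `["w3", "w4", "w2", "w1"]`.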

[0123] Step 303: Query the adjusted word sequence on the Trie tree, obtained in Embodiment 1, that stores the forward probability information.
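A lookup of the kind described in Step 303 might be sketched as follows. The class names `TrieNode` and `ProbTrie`, the dictionary-based node layout, and the use of a single float per node for the stored probability are all assumptions for illustration; the source does not specify how the Trie tree is laid out in memory. The sketch expects a word sequence that has already been adjusted as in Step 302, and it returns `None` on a miss without modeling what the method does next.

```python
from typing import Dict, Optional, Sequence


class TrieNode:
    def __init__(self) -> None:
        self.children: Dict[str, "TrieNode"] = {}
        # Hypothetical payload: a probability stored for the path ending here.
        self.prob: Optional[float] = None


class ProbTrie:
    def __init__(self) -> None:
        self.root = TrieNode()

    def insert(self, adjusted_words: Sequence[str], prob: float) -> None:
        """Store a probability under an already adjusted word sequence."""
        node = self.root
        for word in adjusted_words:
            node = node.children.setdefault(word, TrieNode())
        node.prob = prob

    def lookup(self, adjusted_words: Sequence[str]) -> Optional[float]:
        """Walk the trie word by word; return None if the path or value is absent."""
        node = self.root
        for word in adjusted_words:
            child = node.children.get(word)
            if child is None:
                return None
            node = child
        return node.prob
```

A brief usage example: after `trie.insert(["w3", "w4", "w2", "w1"], -1.5)`, the call `trie.lookup(["w3", "w4", "w2", "w1"])` returns `-1.5`, while a sequence with no stored value returns `None`.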

[0124] Step 304: Determine whet...

Embodiment 3

[0137] Figure 4 is a structural diagram of the language model training device provided by Embodiment 3 of the present invention. As shown in Figure 4, the training device includes: a block processing unit 400, N recursive processing units 410, N word order tree building units 420, and a merge processing unit 430, where N is a positive integer greater than 1.

[0138] The block processing unit 400 divides the training corpus into blocks to obtain N sets of training corpus, and provides the N sets to the N recursive processing units 410, one set per unit.

[0139] In the embodiment of the present invention, the original serial processing of the training corpus is replaced with parallel processing, so the block processing unit 400 first divides the training corpus to obtain multiple sets of training corpus, so that the multiple sets can subsequently be processed in parallel. The training corpus used by the block pr...
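The data flow between the units can be sketched in Python under strong assumptions. Here `block_unit` stands in for the block processing unit 400, `count_ngrams` is a placeholder for whatever each recursive processing unit 410 computes (plain n-gram counting is assumed, not taken from the source), and `merge_unit` stands in for the merge processing unit 430 by summing the partial results. The word order tree building units 420 are omitted. All function names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def block_unit(corpus: Sequence[str], n: int) -> List[List[str]]:
    """Stand-in for unit 400: split the corpus into n sets (round-robin, assumed)."""
    return [list(corpus[i::n]) for i in range(n)]


def count_ngrams(group: Sequence[str], order: int = 2) -> Counter:
    """Placeholder for one unit 410: count n-grams up to `order` (an assumption)."""
    counts: Counter = Counter()
    for sentence in group:
        words = sentence.split()
        for k in range(1, order + 1):
            for i in range(len(words) - k + 1):
                counts[tuple(words[i:i + k])] += 1
    return counts


def merge_unit(partials: Sequence[Counter]) -> Counter:
    """Stand-in for unit 430: combine per-set results by summing counts (assumed)."""
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return total


def train(corpus: Sequence[str], n: int = 2) -> Counter:
    groups = block_unit(corpus, n)
    # One worker per set. Threads keep the sketch simple; CPU-bound work in
    # CPython would more likely use separate processes or machines.
    with ThreadPoolExecutor(max_workers=n) as pool:
        partials = list(pool.map(count_ngrams, groups))
    return merge_unit(partials)
```

The point of the sketch is the shape of the pipeline: the per-set work shares no state, so the N workers can run independently and only the final merge needs to see all results.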
