Cache management method and device

A cache management technology, applied in the computer field, that can solve problems such as cache pollution.

Examples

Embodiment 1

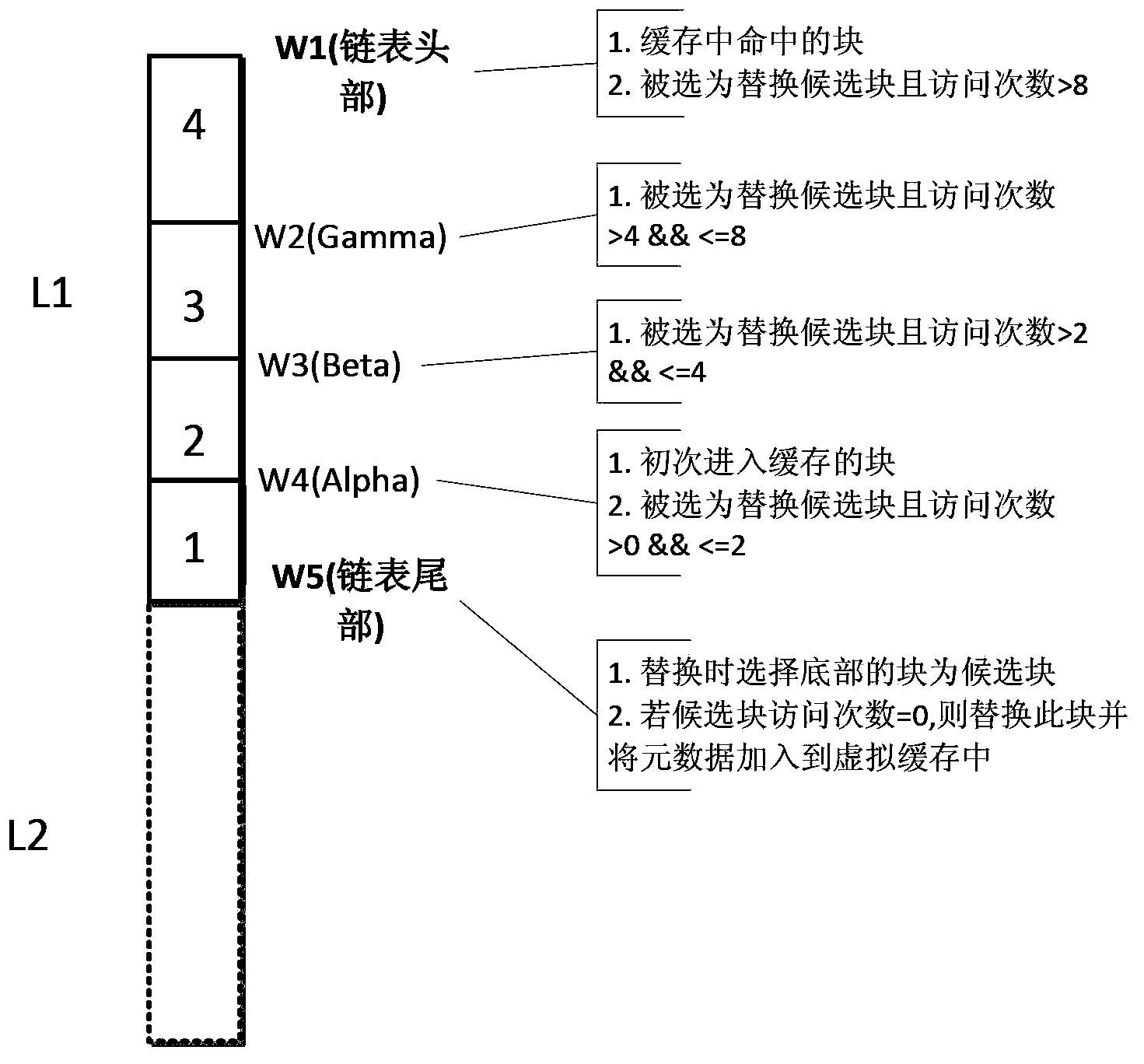

[0110] An embodiment of the present invention provides a cache management method. The cache is divided into two parts: a physical cache (Solid Cache) and a virtual cache (Phantom Cache). As shown in the schematic cache diagram in Figure 3, the physical cache is maintained by the linked list L1 and the virtual cache is maintained by the linked list L2. Both the metadata and the data of a page are stored in the physical cache, whereas only the metadata is stored in the virtual cache. Note that because the virtual cache stores only metadata, and the metadata records only the access information of a page, a requested page that hits the linked list L2 is not a real cache hit.
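The two-part layout described above can be illustrated with a minimal Python sketch. It is not the patented implementation: the class name `TwoPartCache`, the `PageMeta` fields, and the three return labels are all assumptions made for illustration, and `OrderedDict` stands in for the linked lists L1 and L2.

```python
from collections import OrderedDict
from dataclasses import dataclass


@dataclass
class PageMeta:
    """Access information for one page (field names are hypothetical)."""
    page_id: int
    access_count: int = 0


class TwoPartCache:
    """L1 (physical) holds metadata and data; L2 (virtual) holds metadata only."""

    def __init__(self):
        self.l1 = OrderedDict()  # page_id -> (PageMeta, data)
        self.l2 = OrderedDict()  # page_id -> PageMeta, no data stored

    def lookup(self, page_id):
        """Return (kind, data), where kind is 'hit', 'phantom', or 'miss'."""
        if page_id in self.l1:
            meta, data = self.l1[page_id]
            meta.access_count += 1
            return "hit", data
        if page_id in self.l2:
            # Only access information is known here, so this is not a
            # real cache hit: the data must still be fetched elsewhere.
            self.l2[page_id].access_count += 1
            return "phantom", None
        return "miss", None
```

In this sketch a lookup that lands in `l2` updates the page's access information but returns no data, which mirrors the statement that a hit in L2 is not a real cache hit.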

[0111] In this embodiment of the present invention, the physical cache linked list L1 can be divided into more than one segment. Preferably, the linked list L1 is divided into 4 segments, and the number of pages stored in each segment can differ (for the co...

Embodiment 2

[0125] This embodiment of the present invention provides a cache management method. The method is similar to that of the first embodiment and uses the same strategy for deleting pages from the physical cache: the scheme of the first embodiment is applied, so that the data of the page that finally meets the condition is deleted. Building on that strategy, this embodiment adds a scheme for placing a newly requested page into the space freed by the deleted page. As shown in Figure 5, the physical cache is managed and maintained through the linked list L1, which is divided into more than one segment. The division of each segment is fixed, meaning that each segment has a definite amount of storage space, but when a replacement candidate page needs to be added, the requirements of the segment must be met; the approa...

Embodiment 3

[0147] This embodiment of the present invention provides a cache management method based on the same inventive concept as the first and second embodiments. The difference is that the first and second embodiments maintain the physical cache through a single linked list L1, whereas in this third embodiment the physical cache is maintained through multiple linked lists. The number of linked lists can equal the number of segments of the linked list described in the above embodiments, for example 4, matching the example in which the linked list L1 is divided into 4 segments. This number of linked lists is only an example for ease of understanding and does not limit the embodiment of the present invention.

[0148] As shown in the cache diagram in Figure 6, four linked lists L1 to L4 maintain the physical cache and the linked list L0 maintains the virtual cache. The cache inside the dotted line can be understood as the virtual cache. The virtual cache can be used as a preferr...
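The multi-list layout of the third embodiment can be sketched in Python as follows. This is only an illustration under stated assumptions: the helper names `make_lists` and `is_real_hit` are hypothetical, `deque` stands in for each linked list, and the rule that only the physical lists count as real hits is carried over from the first embodiment rather than stated for Figure 6. How pages move between the lists is not shown, because the source text is truncated at that point.

```python
from collections import deque

NUM_PHYSICAL_LISTS = 4  # example value from the text, not a limitation


def make_lists(num_physical=NUM_PHYSICAL_LISTS):
    """Build L0 (virtual cache) plus L1..Ln (physical cache), all empty."""
    lists = {"L0": deque()}
    for i in range(1, num_physical + 1):
        lists[f"L{i}"] = deque()
    return lists


def is_real_hit(lists, page_id):
    """True only if the page sits in a physical list (any list except L0)."""
    return any(
        page_id in pages
        for name, pages in lists.items()
        if name != "L0"
    )
```

For example, after `lists = make_lists()`, appending a page id to `lists["L0"]` leaves `is_real_hit` false for that page, while appending it to `lists["L3"]` makes it true.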
