A load balancing method and system based on double-layer cache

A load balancing and caching technology, applied in transmission systems, digital transmission systems, data exchange networks, and similar fields. It can solve problems such as resource waste and increased, unbearable costs, and achieves the effects of reducing request pressure, reducing input costs, and reducing quantities.

Embodiment Construction

[0016] The present invention is described in detail below with reference to the drawings and embodiments. Note that, provided they do not conflict, the embodiments in the present application and the features in those embodiments can be combined with each other.

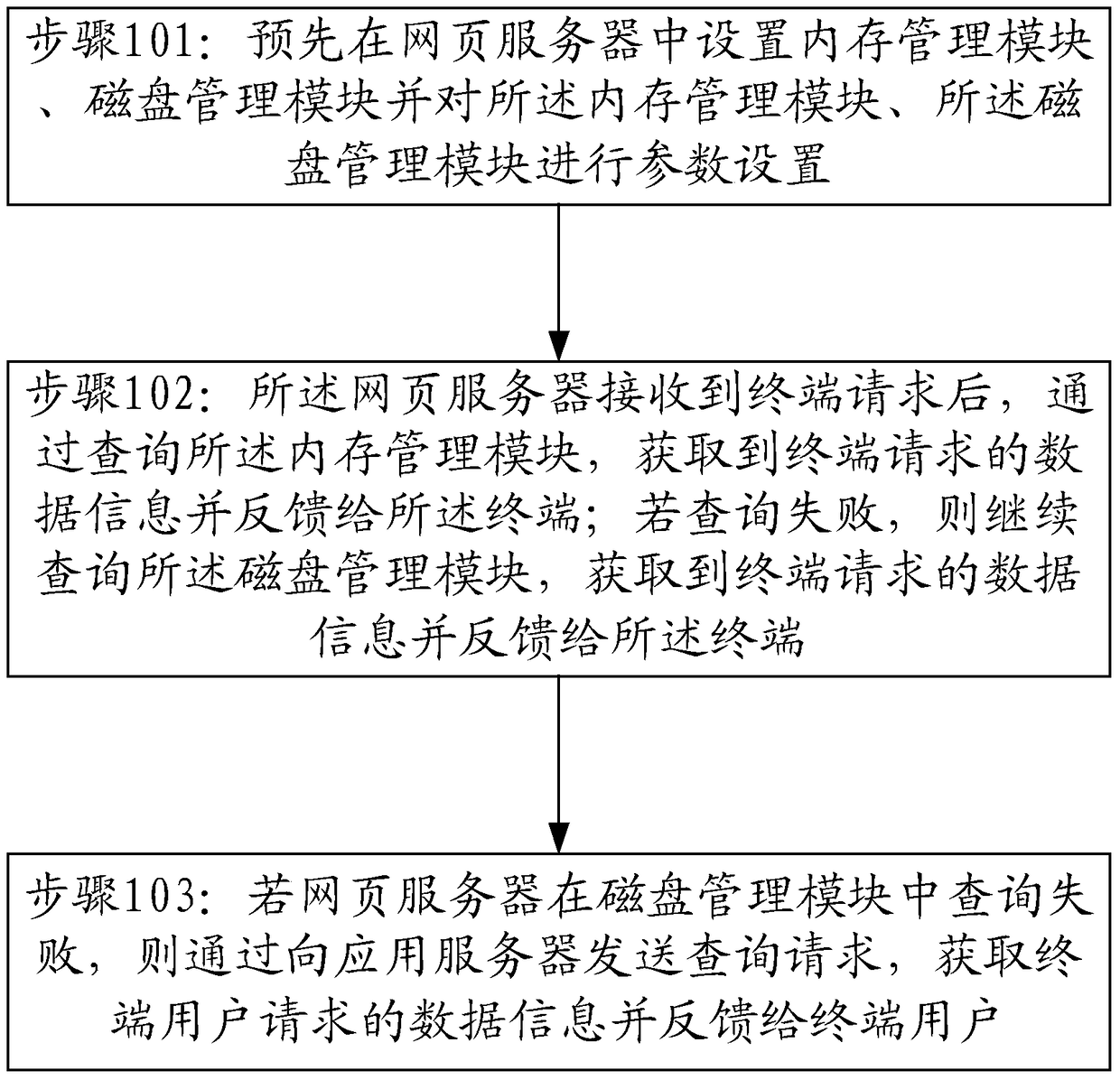

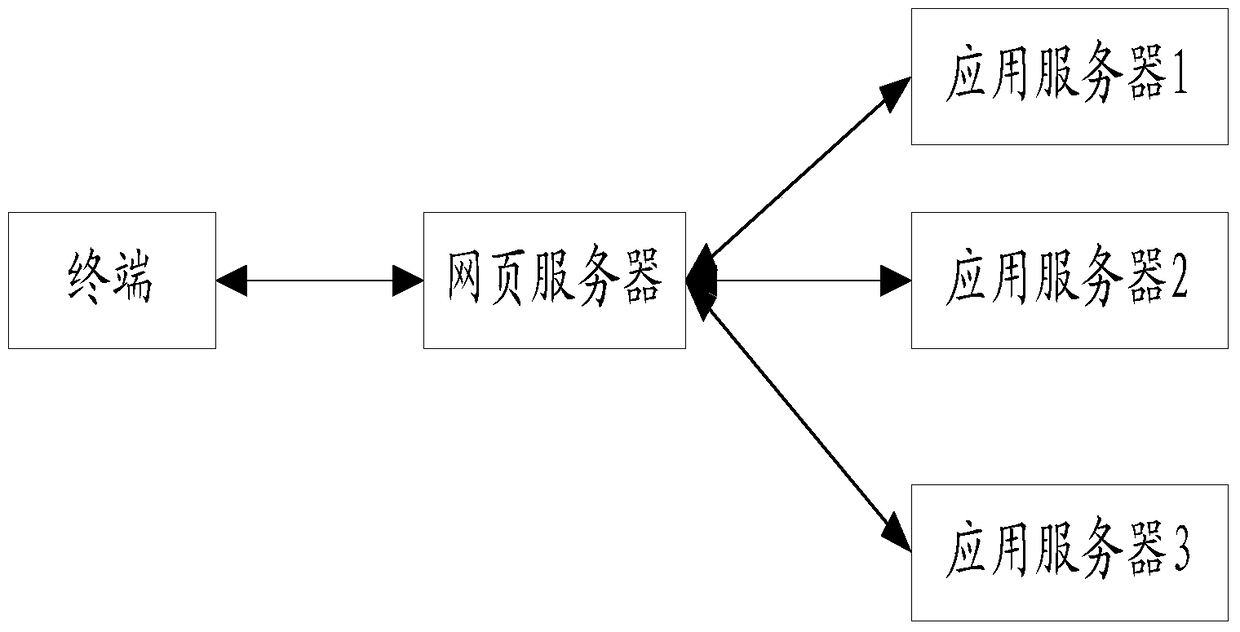

[0017] Figure 1 shows the flow chart of the load balancing method based on double-layer cache in Embodiment 1 of the present invention. The method includes the following steps:

[0018] Step 101: Preset a memory management module and a disk management module in the web server, and set parameters for both modules;

[0019] Set the following parameters for the memory management module:

[0020] 1. Set the connection timeout, send timeout, read timeout, and cache invalidation time;

[0021] 2. Set the access request mode so that the module accepts only internal access and does not directly receive external requests.

[0022] For example:

[0023] memc_connect_timeout...
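The memory management module parameters listed in [0019] to [0021] can be illustrated with a minimal Python sketch. It is not the configuration used in the patent: the class names `MemoryModuleConfig` and `MemoryModule`, the field names, and all default values are hypothetical. The sketch only shows how a cache invalidation time and an internal-only access mode might behave; the three timeouts are recorded but not exercised, because an in-process dictionary stands in for the real cache backend.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


@dataclass
class MemoryModuleConfig:
    """Hypothetical parameter set mirroring items 1 and 2 of the description."""
    connect_timeout_s: float = 0.1   # connection timeout (illustrative value)
    send_timeout_s: float = 0.1      # send timeout (illustrative value)
    read_timeout_s: float = 0.1      # read timeout (illustrative value)
    cache_ttl_s: float = 60.0        # cache invalidation time (illustrative value)
    internal_only: bool = True       # refuse requests that arrive directly from outside


class MemoryModule:
    """In-process stand-in for the memory layer; a dict replaces the real backend."""

    def __init__(self, config: MemoryModuleConfig,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.config = config
        self._clock = clock
        # key -> (expiry timestamp, cached value)
        self._store: Dict[str, Tuple[float, bytes]] = {}

    def _check_access(self, internal: bool) -> None:
        # Access request mode: only internal callers may reach the module.
        if self.config.internal_only and not internal:
            raise PermissionError("memory module accepts internal requests only")

    def set(self, key: str, value: bytes, *, internal: bool) -> None:
        self._check_access(internal)
        self._store[key] = (self._clock() + self.config.cache_ttl_s, value)

    def get(self, key: str, *, internal: bool) -> Optional[bytes]:
        self._check_access(internal)
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # The entry has passed its invalidation time: drop it and report a miss.
            del self._store[key]
            return None
        return value
```

Passing a custom `clock` makes the invalidation time easy to exercise without waiting, and the `internal` flag stands in for whatever mechanism the web server uses to distinguish internal from external requests.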
