Video target tracking method based on compound sparse model

A technology involving sparse models and target tracking, applied in the field of video target tracking based on a compound sparse model.


AI Technical Summary

Problems solved by technology

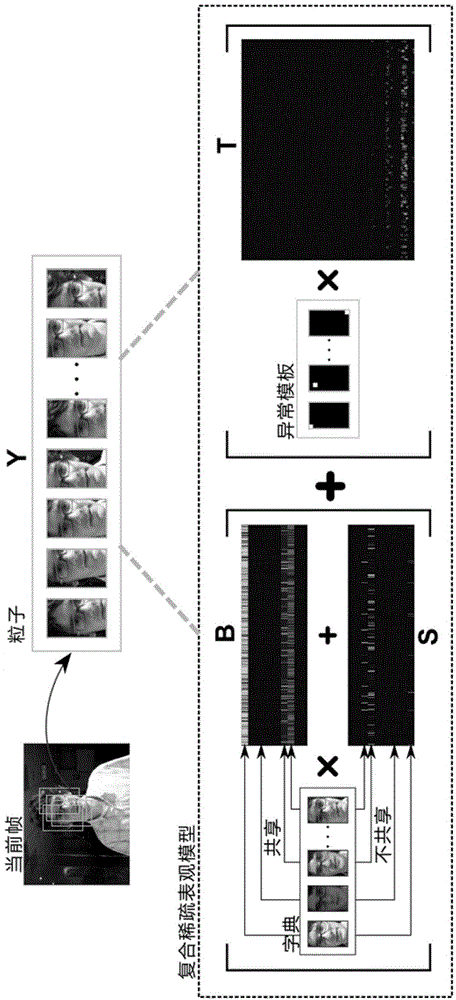

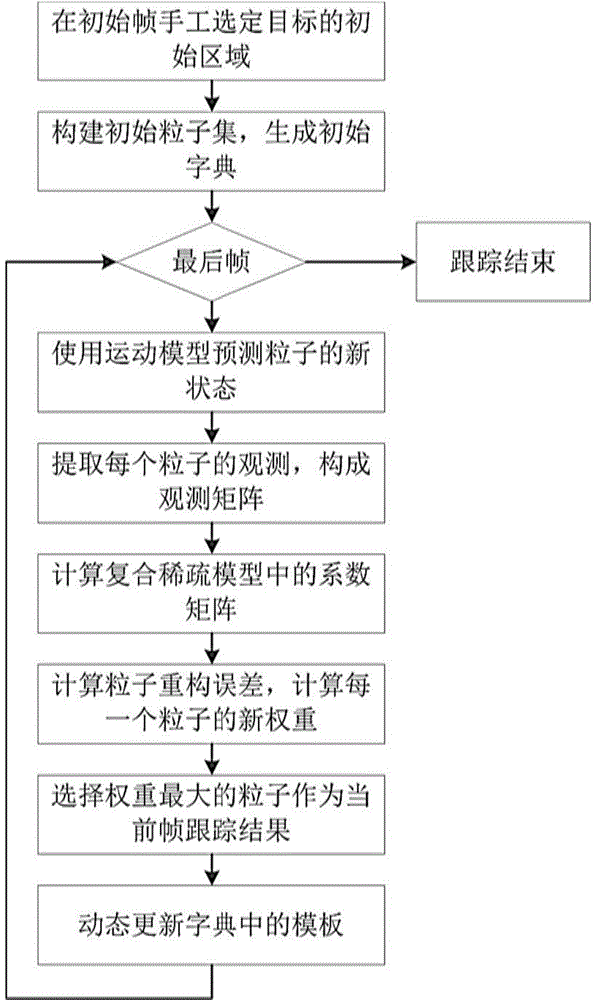

Method used


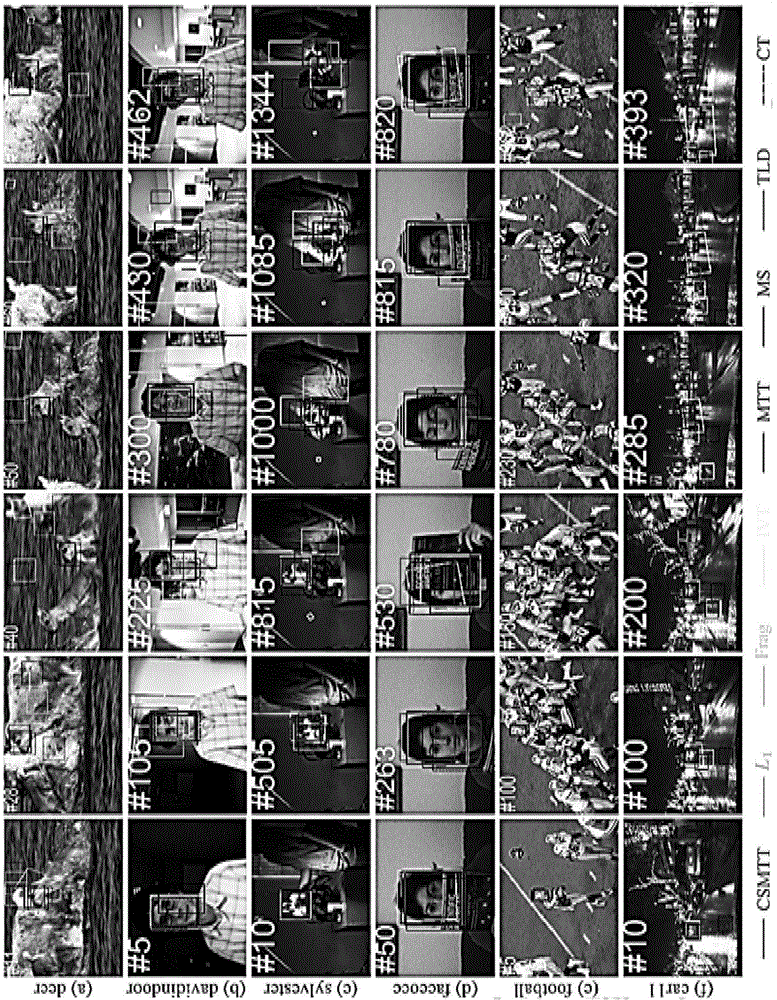

Examples

Embodiment 1

[0039] In this embodiment, the state of the target is described by a six-parameter affine model, $x_t = \{a_t, b_t, \theta_t, s_t, \alpha_t, \phi_t\}$, whose six parameters represent the position coordinates ($a_t$, $b_t$), rotation angle, scale, aspect ratio, and tilt angle, respectively. The motion model of the target is a random walk: each new state is obtained by Gaussian sampling around the previous state. The raw observation of the target is the pixel values of the region defined by the state; this region is down-sampled and flattened into a vector, which is the observation actually used in the compound sparse model. The likelihood function $p(O_t \mid x_t)$ is defined by the similarity between the particle's appearance and the dictionary templates; that is, the reconstruction error of the particle under the compound sparse appearance model is assumed to follow a Gaussian distribution. At time $t$, the current dictionary is $D_{t-1}$, and the $n$ particles are ... Then, in this example, ...
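The particle-filter steps described in paragraph [0039] (affine state, random-walk motion, warped and down-sampled observation, Gaussian likelihood of the reconstruction error) can be sketched in Python with NumPy as below. This is a minimal sketch under stated assumptions, not the patent's implementation: all function names are hypothetical; the decomposition that turns the six state parameters into an affine matrix, the 16×16 nearest-neighbour sampling grid, the unit-norm normalisation, the noise scales, and the Gaussian width `sigma` are assumptions; and because the compound sparse model itself is not reproduced in this excerpt, `particle_weights` takes the sparse codes as an input (the demo uses an ordinary least-squares fit purely as a placeholder).

```python
import numpy as np

# Assumed state layout: x = [a, b, theta, s, alpha, phi]
# (x/y position, rotation, scale, aspect ratio, tilt/skew angle).


def rot(t):
    """2x2 rotation matrix for angle t (radians)."""
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s], [s, c]])


def state_to_affine(x):
    """Map a six-parameter state to a 2x3 affine matrix.

    Assumed decomposition (one common choice, not taken from the patent):
    A = s * R(theta) @ R(-phi) @ diag(1, alpha) @ R(phi), translation (a, b).
    """
    a, b, theta, s, alpha, phi = x
    A = s * rot(theta) @ rot(-phi) @ np.diag([1.0, alpha]) @ rot(phi)
    return np.hstack([A, [[a], [b]]])


def random_walk(prev_state, n, sigma, rng):
    """Random-walk motion model: n samples from N(prev_state, diag(sigma**2))."""
    return prev_state + rng.normal(size=(n, 6)) * sigma


def observe(image, affine, out_shape=(16, 16), normalize=True):
    """Sample the region defined by `affine` on an out_shape grid using
    nearest-neighbour lookup (crop + down-sample in one step), then flatten."""
    h, w = out_shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    xs -= (w - 1) / 2.0  # template coordinates centred on the target
    ys -= (h - 1) / 2.0
    pts = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])  # 3 x (h*w)
    img_x, img_y = affine @ pts  # image (column, row) coordinates
    cols = np.clip(np.rint(img_x).astype(int), 0, image.shape[1] - 1)
    rows = np.clip(np.rint(img_y).astype(int), 0, image.shape[0] - 1)
    y = image[rows, cols].astype(float)
    if normalize:
        norm = np.linalg.norm(y)
        y = y / norm if norm > 0 else y
    return y


def particle_weights(observations, D, coeffs, sigma=0.1):
    """Normalised Gaussian likelihoods of the particles' reconstruction errors.

    observations: (d, n), one column per particle; D: (d, k) dictionary;
    coeffs: (k, n) codes, assumed to come from the compound sparse model
    (not reproduced here).
    """
    err = np.sum((observations - D @ coeffs) ** 2, axis=0)
    log_lik = -err / (2.0 * sigma ** 2)
    log_lik -= log_lik.max()  # subtract the max for numerical stability
    w = np.exp(log_lik)
    return w / w.sum()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frame = rng.random((120, 160))  # stand-in grey-level frame
    prev = np.array([80.0, 60.0, 0.0, 1.0, 1.0, 0.0])
    sigma = np.array([4.0, 4.0, 0.01, 0.01, 0.005, 0.001])  # hypothetical
    particles = random_walk(prev, 200, sigma, rng)
    Y = np.stack([observe(frame, state_to_affine(p)) for p in particles], axis=1)
    D = Y[:, :10]  # placeholder dictionary, for the demo only
    C = np.linalg.lstsq(D, Y, rcond=None)[0]  # placeholder, not sparse codes
    w = particle_weights(Y, D, C)
    print(particles[np.argmax(w)])  # highest-weight particle as the estimate
```

Sampling on a fixed grid through the affine map performs the crop and the down-sampling in a single pass, so every particle yields an observation vector of the same length, which a dictionary-based appearance model requires. Taking the highest-weight particle as the tracking result is a common particle-filter choice and is likewise an assumption here.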
