A data prefetching method based on mapreduce

A data prefetching and data block technology, applied in the computer field, can solve the problems of not being able to guarantee the prefetching effect, not taking into account the performance factors of computing nodes, etc., to achieve the effect of improving the overall throughput rate, flexible and convenient implementation, and shortening the execution time

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0031] This specific embodiment adopts following technical scheme:

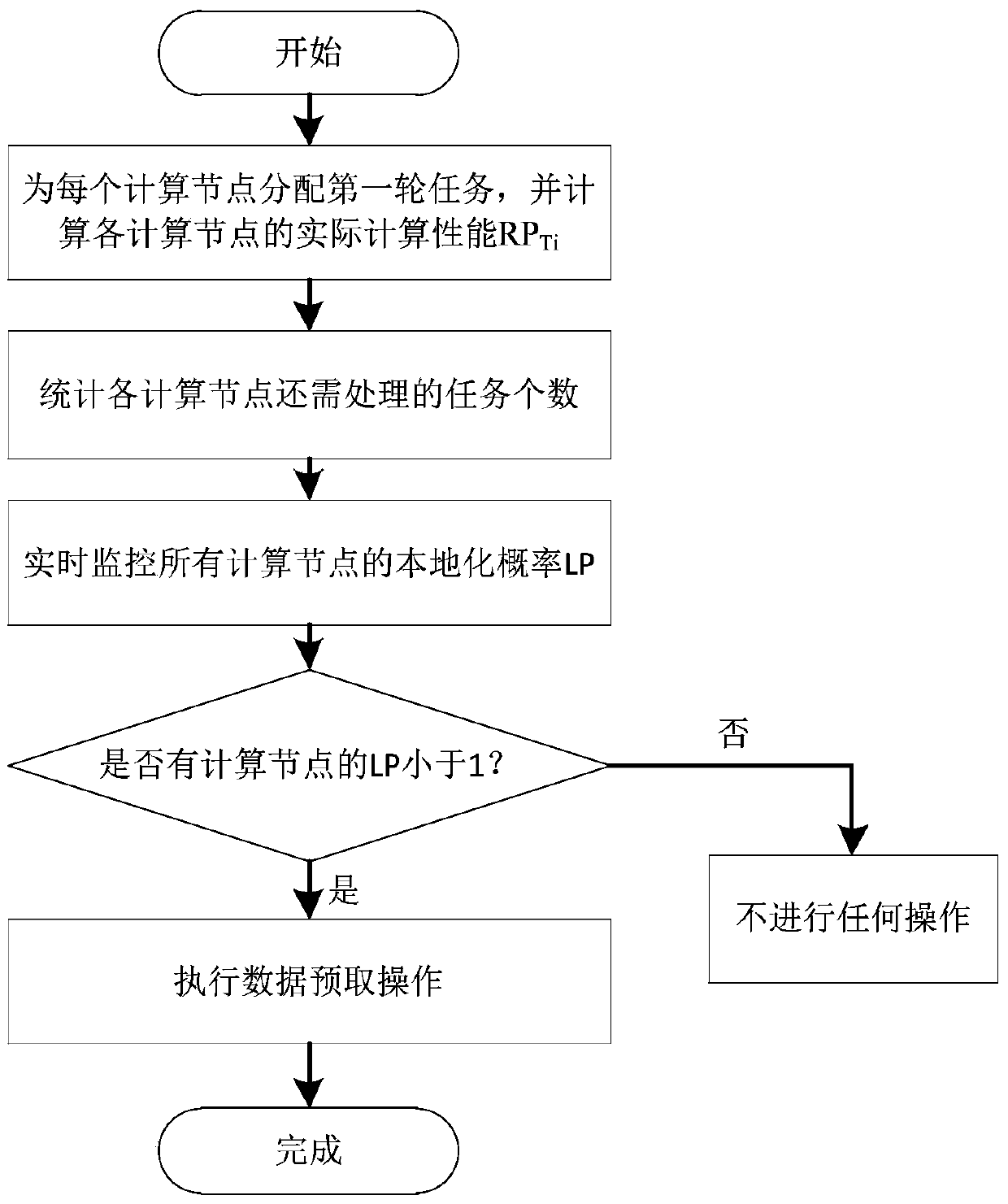

[0032] A data prefetching strategy method based on MapReduce, its process is as follows figure 1 As shown, on a cluster with n physical computing nodes, for a specific job A that is scheduled, data prefetching is performed in the following way during its implementation:

[0033] Step 1: Since clusters can be divided into homogeneous and heterogeneous, it is assumed that the cluster is homogeneous when the calculation has not yet started, that is, it is assumed that the computing performance of all physical computing nodes P i are all 1, where i∈[1,n]; for job A, assuming that the number of data blocks corresponding to the job is b, and the default number of backups for each data block on HDFS is 3, set The number of data blocks is F Ti , then the total number of data blocks ∑F Ti = 3b;

[0034] The number of localized data blocks of job A on each computing node is used as a parameter to establish a small to...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com