Spatial relationship matching method and system applicable to video/image local features

A spatial relationship matching technology for local features, applied in special data processing applications, instruments, electrical digital data processing, etc., that can address the reduced discriminative ability of SIFT local features.



Embodiment Construction

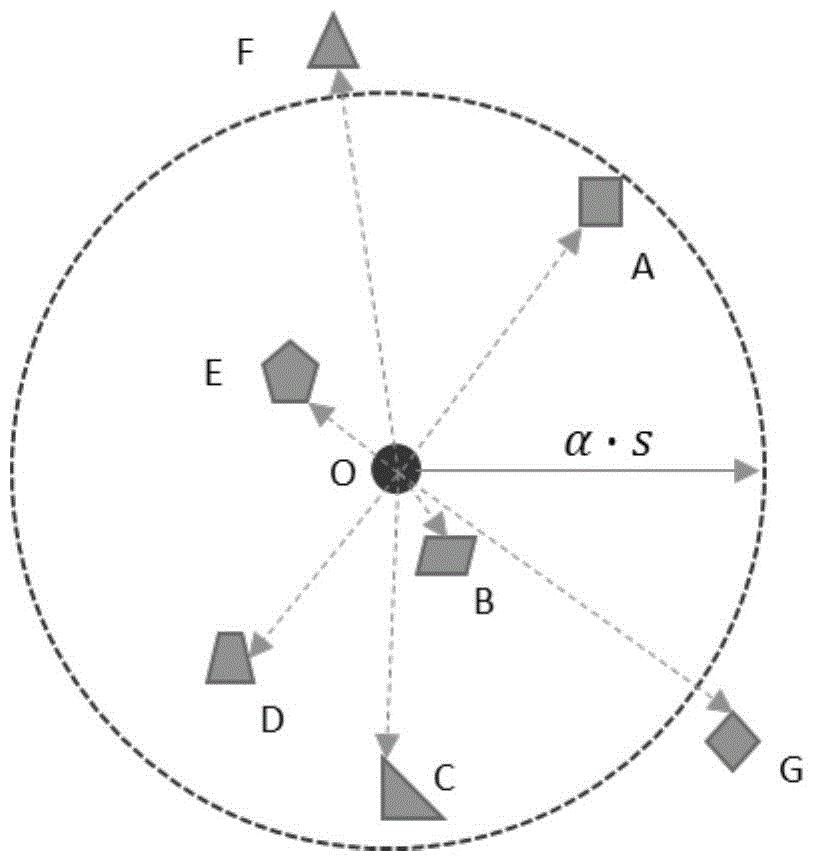

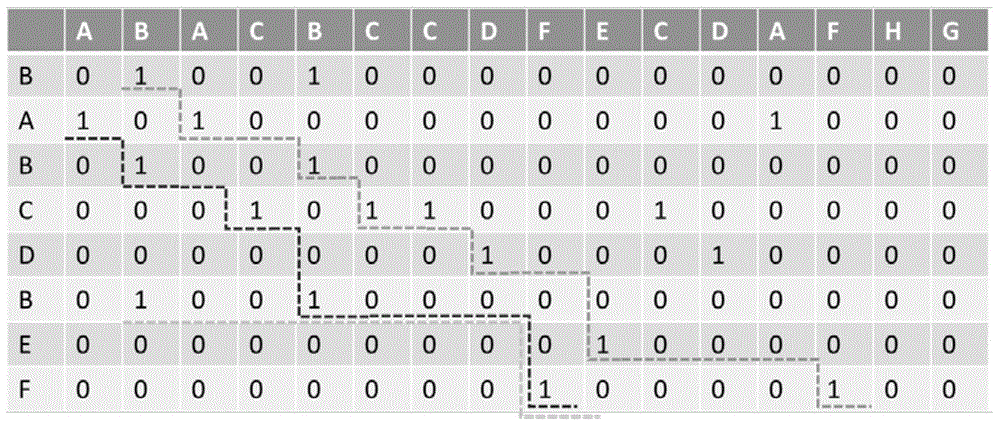

[0049] To solve the above technical problems, the present invention proposes a spatial relationship matching method applicable to video/image local features, comprising the following steps:

[0050] Step 1: obtain all feature points of the video/image together with the attribute information of those feature points, and derive the scale information of every feature point from the feature points and their attribute information. Using the scale information, determine the local neighborhood space of each feature point and obtain the visual keyword codes of all feature points within that local neighborhood space. Quantize these visual keyword codes to generate new visual keyword codes, then sort the new visual keyword codes to generate the spatial relationship code of the feature point;

[0051] Step 2: compare the video/image feature points to be matched ...
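
The encoding described in Step 1 can be illustrated with a minimal Python sketch. It is not the patented implementation: the text does not specify how the neighborhood radius is derived from scale or how the visual keyword codes are quantized, so the `radius_factor` multiplier, the modulo quantization with `num_bins`, and all names (`FeaturePoint`, `spatial_relationship_code`) are assumptions made for illustration only.

```python
import math
from dataclasses import dataclass


@dataclass
class FeaturePoint:
    x: float          # image coordinates of the feature point
    y: float
    scale: float      # scale reported by the detector (e.g. SIFT)
    visual_word: int  # visual keyword code assigned to the descriptor


def spatial_relationship_code(points, index, radius_factor=3.0, num_bins=256):
    """Build a spatial relationship code for points[index].

    Assumptions (not stated in the source text):
    - the local neighborhood is a disc of radius radius_factor * scale;
    - quantization maps each visual word into num_bins buckets via modulo.
    """
    center = points[index]
    radius = radius_factor * center.scale

    quantized = []
    for j, p in enumerate(points):
        if j == index:
            continue  # the point itself is not its own neighbor
        if math.hypot(p.x - center.x, p.y - center.y) <= radius:
            # Quantize the neighbor's visual keyword code into a new, coarser code.
            quantized.append(p.visual_word % num_bins)

    # Sorting makes the code independent of the order in which neighbors were found.
    return tuple(sorted(quantized))


points = [
    FeaturePoint(0.0, 0.0, 2.0, 10),      # center: radius = 3.0 * 2.0 = 6.0
    FeaturePoint(1.0, 1.0, 1.0, 300),     # inside, 300 % 256 = 44
    FeaturePoint(5.0, 0.0, 1.0, 17),      # inside, 17 % 256 = 17
    FeaturePoint(100.0, 100.0, 1.0, 5),   # outside every neighborhood
]
print(spatial_relationship_code(points, 0))  # (17, 44)
```

Because the code is a sorted tuple, two feature points with the same set of quantized neighbor words produce identical codes regardless of detection order, which is one plausible way such codes could be compared in a later matching step.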
