68 results for "Scale invariant" patented technology

Tooth X-ray image matching method based on SURF point matching and RANSAC model estimation

Inactive | CN103886306A | Reduce volume | Avoid destruction | Character and pattern recognition | Correlation coefficient | Feature extraction

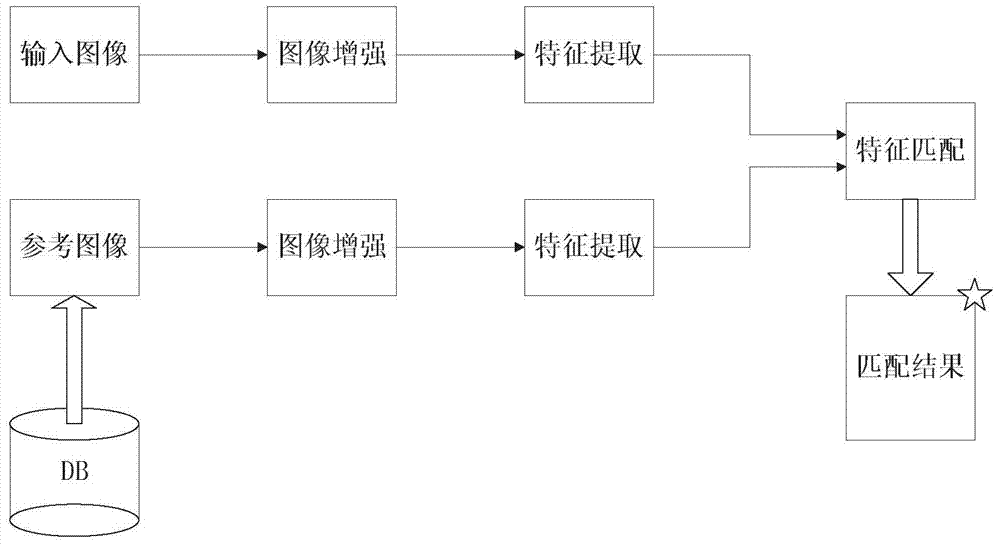

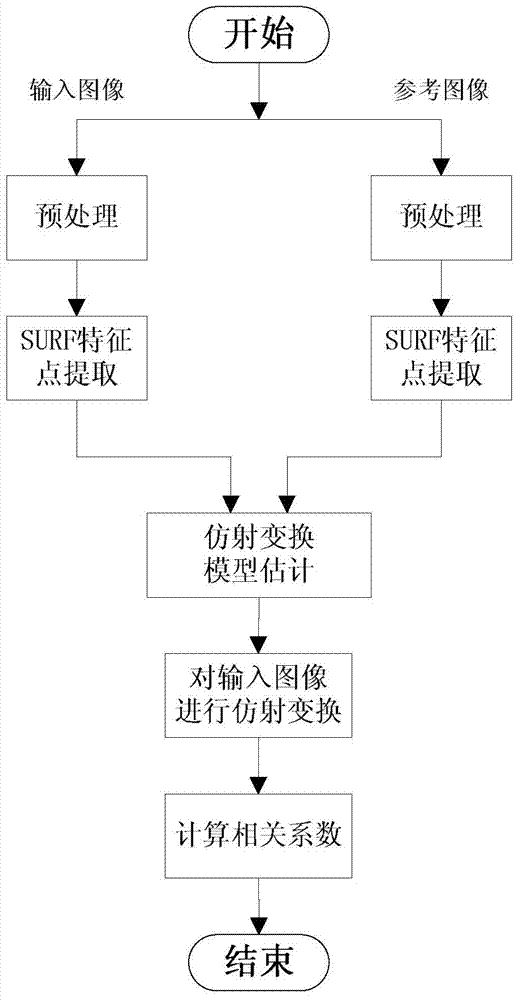

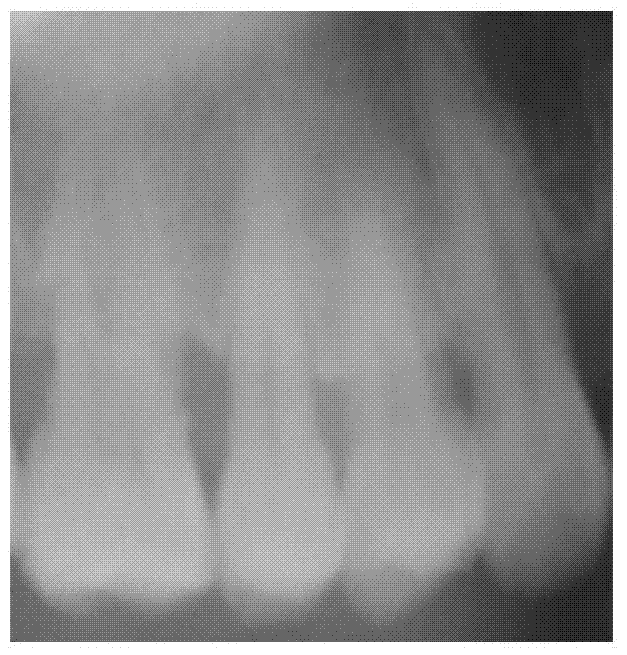

The invention relates to a tooth X-ray image matching method based on SURF point matching and RANSAC model estimation. The method comprises the following steps: (1) images are collected; (2) the images are enhanced; (3) features are extracted; (4) the features are matched; (5) the correlation coefficients of the match are calculated; (6) the matching result is displayed; (7) if the image library still contains reference images that have not taken part in matching, the method returns to step (1) and selects a new reference image from the library, and if all images have taken part in matching, the operation ends; (8) if matching succeeds, the personal information corresponding to the reference image is recorded and the operation ends. Feature points of an input tooth image and a reference tooth image are extracted with the SURF algorithm, the feature points are then matched with the RANSAC algorithm, and finally the degree of matching between the two images is measured by the correlation coefficient of the two matched images. Experiments show that the method achieves high precision and high real-time performance.
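The final step of the abstract, scoring two matched images by their correlation coefficient, can be illustrated with a minimal sketch. It assumes the two images have already been aligned and flattened into equal-length intensity lists; the function names and the 0.9 threshold are hypothetical and are not taken from the patent.

```python
import math


def correlation_coefficient(a, b):
    """Pearson correlation between two equal-length intensity sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        # A constant image has no structure to correlate against.
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def is_match(a, b, threshold=0.9):
    """Hypothetical decision rule: accept when the correlation is high enough."""
    return correlation_coefficient(a, b) >= threshold
```

Because the coefficient subtracts the mean and divides by the spread, it is unaffected by a uniform brightness offset or contrast gain between the two radiographs, which is one plausible reason to use it as the final matching score.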

Owner:SHANDONG UNIV

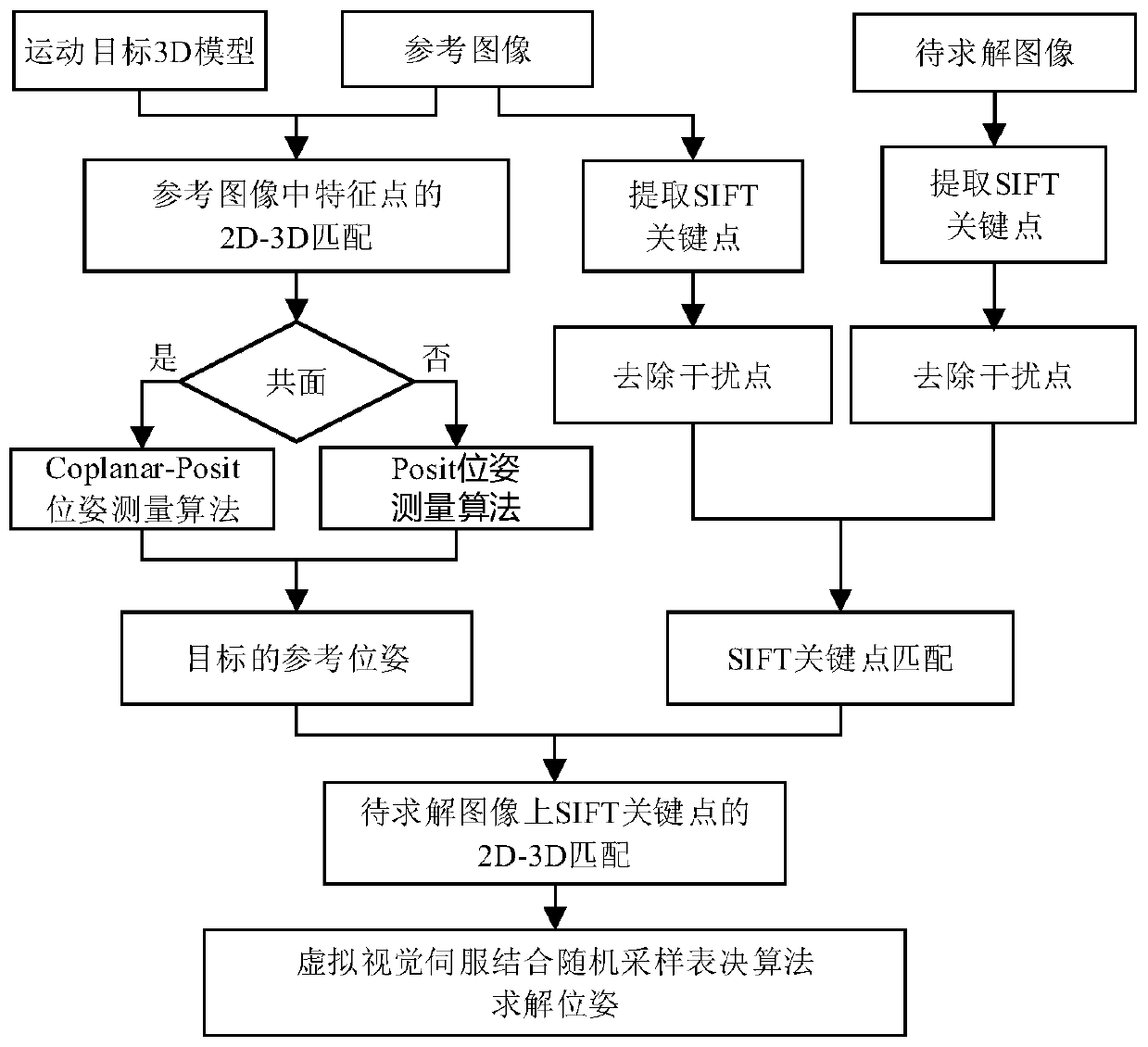

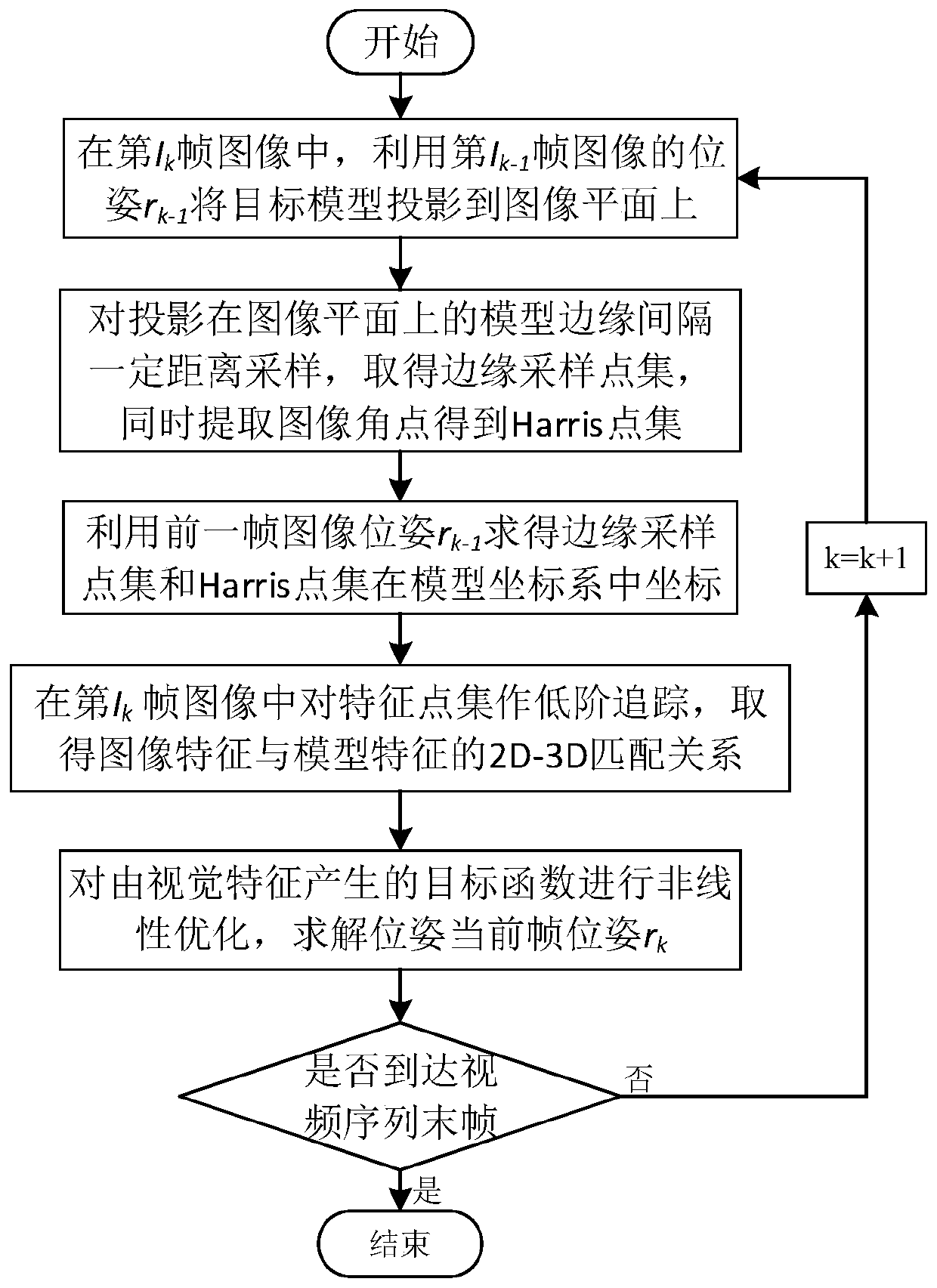

A pose measurement method combining initial pose measurement with target tracking

Pending | CN109712172A | Achieve real-time output | Rotation invariant | Image analysis | Character and pattern recognition | Moving average | Video sequence

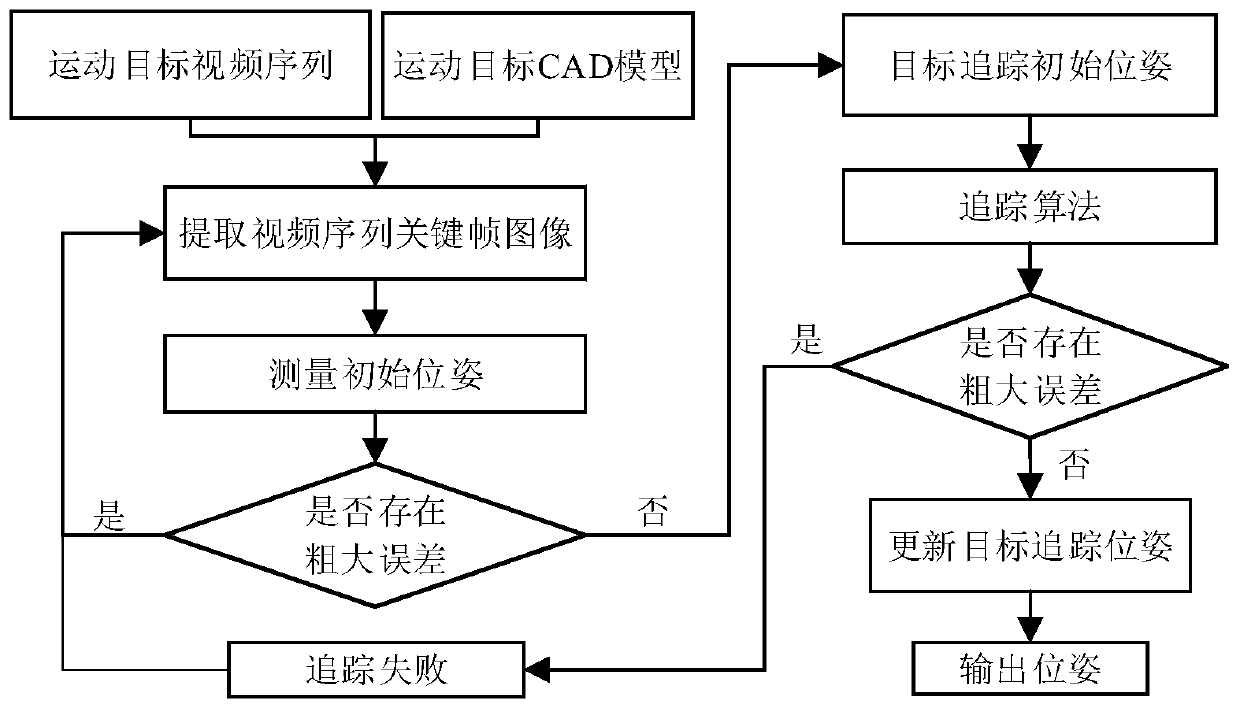

The invention discloses a pose measurement method combining initial pose measurement with target tracking, and belongs to the field of computer vision. The invention aims to output the pose parameters of an irregularly moving target in a video sequence in real time. The initial pose of the target is measured first and is then used to start the subsequent target tracking, so that the pose parameters are output in real time. The method comprises reference pose measurement, feature point extraction and matching, and pose measurement based on multi-point matching. Target tracking comprises searching and matching of edge features, tracking and matching of point features, and nonlinear optimization of the pose parameters. By combining the initial pose of the target with a target tracking method, the method outputs the pose parameters of the moving target in a video sequence in real time; it adapts to some degree to changes in the environment, and the pose of the object can still be measured when the object is partially occluded.

Owner:HARBIN INST OF TECH

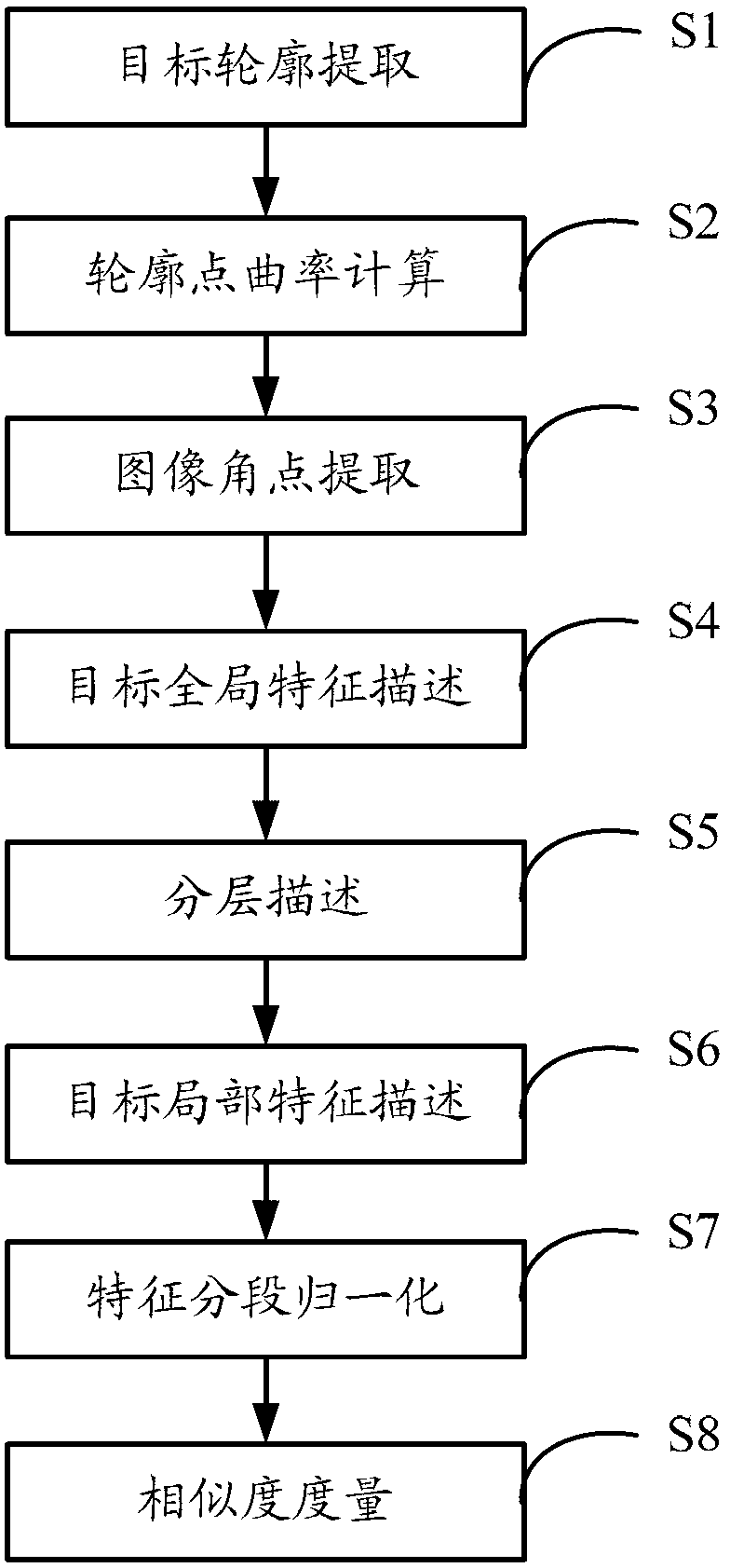

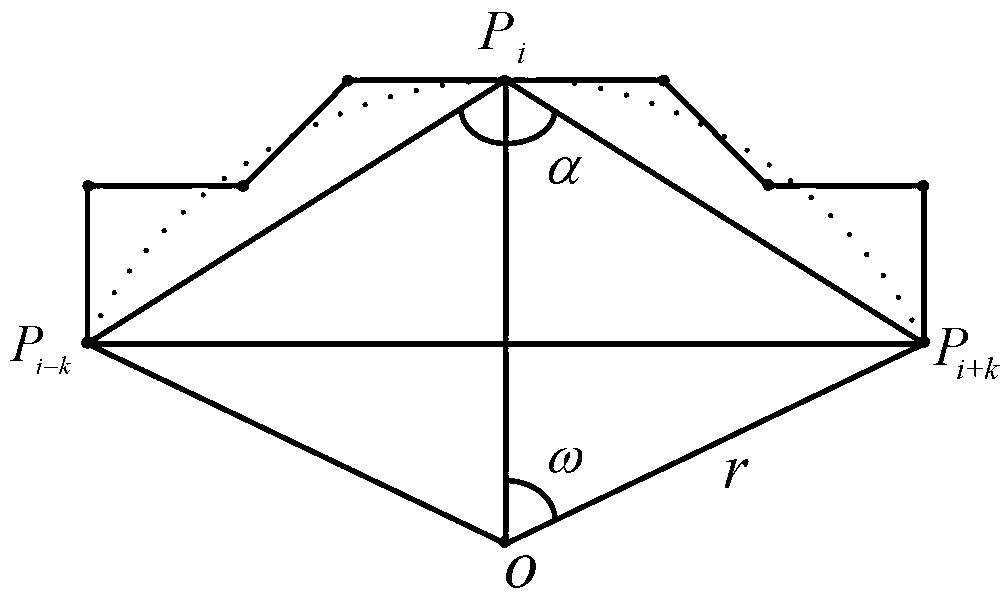

Target recognition and shape retrieval method based on hierarchical description

Inactive | CN103345628A | Improve accuracy and robustness | Reduce computational complexity | Character and pattern recognition | Translation invariance | Scale invariance

The invention discloses a target recognition and shape retrieval method based on hierarchical description. The method comprises the following steps: extracting the contour of a target with a contour extraction algorithm; calculating the curvature at each point on the contour; extracting the corner points of the target by non-maximum suppression; taking the contour segment between every two corner points as a global feature descriptor of the target; describing the contour points hierarchically according to curvature; describing the contour segments hierarchically according to the importance of their feature values; merging contour segments whose values fall below the evaluation thresholds into contour feature segments that serve as local feature descriptors of the target; normalizing the contour feature segments; and measuring the similarity of the contour feature segments of different targets by Shape Context distance. The method extracts features from a target shape effectively, achieves scale invariance, rotation invariance and translation invariance, improves recognition accuracy and robustness, and reduces computational complexity.
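The abstract does not say how the contour feature segments are normalized. As a hedged illustration of how normalization can yield translation and scale invariance, the sketch below centers a contour on its centroid and rescales it to unit root-mean-square radius. This is a generic normalization chosen for illustration, not the patent's procedure, and it does not address rotation.

```python
import math


def normalize_contour(points):
    """Center a contour on its centroid and scale it to unit RMS radius.

    points: list of (x, y) tuples sampled along the contour.
    """
    n = len(points)
    if n == 0:
        raise ValueError("contour must contain at least one point")
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centered = [(x - cx, y - cy) for x, y in points]
    rms = math.sqrt(sum(x * x + y * y for x, y in centered) / n)
    if rms == 0:
        raise ValueError("degenerate contour: all points coincide")
    return [(x / rms, y / rms) for x, y in centered]
```

After this step, a shape and a shifted, uniformly enlarged copy of it map to the same point list (up to floating-point error), so any distance computed afterwards ignores position and size.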

Owner:SUZHOU UNIV

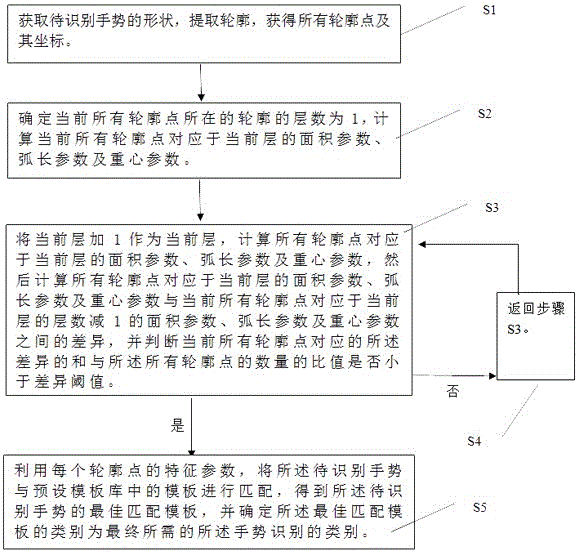

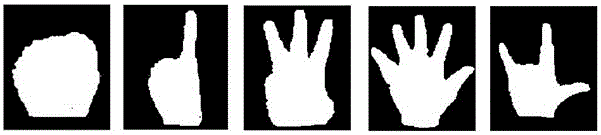

Gesture identification method and apparatus

Inactive | CN106022227A | Reduce dimensionality | Avoid situations with low recognition accuracy | Character and pattern recognition | Template based | Gravity center

The invention discloses a gesture identification method. The method comprises: obtaining the shape of a gesture to be identified, extracting a closed contour from the edge of the gesture shape, and obtaining all contour points on the contour together with the coordinates of each contour point; determining the number of layers of the contour, and calculating, from the coordinates of each contour point, an area parameter, an arc length parameter and a center-of-gravity parameter for each contour point at each layer, these parameters serving as the feature parameters of the contour point; and matching the gesture to be identified against the templates in a preset template library using the feature parameters of each contour point to obtain the best matching template, which is taken as the identified gesture. The invention describes the global features, the local features, and the relation between them; it provides a multi-scale, omnidirectional analysis and representation; it extracts and represents the global and local features of the gesture shape effectively; and it avoids the low identification accuracy caused by relying on a single feature.

Owner:SUZHOU UNIV

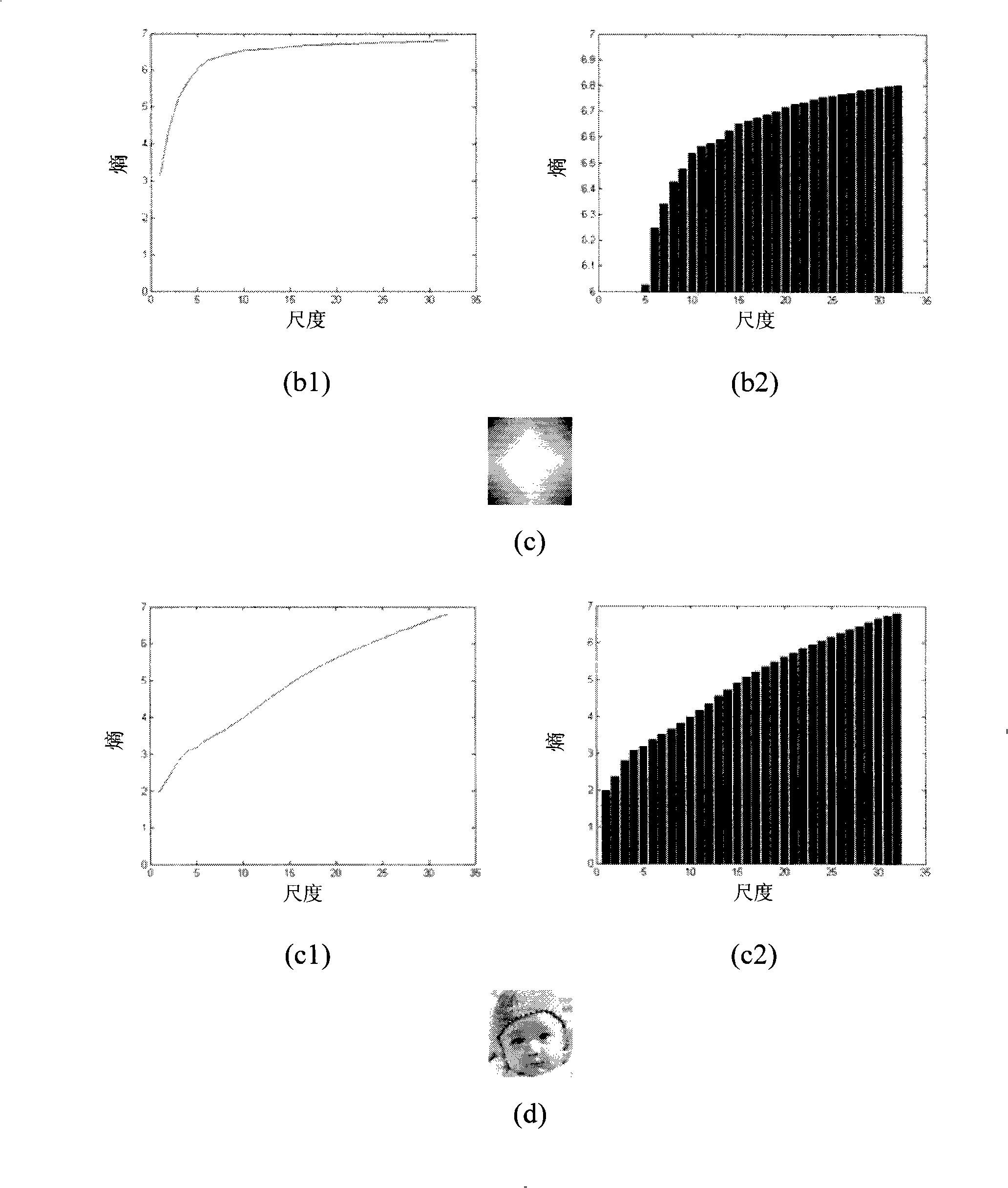

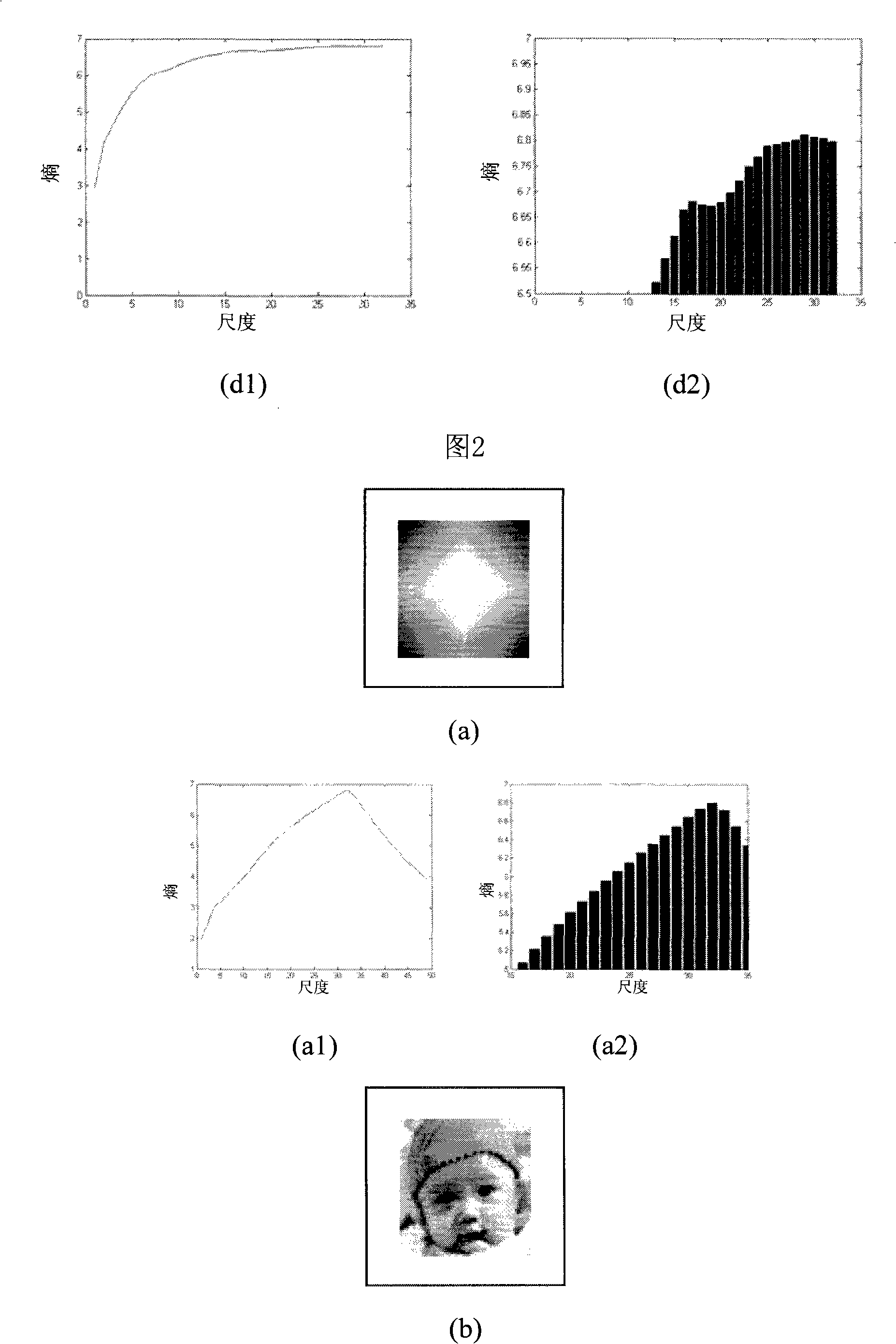

Bottom-up caution information extraction method

Inactive | CN101334834A | With rotation | Scale invariant | Character and pattern recognition | Psychotechnic devices | Attention model | Characteristic space

The invention provides a bottom-up attention information extraction method based on research results on visual attention in psychology. The bottom-up attention information is formed from the saliency of the region corresponding to each point of an image, and the size of the region adapts automatically to the complexity of the local features. The new saliency measure takes three characteristics into account together: local complexity, statistical dissimilarity and primary visual features. Salient regions are salient in both feature space and scale space. The resulting bottom-up attention information is invariant to rotation, translation and scaling and has a certain resistance to noise. An attention model is developed from the algorithm, and its application to a number of natural images demonstrates the effectiveness of the algorithm.

Owner:BEIJING JIAOTONG UNIV

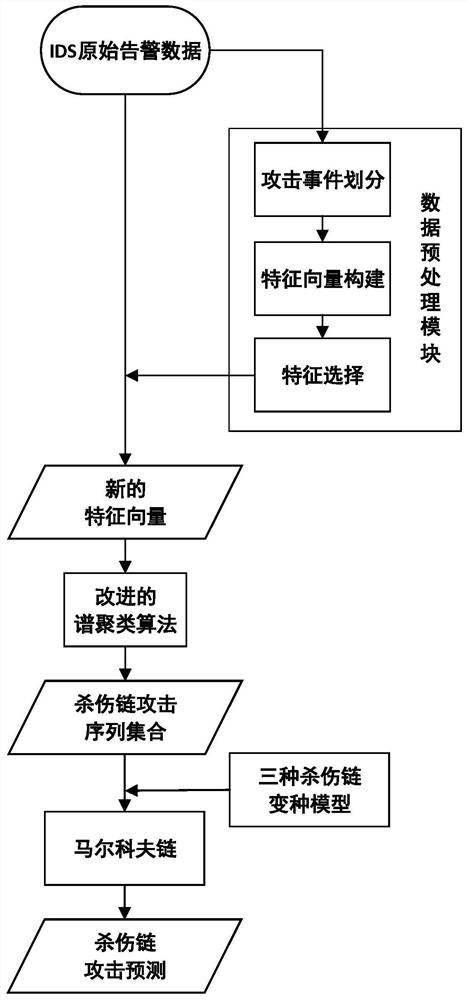

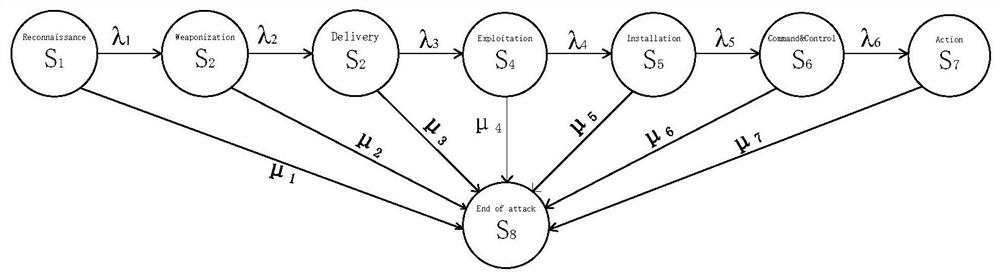

Network killing chain detection method, prediction method and system

Active | CN112087420A | Effective automatic identification | Implementing Unsupervised Learning | Character and pattern recognition | Machine learning | Feature vector | Spectral clustering algorithm

The invention discloses a network kill chain detection method, a network kill chain prediction method, and a network kill chain detection and prediction system. The detection method comprises the following steps: (1) constructing a d-dimensional feature vector; (2) reducing the d-dimensional feature vector to a k-dimensional feature vector with an unsupervised feature selection algorithm; (3) obtaining a set of network kill chain attack event sequences from the k-dimensional feature vector. When kill chains are mined from IDS alarm log data in a real scenario, the number of kill chains contained in the data cannot be known in advance; the improved spectral clustering algorithm disclosed by the invention not only achieves unsupervised learning but also identifies the number of clusters automatically, in contrast to supervised learning methods; (4) performing prediction analysis on the obtained kill chain sequences using Markov theory and three kill chain variant models; and (5) implementing the kill chain detection and prediction system on the basis of the theoretical analysis.
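Step (4) applies Markov theory to the mined kill chain sequences. The abstract does not describe the three variant models, so the sketch below shows only the plainest reading: a first-order Markov chain whose transition probabilities are estimated by counting consecutive stages. The stage names in the usage note are hypothetical.

```python
from collections import Counter, defaultdict


def transition_probabilities(sequences):
    """Estimate first-order transition probabilities from stage sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for current, following in zip(seq, seq[1:]):
            counts[current][following] += 1
    return {
        state: {nxt: c / sum(followers.values()) for nxt, c in followers.items()}
        for state, followers in counts.items()
    }


def predict_next(model, state):
    """Return the most likely next stage, or None if the state was never left."""
    followers = model.get(state)
    if not followers:
        return None
    return max(followers, key=followers.get)
```

For example, given the three hypothetical chains `recon, delivery, exploit`, `recon, delivery, install` and `recon, delivery, exploit`, the model assigns probability 2/3 to `exploit` following `delivery`, so `predict_next(model, "delivery")` returns `"exploit"`.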

Owner:XIDIAN UNIV

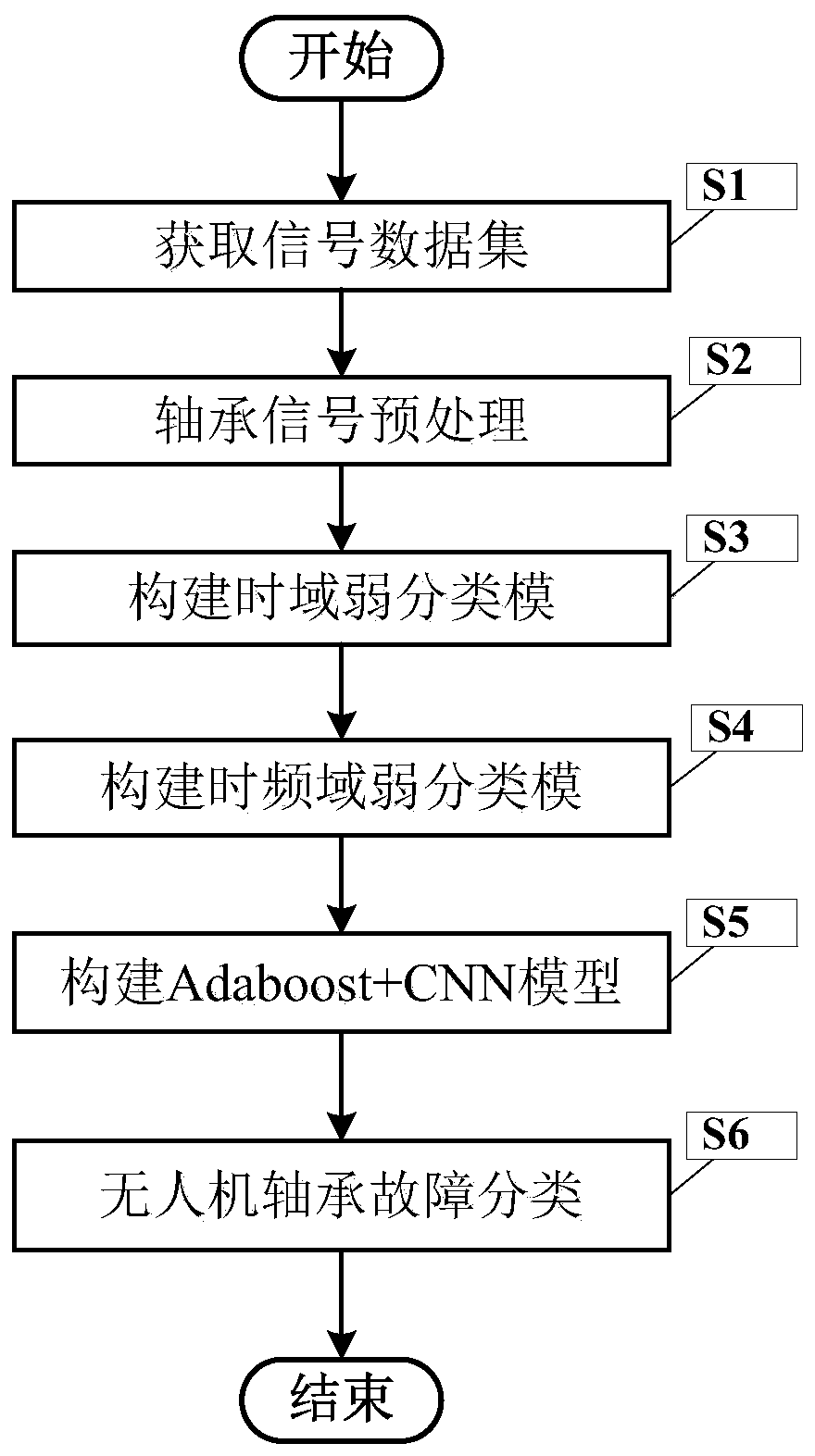

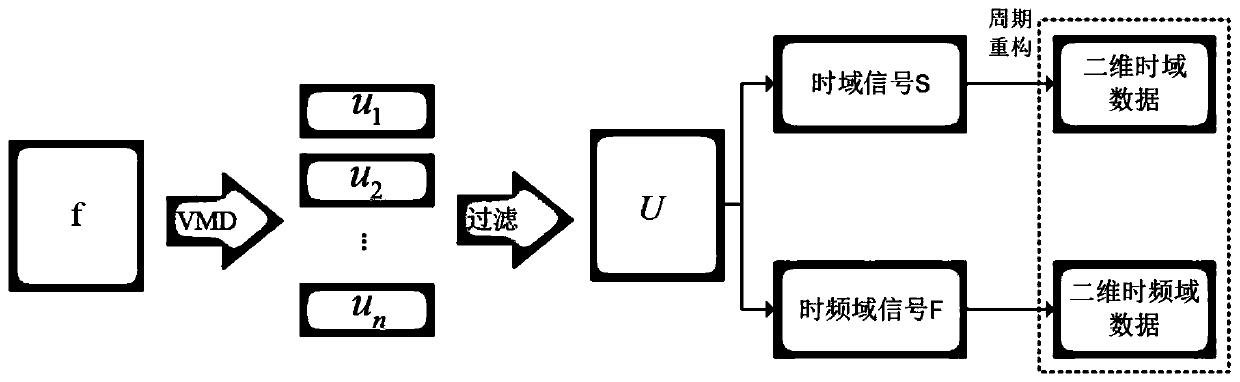

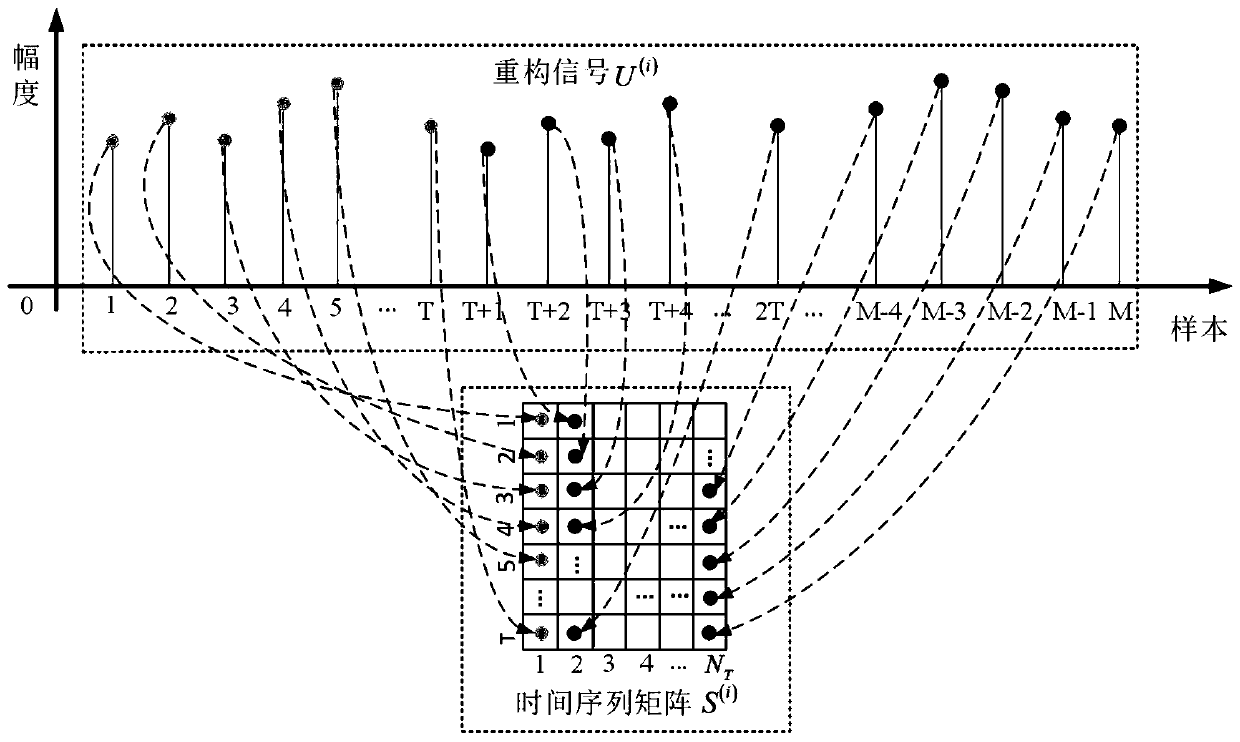

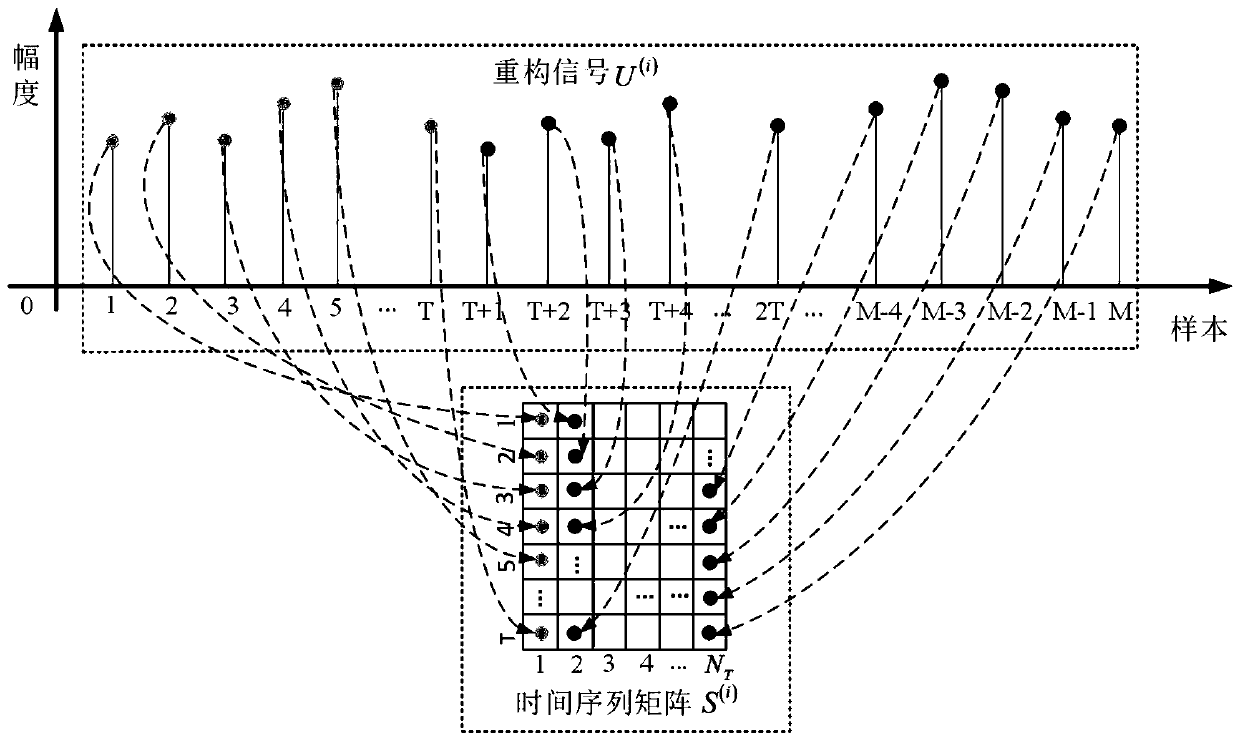

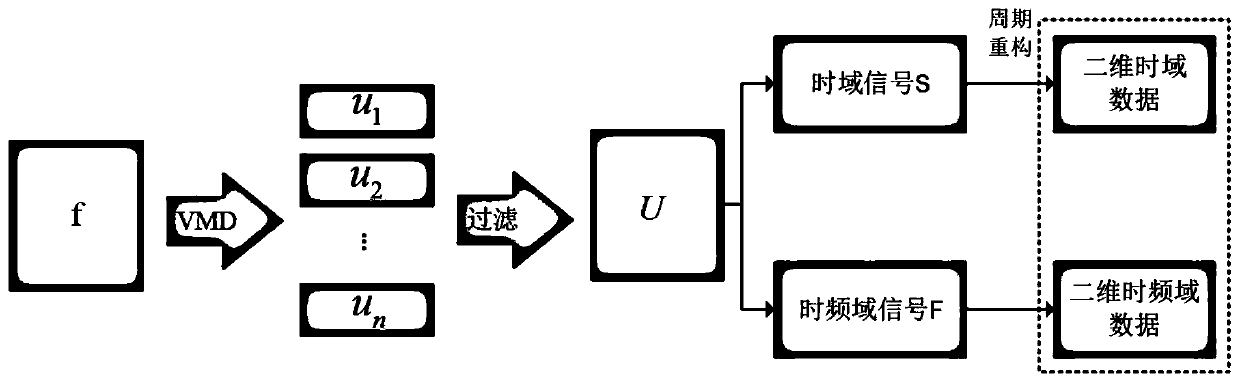

Bearing fault classification method based on CNN and Adaboost

Active | CN110307982A | Easy to handle | Scale invariant | Machine part testing | Ensemble learning | Time domain | Classification methods

The invention discloses a bearing fault classification method based on CNN and Adaboost. A bearing signal is collected and preprocessed, and a time-domain signal and a time-frequency-domain signal are extracted; a time-domain weak classification module and a time-frequency-domain weak classification module are constructed from the time-domain signal and the time-frequency-domain signal; the two weak classification modules are then integrated, and the membership probability of an unmanned aerial vehicle bearing signal under test is predicted with the integrated classification model. The classification of UAV bearing faults is thereby achieved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

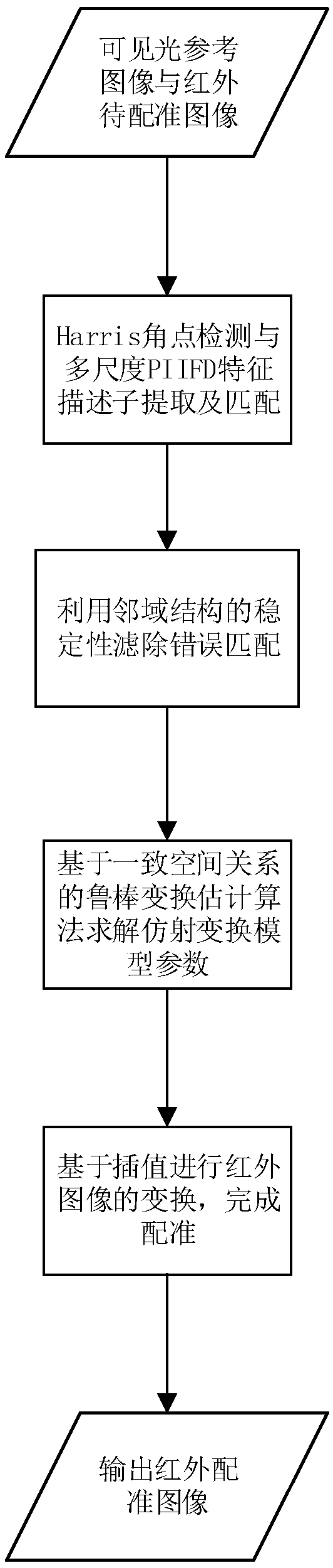

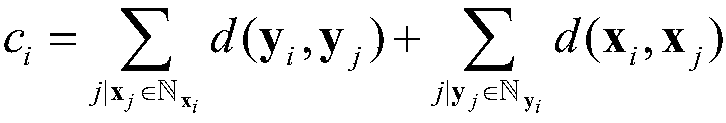

Infrared visible light image registration method and system based on robust matching and transformation

Active | CN109285110A | Scale invariant | Trusted initial match | Geometric image transformation | Imaging Feature | Feature description

The invention provides an infrared and visible light image registration method and system based on robust matching and transformation. A robust feature point detection algorithm and a feature descriptor are used to extract feature descriptor sets from the infrared and visible light images to be registered, and an initial matching is established; the parameters of the affine transformation model between the images are then estimated robustly from the feature point correspondences; finally, the infrared image is transformed by interpolation to complete the registration. The invention takes into account the modality difference and scale difference between infrared and visible light images. During feature matching, the stability of the neighborhood structure of the feature points is used to filter out mismatches, and the parameters of the transformation model are estimated under a uniform space constraint, which makes the method robust to feature extraction across modalities and to feature matching affected by strong noise.

Owner:WUHAN UNIV

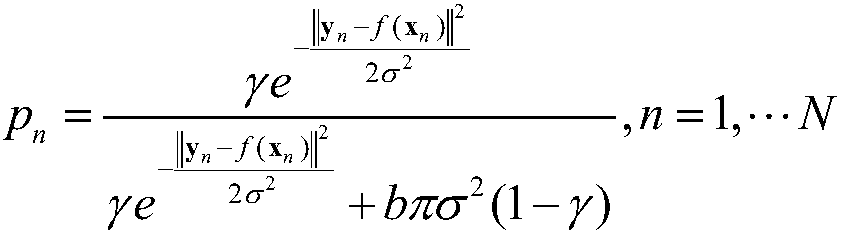

Prostate image segmentation method

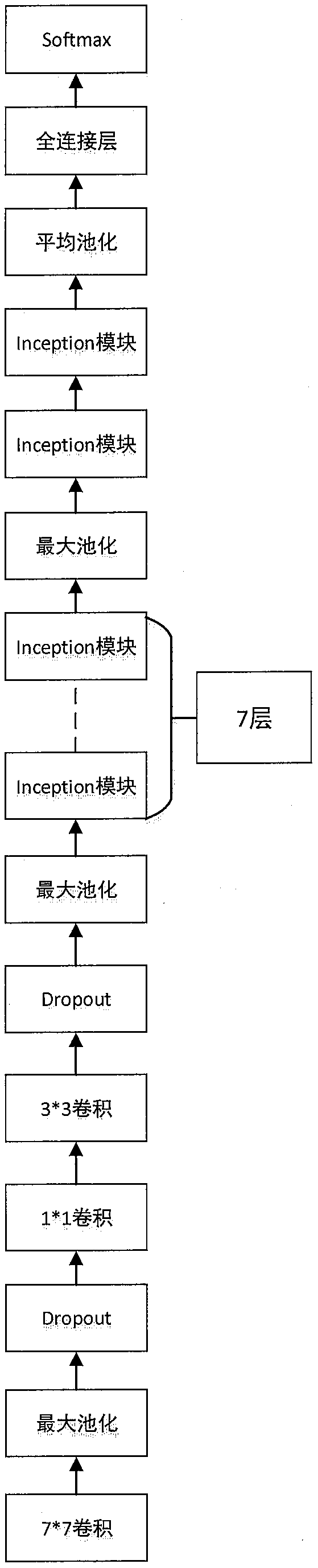

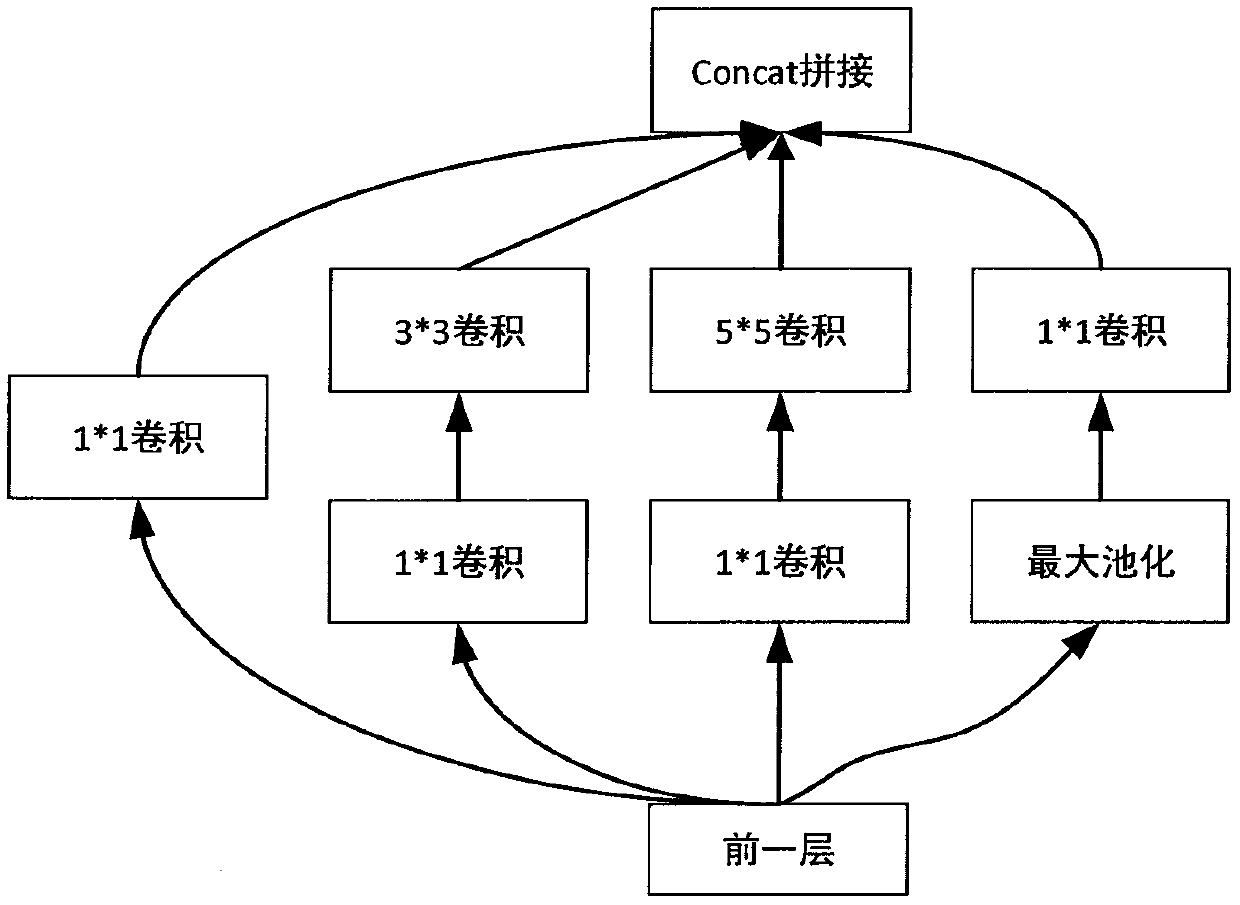

Inactive | CN108765427A | Improve classification accuracy | Not affected by scale | Image enhancement | Image analysis | Network structure | Image segmentation

The invention provides a prostate image segmentation method. The method comprises the following steps: S1, prostate region training samples are acquired and labeled; S2, the training prostate regions are preprocessed; S3, a fully convolutional network structure for segmenting the prostate region of interest is constructed; S4, the training samples are used to train a prostate segmentation model so as to obtain an optimal prostate image segmentation model; S5, a prostate region sample of a subject is acquired and labeled; S6, the test prostate region is preprocessed; S7, the trained segmentation model is used to segment the test set; S8, the segmentation results of the fully convolutional network are post-processed; and S9, evaluation metrics for image segmentation are selected to evaluate the segmentation results statistically. The method improves pixel classification precision, is scale invariant, segments quickly, and has good application prospects.

Owner:BEIJING L H H MEDICAL SCI DEV

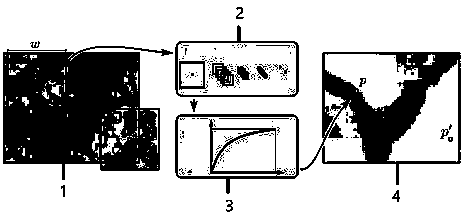

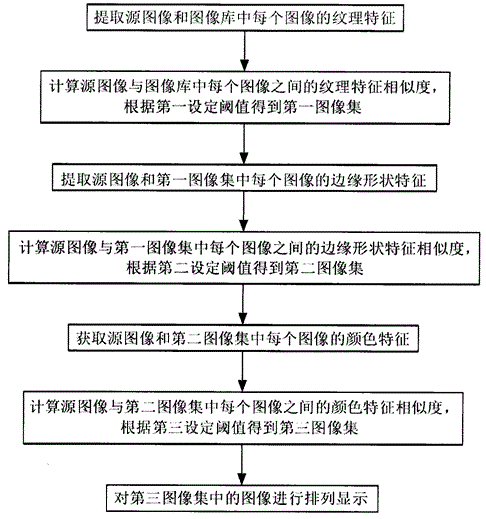

Image retrieval method

Inactive | CN104809245A | Low dimensionality of eigenvectors | Processing speed | Special data processing applications | Image retrieval | Source image

The invention discloses an image retrieval method. On the basis of an image library comprising N comparison images, the method comprises the following steps: extracting the texture features of a source image and each image in the image library; calculating the similarity degree of texture features of the source image and each image in the image library, and obtaining a first image set according to a first set threshold; extracting the shape features of boundary of the source image and each image in the first image set; calculating the similarity degree of shape features of boundary of the source image and each image in the first image set, and obtaining a second image set according to a second set threshold; obtaining the color features of the source image and each image in the second image set; calculating the similarity degree of color features of the source image and each image in the second image set, and obtaining a third image set according to a third set threshold; arranging and showing images in the third image set. The method combines the similarity degree of texture features, the shape features of boundary and color features of the images, so that the degree of accuracy of image retrieval is greatly improved, and the precision of retrieved results is ensured.
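The three-stage filtering described above (texture, then boundary shape, then color, each with its own threshold) can be sketched as a cascade. The abstract does not name the similarity measure or the feature extractors, so cosine similarity over precomputed feature vectors is an assumption here, as are the dictionary layout and all names.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def cascade_retrieve(query, library, thresholds):
    """Filter library images through texture, shape and color stages in turn.

    query: dict mapping stage name to feature vector.
    library: dict mapping image id to a dict with the same stage names.
    thresholds: dict mapping stage name to the minimum similarity to survive.
    Returns surviving image ids, best color similarity first.
    """
    candidates = list(library)
    scores = {}
    for stage in ("texture", "shape", "color"):
        kept = []
        for image_id in candidates:
            s = cosine_similarity(query[stage], library[image_id][stage])
            if s >= thresholds[stage]:
                kept.append(image_id)
                scores[image_id] = s  # overwritten per stage; ends as color score
        candidates = kept
    return sorted(candidates, key=lambda i: scores[i], reverse=True)
```

Each stage only examines the survivors of the previous one, so the later comparisons run on a progressively smaller candidate set.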

Owner:XINYANG NORMAL UNIVERSITY

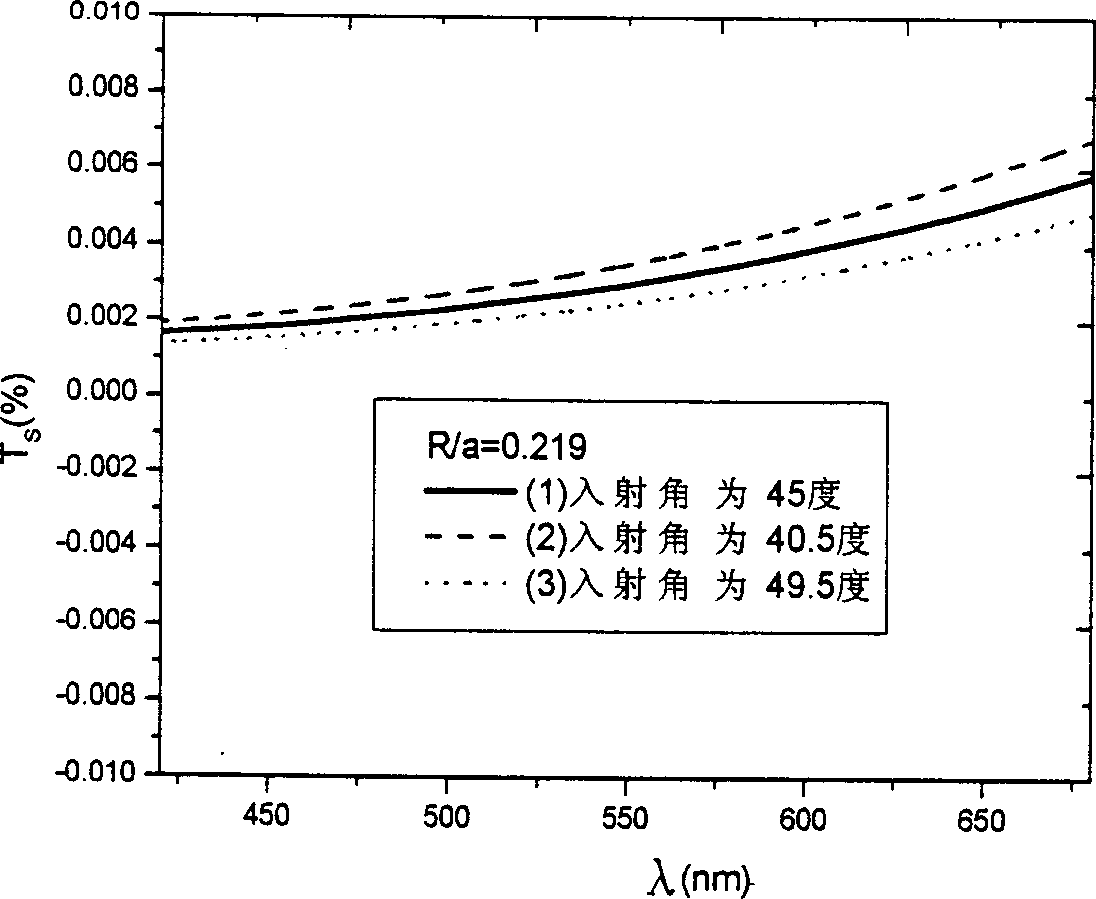

Splitter for high-polarization polarized light beam in visual light band

Inactive | CN1383002A | Has "scale invariance" | Scale invariant | Polarising elements | Non-linear optics | Metallic materials | Polarimeter

The invention discloses a polarizing beam splitter with a high degree of polarization in the visible band, based on a metallic photonic crystal structure. Rods arranged in a two-dimensional array are formed on the substrate. The array can be a square lattice or a hexagonal lattice. The rods are made of metal, and SiO2 fills the space between them. The structure differs from that of a traditional polarizer. By changing the ratio R/a between the radius R of the metal rods and the lattice constant a, the working band can be designed in advance, and a high degree of polarization and a high transmission rate can be obtained.

Owner:SHANGHAI INST OF TECHNICAL PHYSICS - CHINESE ACAD OF SCI

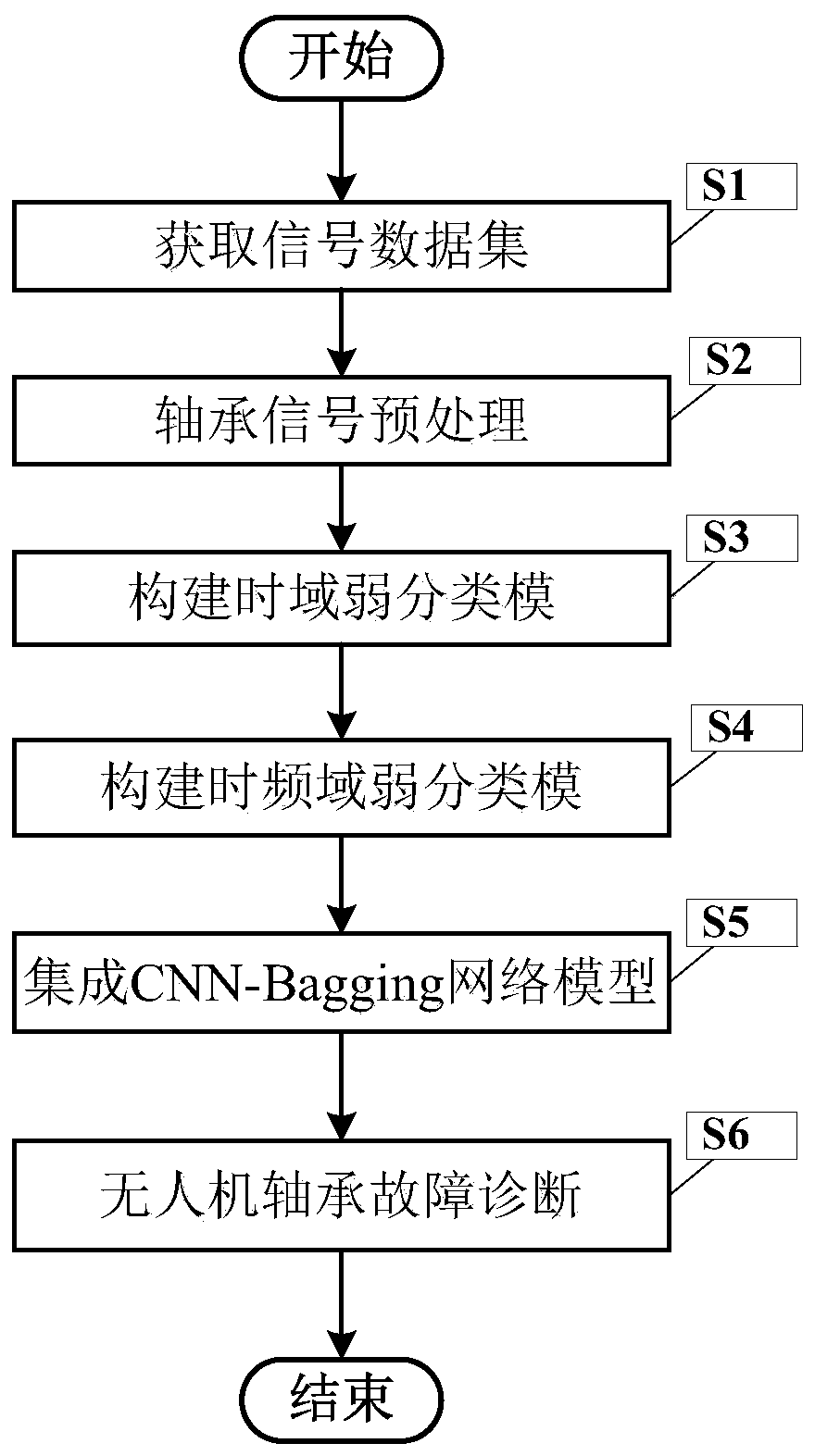

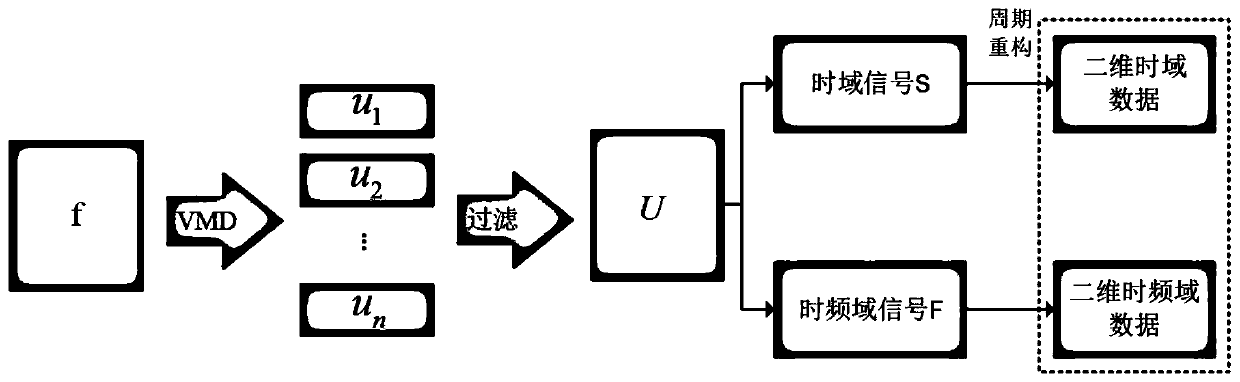

CNN-Bagging-based fault diagnosis method for UAV bearing

Active | CN110307983A | Reduce computational complexity | Conducive to real-time | Machine part testing | Character and pattern recognition | Diagnosis methods | Time frequency domain

The invention discloses a CNN-Bagging-based fault diagnosis method for a UAV bearing. A bearing signal is collected and preprocessed, and a time-domain signal and a time-frequency-domain signal are extracted; a time-domain weak classification module and a time-frequency-domain weak classification module are constructed with an ensemble learning algorithm from the time-domain signal and the time-frequency-domain signal; the membership probability of an unmanned aerial vehicle bearing signal under test is then predicted from the two weak classification modules. The fault diagnosis of the UAV bearing is thereby achieved.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

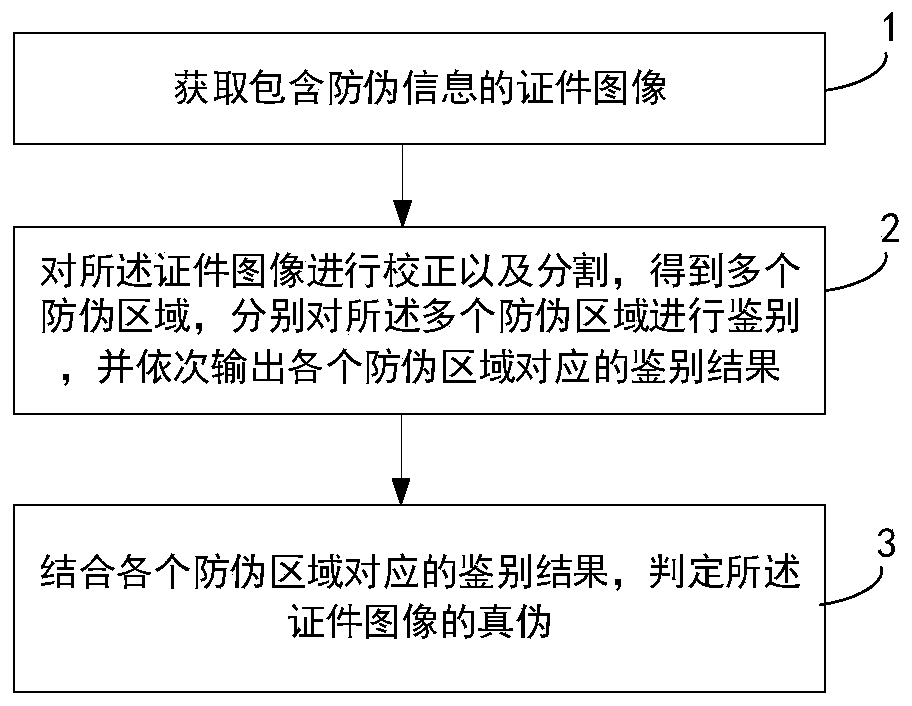

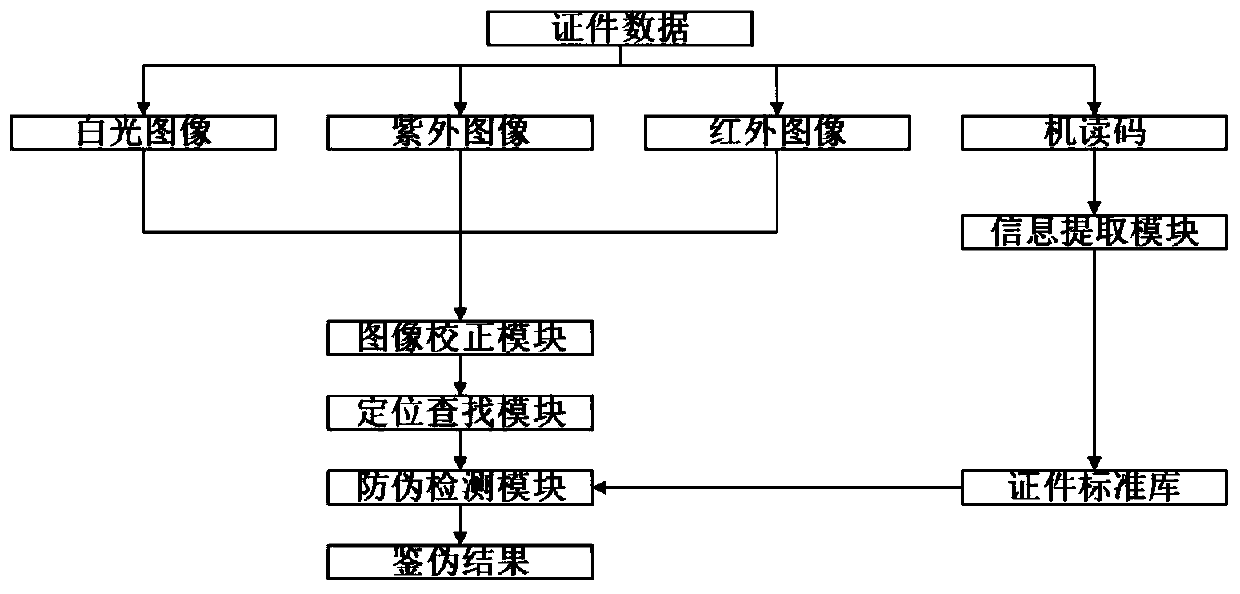

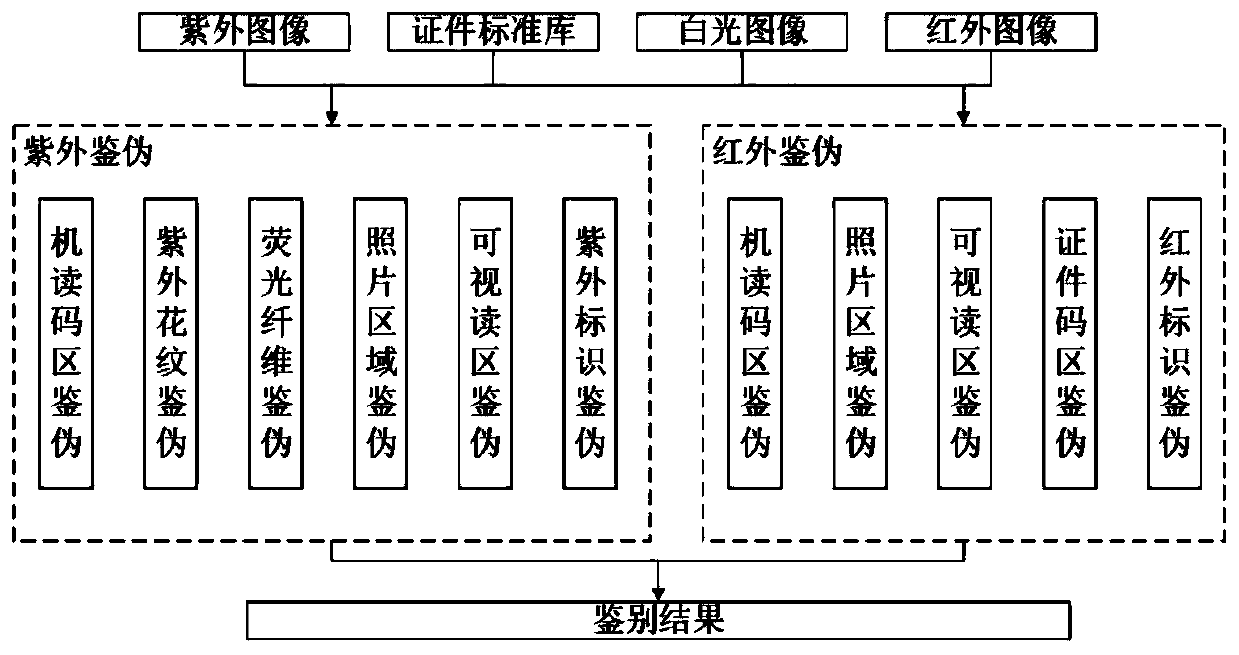

Identification method and identification system for anti-counterfeiting information of certificate

Active | CN110895693A | Comprehensive detection | Improve accuracy | Character and pattern recognition | Algorithm | Engineering

The invention relates to the field of information security authentication, in particular to an identification method and identification system for the anti-counterfeiting information of a certificate. The identification method comprises the steps of: obtaining a certificate image containing the anti-counterfeiting information; correcting and segmenting the certificate image to obtain a plurality of anti-counterfeiting areas, identifying each area separately, and outputting the identification result for each area in sequence; and judging the authenticity of the certificate image by combining the identification results of all the anti-counterfeiting areas. The method detects anti-counterfeiting information comprehensively, covers the anti-counterfeiting checks of various certificates, is more efficient and more accurate than the traditional approach, and improves security overall.

Owner:HUAZHONG UNIV OF SCI & TECH

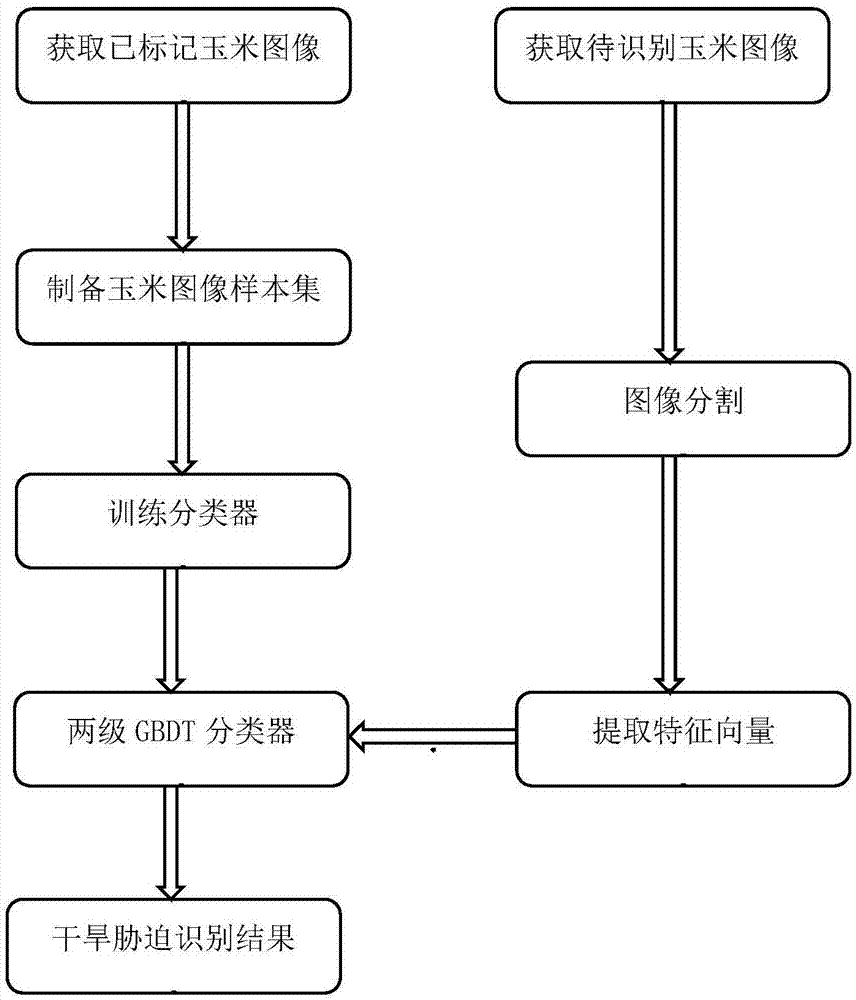

Image-based automatic identification method for drought stress on corn at earlier growth stage

Active | CN107392892A | Scale invariant | Rotation invariant | Image enhancement | Image analysis | Feature vector | Feature extraction

The invention discloses an image-based automatic identification method for drought stress on corn at an earlier growth stage. The method comprises: step one, preparing earlier-growth-stage corn plant image sample sets on different drought stress conditions; to be specific, acquiring a plurality of earlier-growth-stage corn plant image samples on different drought stress conditions by using imaging equipment, carrying out segmentation on the sample images, carrying out feature extraction on the segmented target images to obtain feature vectors of the image samples, and recording drought types to which the image samples belong; step two, training a two-stage drought stress automatic identification model by using the obtained earlier-growth-stage corn plant image sample sets; and step three, carrying out automatic identification on the drought stress on the earlier-growth-stage corn image samples. With the method disclosed by the invention, automatic identification of the drought stress state of the earlier-growth-stage corn plant is realized; the agricultural drought disasters can be warned early and timely; and thus the corn plant and economic losses are reduced. And the effectiveness of the method is verified by the experiment.

Owner:TIANJIN UNIV

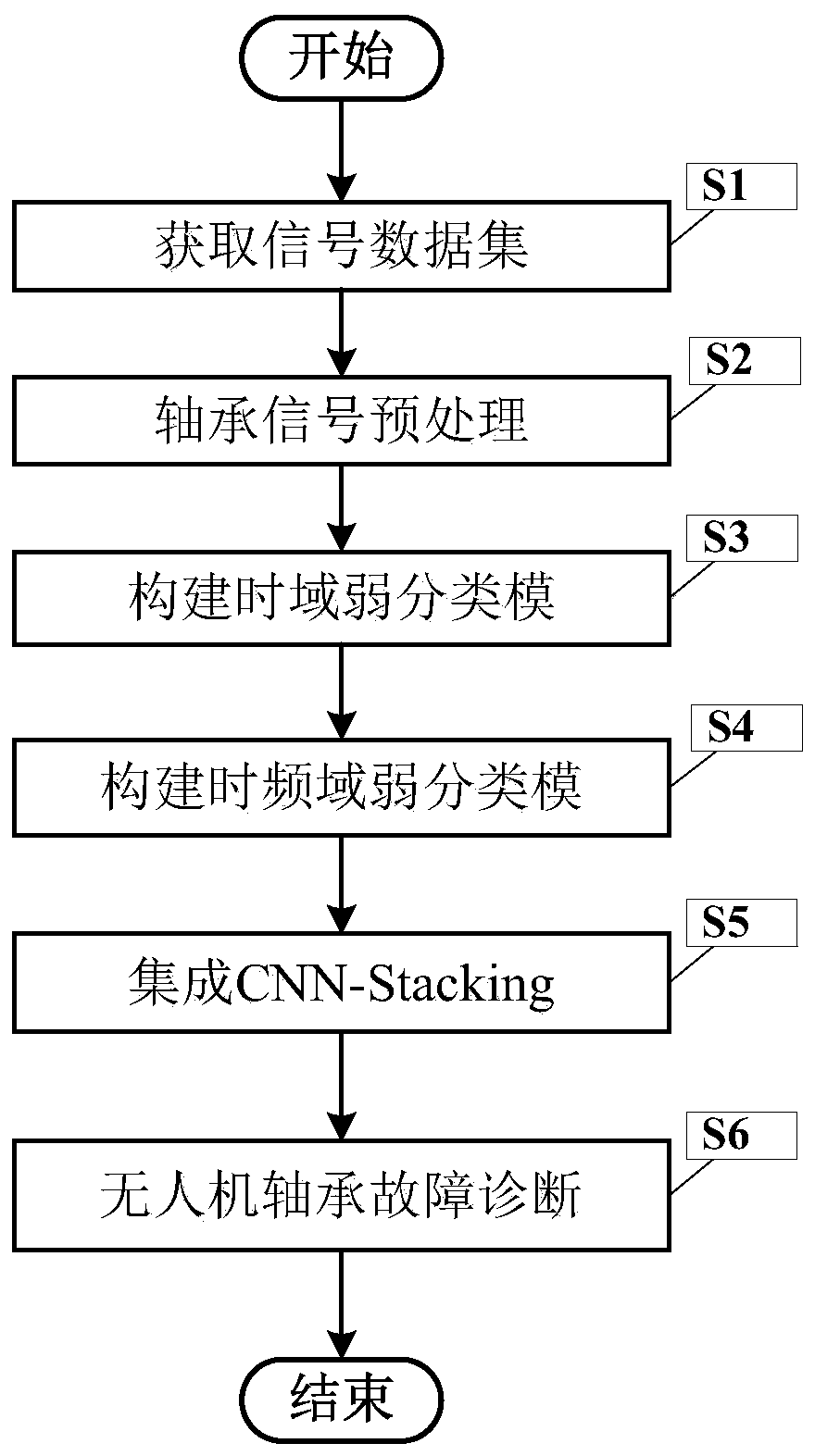

Bearing fault diagnosis method based on CNN-Stacking

Active | CN110333076A | Improve real-time performance | Reduce computational complexity | Machine part testing | Character and pattern recognition | Time domain | Uncrewed vehicle

The invention discloses an unmanned aerial vehicle bearing fault diagnosis method based on CNN-Stacking. First, bearing signals are collected and preprocessed, and a time-domain signal and a time-frequency-domain signal are extracted; second, a time-domain weak classification model and a time-frequency-domain weak classification model are constructed with an ensemble learning algorithm from the time-domain signal and the time-frequency-domain signal respectively; last, after cascade fusion of the two weak classification models, the membership probability of an unmanned aerial vehicle bearing signal under test is predicted, and unmanned aerial vehicle bearing fault diagnosis is thereby achieved.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA

Sow lactation behavior recognition method based on computer vision

Active | CN109492535A | Realize automatic monitoring | Scale invariant | Character and pattern recognition | Pig farms | Match algorithms

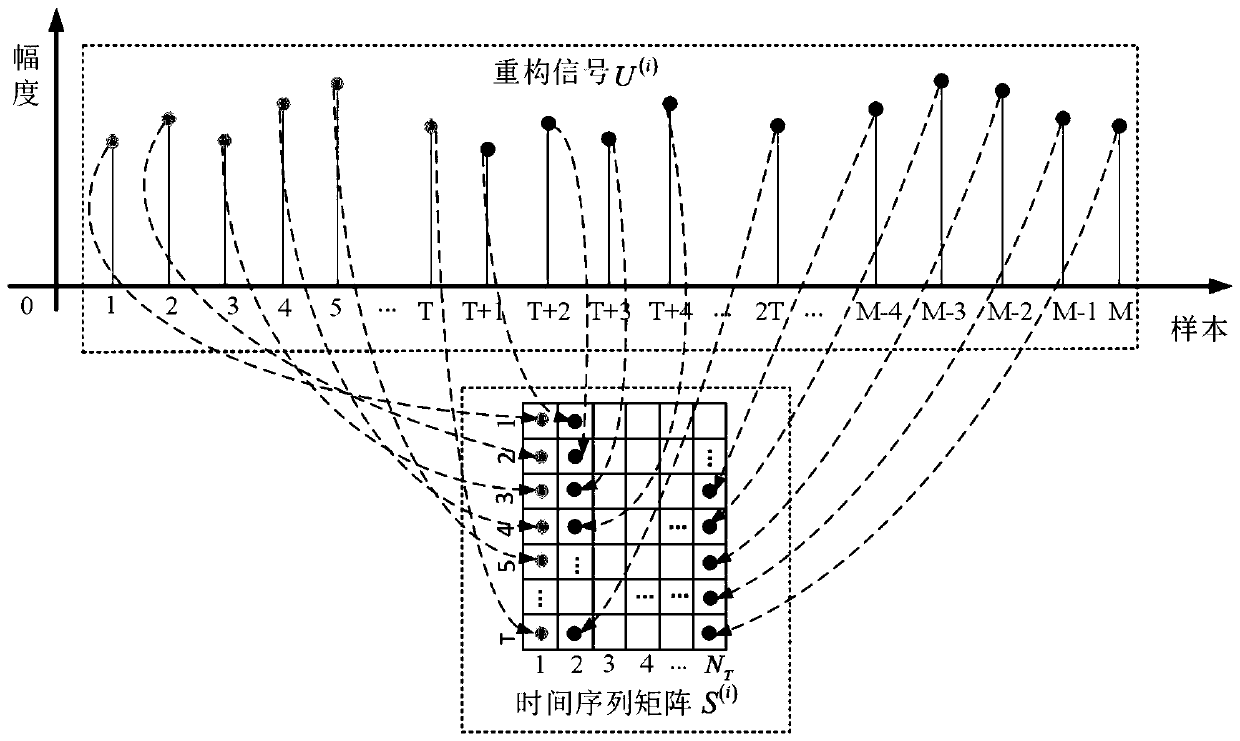

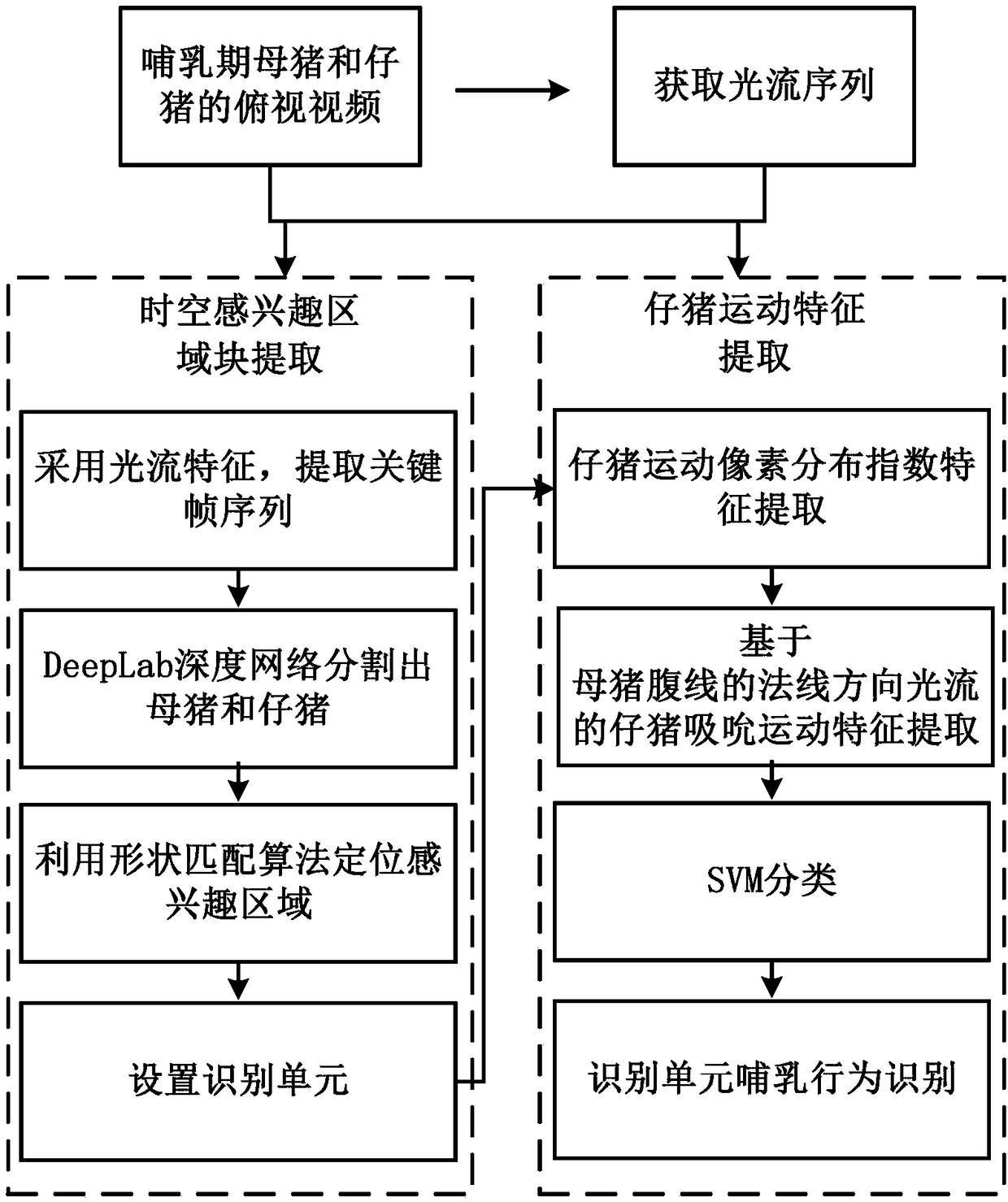

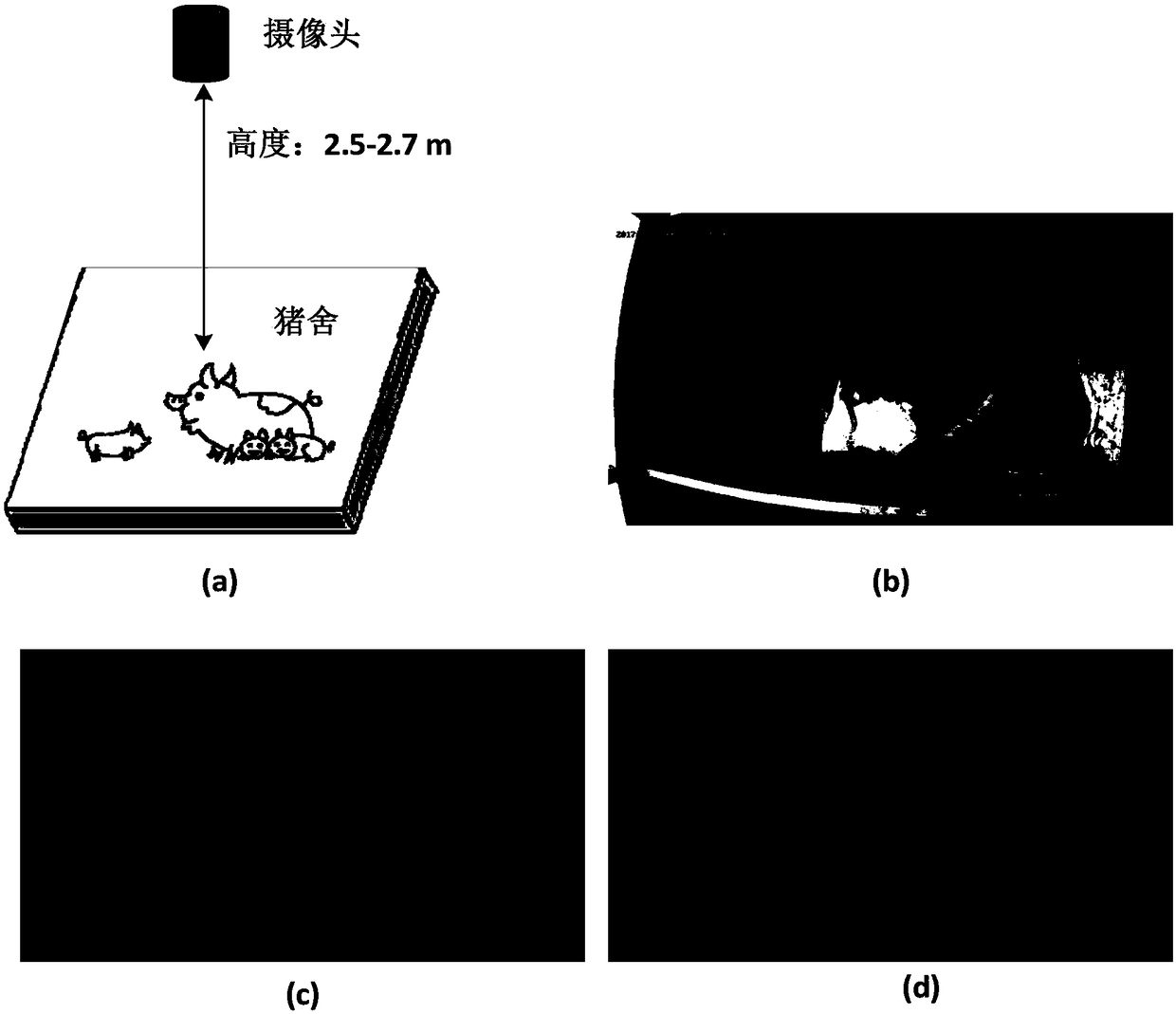

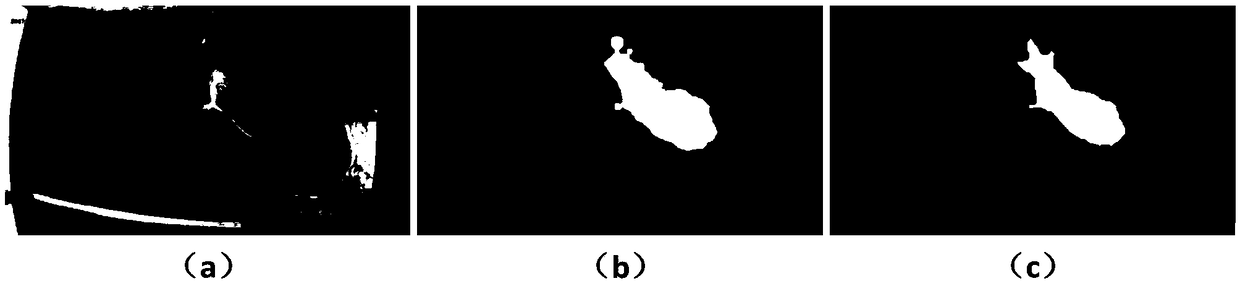

The invention discloses a sow lactation behavior recognition method based on computer vision, comprising the following steps: 1) collecting overhead video segments of sows and piglets during the lactation period; 2) calculating spatio-temporal characteristics such as moving-pixel intensity, duty cycle and aggregation degree from optical flow features, and extracting key frames of the time series; 3) using the DeepLab convolutional network to segment the sow and the piglets in the key frames, automatically locating the suckling region of interest with a shape matching algorithm, and obtaining the spatio-temporal region of interest; 4) placing a recognition unit in the spatio-temporal region of interest to extract the motion features of the piglets, including their motion distribution index features and their sucking motion features based on the optical flow along the normal direction of the sow's ventral line; 5) feeding the piglet motion features into an SVM classification model to recognize sow lactation behavior automatically. The invention uses the spatio-temporal motion information of the sow and piglets during lactation to recognize sow lactation behavior in a pig farm environment, thereby addressing the difficulty of manual monitoring in pig farms.

Owner:SOUTH CHINA AGRI UNIV

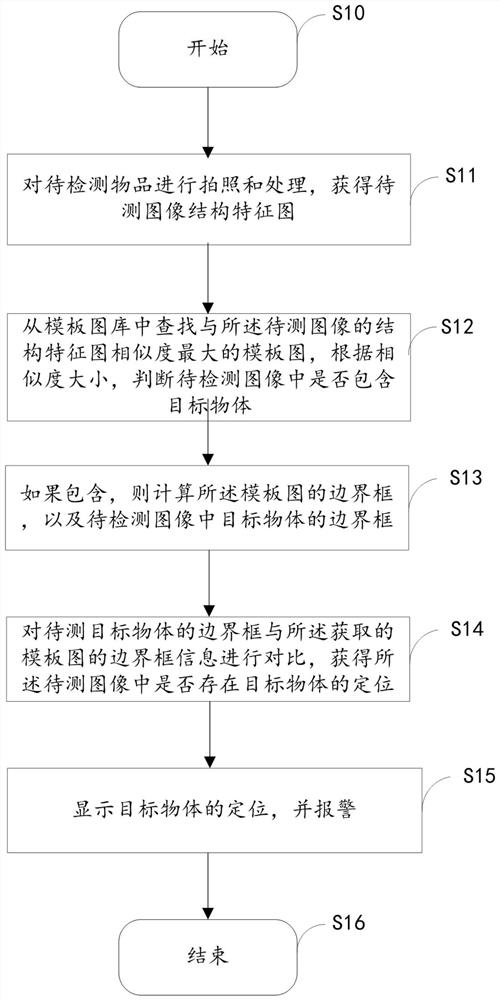

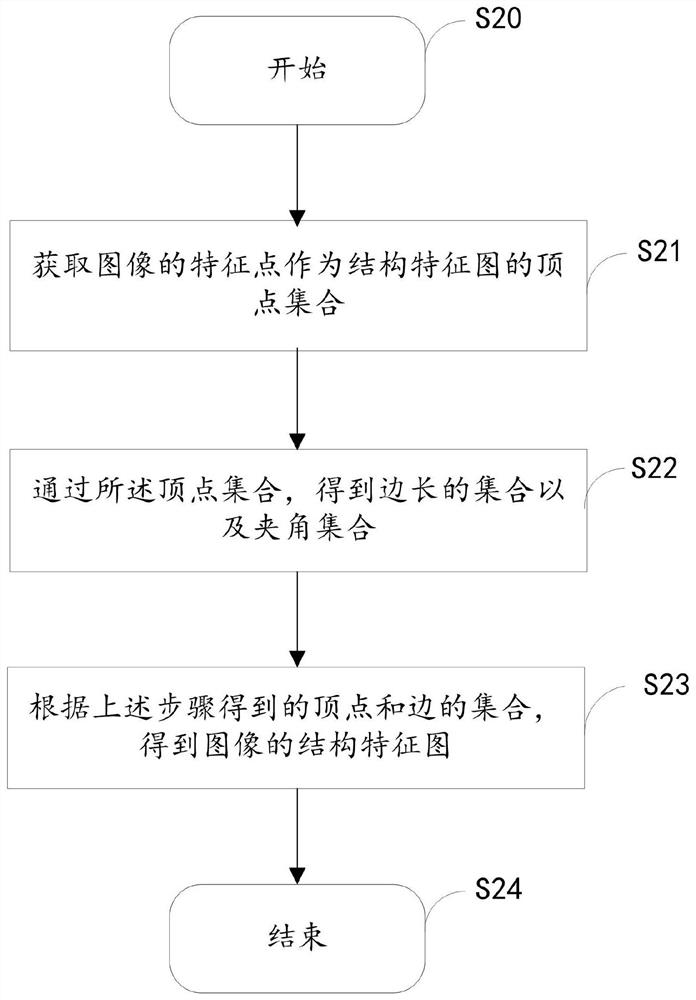

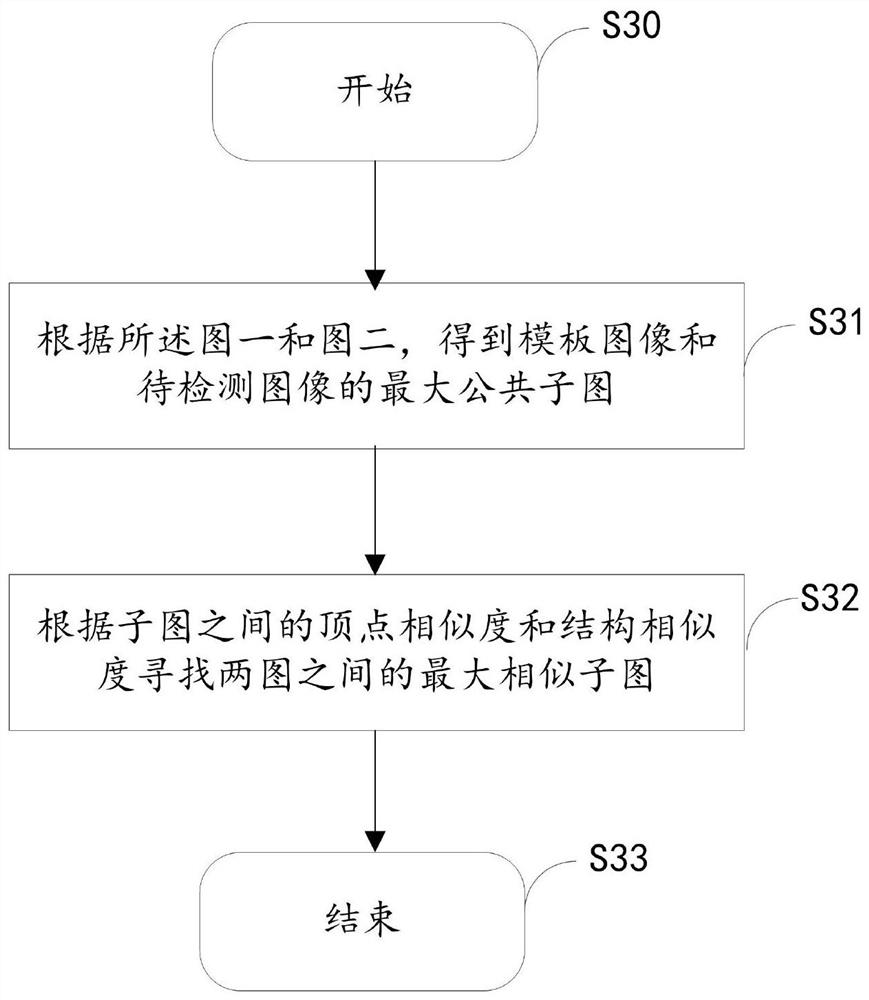

Target detection method and system

Inactive | CN111914874A | Scale invariant | Rotation invariant | Character and pattern recognition | Nuclear medicine | Image structure

The invention discloses a target detection method and system. The method comprises the following steps: photographing and processing an object to be inspected to obtain a structural feature map of the image under test; searching a template library for the template map most similar to the structural feature map of the image under test, and judging from the similarity whether the image contains a target object; if so, calculating the bounding box of the template map and the bounding box of the target object in the image under test; comparing the bounding box of the target object with the bounding box information of the template map to locate the target object in the image under test; and displaying the location of the target object and raising an alarm. The method and system adapt to scale changes and rotation changes of the target object in an X-ray image and can also be applied when the object is partially occluded. In addition, they can complete a target detection task with only a small number of template images containing the target object, and are therefore highly practical.

Owner:SHANGHAI XINBA AUTOMATION TECH CO LTD

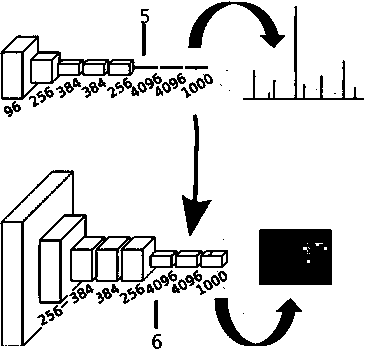

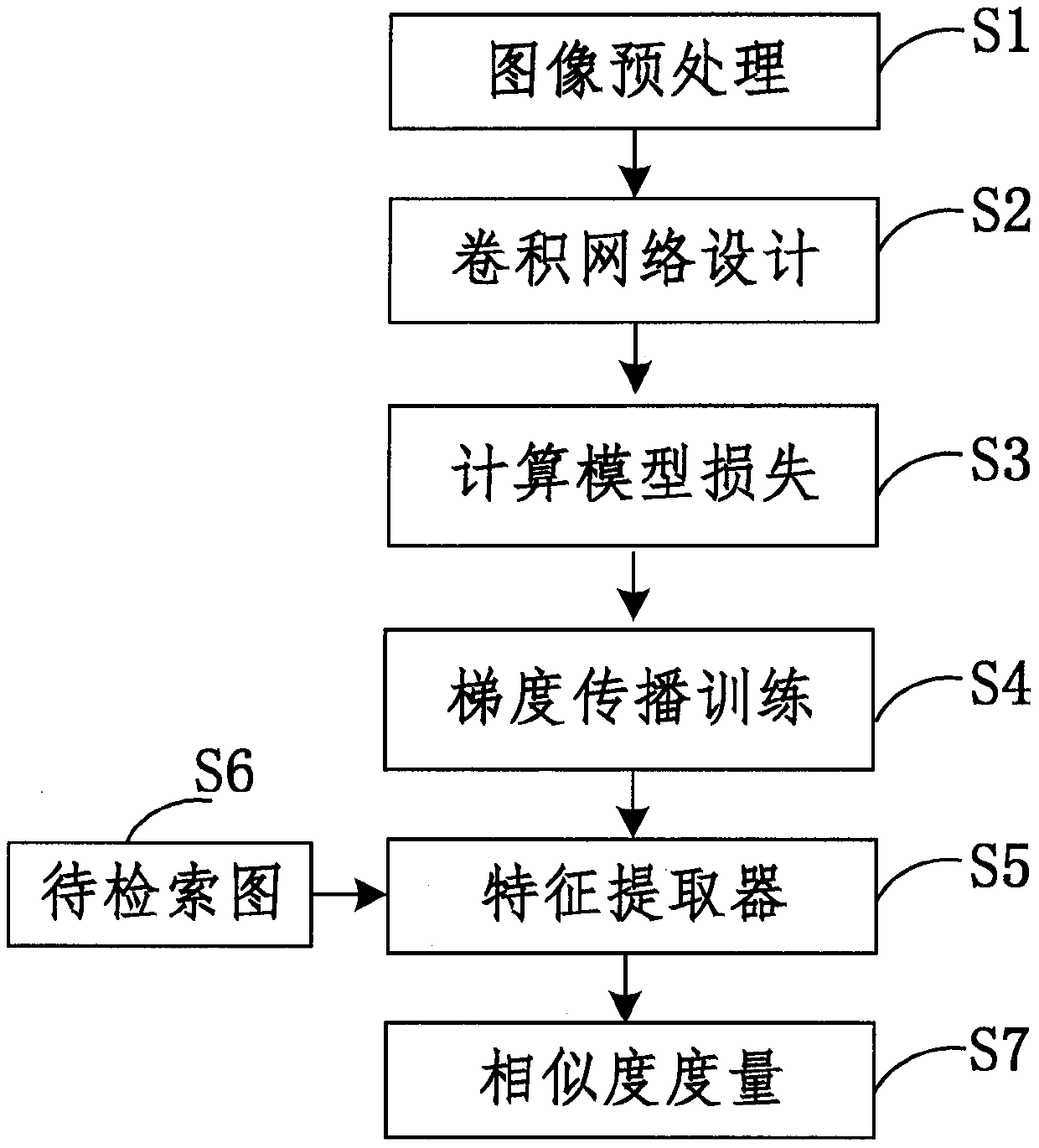

Cloth image retrieval method based on convolutional neural network

Pending | CN111125397A | Improve accuracy and robustness | Reduce computational complexity | Digital data information retrieval | Geometric image transformation | Scale invariance | Translation invariance

The invention discloses a cloth image retrieval method based on a convolutional neural network. The method comprises the steps of: preprocessing a textile fabric image, including resizing the image by bilinear interpolation, normalization and other preprocessing operations; designing a convolutional neural network as a classifier; training the network iteratively with a classification loss function and gradient back-propagation to obtain a feature extractor; extracting features from the query image and the fabric library to obtain 1024-dimensional feature vectors; and calculating the similarity of two feature vectors with the L2 metric and sorting the results to retrieve textile fabric images. The invention can extract contour spatial position features from a target shape and recognize occluded targets. The method is scale invariant, rotation invariant and translation invariant, so the problem of recognizing incomplete contours is handled effectively, and the accuracy and robustness of target recognition and shape retrieval are improved.
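The last retrieval step, comparing feature vectors with the L2 metric and sorting, can be sketched as follows. The sketch assumes the feature vectors have already been produced by the trained network; the gallery layout, function names and `top_k` parameter are hypothetical, and short 3-dimensional vectors stand in for the 1024-dimensional ones in the usage note.

```python
import math


def l2_distance(u, v):
    """Euclidean (L2) distance between two equal-length feature vectors."""
    if len(u) != len(v):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def rank_by_l2(query, gallery, top_k=5):
    """Return the top_k gallery entries closest to the query.

    gallery: dict mapping an image name to its feature vector.
    Returns a list of (name, distance) pairs, nearest first.
    """
    ranked = sorted(gallery.items(), key=lambda item: l2_distance(query, item[1]))
    return [(name, l2_distance(query, vec)) for name, vec in ranked[:top_k]]
```

With a gallery of `{"denim": [1.0, 0.0, 0.0], "silk": [0.0, 1.0, 0.0], "linen": [0.9, 0.1, 0.0]}` and the query `[1.0, 0.0, 0.0]`, the ranking is denim, linen, silk, since a smaller distance means a more similar fabric.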

Owner:苏州正雄企业发展有限公司

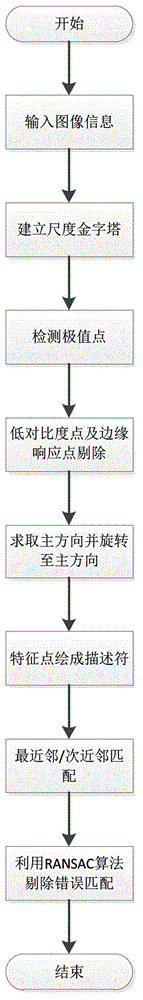

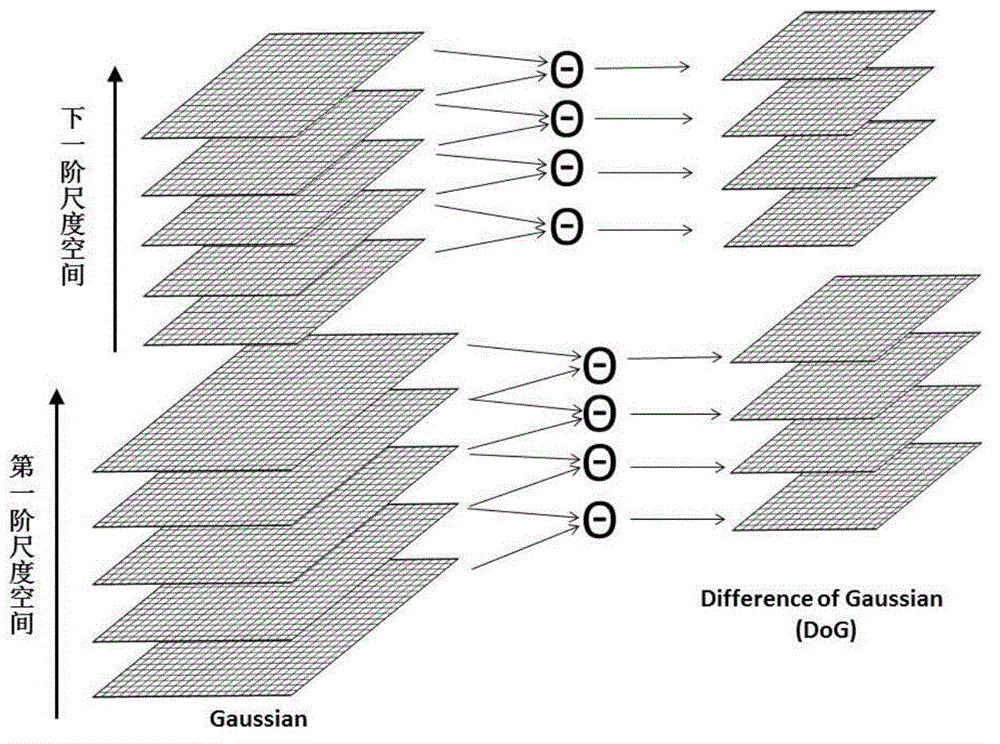

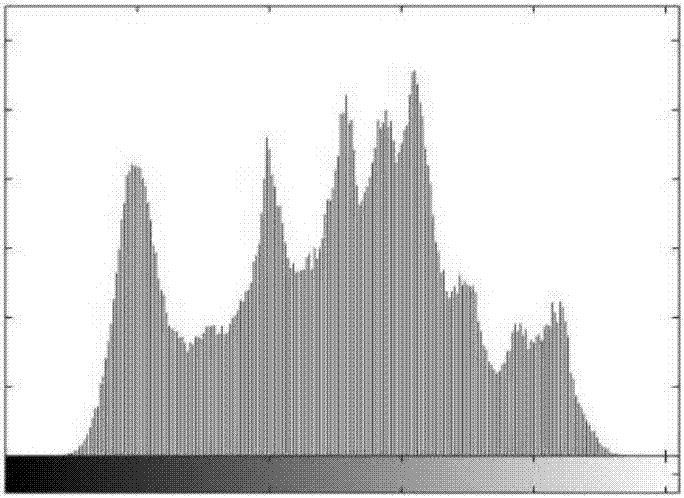

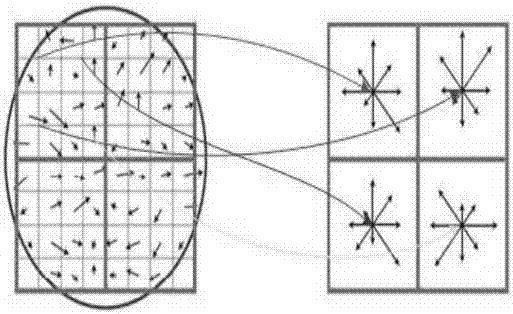

SIFT image matching method based on module value difference mirror image invariant property

Active | CN103336964A | Improve real-time performance | Mirror invariant | Image analysis | Character and pattern recognition | Principal direction | Histogram

The invention discloses a SIFT (Scale Invariant Feature Transform) image matching method based on the mirror-invariant property of modulus differences. It mainly addresses two problems in existing tracking and recognition technology: image matching has demanding real-time requirements, and matching errors occur when a target is mirror-flipped during movement. Because existing methods handle mirrored matches poorly and run slowly, the method provides an efficient way of handling mirror transformation that both overcomes the transformation and reduces dimensionality. The method comprises the following steps: image information is input; feature points are extracted; the gradient magnitude and direction of each feature point are computed; a principal direction is determined; the coordinates of the feature point are rotated to the principal direction; the 16*16 pixel neighborhood is divided into 16 seed points; every two axisymmetric seed points are subtracted and the modulus of the difference is taken, giving eight seed points; a four-direction histogram is built for each seed point; and an 8*4 = 32-dimensional descriptor is finally formed. The method solves the mirror transformation problem in matching and reduces the original 128-dimensional descriptor to 32 dimensions, which greatly improves its real-time performance.

Owner:BEIJING UNIV OF POSTS & TELECOMM

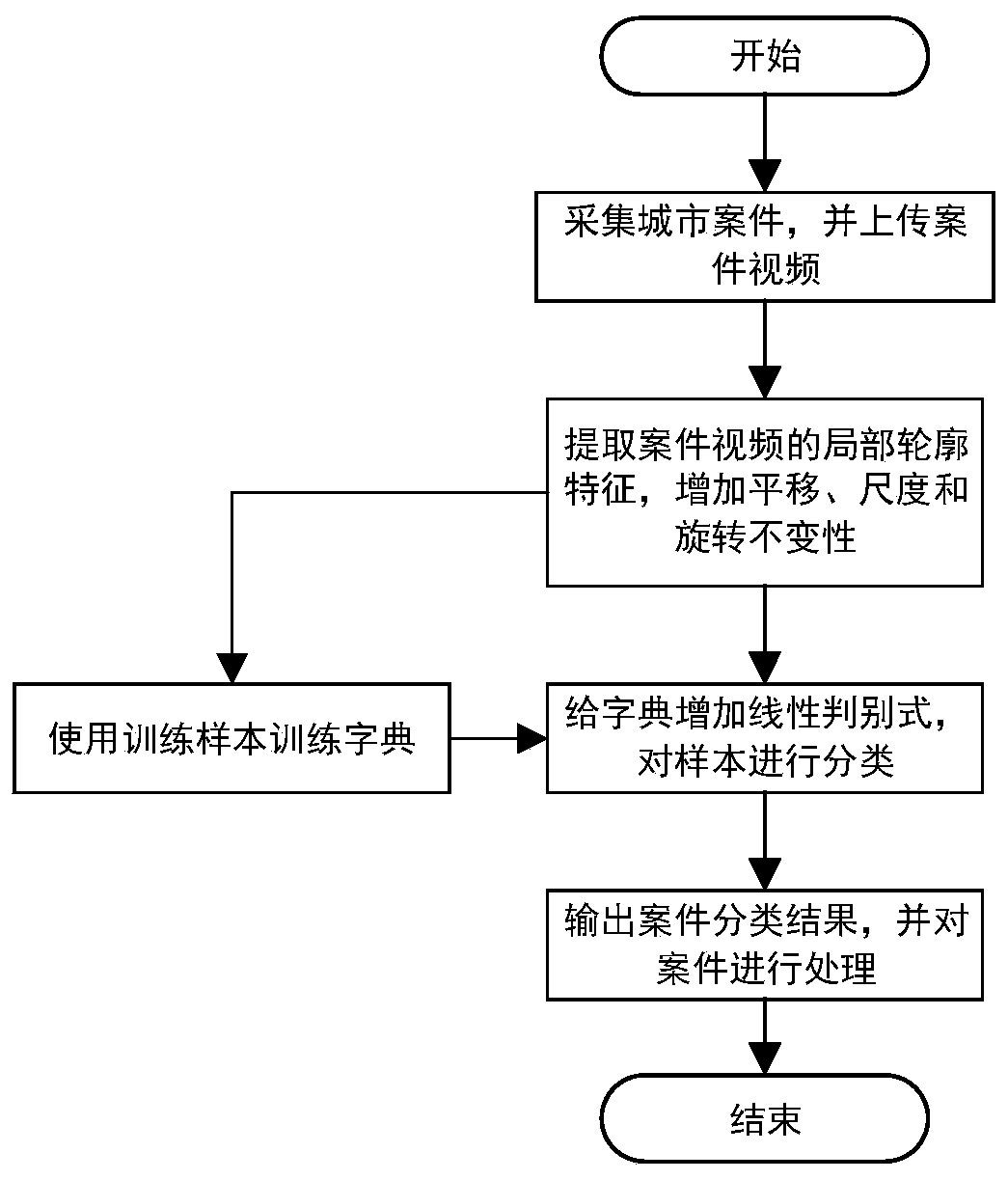

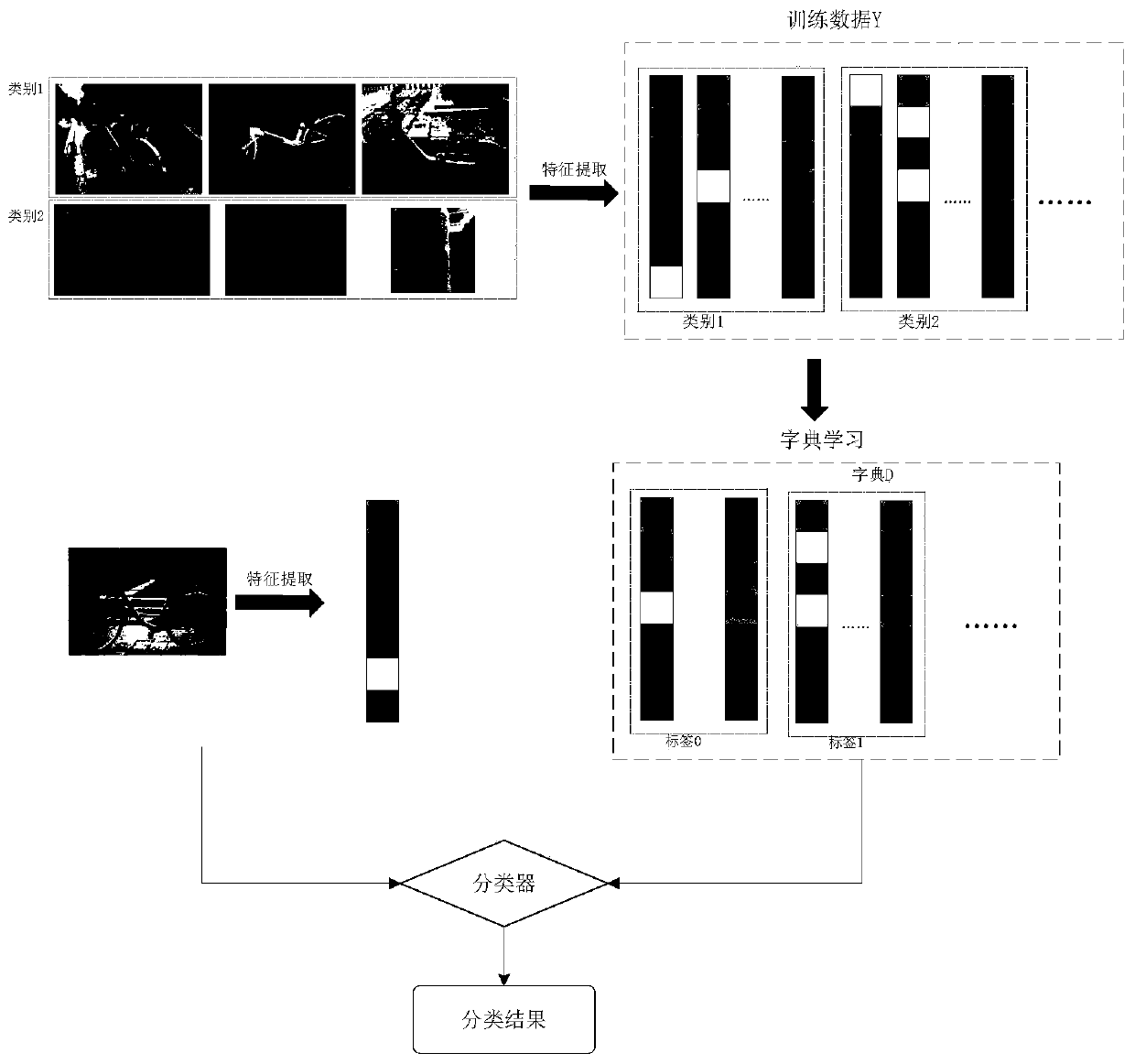

Urban management case image recognition method based on dictionary learning

Active | CN111507413A | Effectively distinguish between different case categories | Translation invariant | Data processing applications | Climate change adaptation | Dictionary learning | Engineering

The invention relates to an urban management case image recognition method based on dictionary learning. The method comprises the following steps: first, urban management case images and screenshots of surveillance video for various types of cases are uploaded to a cloud library, and the collected cases are compressed to reduce redundant information before being transmitted and stored; contour features are extracted from the case sample images, and the training sample features are used to construct a dictionary model; sample labels are added to the dictionary, and the dictionary classifies the urban management cases by means of an added linear discriminant; finally, once the cases are classified, the case types are reported and reviewed and then sent promptly to the workers in the relevant area. Working efficiency is improved, and intelligent urban case management is achieved.

Owner:JIYUAN VOCATIONAL & TECHN COLLEGE

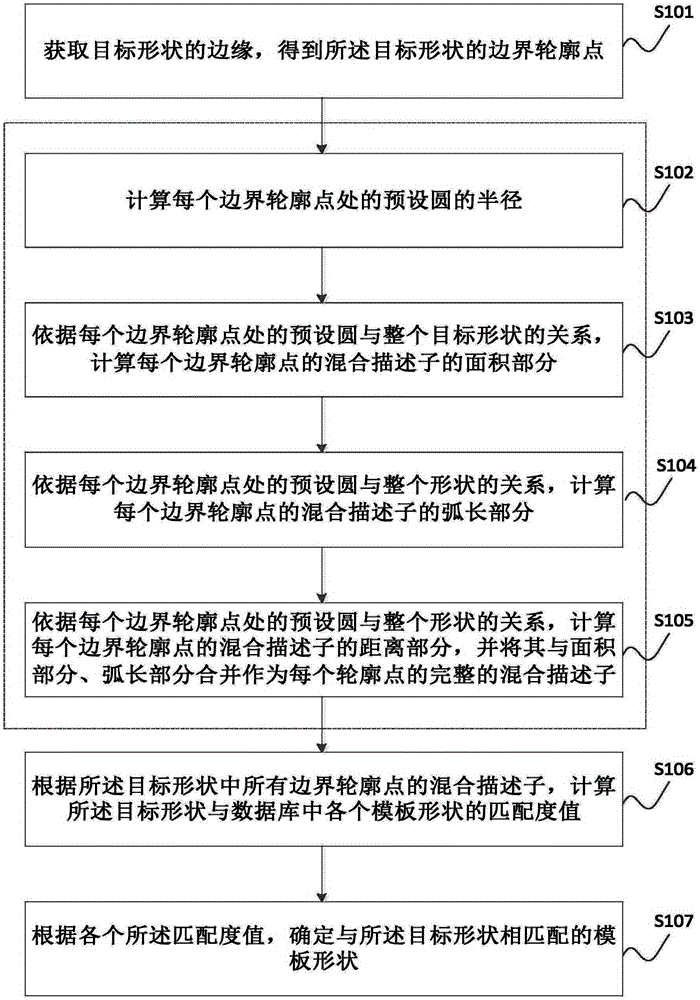

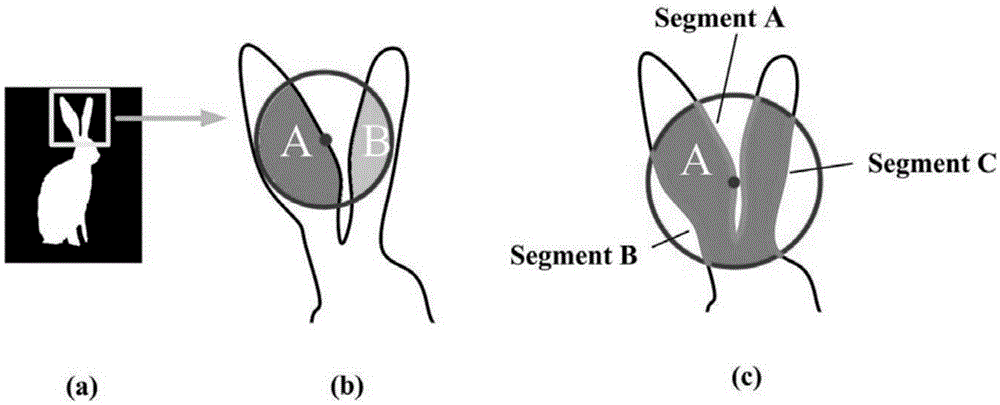

Shape matching method and system based on mixing descriptor

Active | CN105303192A | Improve accuracy | Improve efficiency | Character and pattern recognition | Boundary contour | Feature extraction

The invention provides a shape matching method based on a mixing descriptor, and the method comprises the steps: obtaining the edge of a target shape, and obtaining boundary contour points of the target shape; calculating the mixing descriptor of each boundary contour point; calculating the matching degree of the target shape with each template shape in a database according to the mixing descriptors of all boundary contour points in the target shape; and determining a template shape matched with the target shape according to all matching degrees. The method calculates the mixing descriptor of each boundary contour point, calculates the matching degree of the target shape with each template shape in the database according to the mixing descriptors of all boundary contour points in the target shape, can achieve the feature extraction and effective expression of an image shape, has scale invariance, rotation invariance and translation invariance, effectively inhibits the interference from noises, and improves the accuracy and efficiency of shape matching.

Owner:ZHANGJIAGANG INST OF IND TECH SOOCHOW UNIV +1

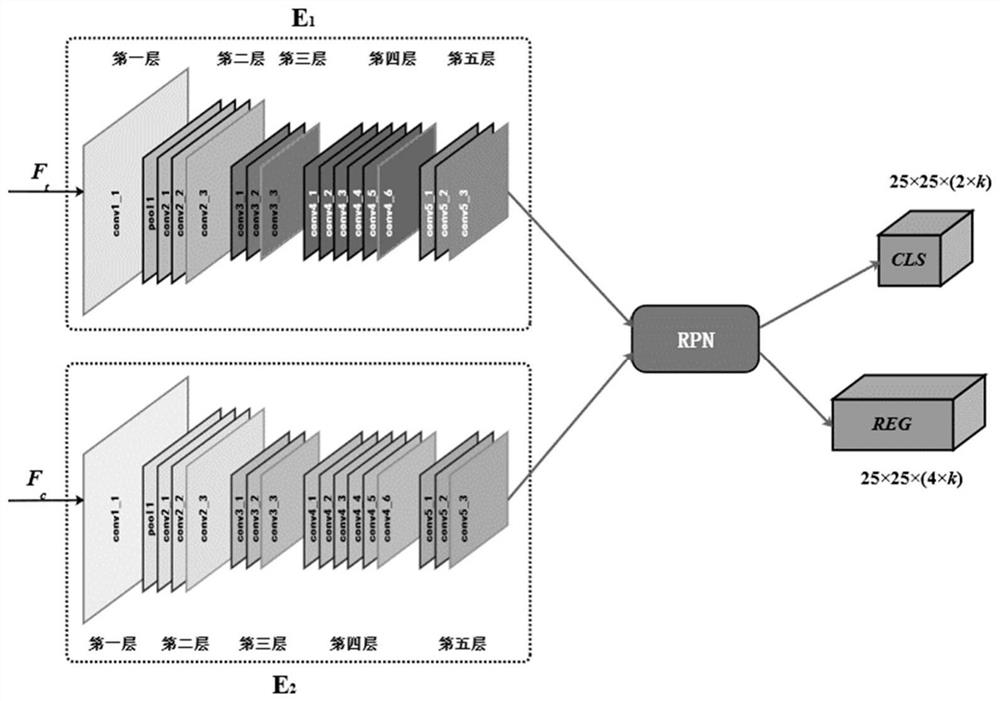

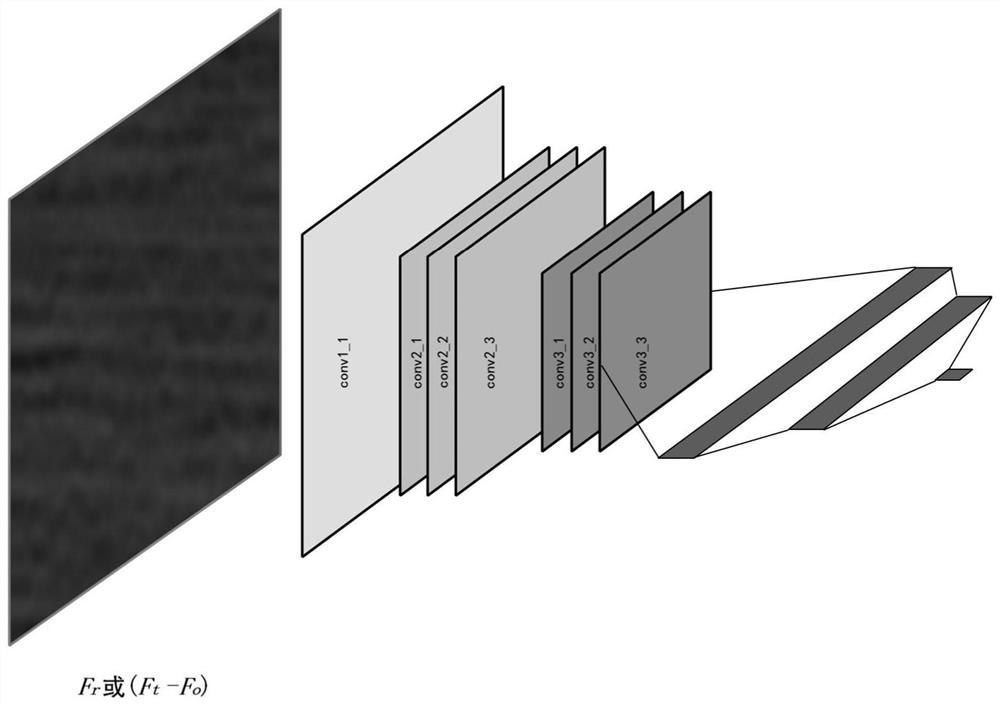

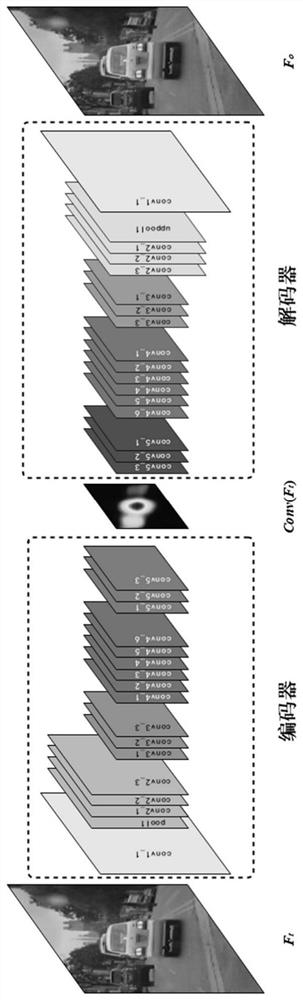

Target tracking method based on coding and decoding structure

Active | CN111696136A | Robust | Reduce losses | Image enhancement | Image analysis | Encoder decoder | Network structure

The invention discloses a target tracking method based on an encoding and decoding structure. An encoder-decoder is combined with a discriminator to build a structure similar to a generative adversarial network, so that the features extracted by the encoder generalize better and capture the essential features of the tracked object. As a result, partially occluded objects and objects affected by illumination changes or motion blur in the object frames have less influence on the network, and robustness is higher. The method uses Focal Loss in place of the traditional cross-entropy loss function, which reduces the loss contributed by easy-to-classify samples, makes the model pay more attention to difficult and misclassified samples, and at the same time balances the numbers of positive and negative samples. Distance-U loss is used as the regression loss; it takes into account both the overlapping region and the non-overlapping regions, is scale invariant, can provide a moving direction for the bounding box, and converges quickly.
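To make the effect of replacing cross entropy with Focal Loss concrete, the sketch below implements the standard binary focal loss, FL(p_t) = -alpha_t * (1 - p_t)^gamma * log(p_t). The values gamma = 2 and alpha = 0.25 are the commonly used defaults for this loss, not values stated in the patent, and the function names are illustrative.

```python
import math


def cross_entropy(p, y, eps=1e-12):
    """Binary cross entropy for predicted probability p and label y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p
    p_t = min(max(p_t, eps), 1.0)  # clamp to avoid log(0)
    return -math.log(p_t)


def focal_loss(p, y, gamma=2.0, alpha=0.25, eps=1e-12):
    """Binary focal loss: cross entropy scaled by alpha_t * (1 - p_t) ** gamma."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    p_t = min(max(p_t, eps), 1.0)  # clamp to avoid log(0)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
```

The factor `(1 - p_t) ** gamma` shrinks the loss of well-classified samples (p_t near 1) far more than that of hard ones, which is the down-weighting of easy samples the abstract describes; `alpha_t` weights the positive and negative classes differently to offset their imbalance.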

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

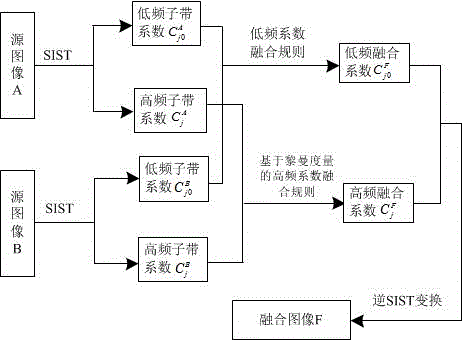

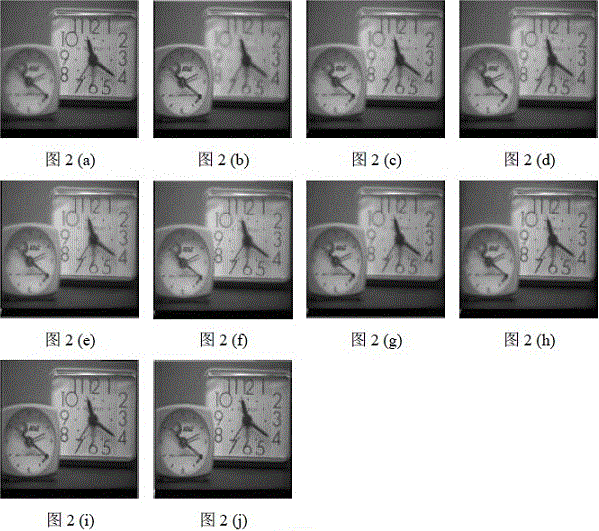

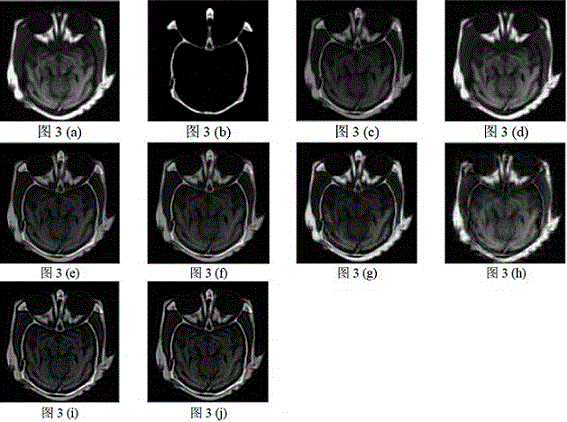

Self-adaptive multi-strategy image fusion method based on riemannian metric

Inactive | CN104899848A | Suppression of distortion | Correspondence is clear | Image enhancement | Geometric image transformation | Decomposition | Image fusion

The invention discloses a self-adaptive multi-strategy image fusion method based on Riemannian metric. The method comprises the following steps: (1) carrying out shift-invariant shearlet transform (SIST) decomposition of a to-be-fused image to obtain a low-frequency sub-band coefficient and a series of high-frequency sub-band coefficients; (2) applying different fusion rules to the low-frequency and high-frequency sub-band coefficients: a weighted average fusion strategy for the low-frequency sub-band coefficient, and a self-adaptive multi-strategy fusion based on the Riemannian metric for the high-frequency sub-band coefficients, in which a Riemannian space dissimilarity is defined, the geodesic distance of the Riemannian space formed by the high-frequency sub-band coefficients is calculated using the affine-invariant metric and the Log-Euclidean metric, and the complementary and redundant attributes of the image are measured to obtain the fused SIST coefficients; and (3) applying the inverse SIST to the fused coefficients to obtain the fused image. The invention belongs to the technical field of image fusion and enables efficient fusion of multi-source images.
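The two metrics named in step (2) have standard closed forms on symmetric positive definite (SPD) matrices. The sketch below computes both geodesic distances with NumPy; it assumes the inputs are already SPD matrices (for example, covariance descriptors built from sub-band coefficients), which is an assumption about how the Riemannian space is formed and is not stated in the abstract. All function names are illustrative.

```python
import numpy as np

def _spd_log(m):
    """Matrix logarithm of an SPD matrix via eigendecomposition."""
    w, v = np.linalg.eigh((m + m.T) / 2.0)
    return (v * np.log(w)) @ v.T

def _spd_inv_sqrt(m):
    """Inverse square root of an SPD matrix via eigendecomposition."""
    w, v = np.linalg.eigh((m + m.T) / 2.0)
    return (v / np.sqrt(w)) @ v.T

def log_euclidean_distance(a, b):
    """Log-Euclidean distance: ||log(a) - log(b)||_F."""
    return float(np.linalg.norm(_spd_log(a) - _spd_log(b), ord="fro"))

def affine_invariant_distance(a, b):
    """Affine-invariant distance: ||log(a^(-1/2) b a^(-1/2))||_F."""
    s = _spd_inv_sqrt(a)
    return float(np.linalg.norm(_spd_log(s @ b @ s), ord="fro"))
```

The two distances coincide when the matrices commute, and only the affine-invariant one is unchanged when both matrices undergo the same congruence transform, which is one reason a method might use both.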

Owner:SUZHOU UNIV OF SCI & TECH

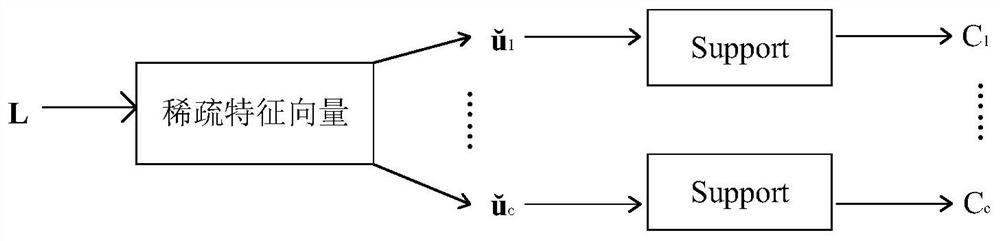

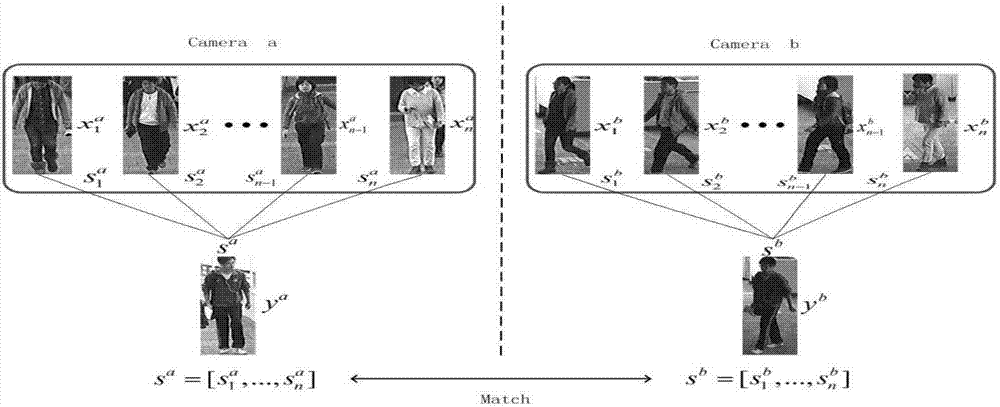

Support sample based indirect pedestrian re-identification method

Active | CN106980864A | Improve accuracy | Efficient use of | Character and pattern recognition | Recognition algorithm | Sample image

The invention discloses a support sample based indirect pedestrian re-identification method. The method comprises the following steps: videos collected by two cameras with no overlapping field of view are preprocessed to obtain the needed training sample images; a dense color histogram is combined with dense SIFT to extract features from the images; support samples of the two cameras are obtained by clustering; and when pedestrians from different cameras are matched, the support samples are used to determine the pedestrian type in each corresponding camera on the basis of distance measurement, and the types are compared to determine whether the pedestrians are the same person. According to the invention, pedestrian images from the different cameras are matched indirectly through their support-sample types rather than directly, the problems of viewing angle, illumination and scale caused by different cameras are handled effectively, the pedestrian re-identification accuracy is improved, and the robustness of the pedestrian re-identification algorithm is enhanced.

Owner:安徽科大擎天科技有限公司

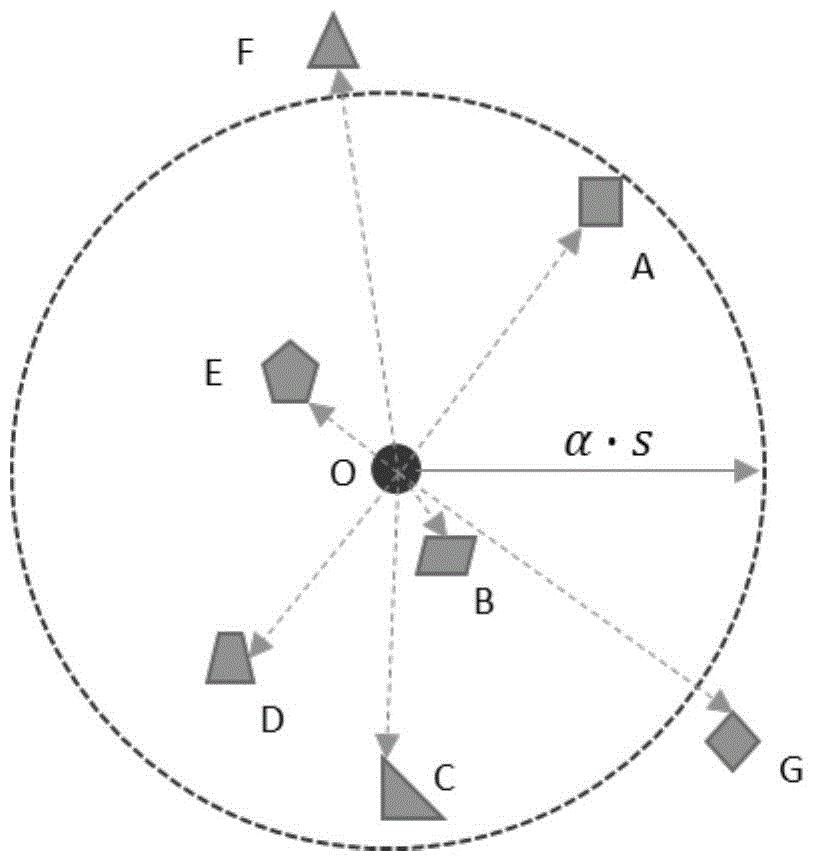

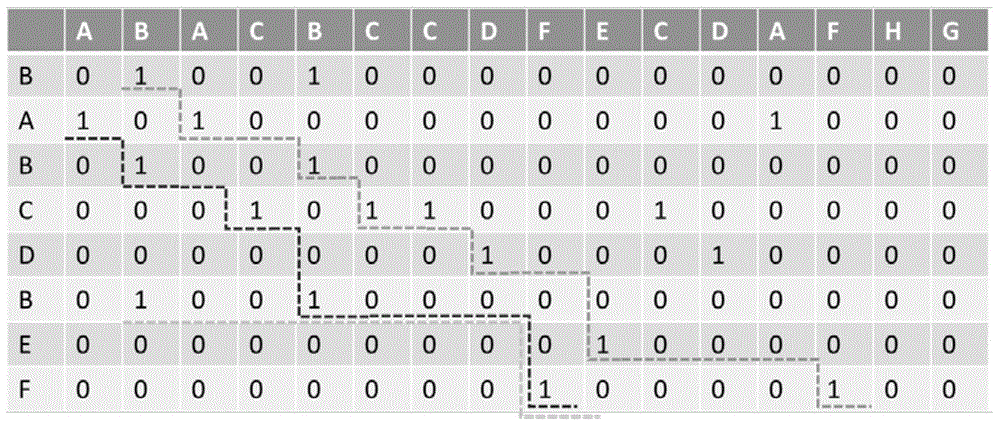

Spatial relationship matching method and system applicable to video/image local features

Active | CN105224619A | Robust | Rotation invariant | Special data processing applications | Pattern recognition | Keyword Code

The present invention discloses a spatial relationship matching method and system applicable to video/image local features. The method comprises: acquiring scale information of all video/image feature points, determining a local neighborhood space of each video/image feature point, acquiring visual keyword codes of all the video/image feature points in the local neighborhood spaces, quantizing the visual keyword codes to generate new visual keyword codes, and sorting the new visual keyword codes to generate spatial relationship codes of the video/image feature points; and comparing the spatial relationship codes of the video/image feature points to be matched with those of the video/image feature points, constructing a relationship matrix, calculating from the relationship matrix the similarity of the spatial relationship codes between the video/image feature points to be matched and the video/image feature points, and fusing the visual similarity and the spatial relationship codes between the video/image feature points to be matched and the video/image feature points.

Owner:中科星云(鹤壁)人工智能研究院有限公司

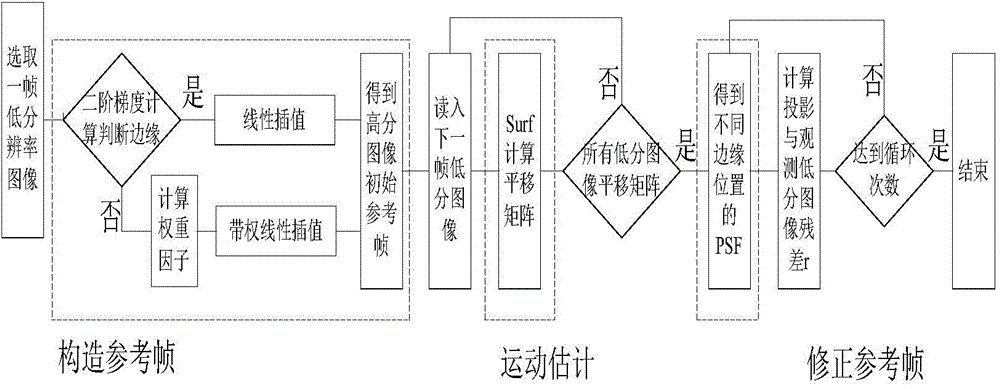

Projection-onto-convex-sets image reconstruction method based on SURF matching and edge detection

Inactive | CN104318518A | Reduce the effect of grayscale change rate | Preserve edge features | Image enhancement | Pattern recognition | Imaging quality

The invention discloses a projection-onto-convex-sets (POCS) image reconstruction method based on SURF matching and edge detection. To solve the problems of fuzzy edge and matching limitation in a traditional POCS super-resolution image reconstruction algorithm, 0-degree, 45-degree, 90-degree and 135-degree edges around a pixel are detected by a second-order gradient first. A gradient-based interpolation algorithm is adopted in reference frame construction, linear interpolation is carried out along the edge direction, and weighted interpolation based on first-order gradient is carried out along the non-edge direction. A SURF matching algorithm is adopted in motion estimation to improve the robustness and real-time performance of matching. In reference frame correction, the point spread functions (PSF) of the center in the four edge directions are defined. A simulated experiment and a real experiment are respectively assessed by using full-reference image quality assessment and no-reference image quality assessment. The method obviously improves the quality of reconstructed images and improves the robustness and real-time performance of matching.

Owner:BEIHANG UNIV

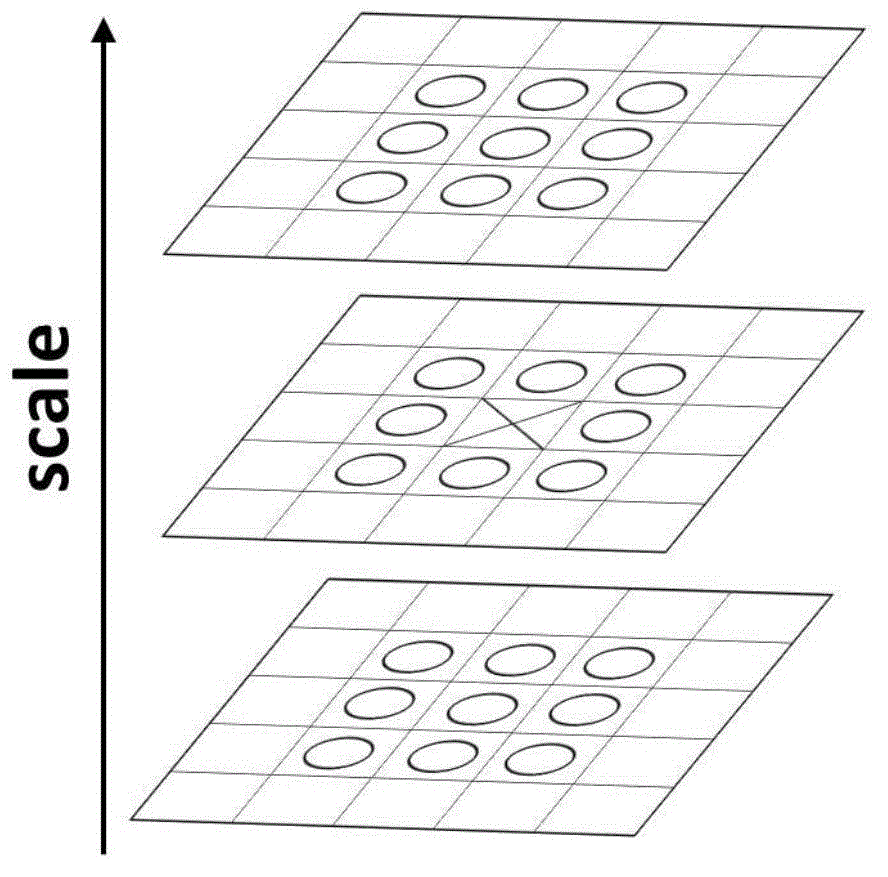

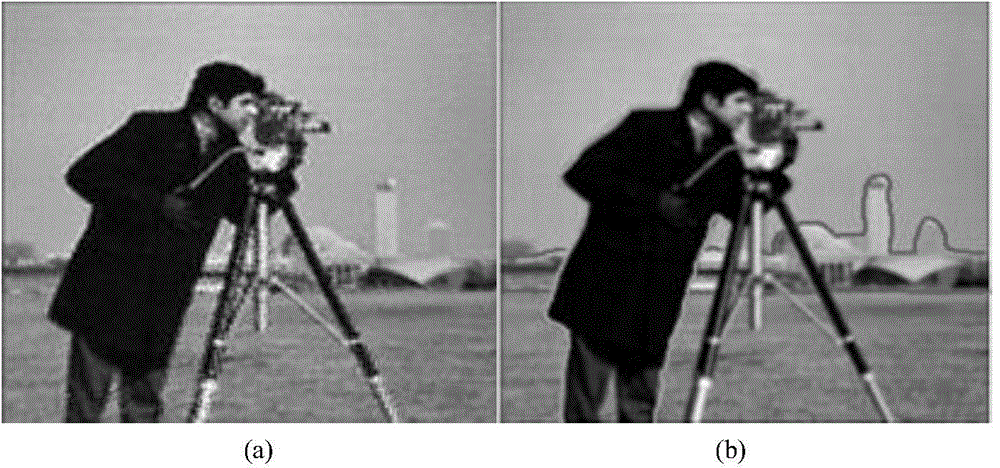

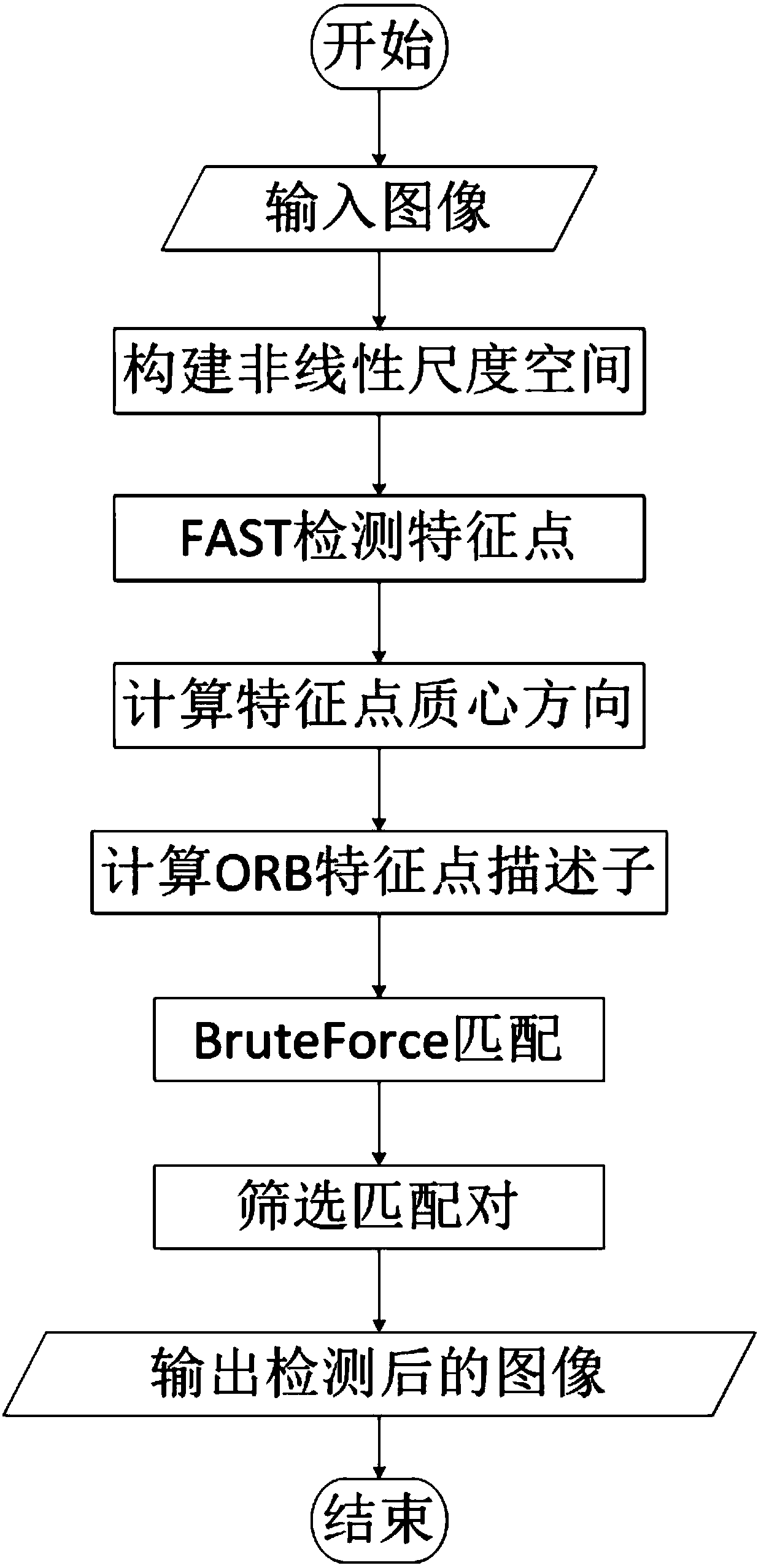

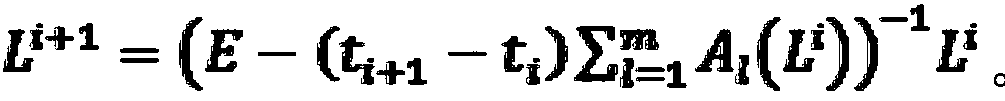

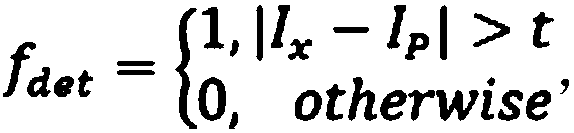

Nonlinear scale space-based ORB feature point matching method

Inactive | CN107944471A | Scale invariant | Fast operation | Character and pattern recognition | Scale invariance | Scale space

The invention discloses a nonlinear scale space-based ORB feature point matching method. The method comprises the following steps: 1, inputting an image and constructing a nonlinear scale space; 2, performing feature point detection by utilizing FAST; 3, calculating centroid directions of the remaining feature points; 4, calculating ORB feature point descriptors; 5, performing feature point matching by adopting a BruteForce algorithm; and 6, screening feature point matching pairs and outputting a detected image. The method has the advantages that ORB gains scale invariance while its high calculation speed is preserved, and that the boundary blurring and detail loss easily caused by constructing a scale space with a linear Gaussian pyramid in existing improved algorithms are avoided.
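Steps 5 and 6 can be sketched in plain NumPy for binary descriptors such as ORB's. The sketch assumes 256-bit descriptors packed into 32 `uint8` bytes, and it screens matches with a mutual nearest-neighbour check plus a distance threshold; the abstract does not say which screening rule the patent uses, so both the rule and the `max_distance` value are assumptions.

```python
import numpy as np

def hamming_distance_matrix(desc_a, desc_b):
    """Pairwise Hamming distances between two sets of packed binary descriptors."""
    # XOR every descriptor in A with every descriptor in B, then count set bits.
    xor = desc_a[:, None, :] ^ desc_b[None, :, :]
    return np.unpackbits(xor, axis=2).sum(axis=2)

def brute_force_match(desc_a, desc_b, max_distance=64):
    """Return (index_a, index_b, distance) for mutual nearest neighbours."""
    dist = hamming_distance_matrix(desc_a, desc_b)
    best_b = dist.argmin(axis=1)  # nearest B descriptor for each A descriptor
    best_a = dist.argmin(axis=0)  # nearest A descriptor for each B descriptor
    matches = []
    for i, j in enumerate(best_b):
        # Keep a pair only if it is mutual and close enough (assumed screening).
        if best_a[j] == i and dist[i, j] <= max_distance:
            matches.append((i, int(j), int(dist[i, j])))
    return matches
```

The full distance matrix costs memory proportional to the product of the two descriptor counts, so this form suits a few thousand feature points per image rather than very large sets.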

Owner:ANHUI UNIVERSITY OF TECHNOLOGY AND SCIENCE

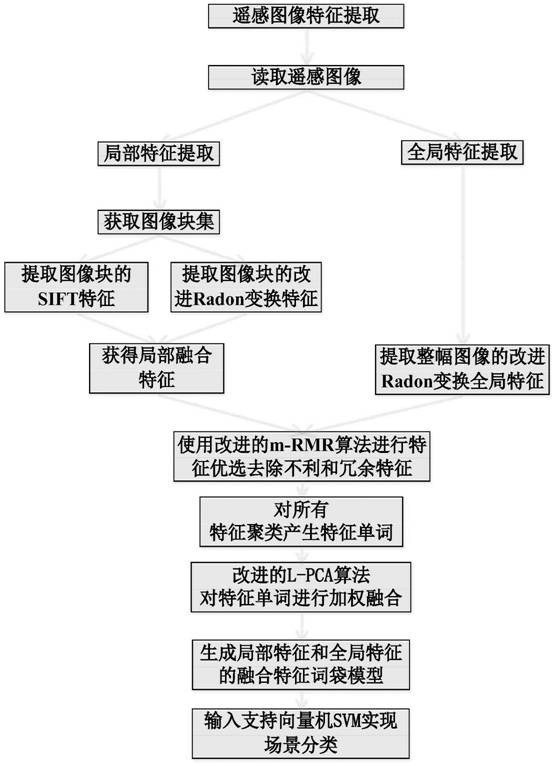

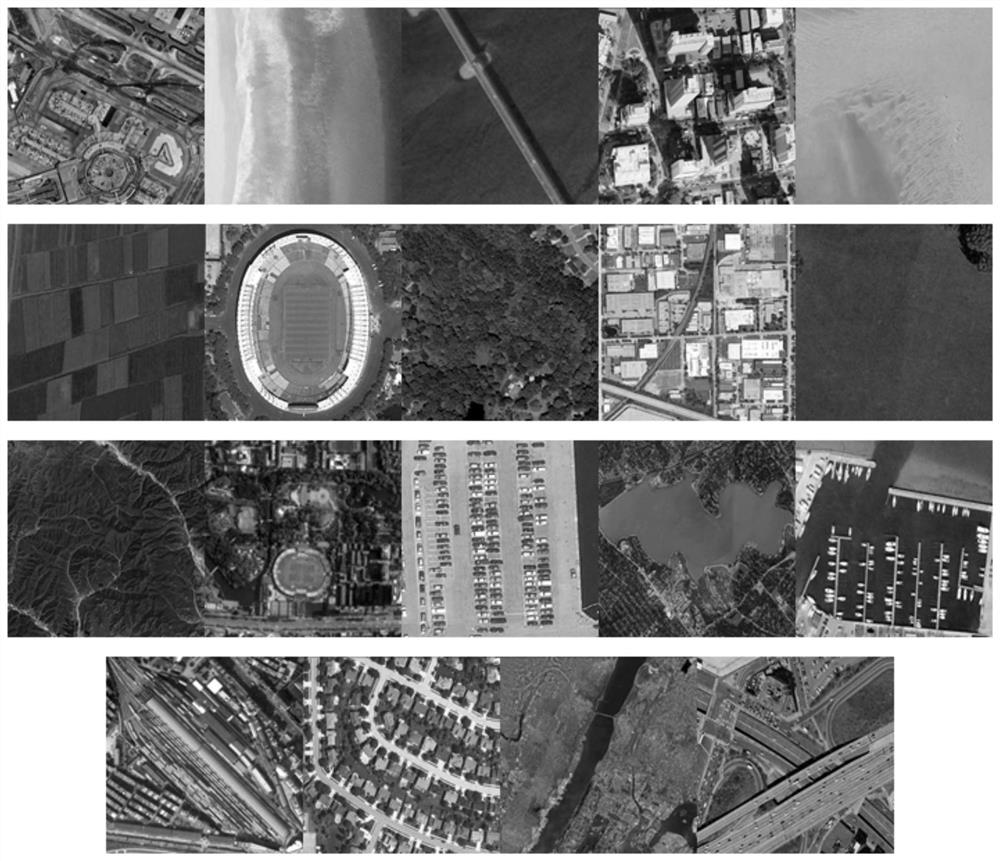

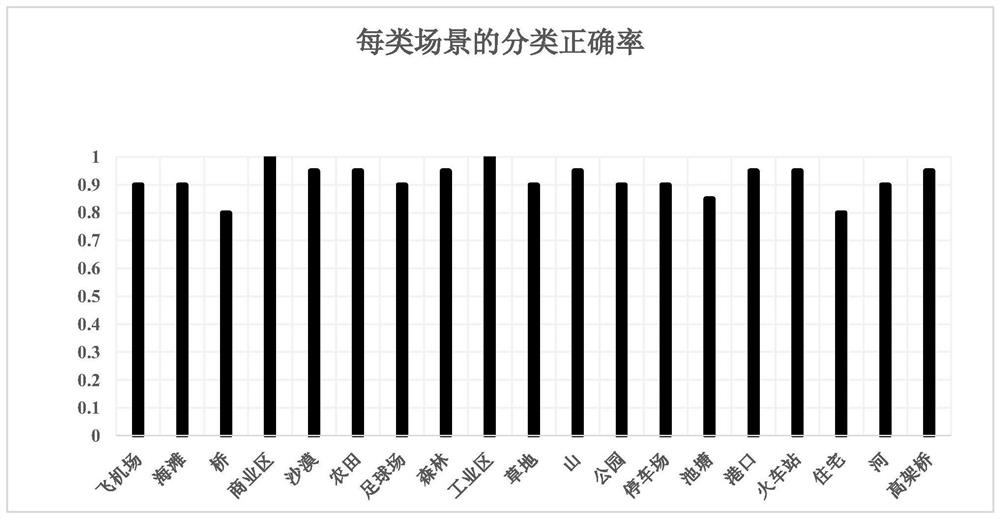

Remote sensing image scene classification method based on image transformation and BoF model

Active | CN112966629A | Solve space problems | Resolve location | Internal combustion piston engines | Scene recognition | Scale-invariant feature transform | Mutual information

The invention discloses a remote sensing image scene classification method based on image transformation and a BoF model. The method comprises: first, partitioning a remote sensing image to obtain an image block set, applying an improved Radon transform to all image blocks, and performing local feature extraction by combining it with the scale-invariant feature transform (SIFT) of the image block set to obtain a local fusion feature of the improved Radon transform feature and the SIFT feature; second, carrying out edge detection on the whole remote sensing image and applying the improved Radon transform to obtain global features of the remote sensing image; then, using an improved m-RMR correlation analysis algorithm based on mutual information to optimize the local fusion features and the global features and remove unfavorable and redundant features, clustering all the features to generate feature words, and using an improved PCA algorithm to perform weighted fusion of the feature words to obtain a fusion feature, thereby obtaining a fused bag-of-feature-words model of the local and global features; and finally, feeding the model into a support vector machine (SVM) to generate a classifier and realize remote sensing image scene classification.

Owner:EAST CHINA UNIV OF TECH

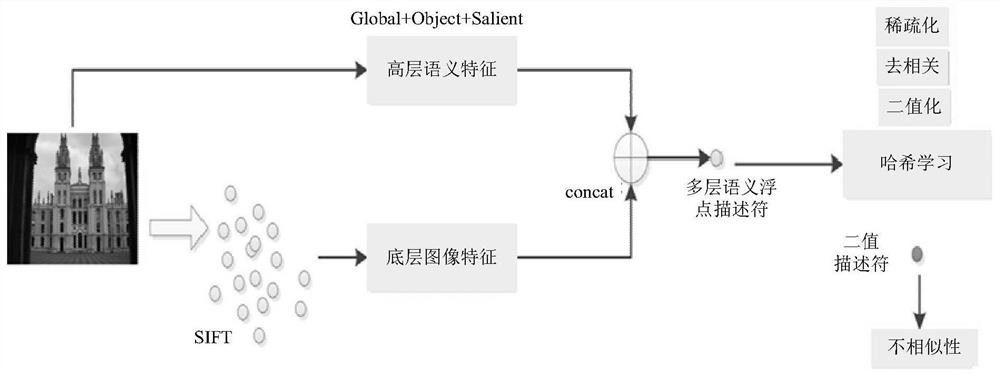

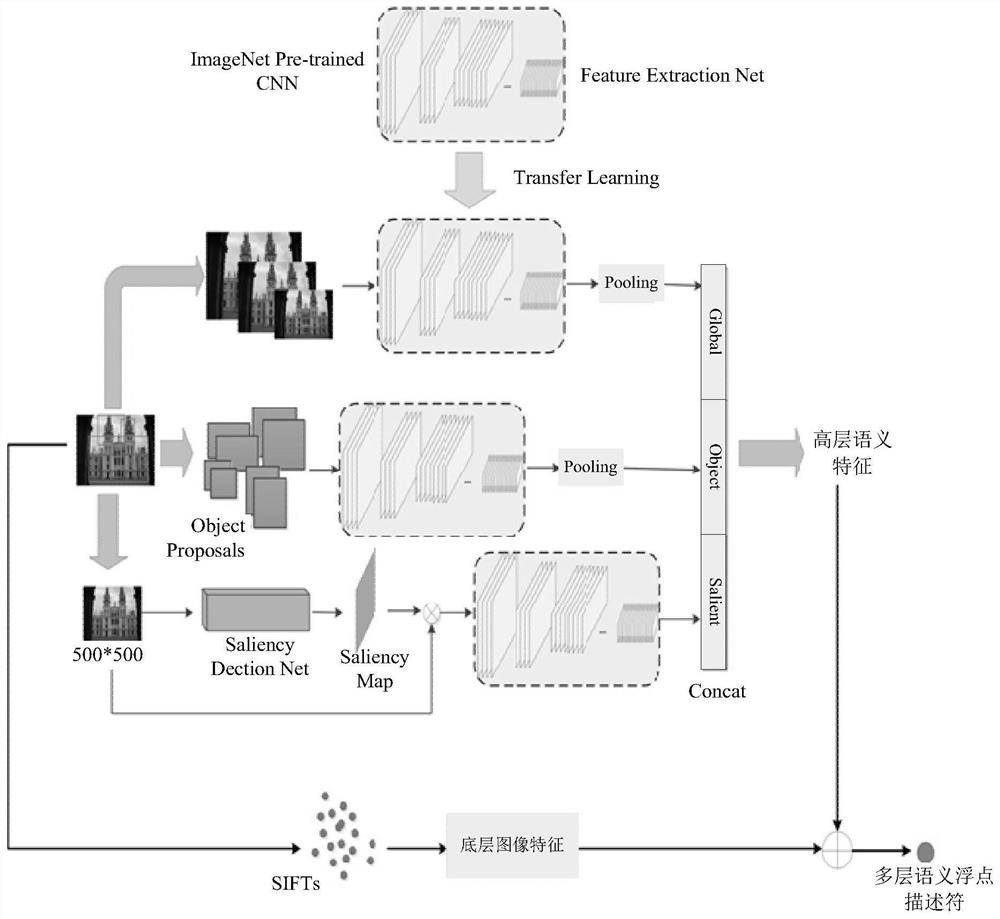

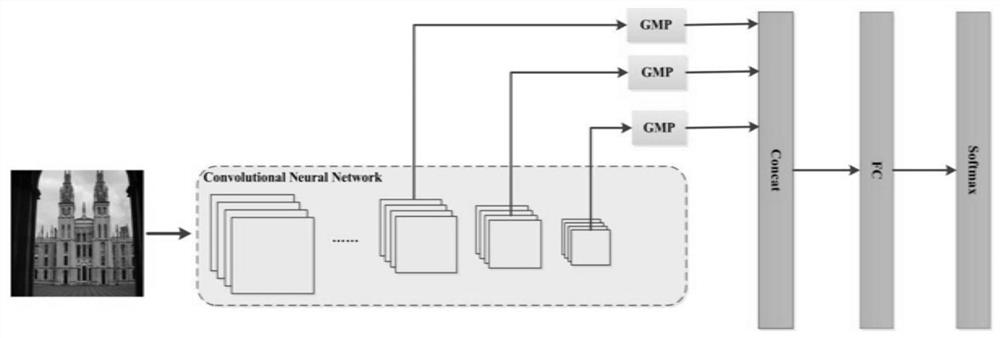

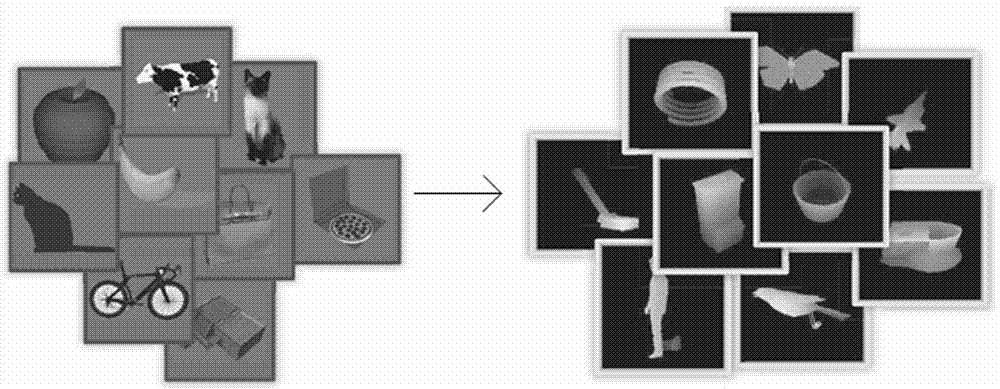

Image retrieval method based on feature fusion

Pending | CN112163114A | Improve discrimination | Bridge the gap | Image coding | Character and pattern recognition | Radiology | Image retrieval

The invention discloses an image retrieval method based on feature fusion, which belongs to the field of image retrieval and comprises the following steps: training a feature extraction network; extracting a multi-layer semantic floating point descriptor of each image in a training image set, and performing hash learning to generate a rotation matrix R; extracting a multi-layer semantic floating point descriptor of each image in an image library, and performing binarization after rotation by R; classifying the images in the image library by using a classification network; and storing the binary descriptor and the class probability vector of each image together. The multi-layer semantic floating point descriptor is obtained by extracting high-layer semantic features and bottom-layer image features of each image and fusing them. The high-layer semantic features comprise global descriptors and are extracted by scaling each image to a plurality of different scales, extracting features with the feature extraction network, and fusing the features. The bottom-layer image features comprise SIFT descriptors and are extracted by extracting a plurality of SIFT features of each image and aggregating them into VLAD. According to the image retrieval method, a descriptor with high distinguishing capability and small storage footprint can be constructed.
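The aggregation of local descriptors into VLAD and the rotate-then-binarize step can be sketched as follows. The sketch assumes a codebook of cluster centers is already available, uses plain L2 normalization, and binarizes by taking the sign of the rotated vector; the abstract specifies none of these details, so they and the function names are illustrative assumptions.

```python
import numpy as np

def vlad(descriptors, centers):
    """Aggregate local descriptors into an L2-normalized VLAD vector."""
    descriptors = np.asarray(descriptors, dtype=float)
    centers = np.asarray(centers, dtype=float)
    # Assign each descriptor to its nearest codebook center.
    d2 = ((descriptors[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)
    v = np.zeros_like(centers)
    for k in range(len(centers)):
        members = descriptors[nearest == k]
        if len(members):
            # Accumulate residuals between descriptors and their center.
            v[k] = (members - centers[k]).sum(axis=0)
    v = v.ravel()
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v

def binarize(vector, rotation):
    """Rotate a float descriptor and keep one bit per dimension (assumed sign rule)."""
    return (rotation @ vector > 0).astype(np.uint8)
```

A binary code of this kind takes one bit per dimension instead of a 32-bit or 64-bit float, which is consistent with the small storage footprint the abstract claims.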

Owner:HUAZHONG UNIV OF SCI & TECH

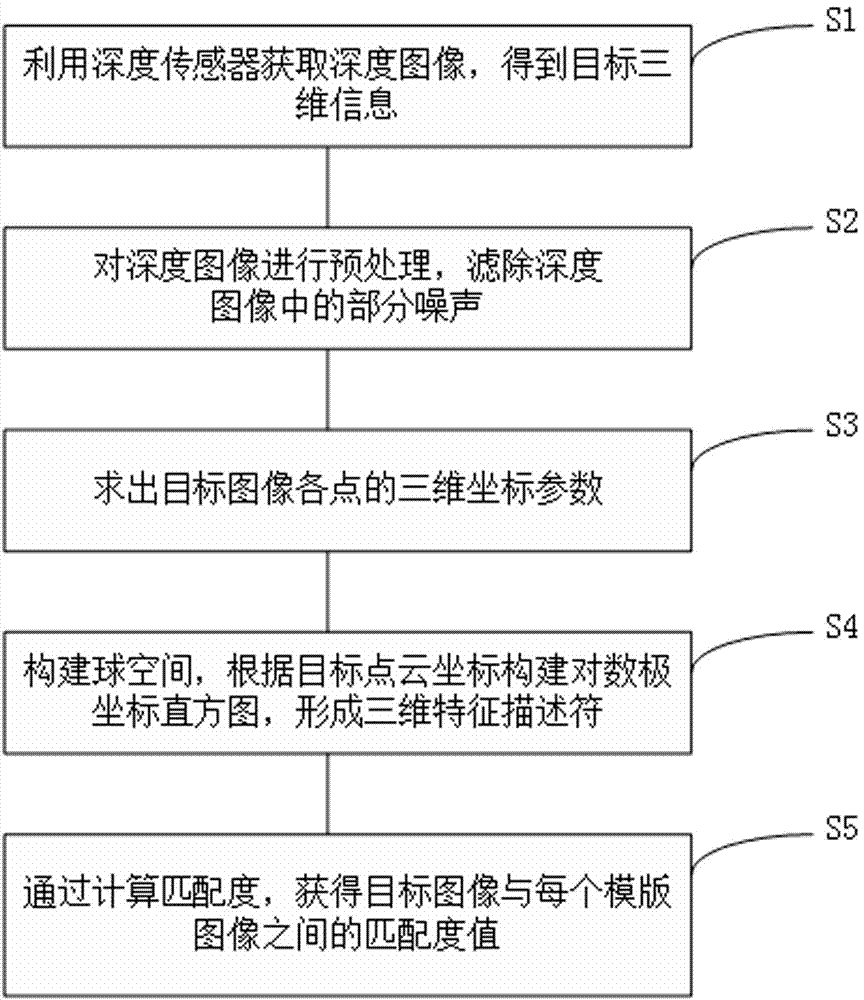

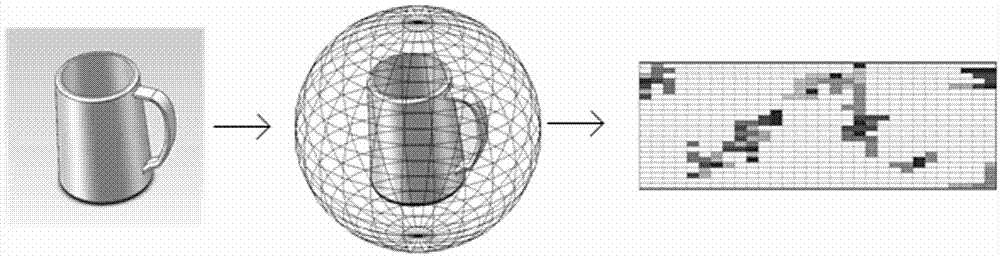

3D target identification method based on sphere space

Inactive | CN107273831A | Scale invariant | Rotation invariant | Three-dimensional object recognition | Point cloud | Scale invariance

The invention discloses a 3D target identification method based on a sphere space, comprising the following steps: acquiring a depth image through a depth sensor, and getting 3D information of a target; preprocessing the depth image, and removing part of noise in the depth image; calculating the 3D coordinate parameters of points in a target image; constructing a sphere space, constructing a log-polar histogram according to the point cloud coordinates of the target, and forming a 3D feature descriptor; and calculating the matching degree, and getting the matching degree values between different images. Features of image shape and depth information can be extracted and represented. The method has scale invariance, rotation invariance and translation invariance. The accuracy of identification is improved.
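One way a log-polar histogram over a point cloud can obtain the three invariances is sketched below. This is a simplified global descriptor, not the patent's construction: it centers the cloud on its centroid (translation), divides radii by the largest radius (scale), and measures angles from the cloud's principal axis (rotation). The bin counts, the `r_min` cutoff, and the histogram-intersection score are all assumptions made for illustration.

```python
import numpy as np

def log_polar_descriptor(points, r_bins=5, angle_bins=4, r_min=0.05):
    """Normalized 2D histogram over (log radius, angle to principal axis)."""
    pts = np.asarray(points, dtype=float)
    centered = pts - pts.mean(axis=0)        # removes translation
    r = np.linalg.norm(centered, axis=1)
    r_max = r.max()
    if r_max == 0:
        raise ValueError("point cloud has no spatial extent")
    # Principal axis: eigenvector of the scatter matrix with largest eigenvalue.
    _, vecs = np.linalg.eigh(centered.T @ centered)
    axis = vecs[:, -1]
    keep = r > 0
    # The absolute cosine removes the sign ambiguity of the axis.
    cos_abs = np.abs(centered[keep] @ axis) / r[keep]
    angle = np.arccos(np.clip(cos_abs, 0.0, 1.0))         # in [0, pi/2]
    log_r = np.log(np.clip(r[keep] / r_max, r_min, 1.0))  # removes scale
    hist, _, _ = np.histogram2d(
        log_r, angle, bins=[r_bins, angle_bins],
        range=[[np.log(r_min), 0.0], [0.0, np.pi / 2]],
    )
    return hist.ravel() / hist.sum()

def matching_degree(h1, h2):
    """Histogram intersection in [0, 1]; 1 means identical histograms."""
    return float(np.minimum(h1, h2).sum())
```

The rotation invariance here depends on the cloud having a well-defined principal axis; for nearly isotropic clouds the axis is unstable and a different reference direction would be needed.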

Owner:SUZHOU UNIV
