Gesture identification method and apparatus

A gesture recognition technology in the field of target recognition. It addresses three problems: complex computation, low recognition efficiency, and the inability to recognize gestures in real time. It achieves high recognition accuracy and efficiency with reduced computational complexity.

Examples

Embodiment 1

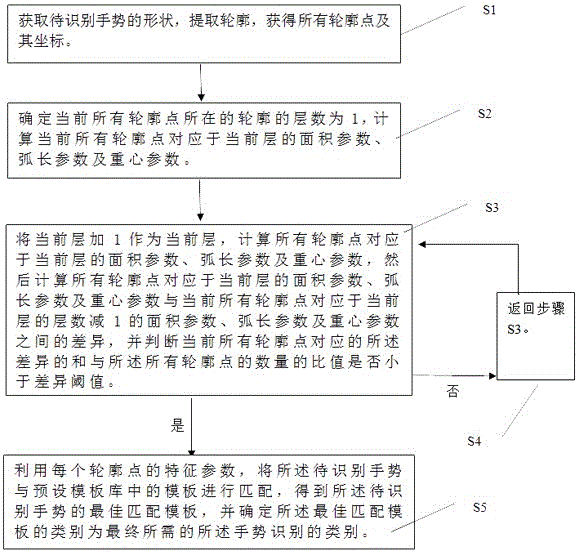

[0049] Embodiment 1: As shown in Figure 1, a gesture recognition method comprises the following steps:

[0050] S1. Obtain the gesture shape to be recognized, extract a closed contour from its edge, and obtain all contour points on the contour together with the coordinates of each contour point;
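Step S1 does not state how the closed contour is extracted. As an illustration only, the sketch below applies Moore-neighbour boundary tracing, a standard technique that this patent does not necessarily use, to a binary gesture mask. It assumes the gesture has already been segmented so that foreground pixels are 1 and background pixels are 0. The function name `trace_contour` and the mask format are hypothetical. The function returns the ordered outer contour points, as (x, y) image coordinates, of the first region met in a raster scan. Holes and any other regions are ignored.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[int, int]

# 8-neighbour offsets (dx, dy) in clockwise order on screen (y grows downward),
# starting from West: W, NW, N, NE, E, SE, S, SW.
DIRS: List[Point] = [(-1, 0), (-1, -1), (0, -1), (1, -1),
                     (1, 0), (1, 1), (0, 1), (-1, 1)]


def trace_contour(mask: Sequence[Sequence[int]]) -> List[Point]:
    """Hypothetical helper: ordered outer contour (x, y) of the first
    foreground region found in a raster scan, via Moore-neighbour tracing."""
    h = len(mask)
    w = len(mask[0]) if h else 0

    def is_fg(x: int, y: int) -> bool:
        # Pixels outside the image are treated as background.
        return 0 <= y < h and 0 <= x < w and bool(mask[y][x])

    # Start pixel: first foreground pixel scanning rows top to bottom and
    # columns left to right, so its West neighbour is guaranteed background.
    start = next(((x, y) for y in range(h) for x in range(w) if mask[y][x]), None)
    if start is None:
        return []

    contour: List[Point] = [start]
    cur = start
    back = 0  # direction index of the background "backtrack" neighbour (West)
    first_move: Optional[Tuple[Point, Point]] = None

    while True:
        # Scan the 8 neighbours clockwise, starting just after the backtrack pixel.
        found = None
        for k in range(1, 9):
            d = (back + k) % 8
            if is_fg(cur[0] + DIRS[d][0], cur[1] + DIRS[d][1]):
                found = d
                break
        if found is None:
            return contour  # isolated single pixel

        nxt = (cur[0] + DIRS[found][0], cur[1] + DIRS[found][1])
        # The neighbour examined just before `found` is background; it becomes
        # the new backtrack pixel, re-expressed as a direction from `nxt`.
        pd = (found - 1) % 8
        bx, by = cur[0] + DIRS[pd][0], cur[1] + DIRS[pd][1]
        back = DIRS.index((bx - nxt[0], by - nxt[1]))

        if first_move is None:
            first_move = (cur, nxt)
        elif (cur, nxt) == first_move:
            break  # the walk is about to repeat its first step: contour closed
        contour.append(nxt)
        cur = nxt

    # The last appended point is the start point again; drop the repeat.
    return contour[:-1]


if __name__ == "__main__":
    square = [[0, 0, 0, 0, 0],
              [0, 1, 1, 1, 0],
              [0, 1, 1, 1, 0],
              [0, 1, 1, 1, 0],
              [0, 0, 0, 0, 0]]
    # Prints the 8 boundary pixels of the 3x3 square, clockwise from (1, 1).
    print(trace_contour(square))
```

For the 3x3 square above, the result is `[(1, 1), (2, 1), (3, 1), (3, 2), (3, 3), (2, 3), (1, 3), (1, 2)]`. The stopping rule ends the walk when it is about to repeat its first step. In practice, a library routine such as OpenCV's `cv2.findContours` with `cv2.CHAIN_APPROX_NONE` also returns every boundary point and could serve the same purpose.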

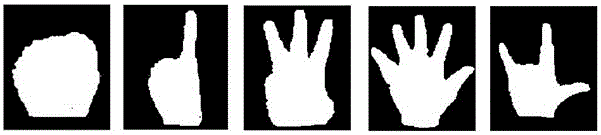

[0051] It should be noted that the target shape involved in the present invention can be any shape with a closed contour; Figure 2 shows a specific example of such a shape. In addition, the number of contour points is the total number of points on the contour. Its specific value is determined by the actual situation, subject to the requirement that the contour features fully represent the gesture shape.

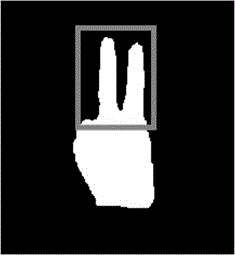

[0052] In a digital image, the edge of a shape can be represented by a series of contour points with coordinate information, and the set S of contour points of the ...
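The set S mentioned above can be written as follows under an assumed notation. The patent's own notation is cut off here and may differ. This form assumes that the $n$ contour points obtained in step S1 are indexed in the order they are met along the closed contour:

$$S = \{\, p_i = (x_i, y_i) \mid i = 1, 2, \dots, n \,\}, \qquad p_{n+1} \equiv p_1,$$

Here $(x_i, y_i)$ are the image coordinates of the $i$-th contour point. The identification $p_{n+1} \equiv p_1$ expresses that the contour is closed.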
