63 results for "Translation invariant" patented technology

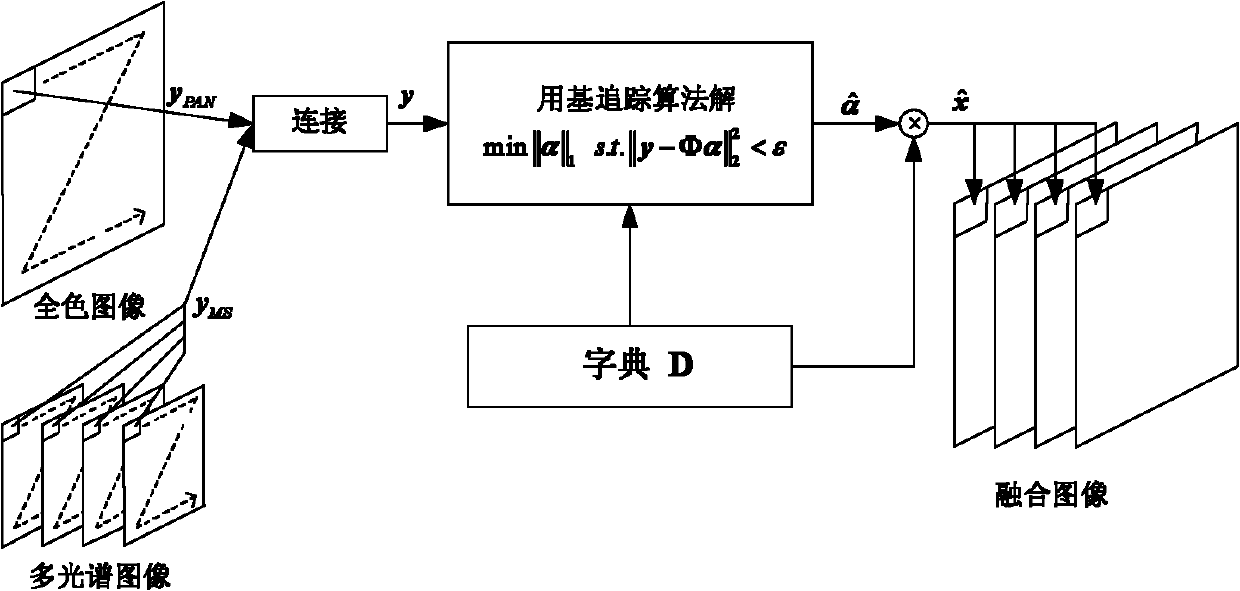

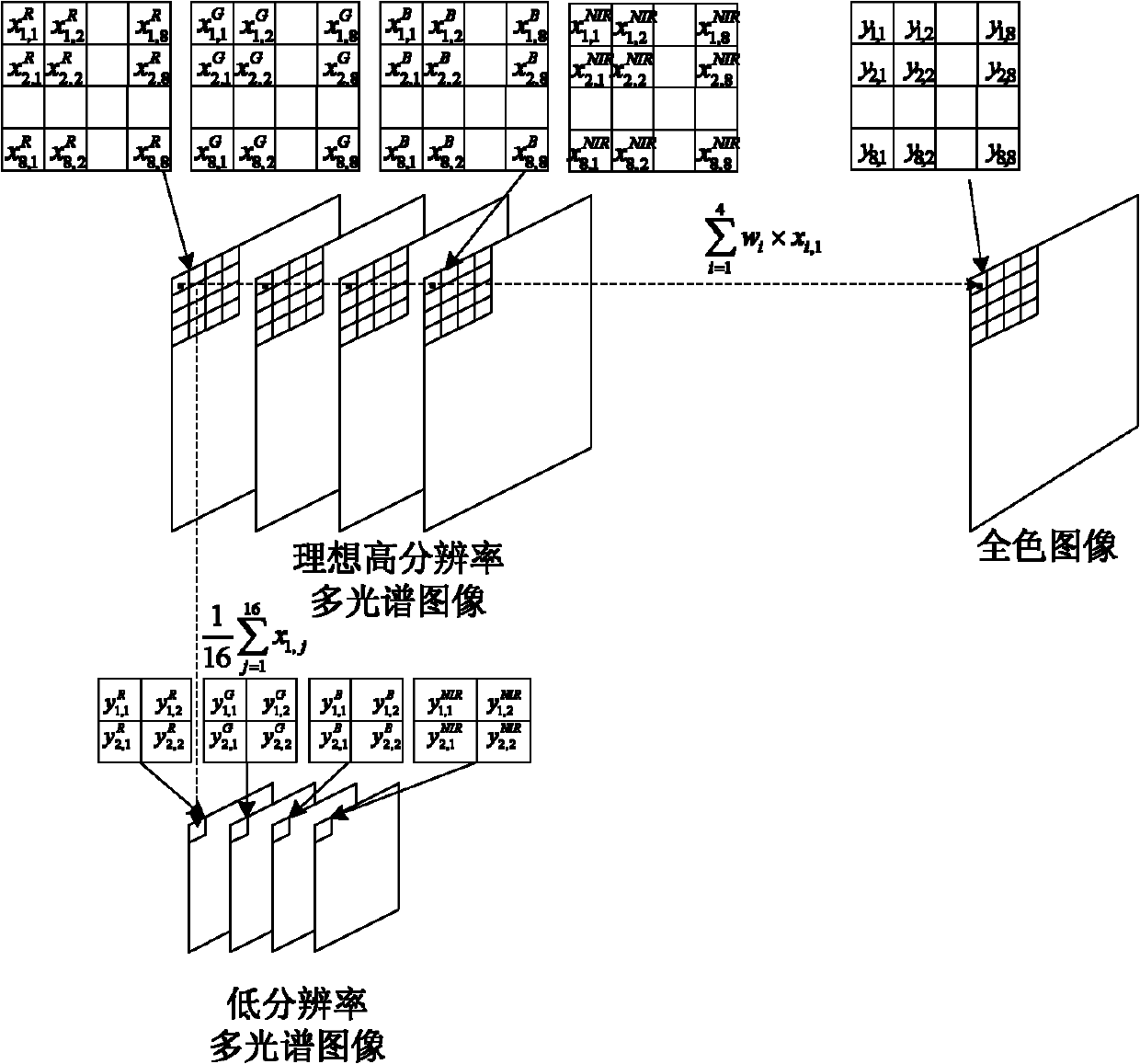

Compressive sensing theory-based satellite remote sensing image fusion method

Inactive | CN101996396A | Conform to visual characteristics | Avoid complexity | Image enhancement | Color image | Remote sensing image fusion

The invention discloses a compressive sensing theory-based satellite remote sensing image fusion method. The method comprises the following steps: vectorizing a full-color image with high spatial resolution and a multi-spectral image with low spatial resolution; constructing a sparsely represented over-complete atom library of an image block with high spatial resolution; establishing a model from the multi-spectral image with high spatial resolution to the full-color image with high spatial resolution and the multi-spectral image with low spatial resolution according to an imaging principle of each land observation satellite; solving a compressive sensing problem of sparse signal recovery by using a basis pursuit algorithm to obtain the sparse representation of the multi-spectral color image with high spatial resolution in an over-complete dictionary; and multiplying the sparse representation by the preset over-complete dictionary to obtain the vector representation of the multi-spectral color image block with high spatial resolution, then converting the vector representation into the image block to obtain a fusion result. By introducing the compressive sensing theory into the image fusion technology, the image quality after fusion can be obviously improved, and an ideal fusion effect is achieved.

Owner:HUNAN UNIV

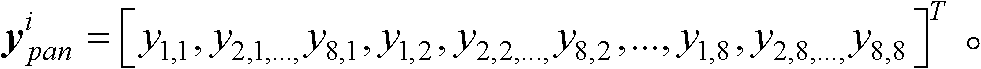

Significant guidance and unsupervised feature learning based image classification method

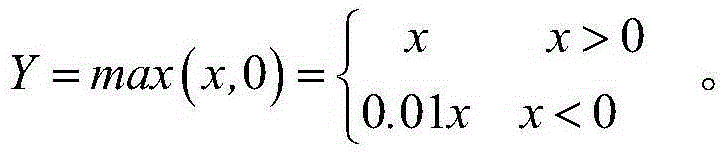

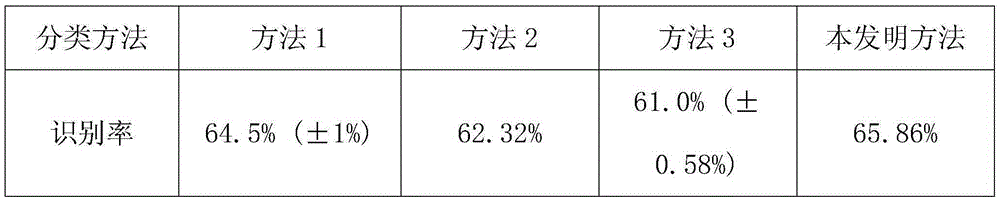

Inactive | CN105426919A | Translation invariant | Avoid fit problems | Character and pattern recognition | Data set | Feature learning

The invention discloses a significant guidance and unsupervised feature learning based image classification method and belongs to the field of machine learning and computer vision. The image classification method comprises significant guidance based pixel point collection, unsupervised feature learning, image convolution, local comparison normalization, spatial pyramid pooling, central prior fusion and image classification. With the adoption of the classification method, representative pixel points in an image data set are collected through significant detection; the representative pixel points are trained with the sparse self-coding unsupervised feature learning method to obtain high-quality image features; features of a training set and a test set are obtained through image convolution operation; convolution features are subjected to local comparison normalization and spatial pyramid pooling; pooled features are fused with central prior features; and images are classified by adopting a liblinear classifier. According to the method, efficient and robust image features can be obtained and the classification accuracy of various images can be significantly improved.

Owner:HOHAI UNIV
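The spatial pyramid pooling step named in this abstract can be illustrated with a minimal sketch. The abstract does not say which pooling operator or grid sizes are used, so max pooling and the `levels=(1, 2)` grids below are assumptions, and `spatial_pyramid_pool` is a hypothetical helper name. The point of the sketch is that the output length depends only on the pyramid levels, not on the size of the feature map.

```python
def spatial_pyramid_pool(feature_map, levels=(1, 2)):
    """Max-pool a 2-D feature map over an l x l grid for each level, then concatenate.

    feature_map is a list of equal-length rows; both dimensions must be at
    least max(levels) so that every grid cell contains at least one value.
    """
    h, w = len(feature_map), len(feature_map[0])
    pooled = []
    for l in levels:
        for gy in range(l):
            y0, y1 = gy * h // l, (gy + 1) * h // l
            for gx in range(l):
                x0, x1 = gx * w // l, (gx + 1) * w // l
                # Strongest response inside this grid cell (assumed max pooling).
                pooled.append(
                    max(feature_map[y][x] for y in range(y0, y1) for x in range(x0, x1))
                )
    return pooled


fm = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(spatial_pyramid_pool(fm))  # one value for the 1x1 level, four for the 2x2 level
```

Feature maps of different sizes give vectors of the same length, which is what lets a fixed-size classifier consume them.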

Tooth X-ray image matching method based on SURF point matching and RANSAC model estimation

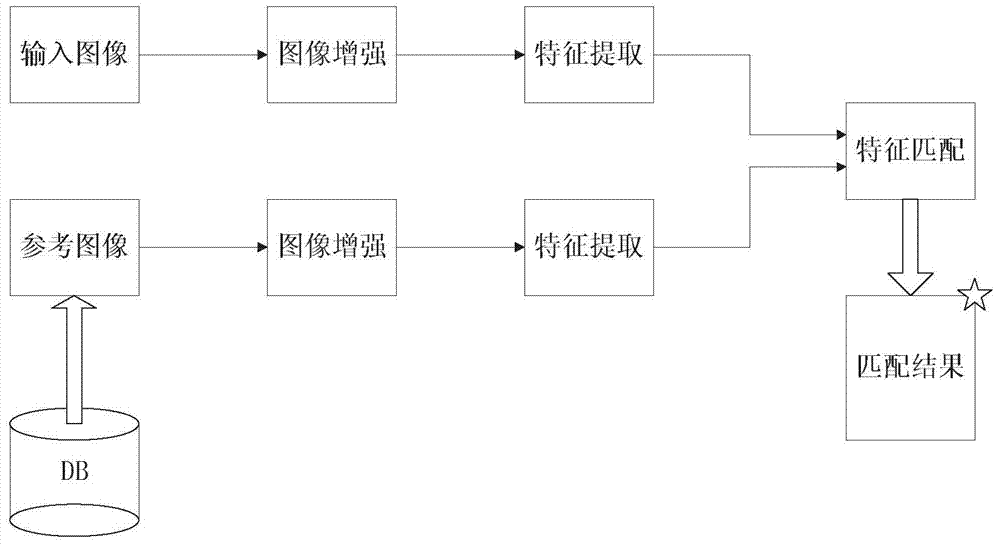

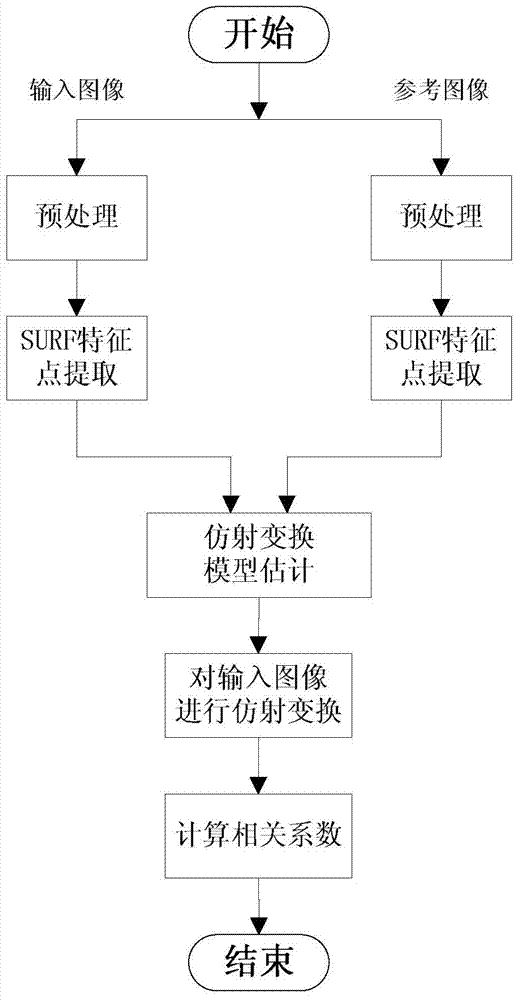

Inactive | CN103886306A | Reduce volume | Avoid destruction | Character and pattern recognition | Correlation coefficient | Feature extraction

The invention relates to a tooth X-ray image matching method based on SURF point matching and RANSAC model estimation. The tooth X-ray image matching method includes the steps that (1), images are collected; (2), the images are enhanced; (3), features are extracted; (4), the features are matched; (5), related matching coefficients are calculated; (6), a matching result is displayed; (7), if reference images which do not participate in matching exist in an image library, the step (1) is carried out, a new reference image is selected from the image library, and if all images participate in matching, operation is finished; (8), matching succeeds, personal information corresponding to the reference images is recorded, and then operation is finished. The feature points of an input tooth image and a reference tooth image are extracted according to the SURF algorithm, then the feature points are matched according to the RANSAC algorithm, and finally, the matching degree of the two images is measured according to the correlation coefficient of the two matched images. Experiments show that the tooth X-ray image matching method achieves high precision and high real-time performance.

Owner:SHANDONG UNIV
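The last step of this method scores a pair of matched images by their correlation coefficient. The abstract does not say which coefficient is meant, so the sketch below assumes the Pearson correlation over gray levels. The function name and the list-of-rows image representation are illustrative only.

```python
import math


def correlation_coefficient(img_a, img_b):
    """Pearson correlation between two equally sized grayscale images (lists of rows)."""
    a = [p for row in img_a for p in row]
    b = [p for row in img_b for p in row]
    if len(a) != len(b) or not a:
        raise ValueError("images must be non-empty and the same size")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat image carries no structure to correlate
    return cov / math.sqrt(var_a * var_b)


reference = [[10, 20], [30, 40]]
brighter = [[60, 70], [80, 90]]  # same structure, uniform brightness offset
print(correlation_coefficient(reference, brighter))
```

Because the means are subtracted, the score is unaffected by a uniform brightness change between the two radiographs.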

Swatch sparsity image inpainting method with directional factor combined

Inactive | CN103325095A | Priority filling | Guaranteed smoothness | Image enhancement | Pattern recognition | Contourlet

Disclosed is a swatch sparsity image inpainting method with directional factors combined. The swatch sparsity image inpainting method mainly comprises the steps of conducting preprocessing on an image to be inpainted by the utilization of an existing image inpainting algorithm, extracting directional factors in four directions from the preprocessed image through non-subsampled contourlet transform, determining a new structural sparseness function and a new matching criterion according to the color-directional factor weighting distance, determining a filling-in order by means of the structure sparseness function and searching for a plurality of matching blocks according to the new matching criterion, establishing a constraint equation with color space local sequential consistency and directional factor local sequential consistency, optimizing and solving the constraint equation to obtain sparse representation information of the matching blocks, conducting filling, and updating filled-in regions until damaged areas are completely filled in. By means of the swatch sparsity image inpainting method, the consistency of the structure part, the clearness of the texture part and the sequential consistency of neighborhood information can be effectively kept, and the swatch sparsity image inpainting method is particularly applicable to inpainting of real pictures or composite images with complex textures and complex structural characteristics.

Owner:SOUTHWEST JIAOTONG UNIV

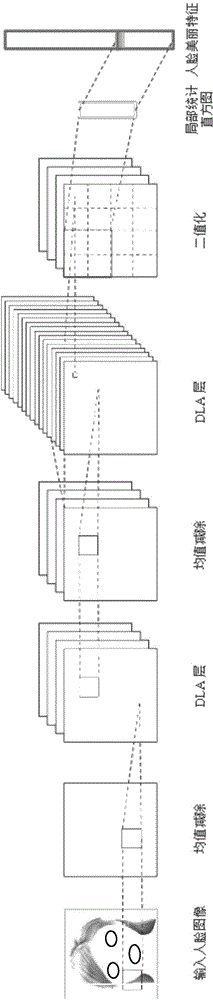

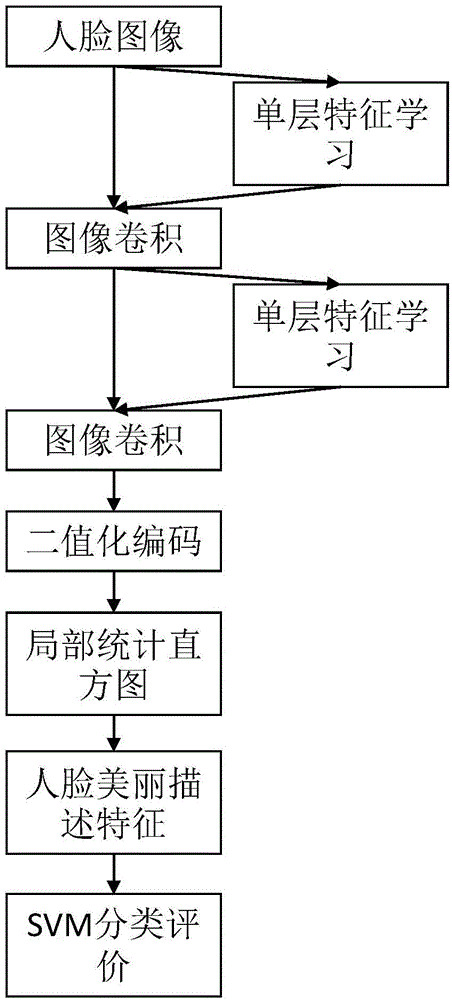

Face beauty evaluation method based on deep learning

Inactive | CN104636755A | Improve accuracy | Effective beauty feature expression | Character and pattern recognition | SVM classifier | Study methods

The invention provides a face beauty evaluation method based on deep learning. The method comprises the following steps: (1) acquiring a trainer face image set and a tester face image set; (2) learning face beauty characteristics of the trainer face image set by virtue of characteristic learning, and convoluting original images by use of a convolution template so as to form multiple characteristic images; (3) by taking the obtained characteristic images as input, learning a second-layer convolution template by use of the same characteristic learning method, and convoluting the characteristic images obtained in the step (2) by use of the convolution template so as to form multiple characteristic images; (4) performing binarization encoding on the obtained characteristic images, calculating and counting histograms in a local region, and then splicing all the counted histograms of the local region into a face image characteristic; and (5) quantifying face beauty evaluation into multiple equivalence forms, and classifying by use of an SVM (Support Vector Machine) classifier so as to obtain an evaluation result. According to the method, the face beauty characteristics are automatically learned from a sample by virtue of a deep learning algorithm, so that a computer can intelligently evaluate the face beauty.

Owner:SOUTH CHINA UNIV OF TECH

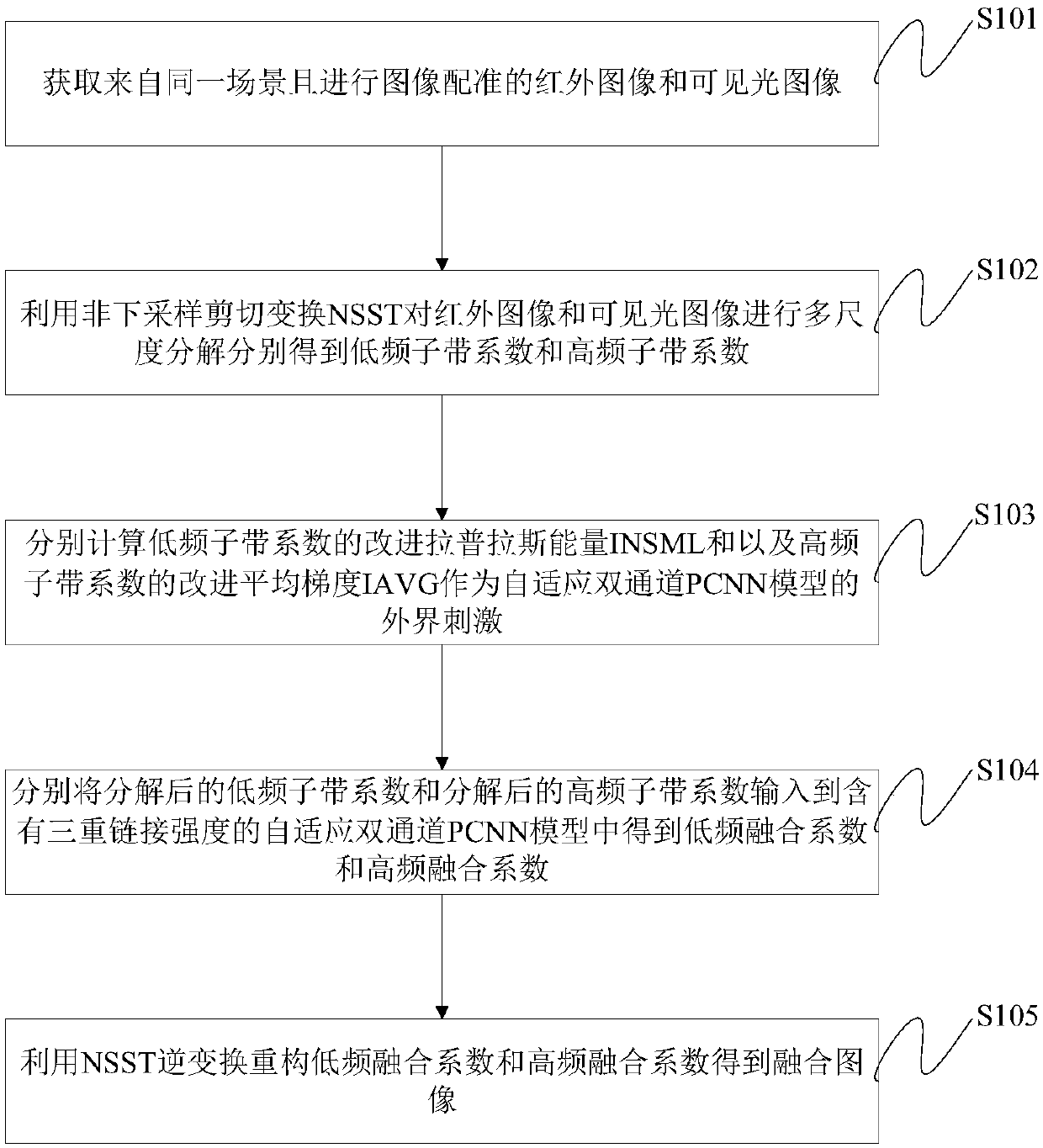

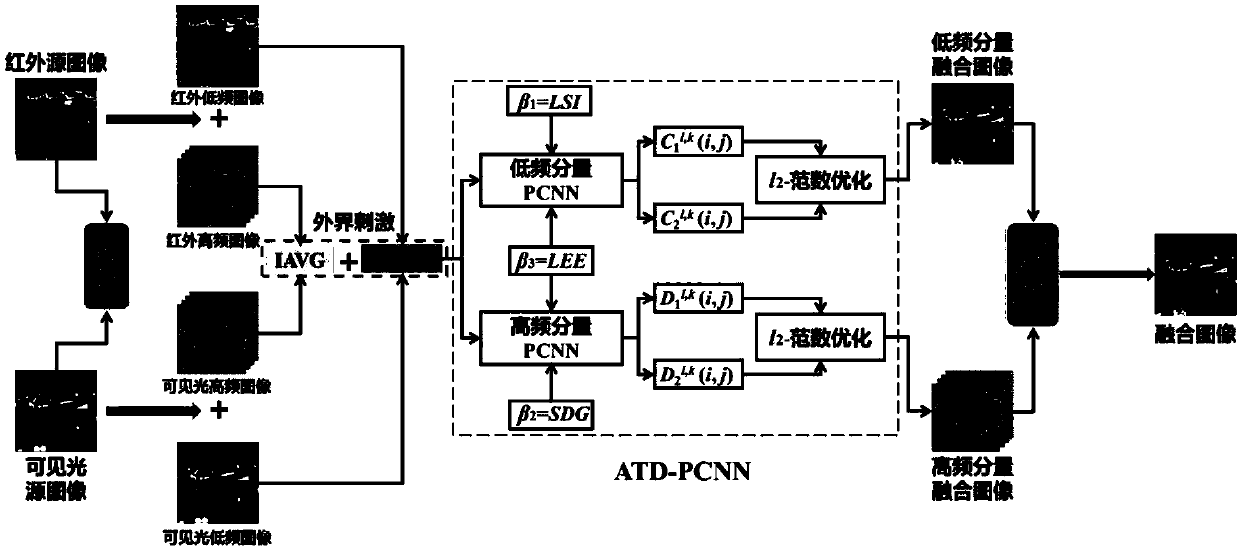

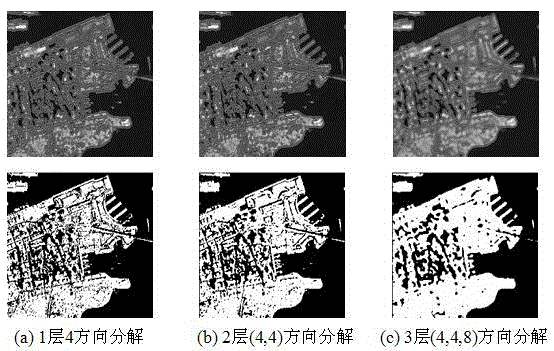

Image fusion method and device based on NSST and adaptive dual-channel PCNN

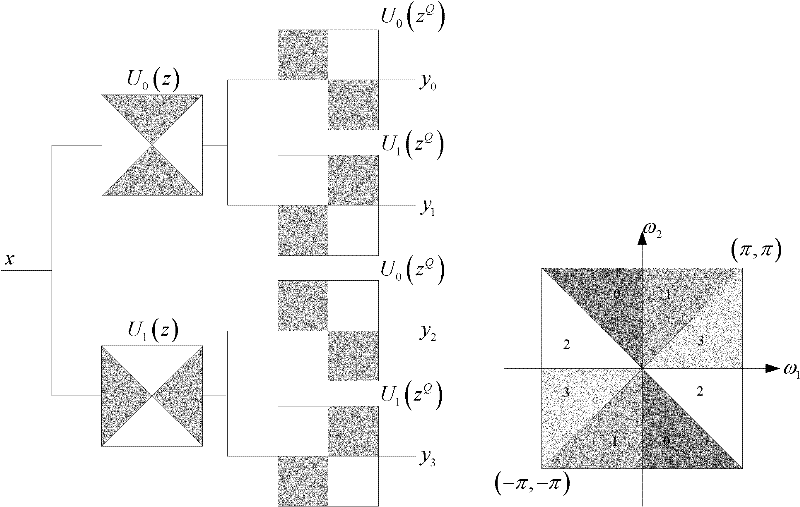

Inactive | CN109102485A | Promote decomposition | Promote reconstruction | Image enhancement | Image analysis | Pattern recognition | Multiscale decomposition

The invention provides an image fusion method and device based on NSST and an adaptive dual-channel PCNN. NSST is used as the multi-scale transform tool to decompose the image. Because NSST removes the sampling operation in the decomposition phase, it has translation invariance; at the same time, a localized small-size shearing filter avoids spectral aliasing, so the image decomposition and reconstruction are better. An adaptive simplified dual-channel PCNN model with three kinds of link strengths is then used to integrate the features of the image. The infrared and visible images are fused effectively: the contrast is high, the texture and detail information are well preserved, the image transition is natural, and no artifacts occur.

Owner:CHANGCHUN INST OF OPTICS FINE MECHANICS & PHYSICS CHINESE ACAD OF SCI
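The claim that removing the sampling step gives translation invariance can be checked on a toy signal. The sketch below is not NSST. It assumes a 1-D circular Haar high-pass filter as a stand-in, and all three helper names are hypothetical. Without decimation, shifting the input simply shifts the coefficients. With decimation, the coefficients change.

```python
def haar_detail(signal):
    """Undecimated Haar high-pass: d[n] = (x[n] - x[n-1]) / 2 with circular boundary."""
    return [(signal[i] - signal[i - 1]) / 2 for i in range(len(signal))]


def shift(signal, k):
    """Circularly shift a signal to the right by k samples."""
    k %= len(signal)
    return signal[-k:] + signal[:-k] if k else list(signal)


def decimate(coeffs):
    """Keep every second coefficient, as a subsampled transform would."""
    return coeffs[::2]


x = [0, 0, 4, 0, 0, 0, 0, 0]

# Undecimated: filtering a shifted signal equals shifting the filtered signal.
print(haar_detail(shift(x, 1)) == shift(haar_detail(x), 1))

# Decimated: the coefficient values themselves change after a one-sample shift.
print(decimate(haar_detail(x)), decimate(haar_detail(shift(x, 1))))
```

The same argument applies per scale and direction in a non-subsampled multi-scale transform, at the cost of redundant coefficients.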

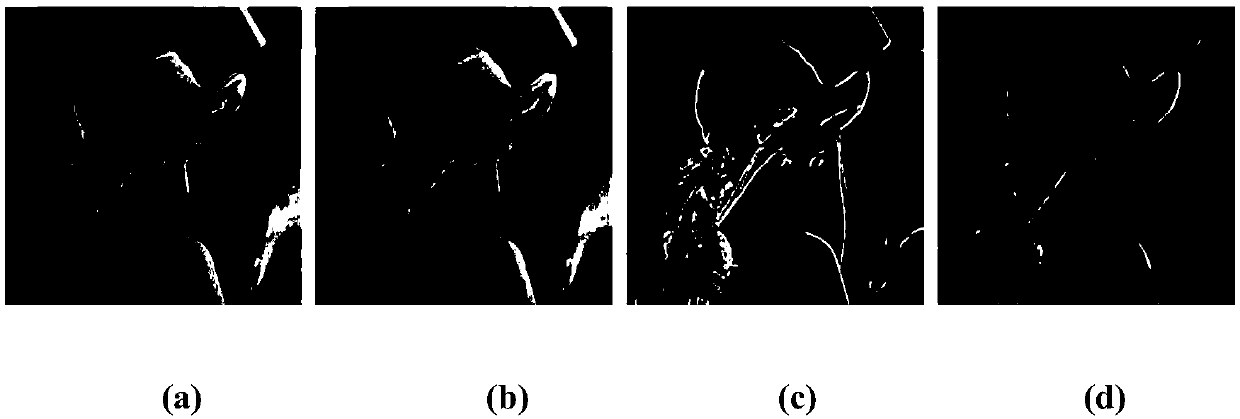

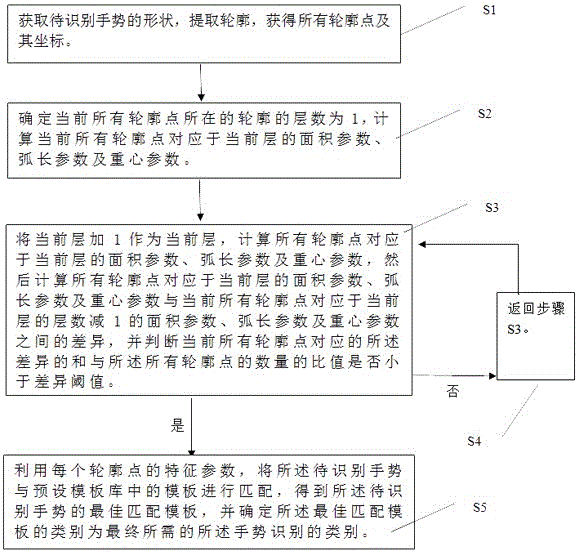

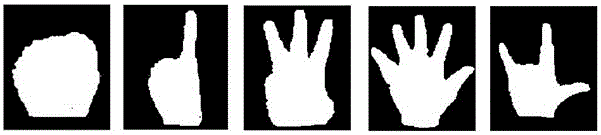

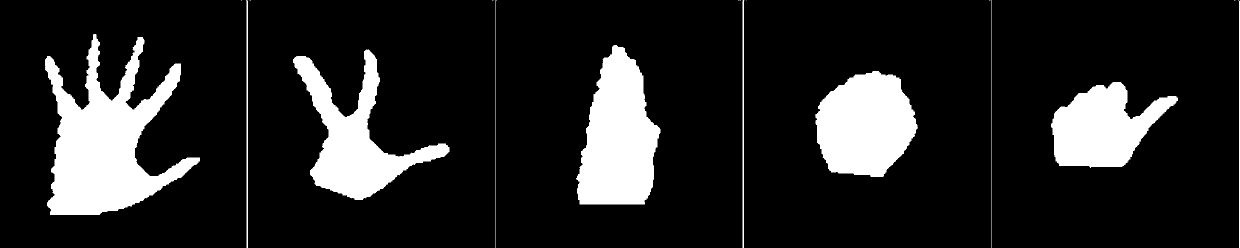

Gesture identification method and apparatus

Inactive | CN106022227A | Reduce dimensionality | Avoid situations with low recognition accuracy | Character and pattern recognition | Template based | Gravity center

The invention discloses a gesture identification method. The method comprises: a shape of a to-be-identified gesture is obtained, a closed profile is extracted from a gesture-shaped edge, and all profile points on the profile and a coordinate of each profile point are obtained; the layer number of the profile is determined, and an area parameter, an arc length parameter, and a gravity center parameter that correspond to each profile point at each layer are calculated based on the coordinate of each profile point, wherein the parameters are used as feature parameters of the profile point; with the feature parameters of each profile point, a to-be-identified gesture and a template in a preset template base are matched to obtain an optimal matching template, and the optimal matching template is determined as the to-be-identified gesture. According to the invention, a global feature, a local feature, and a relation between the global feature and the local feature are described; multi-scale and omnibearing analysis expression is carried out; effective extraction and expression of the global feature and the local feature of the to-be-identified gesture shape can be realized; and a phenomenon of low identification accuracy caused by a single feature can be avoided.

Owner:SUZHOU UNIV

Method and device for gesture identification based on substantial feature point extraction

Active | CN107203742A | Efficient extraction | Reduce dimensionality | Character and pattern recognition | Point sequence | Scale invariance

The present invention discloses a method and device for gesture identification based on substantial feature point extraction. The device comprises: an extraction module configured to obtain shape of a gesture to be identified, extract an unclosed contour from the edges of the shape of the gesture to be identified and obtain coordinates of all the contour points on the contour; a calculation module configured to calculate the area parameters of each contour point, perform screening of the contour points according to the area parameters, extract the substantial feature points and take the area parameters of a substantial feature point sequence and the point sequence parameters after normalization as the feature parameters of the contour; and a matching module configured to facilitate the feature parameters of the substantial feature points, perform matching of the gestures to be identified and templates in a preset template library, obtain the optimal matching template of the gesture to be identified and determine the type of the optimal matching template as the type of the gesture to be identified. The method and device for gesture identification based on the substantial feature point extraction have good performances such as translation invariance, rotation invariance, scale invariance and hinging invariance while effectively extracting and expressing gesture shape features so as to effectively inhibit noise interference.

Owner:SUZHOU UNIV

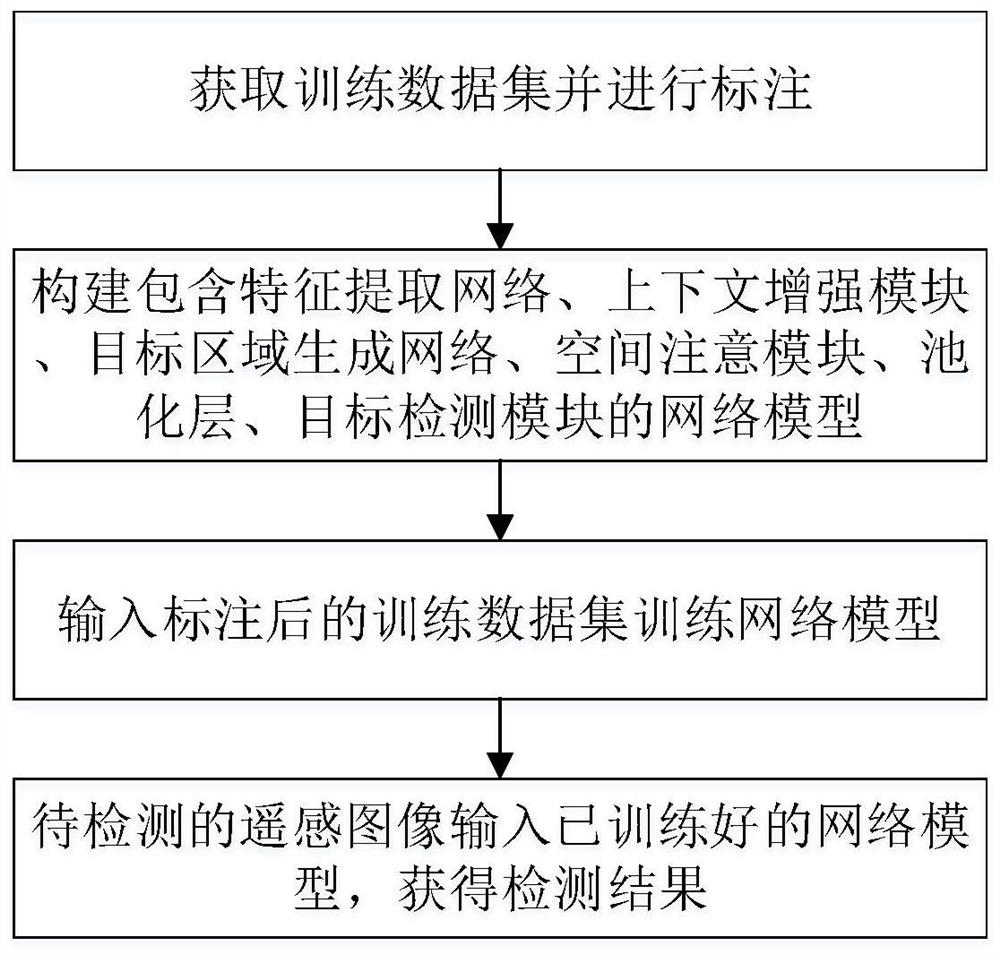

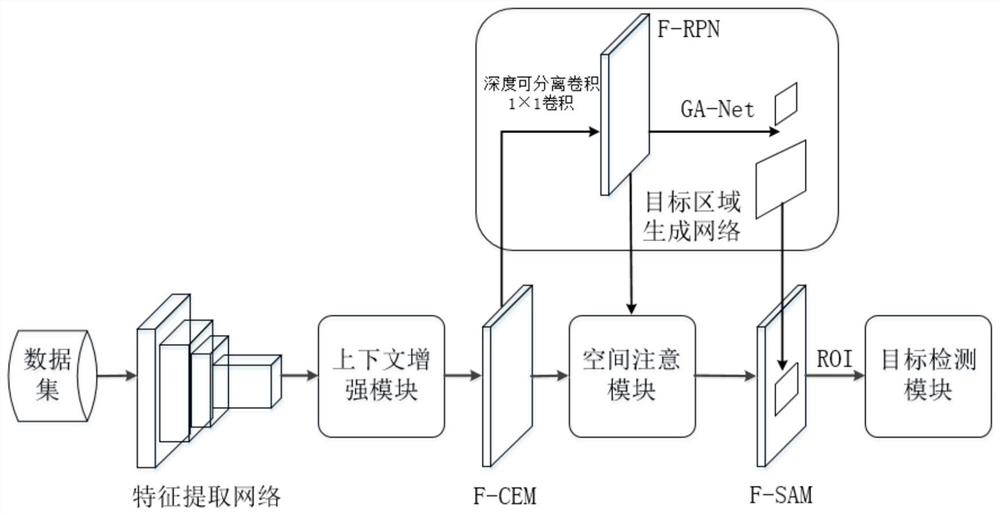

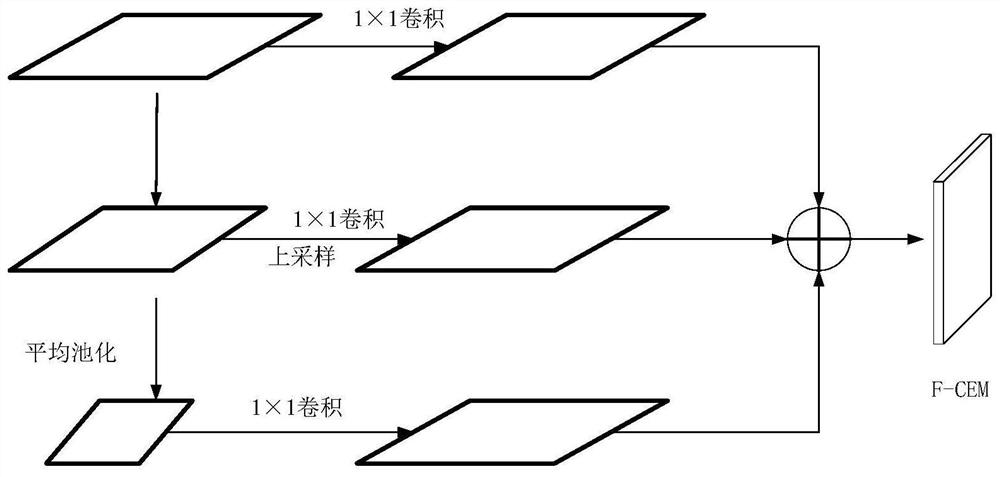

Remote sensing image blue-topped house detection method based on large-scale deep convolutional neural network

Pending | CN112016511A | Easy to detect | Improve detection accuracy | Character and pattern recognition | Neural architectures | Data set | Feature extraction

The invention discloses a remote sensing image blue-topped house detection method based on a large-scale deep convolutional neural network. The method comprises the steps of obtaining a training dataset and performing labeling; constructing a network model comprising a feature extraction network, a context enhancement module, a target region generation network, a spatial attention module, a pooling layer and a target detection module; inputting the labeled training data set to train a network model; and inputting a to-be-detected remote sensing image into the trained network model to obtain a detection result of the blue-top house. The invention has the advantages that depth feature extraction, target candidate region generation, anchor box generation, context enhancement, a spatial attention mechanism and a target detection process are integrated into an end-to-end deep network model, and a good detection effect can be achieved for multi-scale remote sensing image blue-topped house detection.

Owner:CHONGQING GEOMATICS & REMOTE SENSING CENT

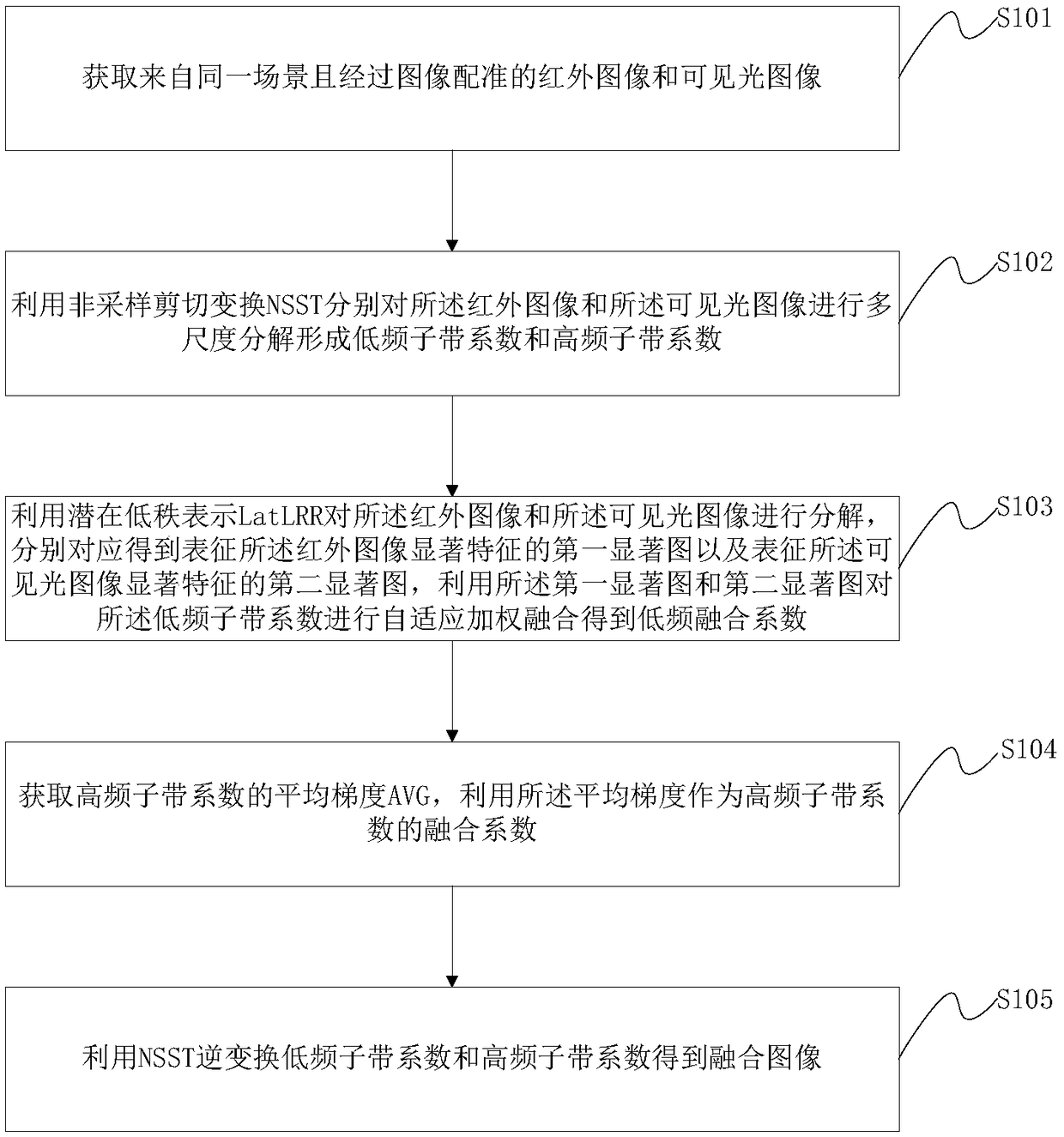

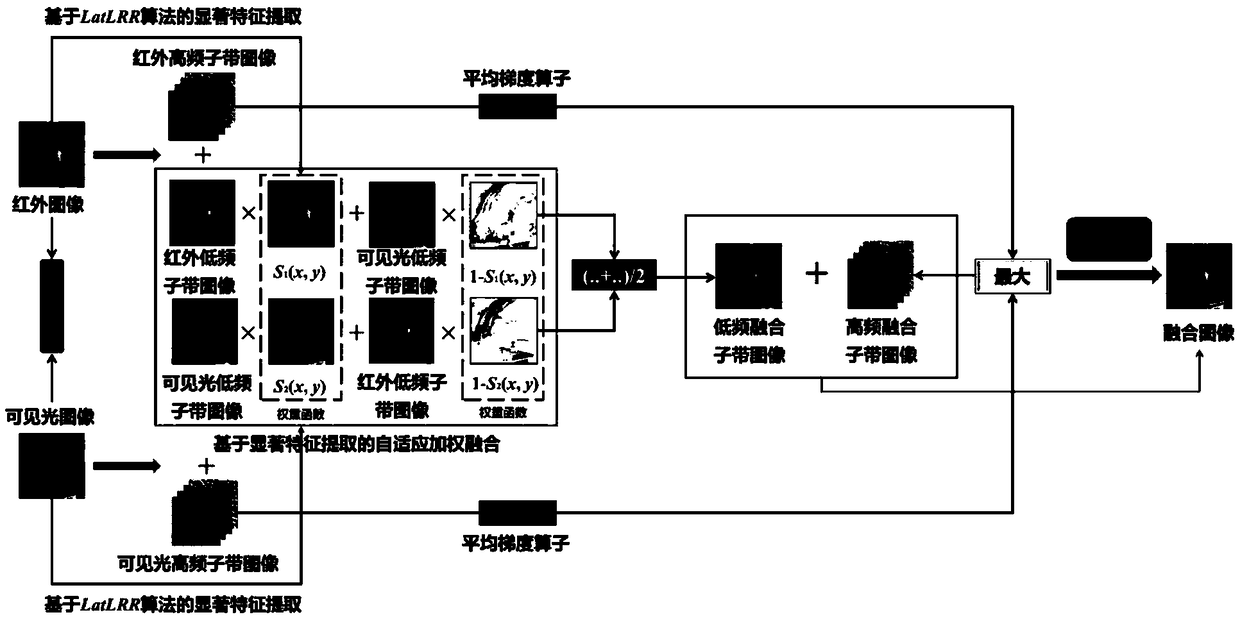

Image fusion method and apparatus based on potentially low rank representation and NSST

Inactive | CN109242813A | Promote decomposition | Promote reconstruction | Image enhancement | Image analysis | Frequency spectrum | Decomposition

The invention provides an image fusion method and device based on latent low rank representation (LatLRR) and NSST. A source image is decomposed into multiple scales and directions by an NSST that includes a small-size shearing wave filter. Because NSST eliminates the sampling operation in the decomposition phase, it has translation invariance. At the same time, in the phase of directional localization, the localized small-scale shearing filter can avoid the spectrum aliasing phenomenon and make the image decomposition and reconstruction effect better. For the low frequency component, LatLRR can extract the salient features from the data robustly and is more robust to noise, so it can identify the salient objects and regions in the image precisely. For the high frequency component, because the average gradient represents the change of image gray value, it can reflect the details of image edge and texture, so the average gradient operator can better express the image features and achieve a better fusion effect.

Owner:CHANGCHUN INST OF OPTICS FINE MECHANICS & PHYSICS CHINESE ACAD OF SCI

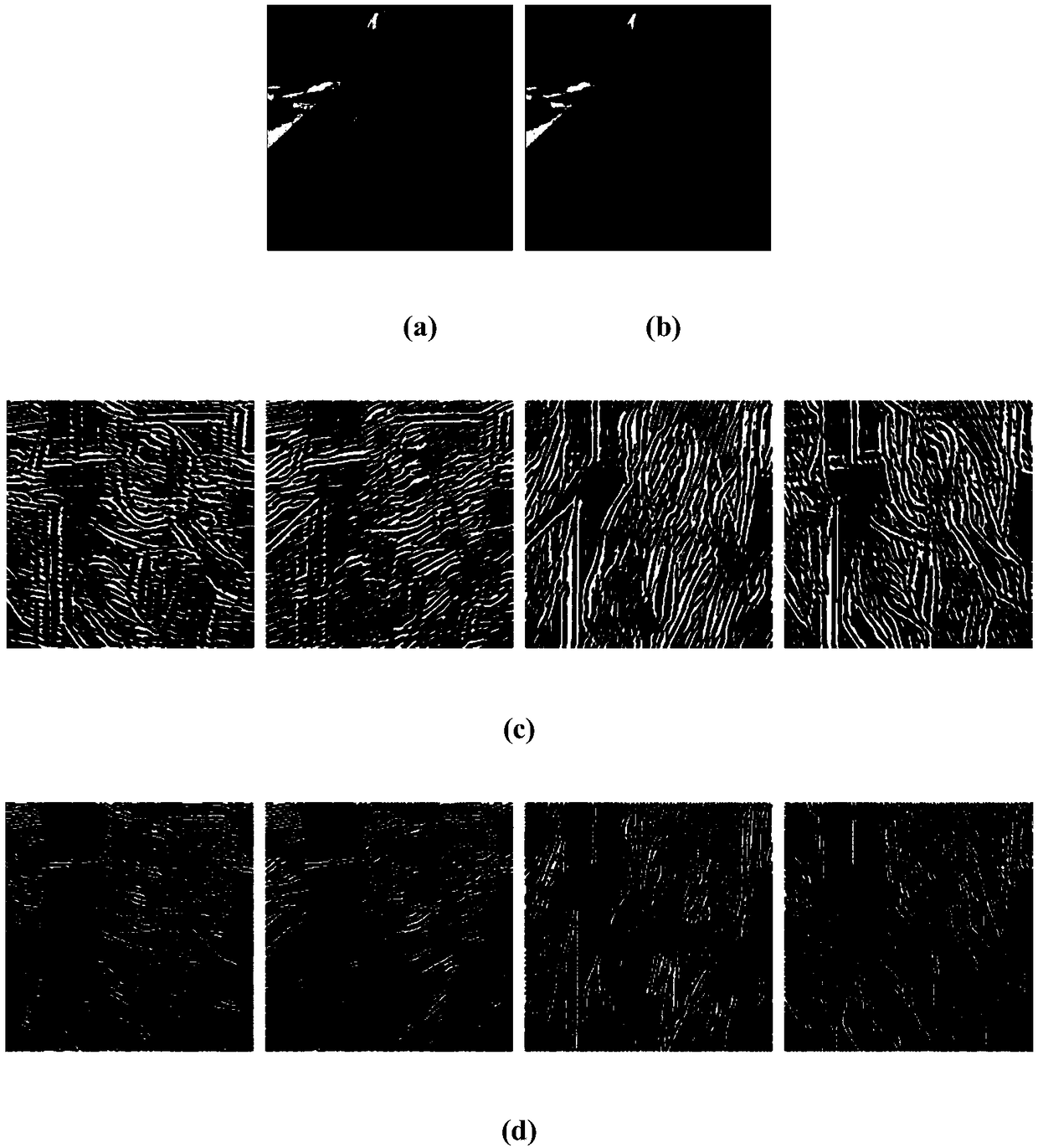

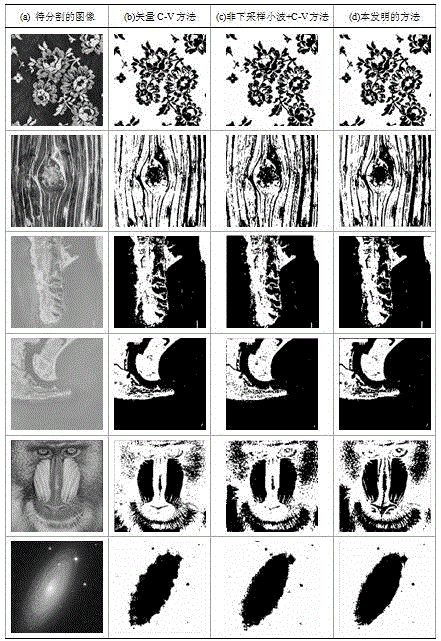

Image segmentation method based on non-down-sampling shearlet conversion and vector C-V model

Inactive | CN105608705A | Resolve aliasing | Take full advantage of segmentation performance | Image analysis | Pattern recognition | Decomposition

The invention, which belongs to the image processing field, puts forward an image segmentation method based on non-down-sampling shearlet conversion and a vector C-V model. According to the method, multi-level decomposition is carried out on a given image by using non-down-sampling shear waves to obtain low-frequency and high-frequency components at different directions; the components are sent to a vector C-V model; a series of evolving curves distributed uniformly are initialized on the overall image; and an obtained energy functional is minimized to obtain a segmentation result. With the method, the segmentation effect is obvious; and objective evaluation quality and the visual effect are good.

Owner:LIAONING NORMAL UNIVERSITY

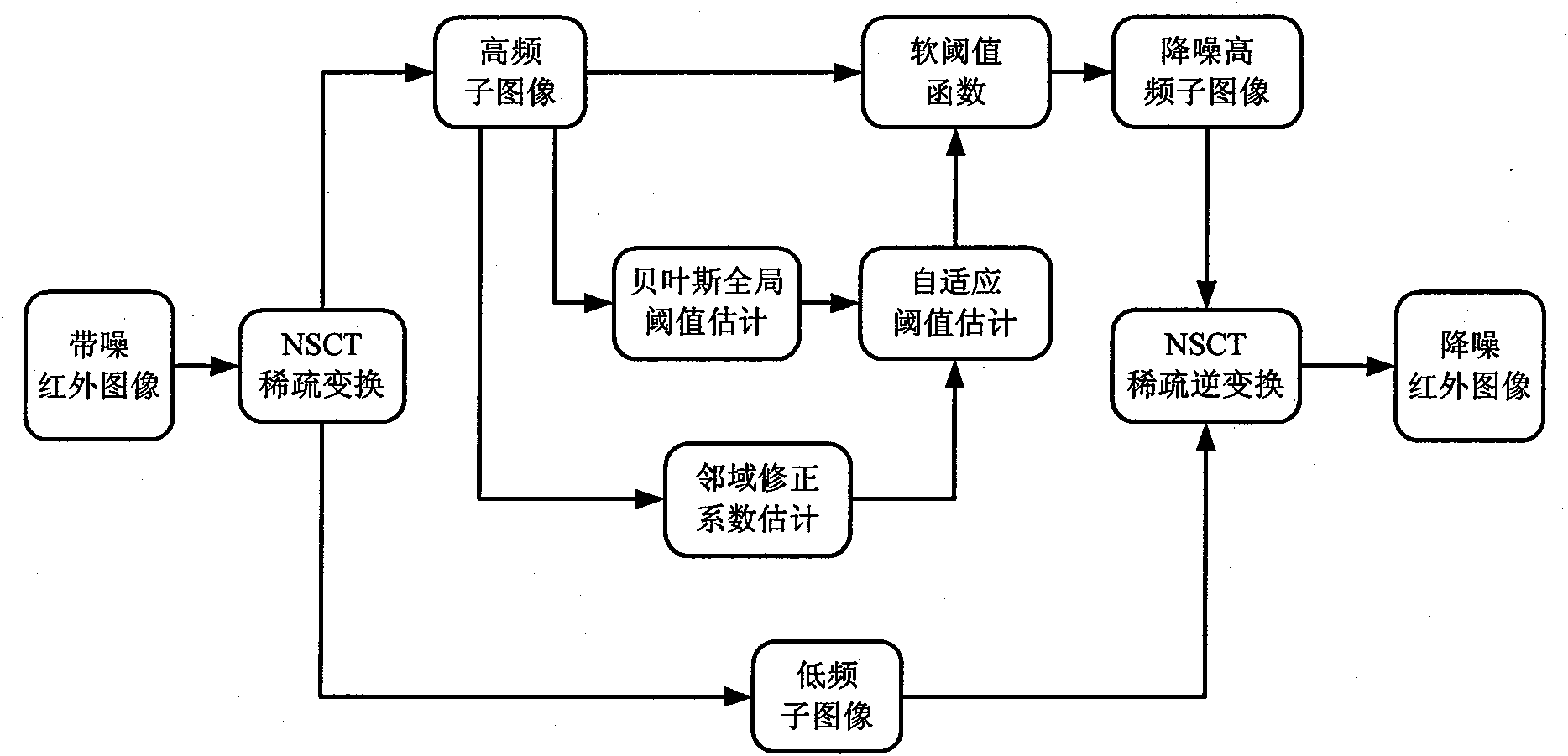

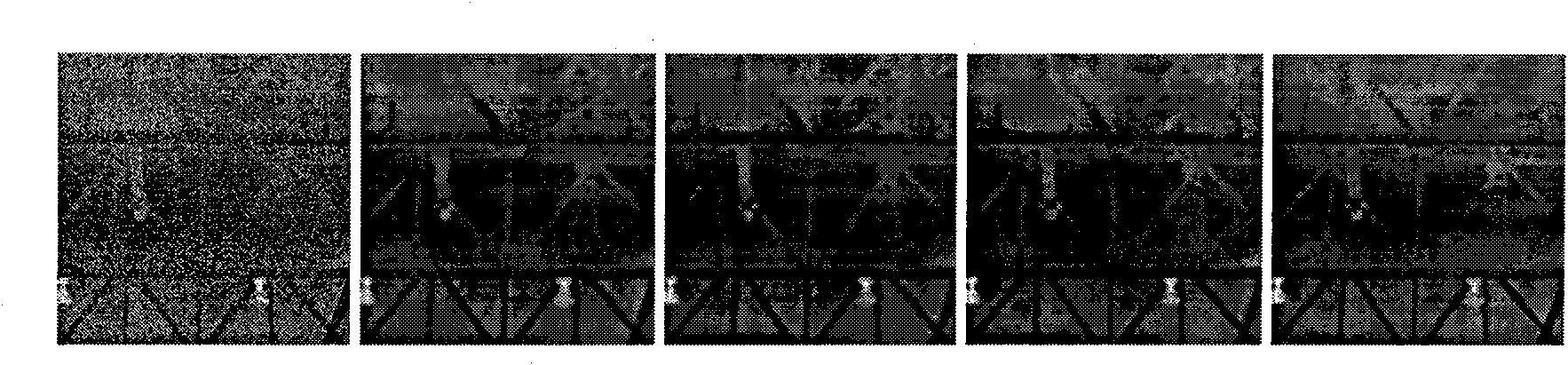

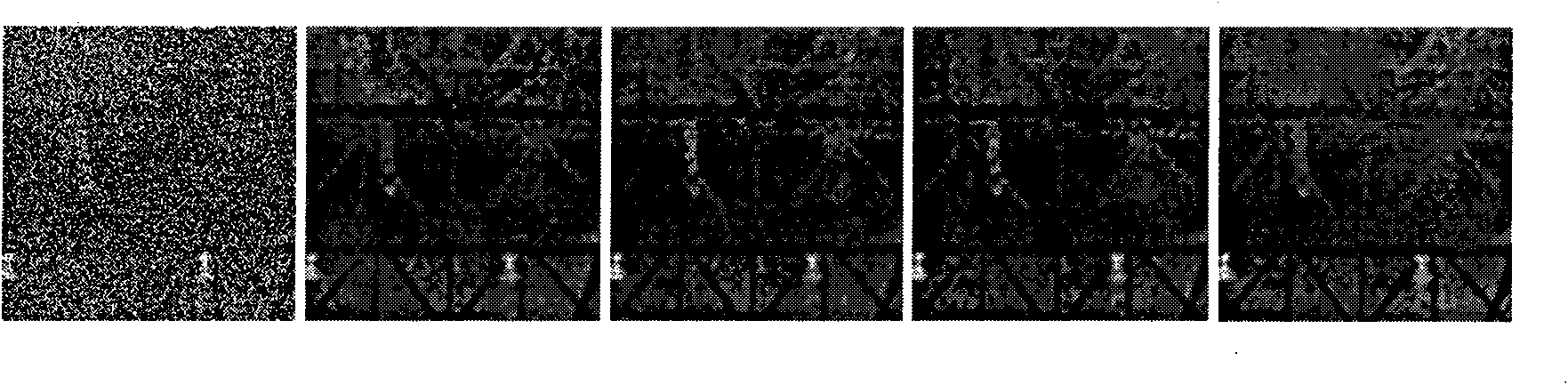

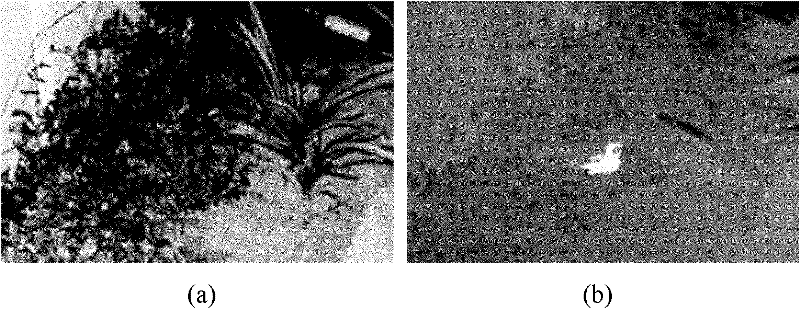

Transform domain neighborhood self-adapting image denoising method for detecting fire accident

Active | CN101930590A | Reduce noise level | Quality improvement | Image enhancement | Pattern recognition | Noise level

The invention discloses a transform domain neighborhood self-adapting image denoising method for detecting a fire accident, comprising the following steps: firstly performing sparse decomposition to a noise infrared fire accident detection image by non-sampling Contourlet with a plurality of sizes and directions, using a Laplace distribution according to the decomposed high frequency images, using a Bayes evaluating method to evaluate the overall best threshold value; using a neighborhood static characteristic to modify the overall threshold value, and getting a self-adapting threshold value of each pixel point of the high frequency image, processing the threshold value of the high frequency image, removing the noise content in the image, using non-sampling Contourlet split conversion to get the pre-denoising image and reach the denoising effect. The method can effectively reduce the noise level of the infrared fire detection image and increase the quality of the fire detection image.

Owner:SHANGHAI FIRE RES INST OF THE MIN OF PUBLIC SECURITY +1
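The abstract above does not give its threshold formula. As a hedged illustration of the general idea only, the sketch below uses one well-known closed form: for a zero-mean Laplace prior on the clean coefficients and Gaussian noise, the MAP estimate is soft thresholding with threshold sqrt(2) * sigma_n^2 / sigma_x. The function names, the sample values, and the use of this particular formula are assumptions. The patent's neighborhood-adaptive correction is not reproduced.

```python
import math


def laplace_map_threshold(noise_sigma, signal_sigma):
    """Soft-threshold level for a Laplace prior with Gaussian noise (assumed model)."""
    return math.sqrt(2) * noise_sigma ** 2 / signal_sigma


def soft_threshold(coeffs, t):
    """Shrink each coefficient toward zero by t; coefficients below t become zero."""
    return [math.copysign(max(abs(c) - t, 0.0), c) for c in coeffs]


# Hypothetical high-frequency coefficients and noise/signal standard deviations.
high_freq = [3.0, -3.0, 0.5, -0.2]
t = laplace_map_threshold(noise_sigma=1.0, signal_sigma=2.0)
print(t, soft_threshold(high_freq, t))
```

A per-pixel adaptive variant would replace the single `signal_sigma` with a local estimate computed from each coefficient's neighborhood.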

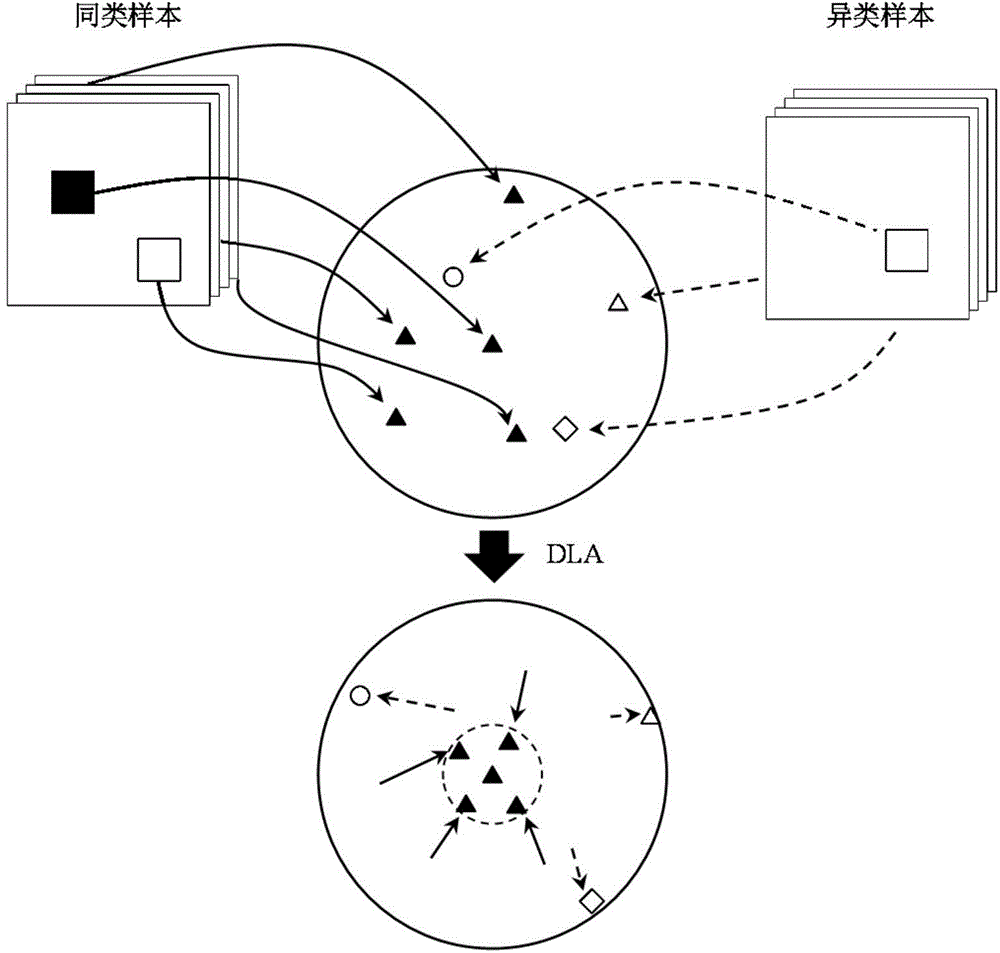

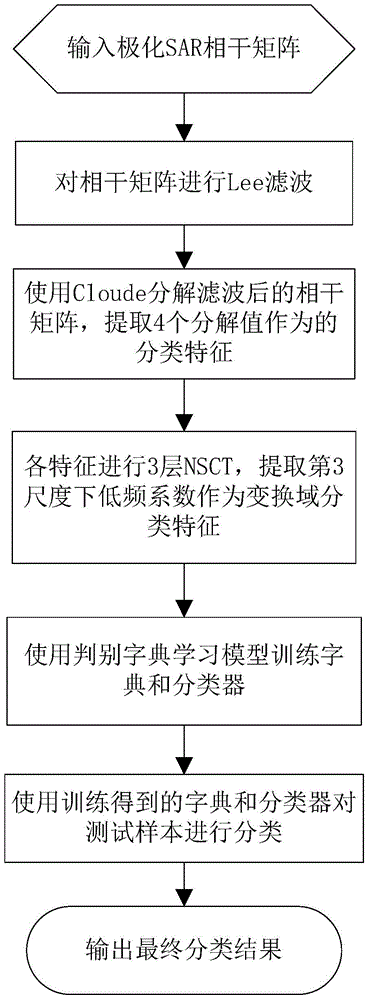

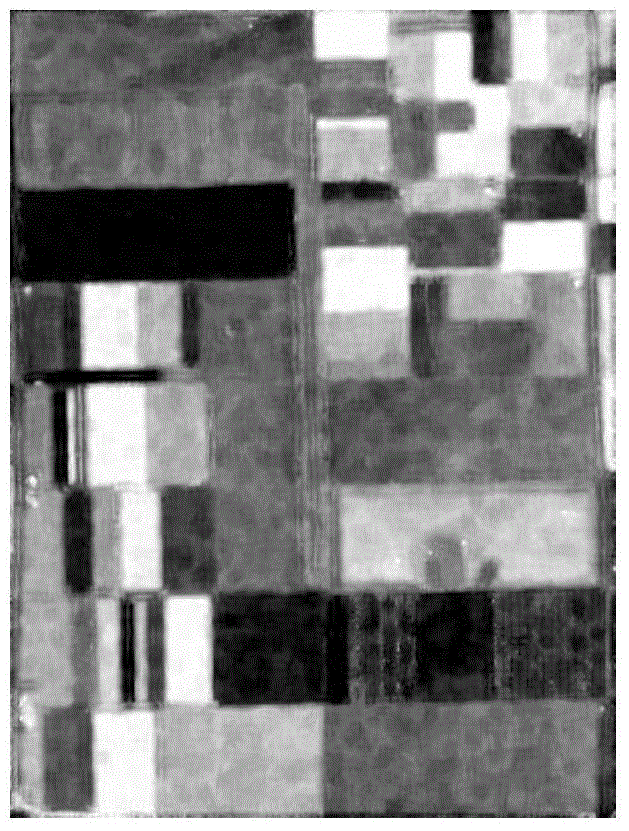

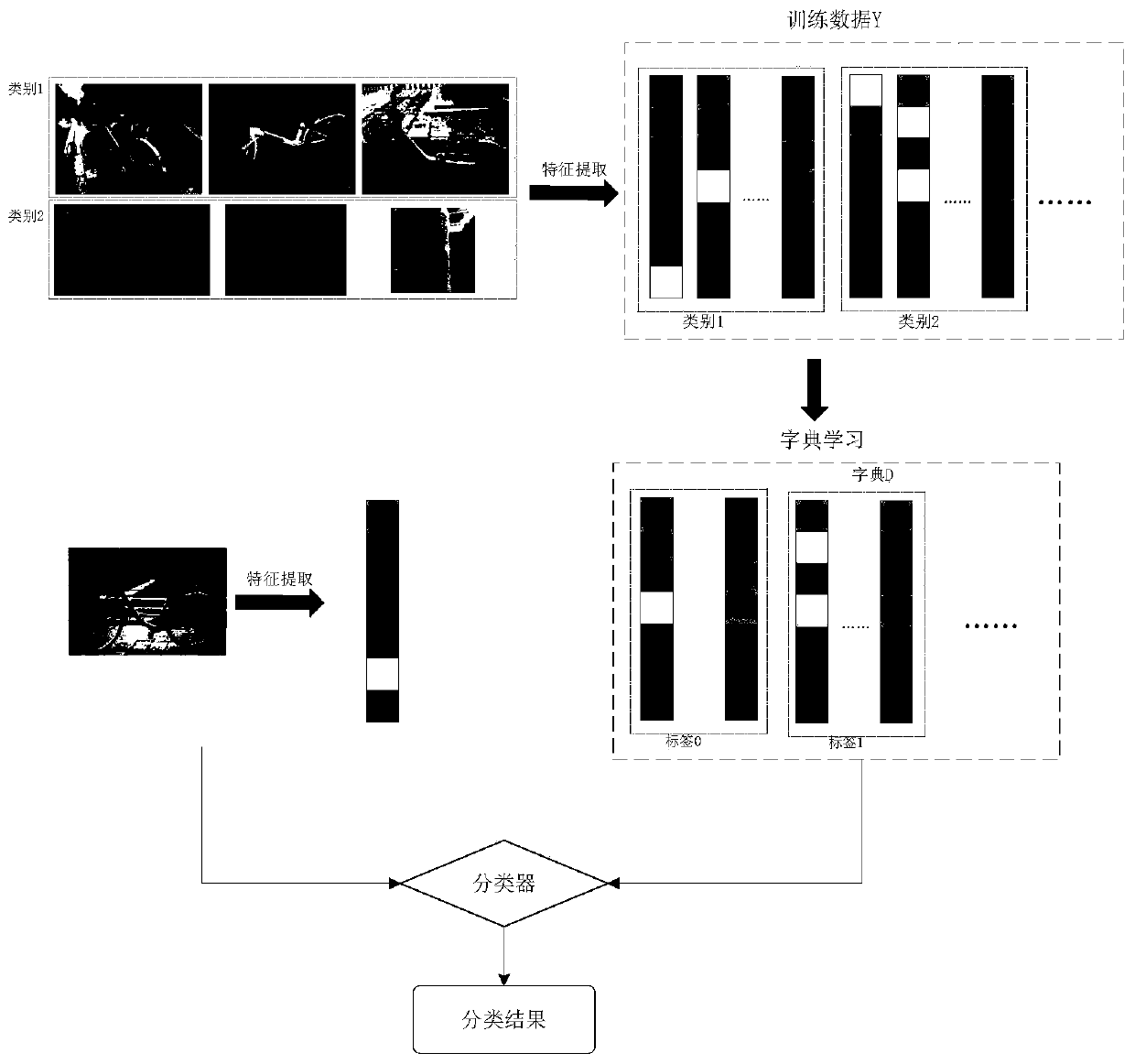

Polarimetric SAR classification method on basis of NSCT and discriminative dictionary learning

Active | CN104680182A | Reduce computational complexity | Easy to understand | Character and pattern recognition | Dictionary learning | Imaging processing

The invention discloses a polarimetric SAR classification method on the basis of NSCT and discriminative dictionary learning and mainly solves the problems of low classification accuracy and low classification speed of an existing polarimetric SAR image classification method. The polarimetric SAR classification method comprises the following implementing steps: 1, acquiring a coherence matrix of a polarimetric SAR image to be classified and carrying out Lee filtering on the coherence matrix to obtain the de-noised coherence matrix; 2, carrying out Cloude decomposition on the de-noised coherence matrix and using three non-negative feature values of decomposition values and a scattering angle as classification features; 3, carrying out three-layer NSCT on the classification features and using a transformed low-frequency coefficient as a transform domain classification feature; 4, using the transform domain classification feature and combining a discriminative dictionary learning model to train a dictionary and a classifier; 5, using the dictionary and the classifier, which are obtained by training, to classify a test sample so as to obtain a classification result. The polarimetric SAR classification method improves classification accuracy and increases a classification speed and is suitable for image processing.

Owner:XIDIAN UNIV

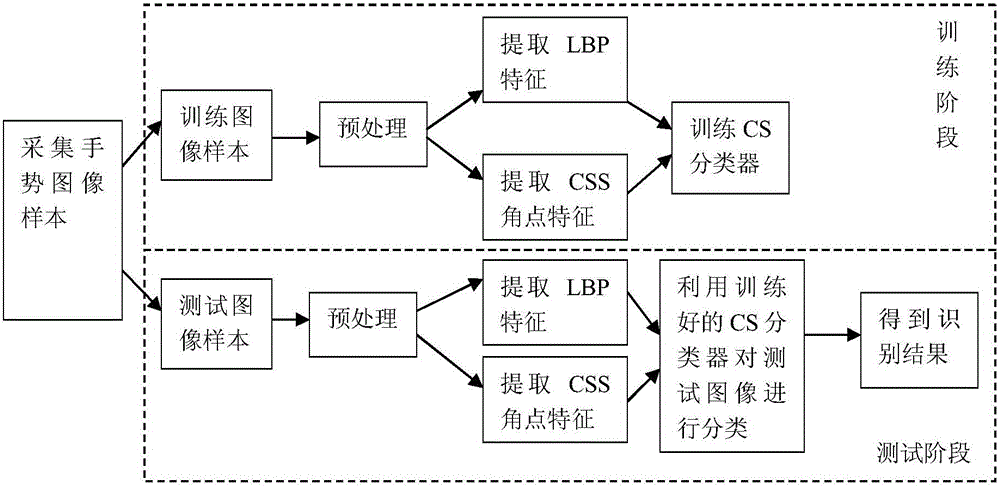

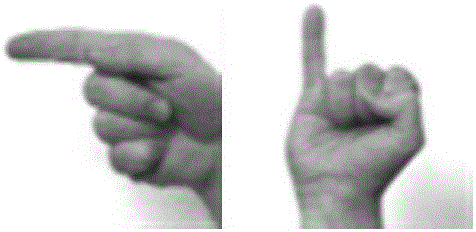

Visual sense based static gesture identification method

Inactive | CN105760828A | Improve recognition rate | Improve accuracy | Character and pattern recognition | Angular point | Vision based

The invention relates to a visual sense based static gesture identification method. The method comprises a training stage and a testing stage. In the training stage, a training image is preprocessed, an LBP feature and a CSS angular point feature are extracted from the training image, the extracted features are fused, and a classifier designed on the basis of compressive sensing theories is trained. In the testing stage, a shot gesture image is preprocessed, an LBP feature and a CSS angular point feature are extracted from the test image, the two features are fused, and the trained classifier is used for classified identification. According to the invention, two features are fused, the classifier is designed via the compressive sensing theories, disadvantages of single feature can be overcome, and the gesture identification rate is improved.

Owner:SHANDONG UNIV

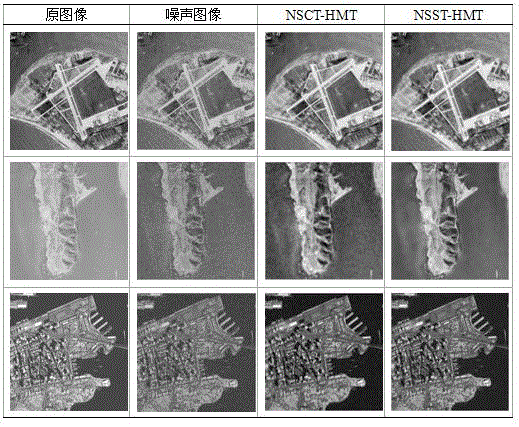

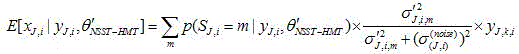

Remote sensing image de-noising method based on shearing-wave-domain hidden markov tree model

Inactive | CN105894462A | Overcome the problem of aliasing | Translation invariant | Image enhancement | Multiscale decomposition | Pattern recognition

The invention, which belongs to the image processing field, provides a remote sensing image de-noising method based on a shearing-wave-domain hidden Markov tree model. Multi-direction and multi-scale decomposition is carried out on an image by using non-down-sampling shearing wave transform to obtain a sparse expression of the image; and modeling is carried out on transform coefficient distribution rules of the image and the noise by using a hidden Markov tree model. Therefore, the frequency aliasing problem common to existing frequency domain de-noising algorithms can be solved, and complicated detailed texture information in an image can be protected well during the de-noising process.

Owner:LIAONING NORMAL UNIVERSITY

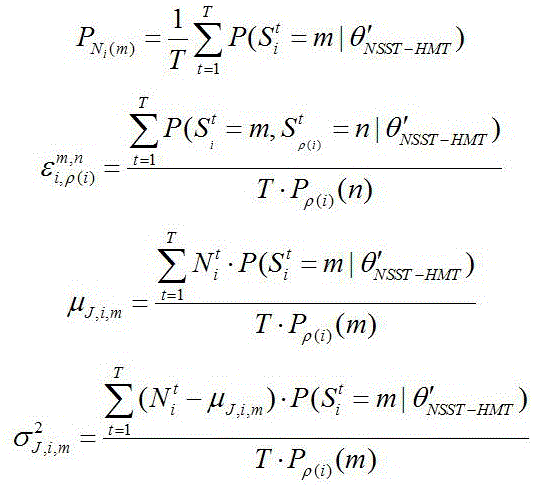

Urban management case image recognition method based on dictionary learning

Active | CN111507413A | Effectively distinguish between different case categories | Translation invariant | Data processing applications | Climate change adaptation | Dictionary learning | Engineering

The invention relates to an urban management case image recognition method based on dictionary learning. The method comprises the following steps: firstly, uploading urban management case images and monitoring video screenshots of various types of cases to a cloud library, compressing the collected cases through a compression technology to reduce redundant information, and then transmitting and storing the compressed cases; extracting contour features of case sample images, and using training sample features to construct a dictionary model; adding a sample label into the dictionary, and classifying the urban management cases by the dictionary through an added linear discriminant; and finally, after the urban management cases are classified, reporting and auditing the case types, and sending the case types to workers in the region in time. The working efficiency is improved, and intelligent urban case management is realized.

Owner:JIYUAN VOCATIONAL & TECHN COLLEGE

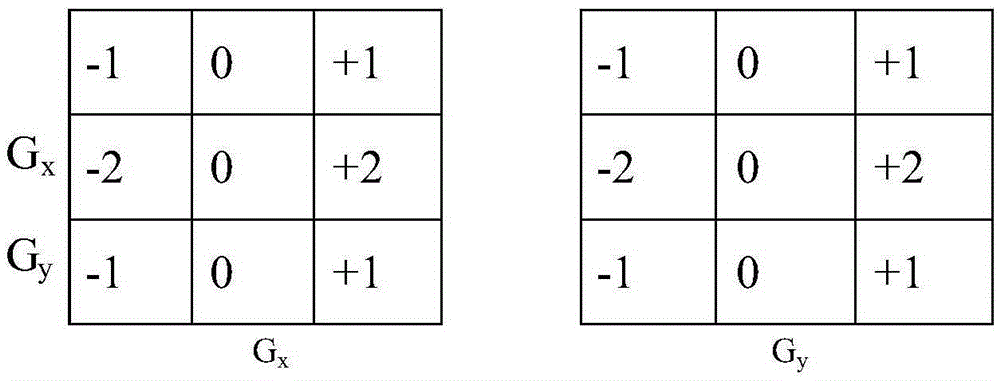

Gauss noise variance estimation method based on NSCT and PCA

Inactive | CN105303538A | Little effect on image details | Reduce the impact | Image enhancement | Image analysis | Pattern recognition | Decomposition

A Gauss noise variance estimation method based on NSCT and PCA belongs to the technical field of variance estimation. According to the method, a raw image with Gauss noise is firstly subjected to NSCT decomposition, and a low-pass filtering image and a plurality of high-pass filtering images in different directions are obtained; a new image Y is obtained by subtracting the low-pass filtering image from the raw image, and the image Y contains the Gauss noise and high-frequency information of the raw image; then an edge detection algorithm is used for edge detection of the image Y, and detected edge positions are marked; edge position images are removed, and the noise variances of non-edge position images are estimated by means of a PCA method. The method is widely applicable, more precise, and more robust.

Owner:NORTH CHINA ELECTRIC POWER UNIV (BAODING)

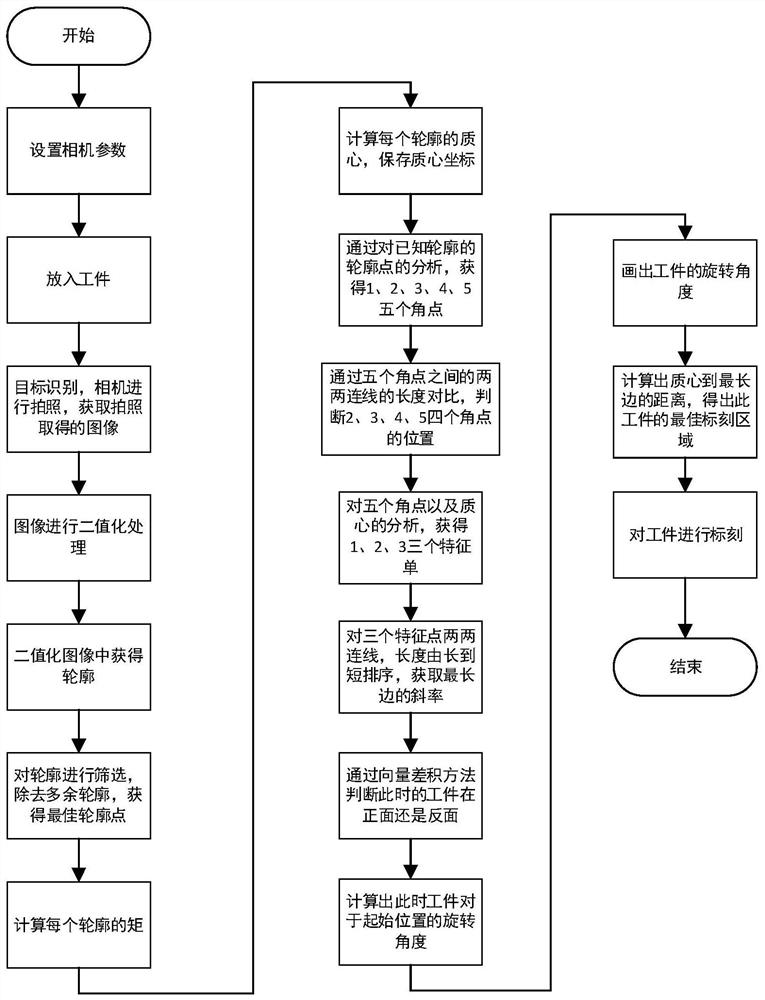

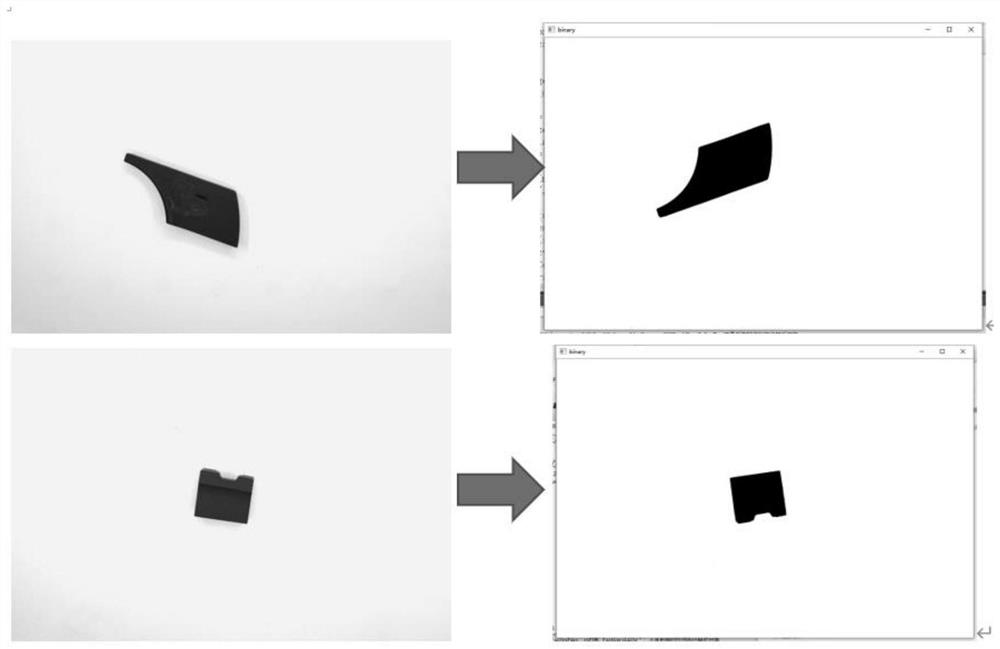

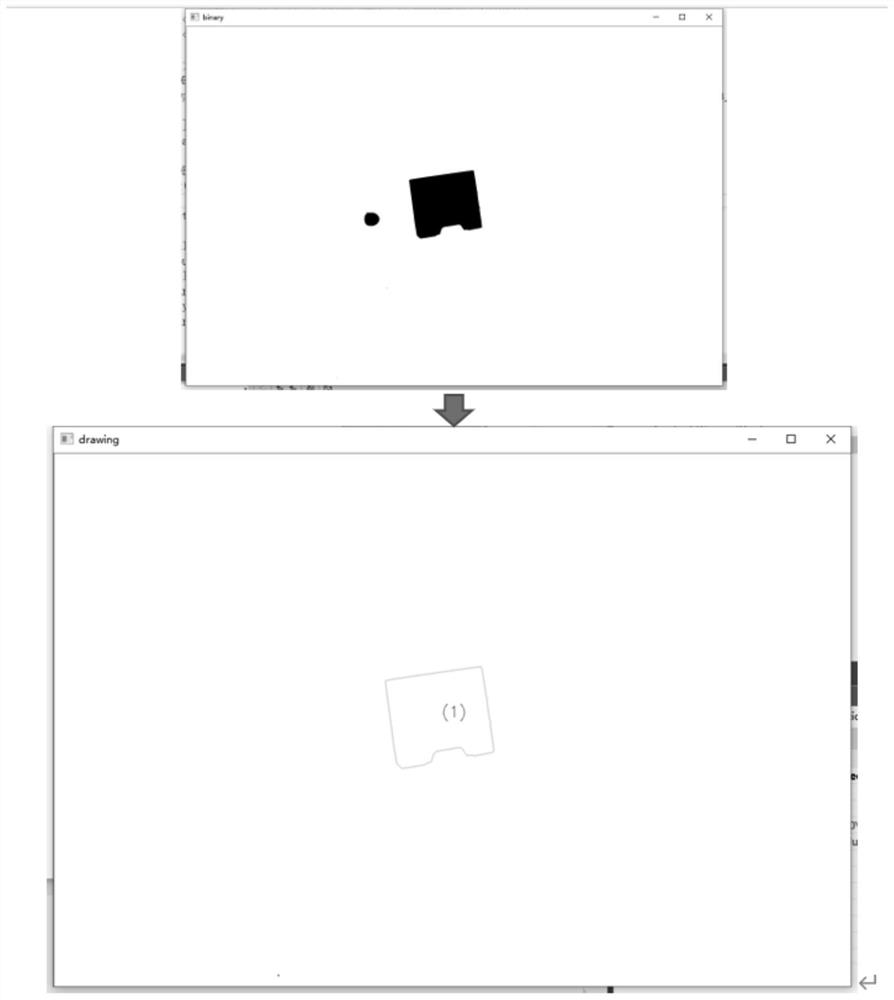

Laser marking system and method based on feature point extraction algorithm detection

Active | CN111832659A | Low latitude | Efficient extraction | Character and pattern recognition | Manufacturing computing systems | Image extraction | Feature parameter

The invention belongs to the technical field of image recognition, and discloses a laser marking system and method based on feature point extraction algorithm detection. The method includes extracting an unclosed contour from the to-be-identified workpiece image; obtaining coordinates of all contour points on the contour; calculating an area parameter of each contour; screening out the optimal contour through the area; calculating the moment and the centroid of the contour; and finally, extracting the needed significant feature points through the relation between the contour points and the centroid, so that the shape of the workpiece to be identified is effectively extracted and expressed. The relation between the feature points and the centroid is calculated to determine the effective marking area, and the final marking precision is improved. Moreover, the dimensionality of the used characteristic parameters is low, and the invention can guarantee higher recognition accuracy and efficiency at the same time. The invention has excellent properties such as translation invariance and rotation invariance while effectively extracting and representing the shape characteristics of the workpiece, and can effectively inhibit noise interference.

Owner:WUHAN TEXTILE UNIV
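A small sketch can show why features measured relative to the contour centroid are translation invariant. It assumes the contour is treated as a simple closed polygon and uses the shoelace formula for area and centroid. The patent's own area parameter and feature-point screening are not specified in the abstract, so both helper names are hypothetical.

```python
import math


def polygon_area_centroid(points):
    """Shoelace area and centroid of a simple polygon given as (x, y) vertices."""
    area2 = 0.0  # twice the signed area
    cx = cy = 0.0
    n = len(points)
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        area2 += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if area2 == 0:
        raise ValueError("degenerate contour with zero area")
    return abs(area2) / 2, (cx / (3 * area2), cy / (3 * area2))


def centroid_distances(points):
    """Distance from each contour point to the contour centroid."""
    _, (cx, cy) = polygon_area_centroid(points)
    return [math.hypot(x - cx, y - cy) for x, y in points]


square = [(0, 0), (2, 0), (2, 2), (0, 2)]
moved = [(x + 5, y - 3) for x, y in square]  # same shape, translated
print(polygon_area_centroid(square))
print(centroid_distances(square) == centroid_distances(moved))
```

Translating the workpiece moves the centroid by the same offset, so the point-to-centroid distances stay the same. Rotation leaves them unchanged as well, since distances are preserved.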

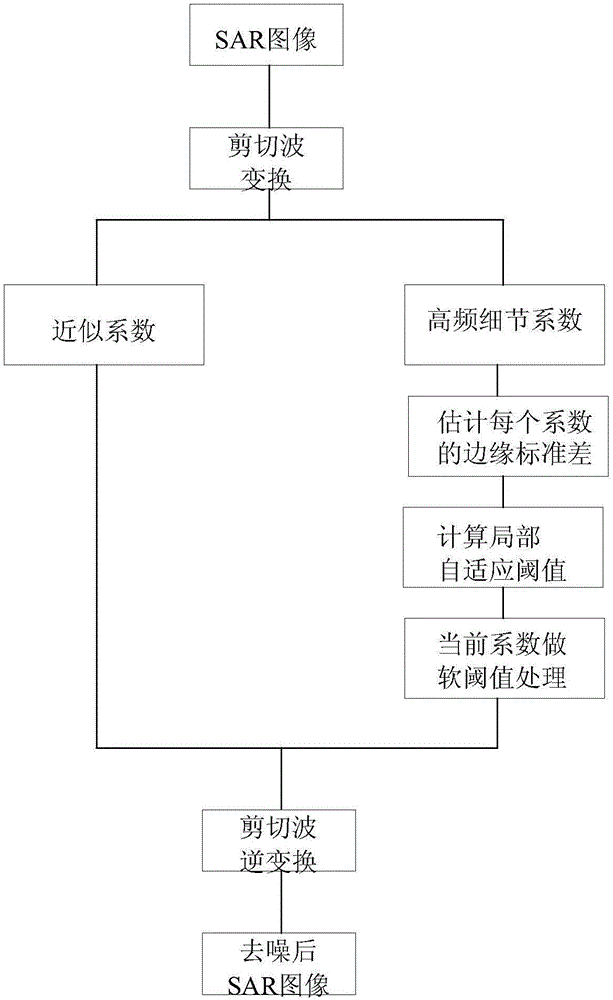

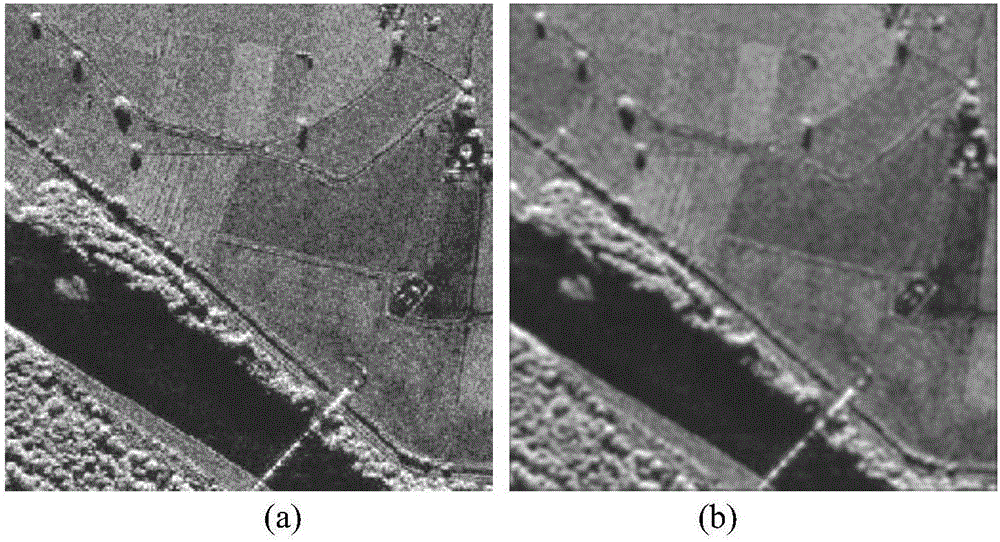

Synthetic aperture radar image de-noising method based on shear wave domain parameter estimation

Active | CN106408532A | Maintain stability | Directional | Image enhancement | Image analysis | Synthetic aperture radar | De noising

The invention relates to a synthetic aperture radar (SAR) image de-noising method based on shear wave domain parameter estimation. In coherent speckle filtering processing for SAR images, the statistics features of speckle noises are described by using Rayleigh distribution. Since the mathematic expression of a Laplace distribution model is simple, the estimated parsing solution can be obtained in combination with Bayes theory. For shear wave coefficients representing backward scattering components, Laplace distribution is used to present the probability density function of the shear wave coefficients. The experiment shows that the de-noising method based on shear wave parameter estimation has obvious suppression effect on coherent speckle noise in SAR images, and better retains edge texture information in the images.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

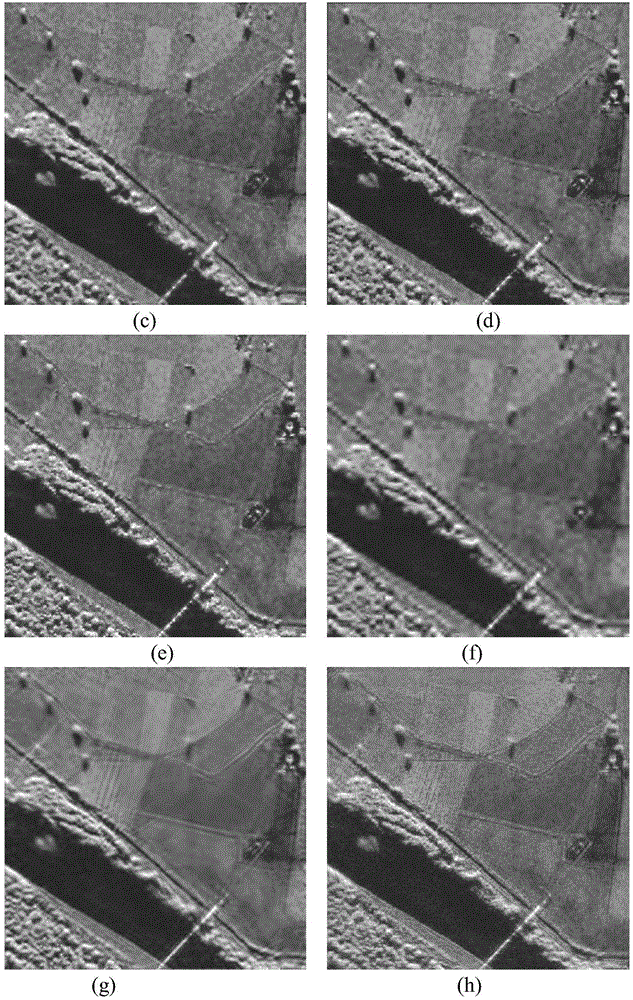

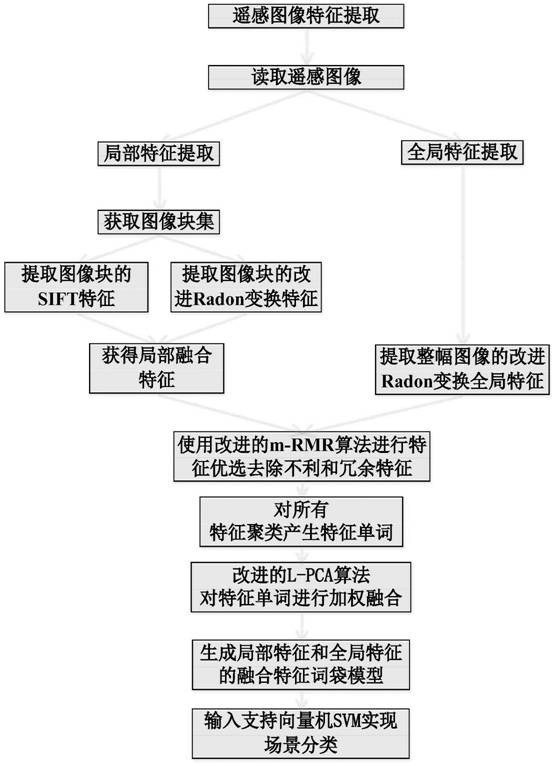

Remote sensing image scene classification method based on image transformation and BoF model

Active | CN112966629A | Solve space problems | Resolve location | Internal combustion piston engines | Scene recognition | Scale-invariant feature transform | Mutual information

The invention discloses a remote sensing image scene classification method based on image transformation and a BoF model, and the method comprises the steps of carrying out the partitioning processing of a remote sensing image, obtaining an image block set, carrying out the improved Radon transformation of all image block sets, carrying out the local feature extraction through combining the scale invariant feature transformation SIFT of the image block set, and obtaining a local fusion feature of the improved Radon transform feature and the SIFT feature; secondly, carrying out edge detection on the whole remote sensing image, and improving Radon transformation to obtain global features of the remote sensing image; then, using an improved m-RMR correlation analysis algorithm based on mutual information for carrying out feature optimization on the local fusion features and the global features, removing unfavorable and redundant features, clustering all the features to generate feature words, and using an improved PCA algorithm for carrying out weighted fusion on the feature words to obtain a fusion feature; obtaining a fusion feature word bag model of the local features and the global features; and finally, inputting a support vector machine (SVM) to generate a classifier and realizing remote sensing image scene classification.

Owner:EAST CHINA UNIV OF TECH

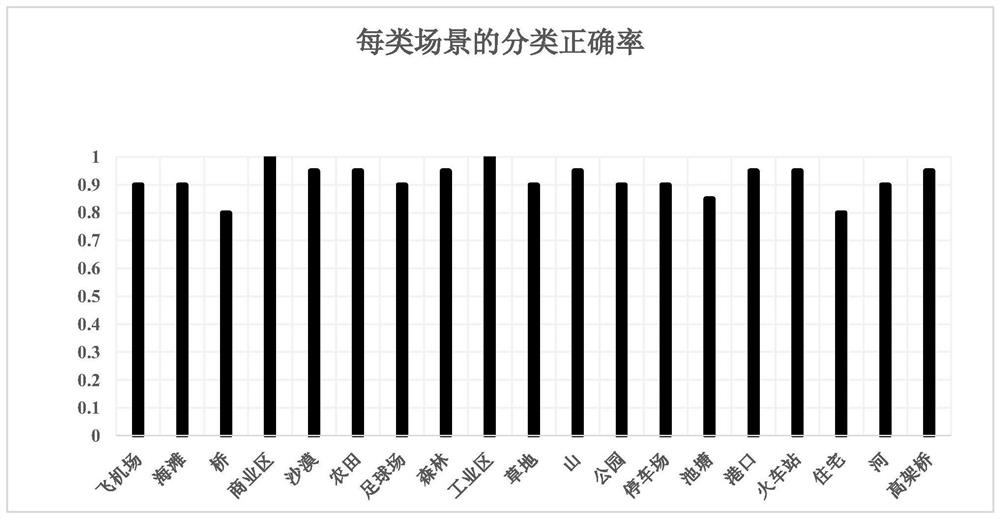

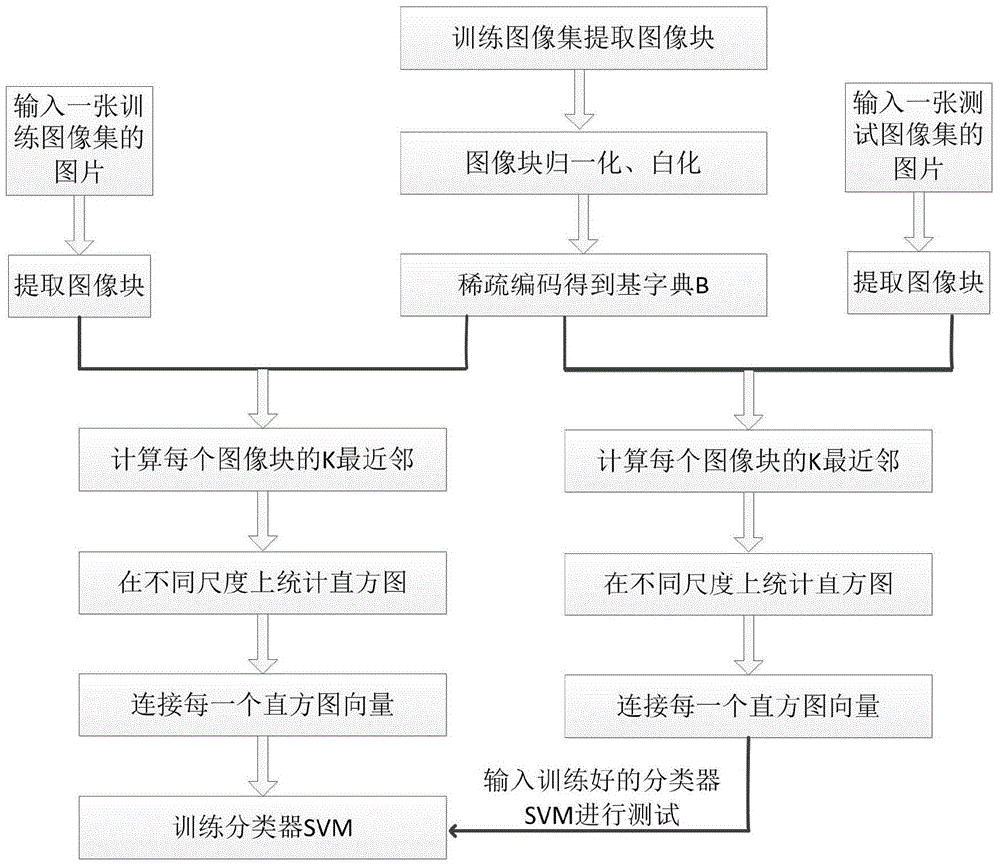

Large image classification method based on sparse coding K nearest neighbor histograms

Inactive | CN104361354A | Improve classification accuracy | Guaranteed classification accuracy | Character and pattern recognition | Information processing | Imaging processing

The invention provides a large image classification method based on sparse coding K nearest neighbor histograms, and belongs to the technical field of pattern recognition and information processing. According to the image feature expression provided by the method, histogram statistics is conducted on different scales, and the feature information of all regions of an image is captured to a great extent, so that the obtained image feature has translation invariance, and various deformed images can be distinguished effectively; the accuracy of a large image classification task is improved with image expression as concise as possible, the image expression is extremely concise in the image processing process, the computing complexity is low, and meanwhile, very high robustness is provided for image deformation.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

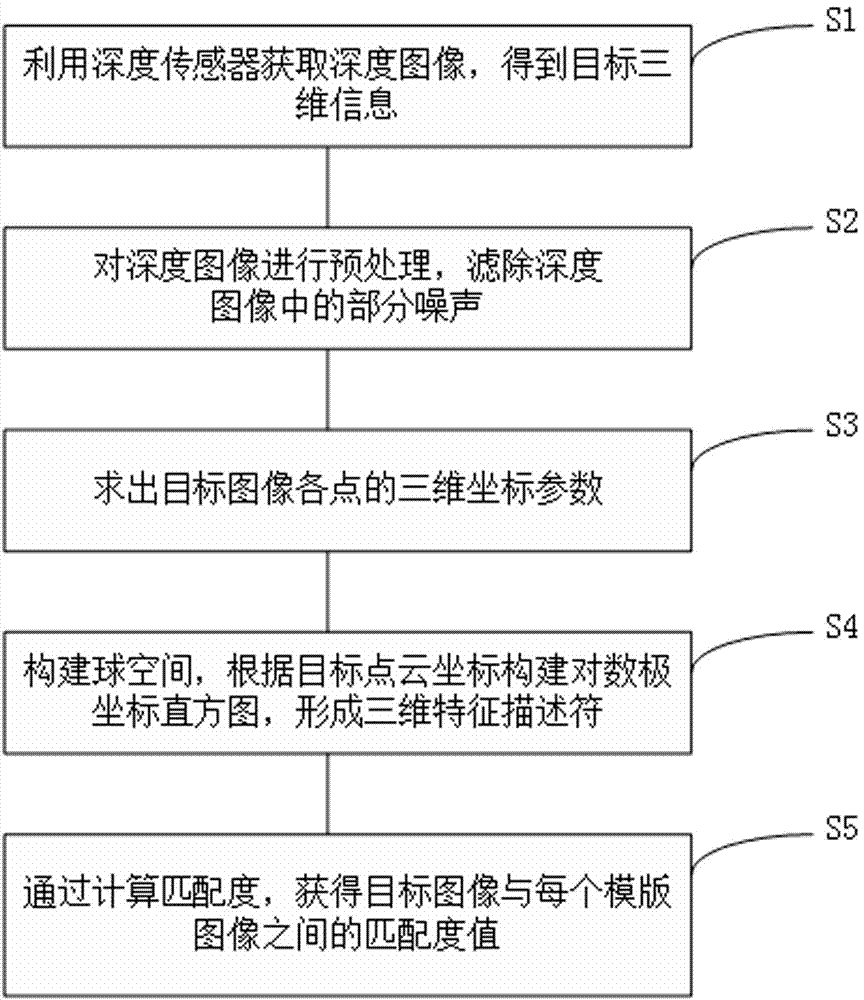

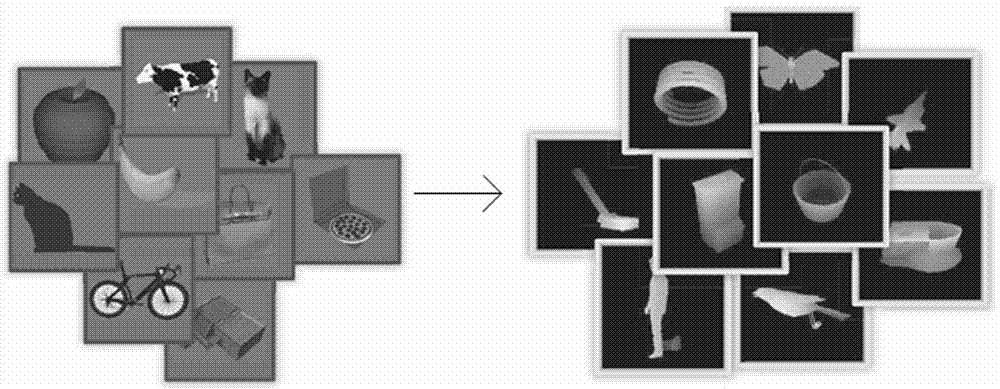

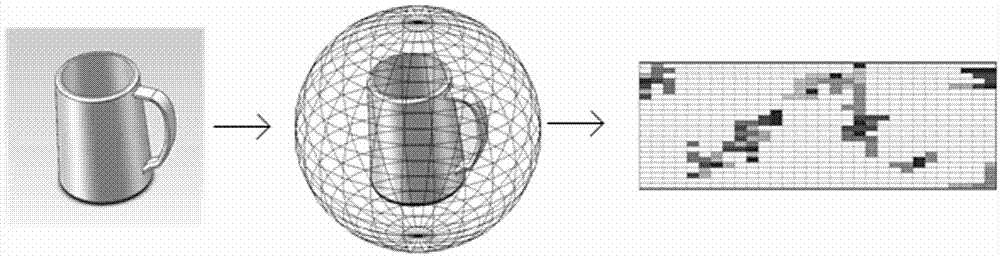

3D target identification method based on sphere space

Inactive | CN107273831A | Scale invariant | Rotation invariant | Three-dimensional object recognition | Point cloud | Scale invariance

The invention discloses a 3D target identification method based on a sphere space, comprising the following steps: acquiring a depth image through a depth sensor, and getting 3D information of a target; preprocessing the depth image, and removing part of noise in the depth image; calculating the 3D coordinate parameters of points in a target image; constructing a sphere space, constructing a log-polar histogram according to the point cloud coordinates of the target, and forming a 3D feature descriptor; and calculating the matching degree, and getting the matching degree values between different images. Features of image shape and depth information can be extracted and represented. The method has scale invariance, rotation invariance and translation invariance. The accuracy of identification is improved.

Owner:SUZHOU UNIV
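The log-polar histogram descriptor mentioned in this abstract can be sketched in a simplified 2-D form. The patent works in a 3-D sphere space. The planar version, the bin counts, the `log1p` radial scaling and the function name below are all assumptions chosen to keep the example short.

```python
import math


def log_polar_histogram(points, center, r_bins=3, theta_bins=4, r_max=None):
    """Count points in log-radius x angle bins around center (2-D simplification)."""
    offsets = [(x - center[0], y - center[1]) for x, y in points]
    radii = [math.hypot(dx, dy) for dx, dy in offsets]
    r_max = r_max or max(radii)
    hist = [[0] * theta_bins for _ in range(r_bins)]
    for (dx, dy), r in zip(offsets, radii):
        if r == 0 or r > r_max:
            continue  # skip the center itself and points outside the support
        # Logarithmic radial binning: finer bins near the center, coarser far away.
        r_idx = min(int(r_bins * math.log1p(r) / math.log1p(r_max)), r_bins - 1)
        theta = math.atan2(dy, dx) % (2 * math.pi)
        t_idx = min(int(theta_bins * theta / (2 * math.pi)), theta_bins - 1)
        hist[r_idx][t_idx] += 1
    return hist


cloud = [(1, 0), (0, 2), (-3, 0), (0, -4), (2, 2)]  # centroid is (0, 0)
moved = [(x + 10, y + 20) for x, y in cloud]        # centroid is (10, 20)
print(log_polar_histogram(cloud, (0, 0)) == log_polar_histogram(moved, (10, 20)))
```

Binning offsets from a reference point that moves with the object gives translation invariance. Scale and rotation invariance would additionally need radius normalization and a canonical orientation, which this sketch does not attempt.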

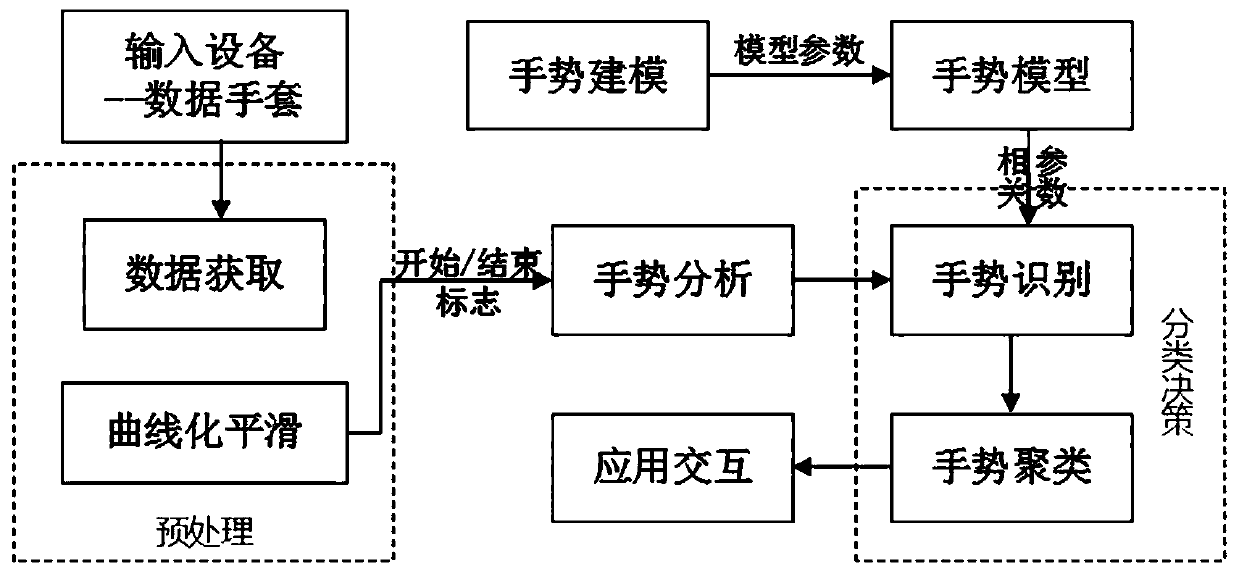

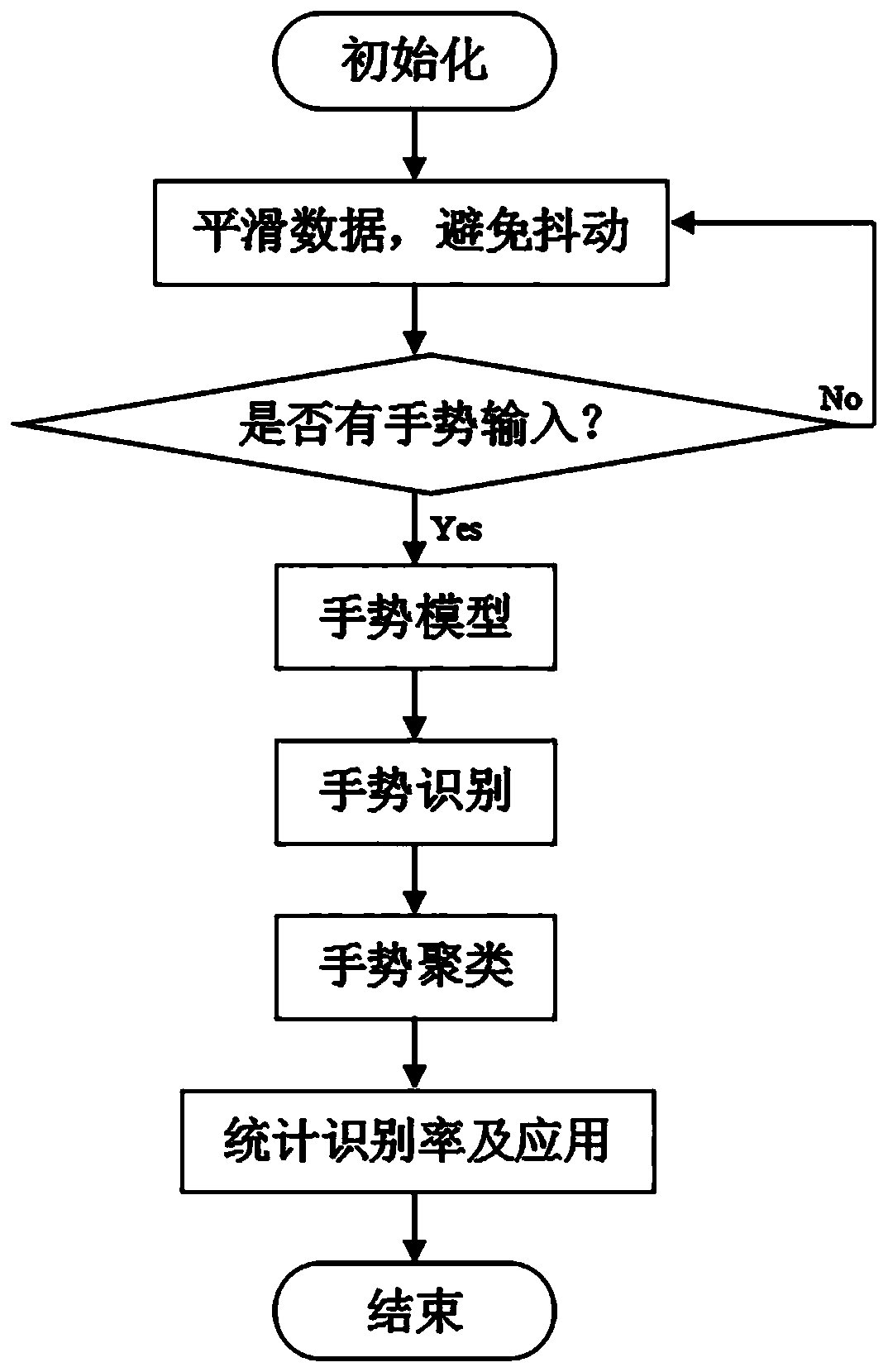

Micro-gesture recognition method

Active | CN110309726A | Accurate data | Low time complexity | Input/output for user-computer interaction | Character and pattern recognition | Template matching | Least squares

The invention provides a micro-gesture recognition method that takes a data glove as input equipment, wherein the data glove can obtain rotation angle data of m joints. The micro-gesture recognition method comprises the following steps: a, firstly, carrying out data preprocessing, and carrying out curve fitting processing on the obtained data by using a least square method; b, carrying out gesture modeling, so that each gesture is represented by a discrete sub-sequence SLZ containing m elements; and c, carrying out gesture recognition, and carrying out gesture matching and recognition by using an editing distance based on template matching. According to the micro-gesture recognition method, the recognition target shifts from traditional large-amplitude actions, such as those of palms and arms, to the fine actions of the fingers, and a more natural and harmonious solution is provided for human-computer interaction in a universal environment.

Owner:UNIV OF JINAN
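Step c, matching by edit distance against templates, can be sketched as follows. The abstract does not define how the SLZ sequence is encoded, so the string-encoded joint states and the template labels below are purely hypothetical. The distance itself is the standard Levenshtein distance.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences (insert, delete, substitute cost 1)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # delete x
                            curr[j - 1] + 1,      # insert y
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def recognize(sequence, templates):
    """Return the label of the template with the smallest edit distance."""
    return min(templates, key=lambda label: edit_distance(sequence, templates[label]))


# Hypothetical encoding: one symbol per joint, U = bent up, D = bent down, S = still.
templates = {"pinch": "UUDSS", "tap": "SDSSS", "spread": "DDUUU"}
print(recognize("UUDSD", templates))
```

With m joints per gesture the sequences are short, so the quadratic cost of the distance computation stays small for each template comparison.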

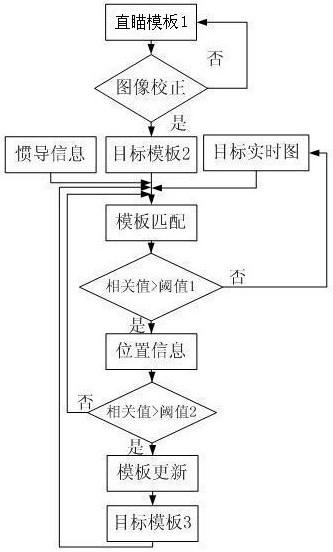

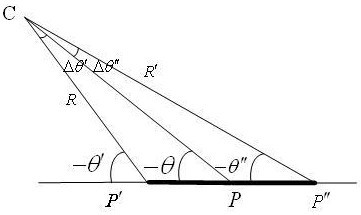

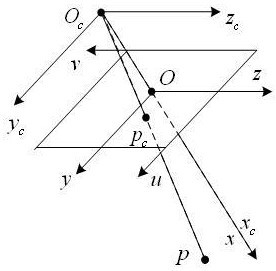

Full-strapdown image seeker target tracking method based on direct aiming template

Active | CN112489091A | Improve tracking accuracy | Narrowing down object tracking searches | Image enhancement | Image analysis | Translation invariance | Mutual information

The invention discloses a full-strapdown image seeker target tracking method based on a direct aiming template. The method corrects the target image using the direct aiming template and inertial navigation information so that its view angle and scale are consistent with the target images acquired by the full-strapdown image seeker, which gives high tracking precision. Fusing inertial navigation information with an image-based target memory tracking method narrows the target search range and addresses the problems of translation invariance, scale invariance and rotation invariance. Interference from similar targets is filtered out, and target tracking is handled under conditions such as nonlinear target maneuvering, short-time field of view, and occlusion. A hill-climbing search strategy is adopted with an improved mutual information entropy as the similarity measure, so the method requires little computation, resists interference well, and improves target tracking robustness.

Owner:湖南华南光电(集团)有限责任公司
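The search step in the abstract above (hill climbing with a mutual-information similarity measure) can be illustrated with a small sketch. Everything here is an assumption made for illustration: plain mutual information replaces the patent's unspecified "improved" variant, images are small integer grids, and `start` stands for a position predicted from inertial navigation data.

```python
import math
from collections import Counter

def mutual_information(a, b):
    """Mutual information in bits between two equal-length pixel sequences."""
    n = len(a)
    pa, pb, pab = Counter(a), Counter(b), Counter(zip(a, b))
    # Sum of p(x, y) * log2(p(x, y) / (p(x) * p(y))) over observed pairs.
    return sum((c / n) * math.log2(c * n / (pa[x] * pb[y]))
               for (x, y), c in pab.items())

def patch(frame, top, left, h, w):
    """Flatten the h-by-w window of frame whose top-left corner is (top, left)."""
    return [frame[top + i][left + j] for i in range(h) for j in range(w)]

def hill_climb(frame, template, start, max_steps=100):
    """Greedy 8-neighbour search for the window most similar to template."""
    h, w = len(template), len(template[0])
    flat_t = [v for row in template for v in row]
    rows, cols = len(frame), len(frame[0])

    def score(pos):
        top, left = pos
        if top < 0 or left < 0 or top + h > rows or left + w > cols:
            return -1.0  # outside the frame; mutual information is never negative
        return mutual_information(flat_t, patch(frame, top, left, h, w))

    pos, best = start, score(start)
    for _ in range(max_steps):
        neighbours = [(pos[0] + dy, pos[1] + dx)
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                      if (dy, dx) != (0, 0)]
        cand = max(neighbours, key=score)
        if score(cand) <= best + 1e-12:
            break  # no neighbour improves the score: local maximum reached
        pos, best = cand, score(cand)
    return pos, best
```

Hill climbing only finds a local maximum, which is presumably why the abstract pairs it with a narrowed search range: a good starting position keeps the greedy search near the true target.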

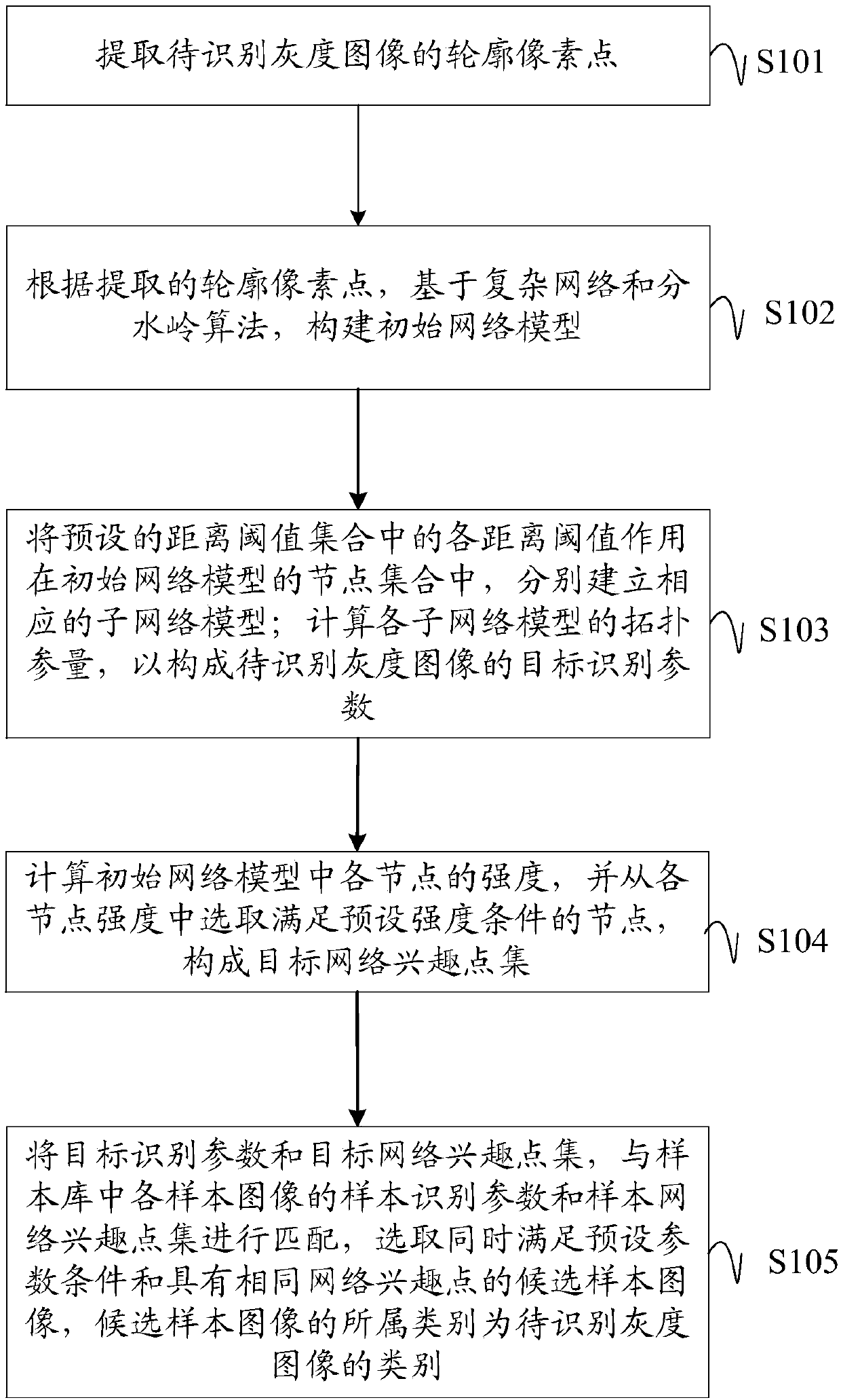

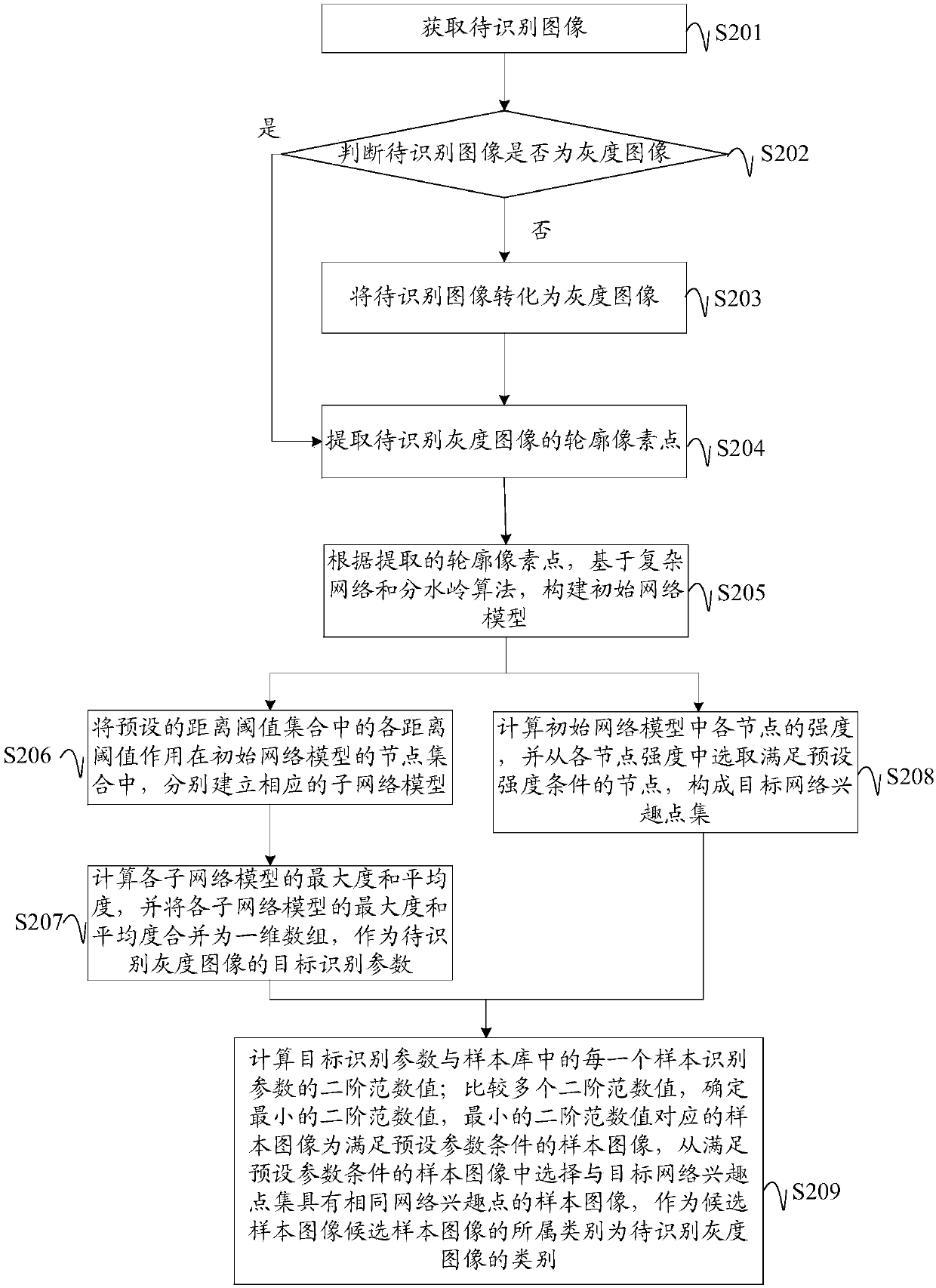

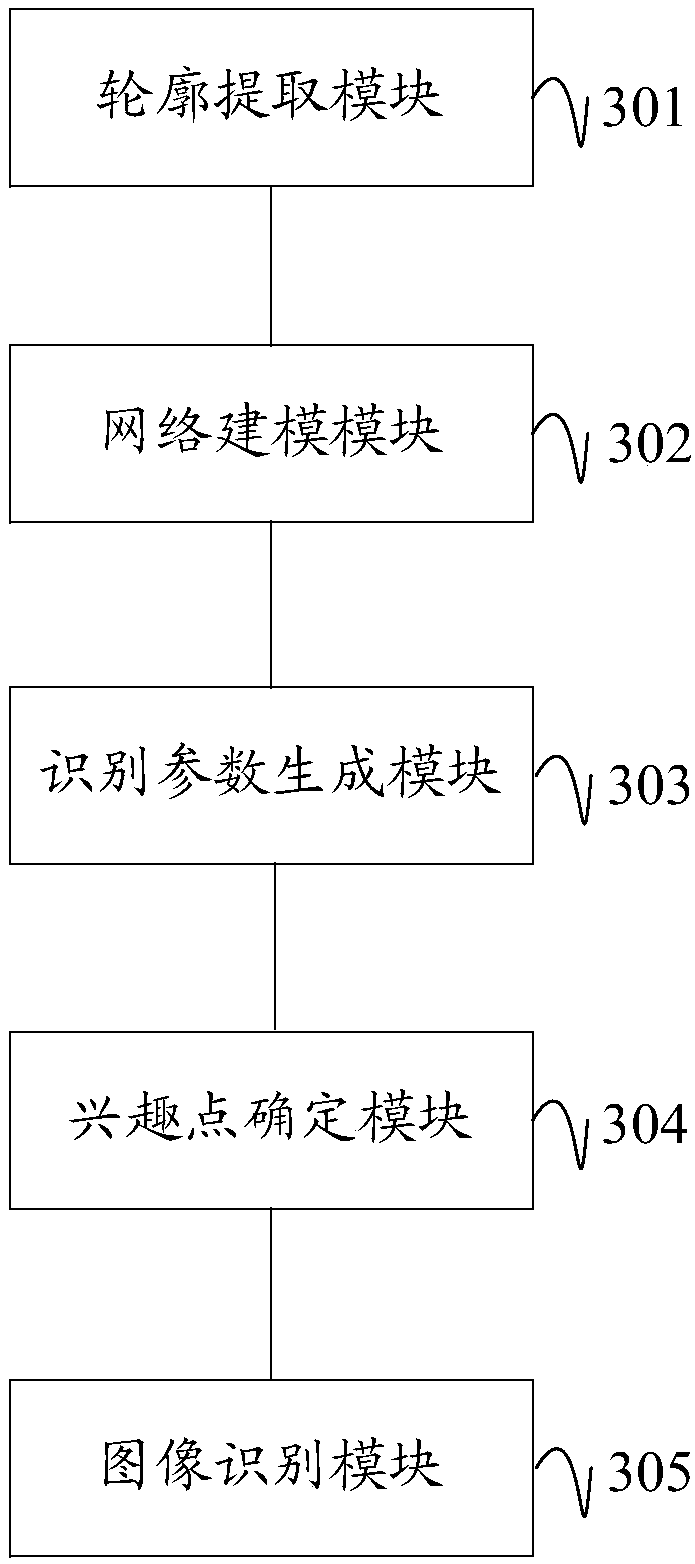

Grayscale image recognition method, device, apparatus and readable storage medium

Active | CN108256578A | Reduce the amount of modeling | Reduce processing difficulty | Character and pattern recognition | Value set | Goal recognition

The embodiments of the invention disclose a grayscale image recognition method, device, apparatus and readable storage medium. The method includes the following steps: an initial network model of the contour pixel points extracted from a grayscale image to be identified is established based on a complex network method and a watershed algorithm; each distance threshold value in a distance threshold value set is applied to the node set of the initial network model to establish a corresponding sub-network model; the topological parameters of the sub-network models are calculated to form the target recognition parameters of the grayscale image to be identified; the strength of each node in the initial network model is calculated, and nodes whose strength satisfies a preset strength condition are selected to form a target network interest point set; and a candidate sample image that satisfies a preset parameter condition and has the same network interest points is obtained from a sample library by matching against the target recognition parameters and the target network interest point set, the category of the candidate sample image being taken as the category of the grayscale image to be identified. The method, device, apparatus and readable storage medium can improve the recognition efficiency of grayscale images.

Owner:GUANGDONG UNIV OF TECH
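The network-building steps in the abstract above can be sketched as follows, under several assumptions that the abstract does not confirm: the initial network is fully connected with Euclidean distances as edge weights, the topological parameters are the mean and maximum degree, and the "preset strength condition" is a simple lower bound. Contour extraction with the watershed algorithm is not covered; the input is taken to be a list of contour points.

```python
import math

def initial_network(points):
    """Fully connected network; each edge weight is the Euclidean distance."""
    n = len(points)
    return {i: {j: math.dist(points[i], points[j]) for j in range(n) if j != i}
            for i in range(n)}

def sub_network(net, threshold):
    """Keep only the edges whose weight does not exceed the threshold."""
    return {i: {j: d for j, d in nb.items() if d <= threshold}
            for i, nb in net.items()}

def topology(net):
    """Assumed topological parameters: (mean degree, maximum degree)."""
    degrees = [len(nb) for nb in net.values()]
    return (sum(degrees) / len(degrees), max(degrees))

def recognition_parameters(points, thresholds):
    """One parameter tuple per distance threshold."""
    net = initial_network(points)
    return [topology(sub_network(net, t)) for t in thresholds]

def interest_points(points, min_strength):
    """Nodes whose strength (sum of edge weights) reaches min_strength."""
    net = initial_network(points)
    return [i for i, nb in net.items() if sum(nb.values()) >= min_strength]
```

Because every quantity depends only on pairwise distances, the parameters are unchanged when the whole contour is translated or rotated.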

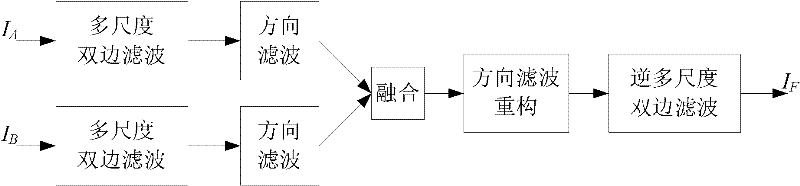

Multimodality image fusion method combining multi-scale bilateral filtering and direction filtering

Inactive | CN102005037B | Efficient capture | Easy to capture | Image enhancement | Information processing | Multiscale geometric analysis

The invention belongs to the technical field of information processing and particularly relates to a multimodality image fusion method combining multi-scale bilateral filtering and direction filtering. The invention is used to fuse images of the same scene or the same target taken by different sensors. The method comprises the following steps: first, multi-scale bilateral filtering is used to decompose each source image into a low-pass image and a series of high-pass images; second, direction filtering is applied to the high-pass images to obtain directional sub-band images, and the low-pass images and the directional sub-band images are then fused separately according to a certain fusion rule to obtain a fused low-pass image and fused directional sub-band images; and finally, the fused image is obtained through direction filtering reconstruction and inverse multi-scale bilateral filtering. The method achieves a better fusion effect than traditional multi-scale geometric analysis methods, and the quality of the fused image is greatly increased.

Owner:HUNAN UNIV
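The decompose, fuse and reconstruct pipeline in the abstract above can be illustrated in one dimension. This is a heavily simplified sketch, not the patented method: it works on 1D signals, omits direction filtering entirely, and assumes a fusion rule (average the low-pass parts, keep the larger-magnitude high-pass coefficient) that the abstract leaves unspecified. All function names and parameter values are hypothetical.

```python
import math

def bilateral_1d(signal, radius, sigma_s, sigma_r):
    """Edge-preserving smoothing: weights fall off with distance and with value difference."""
    out = []
    for i, centre in enumerate(signal):
        num = den = 0.0
        for j in range(max(0, i - radius), min(len(signal), i + radius + 1)):
            w = math.exp(-((i - j) ** 2) / (2 * sigma_s ** 2)
                         - ((signal[j] - centre) ** 2) / (2 * sigma_r ** 2))
            num += w * signal[j]
            den += w
        out.append(num / den)
    return out

def decompose(signal, levels, sigma_s=1.0, sigma_r=0.5):
    """Split a signal into one low-pass part and `levels` high-pass parts."""
    highs, current = [], list(signal)
    for k in range(levels):
        smooth = bilateral_1d(current, 2 ** (k + 1), sigma_s * 2 ** k, sigma_r)
        highs.append([c - s for c, s in zip(current, smooth)])
        current = smooth
    return current, highs

def reconstruct(low, highs):
    """Inverse of decompose: add every high-pass part back to the low-pass part."""
    out = list(low)
    for h in highs:
        out = [o + x for o, x in zip(out, h)]
    return out

def fuse(sig_a, sig_b, levels=2):
    """Assumed rule: average the low-pass parts, keep the stronger high-pass detail."""
    low_a, highs_a = decompose(sig_a, levels)
    low_b, highs_b = decompose(sig_b, levels)
    low = [(a + b) / 2 for a, b in zip(low_a, low_b)]
    highs = [[x if abs(x) >= abs(y) else y for x, y in zip(ha, hb)]
             for ha, hb in zip(highs_a, highs_b)]
    return reconstruct(low, highs)
```

Since each high-pass part is the exact difference between successive smoothing levels, reconstruction recovers the input up to floating-point rounding, which is what makes the decomposition usable for fusion.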

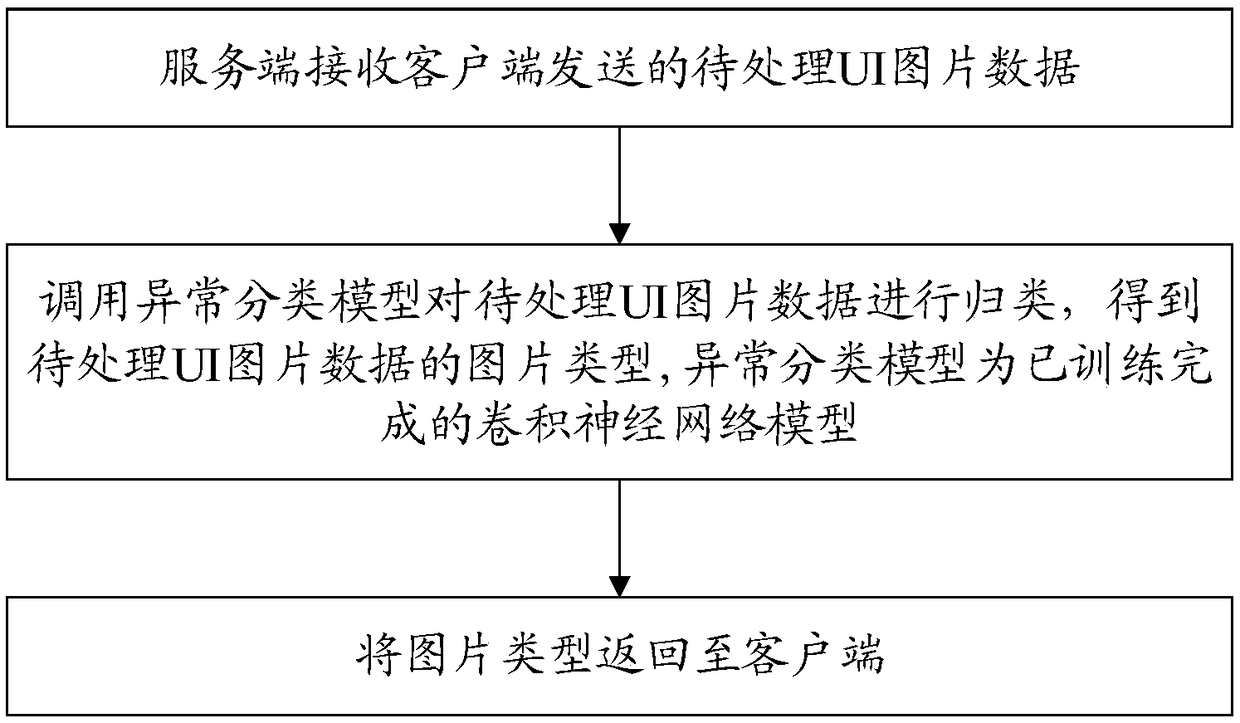

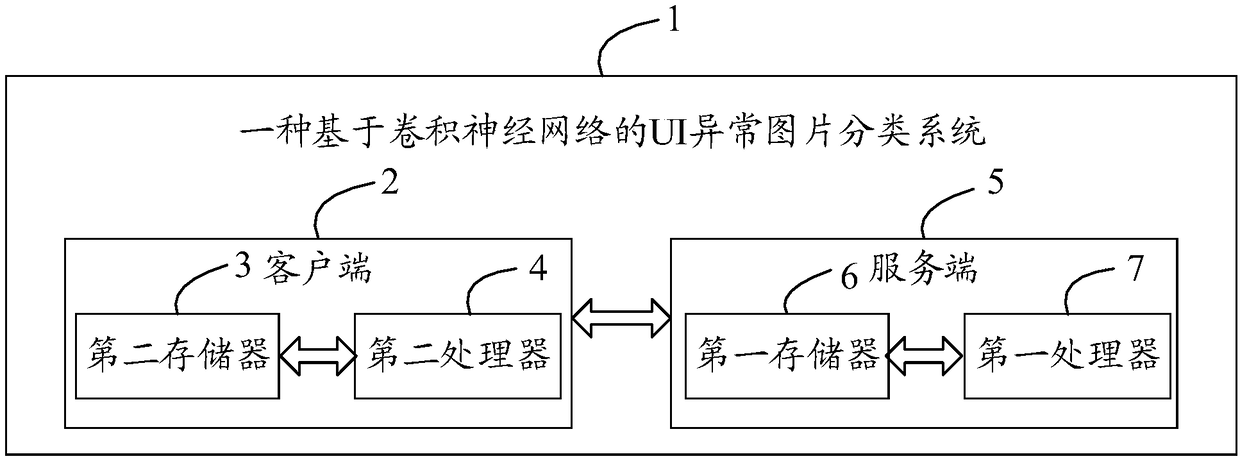

Method and system for classifying UI (User Interface) abnormal images based on convolutional neural network

Active | CN108764289A | Improve accuracy | Efficient extraction | Character and pattern recognition | Classification methods | Reusability

The invention discloses a method and a system for classifying UI (User Interface) abnormal images based on a convolutional neural network. The method comprises the steps of: a server side receiving to-be-processed UI picture data sent by a client side; calling an abnormality classification model to classify the to-be-processed UI picture data and obtain its picture type, wherein the abnormality classification model is a fully trained convolutional neural network model; and returning the picture type to the client side. The convolutional neural network extracts effective features of the UI pictures; the features do not need to be designed by hand but are learned during training, so that the learned features as a whole have translation invariance. On the one hand this gives a certain reusability and universality; on the other hand the effective features of the UI pictures yield a good classification effect, so that the accuracy of picture classification is greatly improved.

Owner:FUJIAN TQ DIGITAL
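The translation-invariance claim in the abstract above can be made concrete with a toy example: a convolution layer is translation-equivariant (the feature map shifts with the input), and global max pooling over that map yields a value that does not change with the shift. The sketch below is a generic illustration with hand-picked weights, not the patent's trained model; real networks are only approximately invariant, and the property here holds only while the pattern stays fully inside the image.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation, the operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def global_max_pool(feature_map):
    """Collapse a feature map to its single strongest response."""
    return max(max(row) for row in feature_map)

def shift(image, dy, dx):
    """Translate an image by (dy, dx), filling vacated pixels with zeros."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if 0 <= i + dy < h and 0 <= j + dx < w:
                out[i + dy][j + dx] = image[i][j]
    return out

# A 6x6 image containing a 2x2 bright blob, and a 2x2 blob detector.
IMAGE = [[1 if 1 <= i <= 2 and 1 <= j <= 2 else 0 for j in range(6)]
         for i in range(6)]
KERNEL = [[1, 1], [1, 1]]
```

Moving the blob with `shift(IMAGE, 2, 3)` changes the feature map returned by `conv2d_valid`, but the pooled response stays the same.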

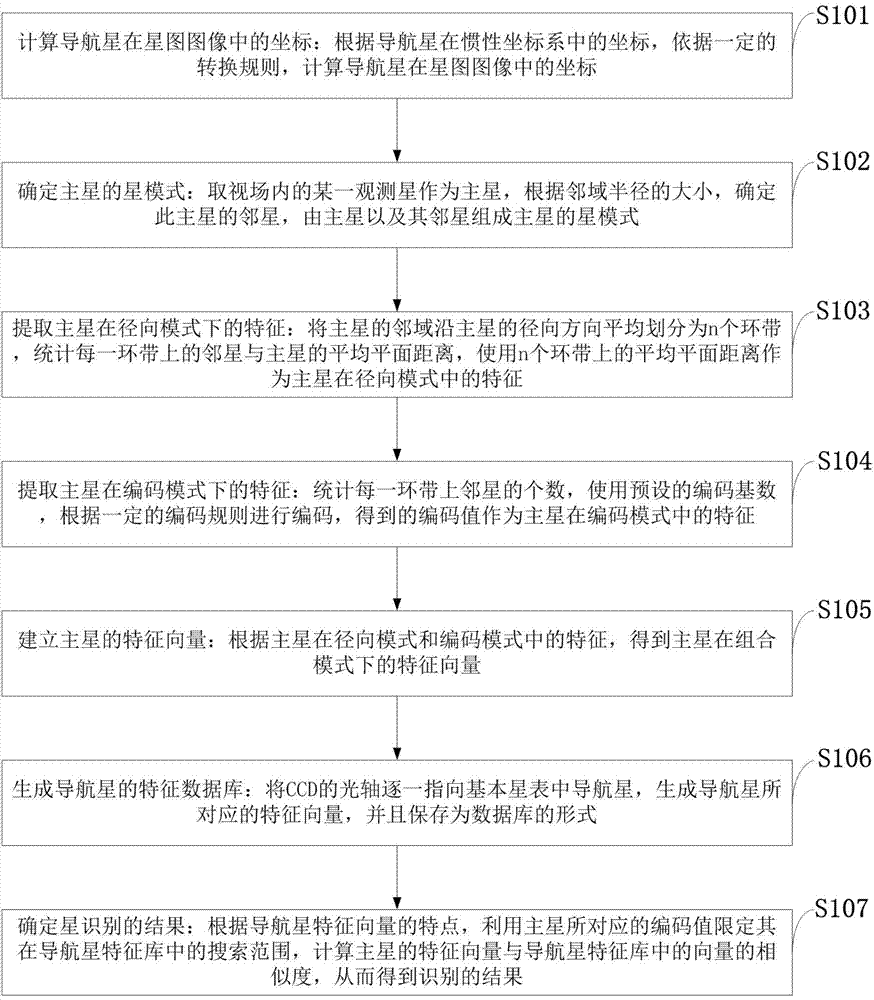

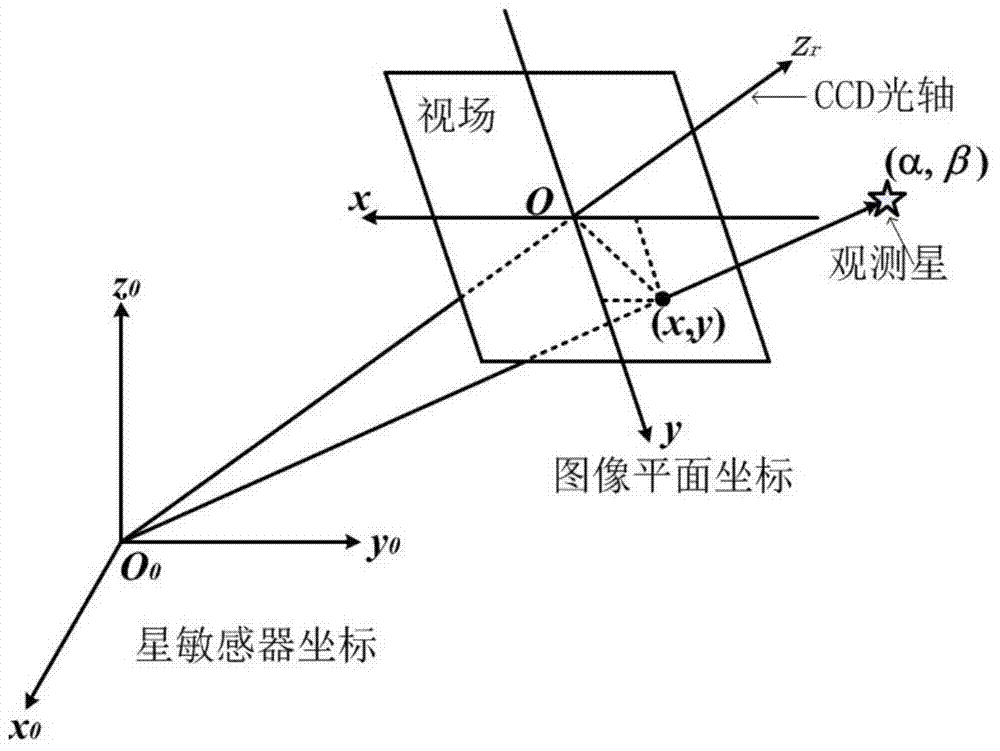

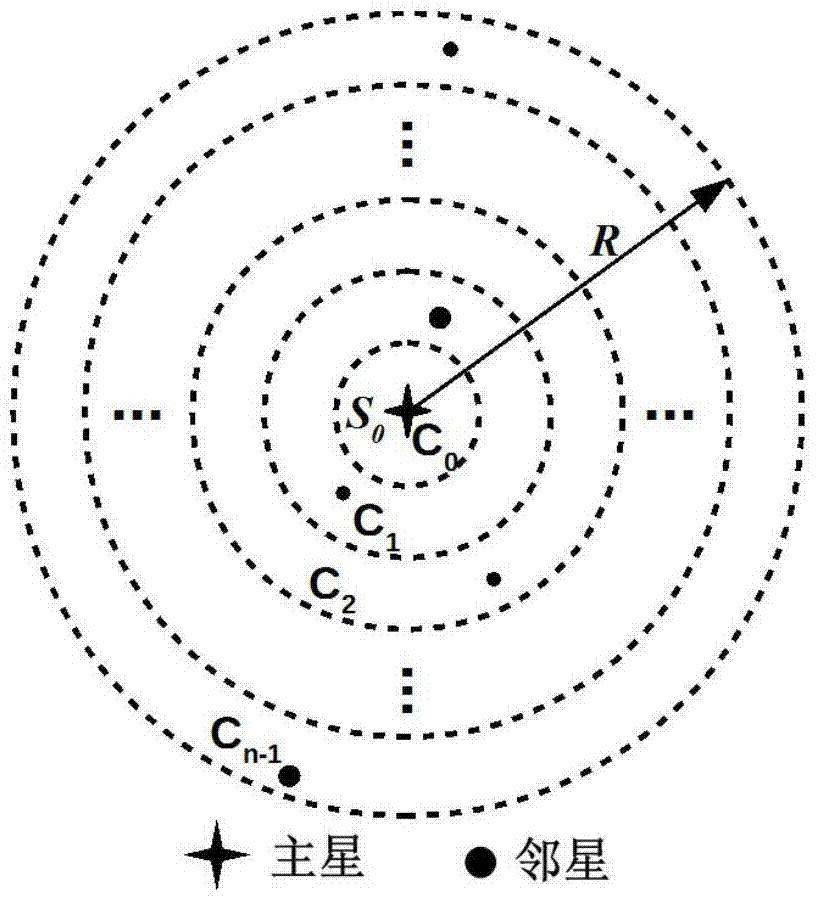

Autonomous star recognition method based on combination mode

Inactive | CN104776845A | Translation invariant | Suitable for star identification | Navigation by astronomical means | Marine navigation | Feature vector

The invention discloses an autonomous star recognition method based on a combination mode. The feature of a main star under a mode that combines a radial mode and an encoding mode is used as the feature vector of the main star. In the recognition process, the search range of the main star in a navigation star feature library is reduced through the encoded value corresponding to the main star, the feature vector of the main star is matched against the vectors in the navigation star feature library, and autonomous recognition of the star is completed. The combination mode of the main star has translation and rotation invariance and achieves a high recognition rate, so the method is well suited to autonomous star recognition. It also achieves high star recognition speed, and the sensitivity of a system can be improved.

Owner:XIDIAN UNIV
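The radial mode mentioned in the abstract above is commonly built from the distances between a main star and its neighbours, which is why it is unaffected by translation and rotation of the star image. A minimal sketch of that idea follows; the ring count, the pattern radius and the binary ring encoding are assumptions, and the patent's encoding mode and feature library lookup are not covered.

```python
import math

def radial_feature(main, neighbours, radius, n_rings):
    """Mark which concentric rings around the main star contain a neighbour."""
    feature = [0] * n_rings
    for star in neighbours:
        d = math.dist(main, star)
        if 0 < d < radius:
            feature[int(d / radius * n_rings)] = 1
    return feature

def transform(point, shift):
    """Rotate a point by 90 degrees about the origin, then translate it."""
    x, y = point
    return (-y + shift[0], x + shift[1])
```

Only distances to the main star enter the feature, so applying the same rotation and translation to every star leaves the ring pattern unchanged.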

A method for image inpainting with sample block sparsity combined with direction factor

Inactive | CN103325095B | Priority filling | Guaranteed smoothness | Image enhancement | Primary operation | Contourlet

Disclosed is a swatch sparsity image inpainting method combined with directional factors. The method mainly comprises the steps of: preprocessing the image to be inpainted using an existing image inpainting algorithm; extracting directional factors in four directions from the preprocessed image through the non-subsampled contourlet transform; determining a new structural sparseness function and a new matching criterion according to the color-directional factor weighted distance; determining the filling order by means of the structural sparseness function and searching for a plurality of matching blocks according to the new matching criterion; establishing a constraint equation with color space local sequential consistency and directional factor local sequential consistency; optimizing and solving the constraint equation to obtain the sparse representation information of the matching blocks; and filling and updating the filled-in regions until the damaged areas are completely filled in. The method effectively preserves the consistency of the structure part, the clearness of the texture part and the sequential consistency of neighborhood information, and is particularly applicable to inpainting real pictures or composite images with complex textures and complex structural characteristics.

Owner:SOUTHWEST JIAOTONG UNIV

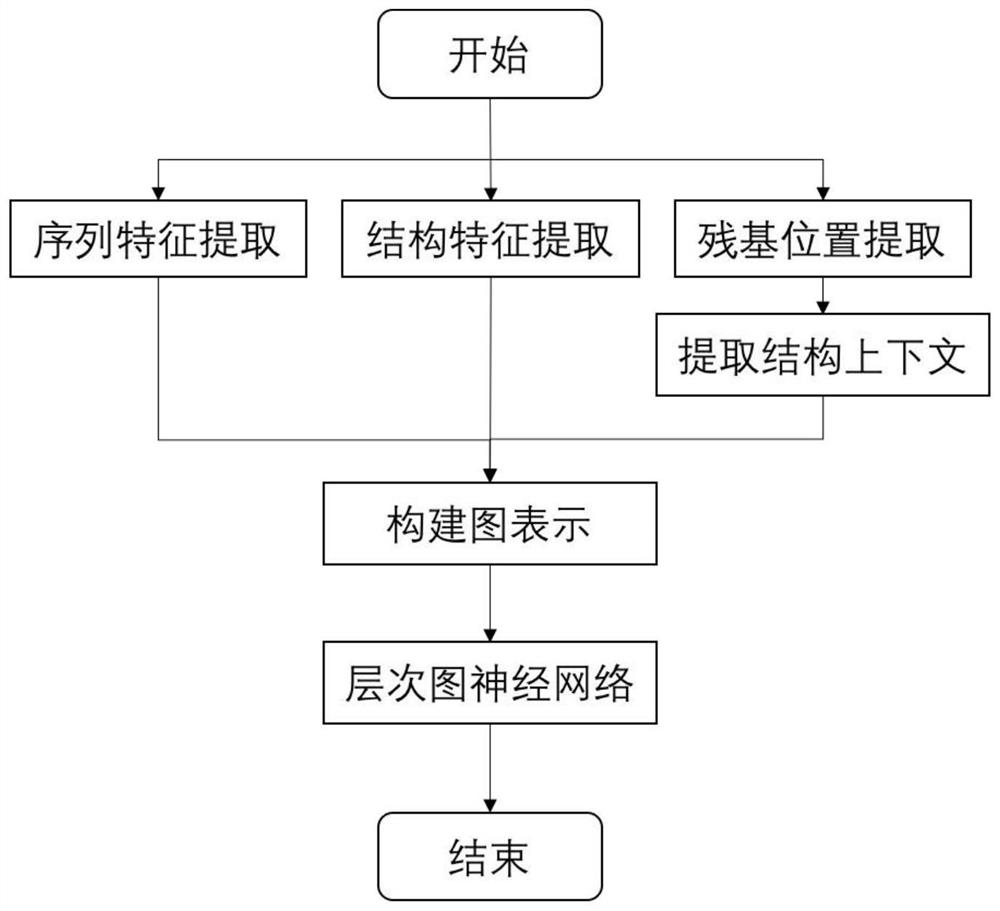

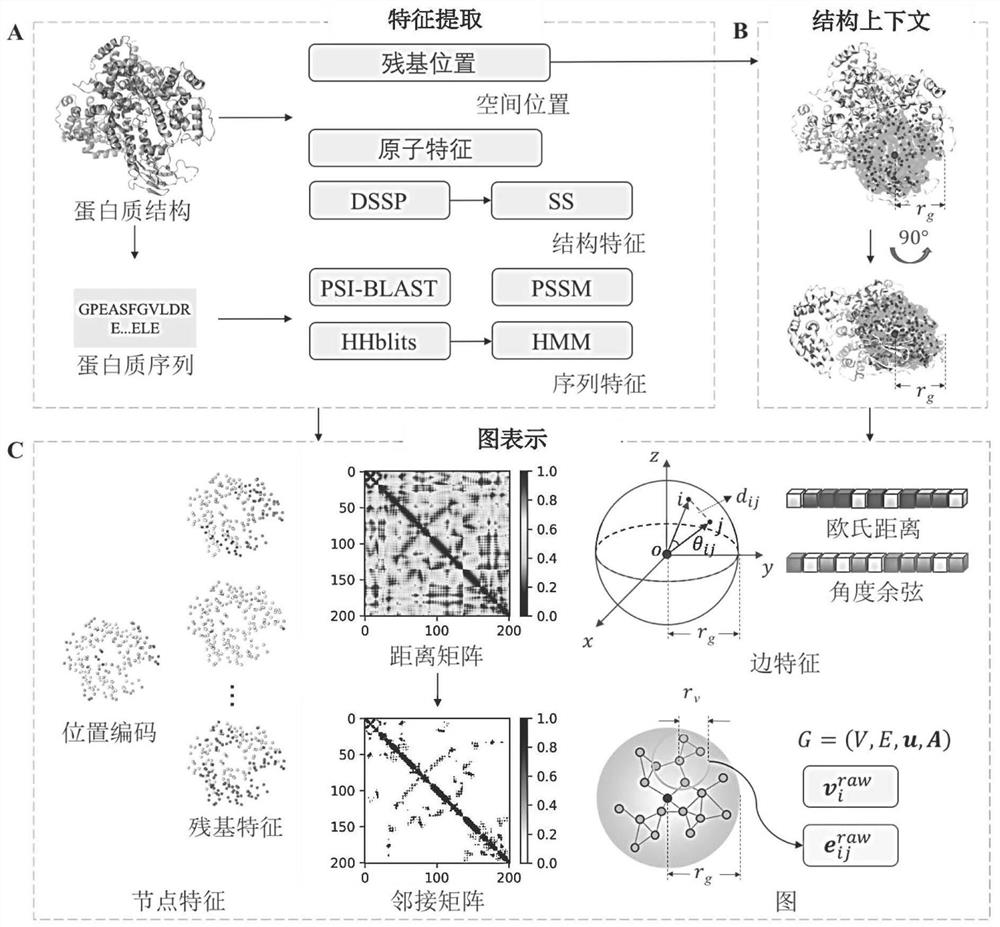

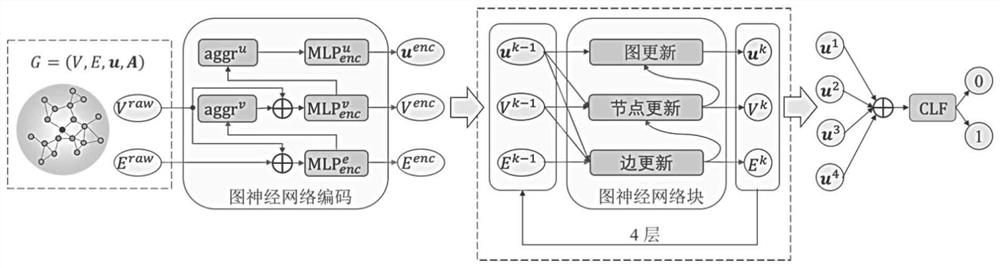

Protein and nucleic acid binding site prediction method based on graph neural network characterization

Pending | CN114765063A | Solve the low prediction accuracy | Improve forecast accuracy | Proteomics | Genomics | Binding site | Graph neural networks

A protein and nucleic acid binding site prediction method based on graph neural network characterization comprises the following steps: constructing a protein and nucleic acid interaction data set; extracting, after sample fusion processing, the position and feature information of each residue in the protein and of its structural context; constructing a graph representation of the structural context of each residue from this information; and passing the graph representation of the protein to be predicted through a hierarchical graph neural network to obtain the probability that each residue binds DNA/RNA, thereby predicting the binding sites of the protein and the nucleic acid. The key structures and feature patterns of binding sites are learned from residue-level graph representations of structural context by a hierarchical graph neural network model.

Owner:SHANGHAI JIAO TONG UNIV
