Vision-based static gesture recognition method

A gesture recognition technology applied in the field of human-computer interaction. It addresses several problems, including demanding gesture segmentation requirements, unsatisfactory classification results, and the high computational complexity of support vector machines. It improves both the recognition rate and classification performance.



Embodiment

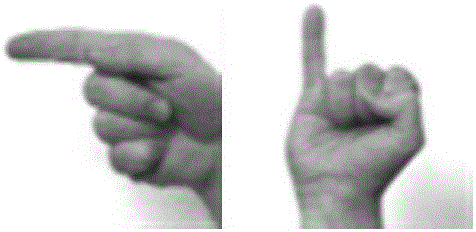

[0088] This embodiment uses the Jochen Triesch gesture database. The database contains 720 images of 10 gestures, each performed by 24 different people against 3 different backgrounds: a uniform light background, a uniform dark background, and a complex background. This embodiment uses only the 480 images taken against the uniform light and uniform dark backgrounds, and each image is cropped to 80×80 pixels.
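The sample counts above follow from the database layout. There are 10 gestures × 24 people × 3 backgrounds = 720 images, and dropping the complex background leaves 10 × 24 × 2 = 480. Below is a minimal Python sketch of that selection and of the 80×80 crop. The background labels, the use of NumPy, and the choice of a centre crop are assumptions, because the embodiment does not say where the crop window is placed.

```python
from itertools import product

import numpy as np

# Counts stated in the embodiment.
NUM_GESTURES = 10
NUM_PEOPLE = 24
# Hypothetical background labels; the database's own file naming is not given here.
BACKGROUNDS = ("light", "dark", "complex")
USED_BACKGROUNDS = {"light", "dark"}
CROP = 80


def select_samples():
    """Enumerate (gesture, person, background) triples and keep the uniform backgrounds."""
    all_samples = list(product(range(NUM_GESTURES), range(NUM_PEOPLE), BACKGROUNDS))
    used = [s for s in all_samples if s[2] in USED_BACKGROUNDS]
    return all_samples, used


def center_crop(img: np.ndarray, size: int = CROP) -> np.ndarray:
    """Cut a size x size window from the image centre (assumed crop position)."""
    h, w = img.shape[:2]
    if h < size or w < size:
        raise ValueError("image is smaller than the crop size")
    top = (h - size) // 2
    left = (w - size) // 2
    return img[top:top + size, left:left + size]


all_samples, used = select_samples()
print(len(all_samples), len(used))  # 720 480
```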

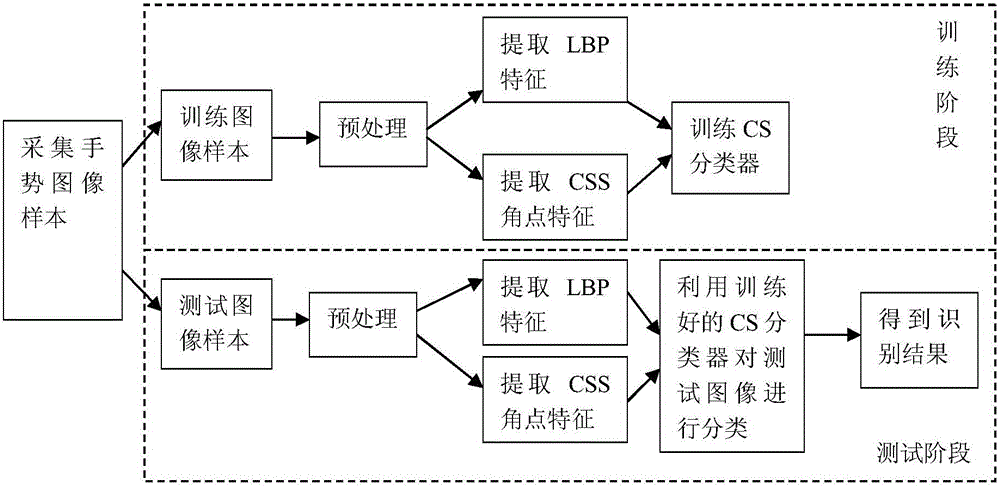

[0089] A vision-based static gesture recognition method comprises the following specific steps:

[0090] A. Training phase

[0091] (1) Collect training gesture image samples;

[0092] (2) Preprocess the training gesture images collected in step (1);

[0093] (3) Extract the LBP (local binary pattern) features of the training gesture grayscale images preprocessed in step (2);

[0094] (4) Extract the CSS (curvature scale space) corner features of the training gesture outline images preprocessed in step (2);

[0095] (5) the LBP feature of the training gest...
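This excerpt does not spell out the preprocessing of step (2). Steps (3) and (4) only imply that it produces a grayscale image and a gesture outline image. The sketch below is one hypothetical way to produce both with NumPy. It uses BT.601 luma conversion, then a global mean threshold as a simple stand-in for a method such as Otsu's, then boundary extraction by 4-neighbour erosion. The function names and the `hand_is_bright` flag are illustrative and do not come from the source. The flag selects whether the hand is lighter or darker than the uniform background.

```python
import numpy as np


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """RGB -> grayscale using ITU-R BT.601 luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb[..., :3].astype(np.float64) @ weights


def binarize(gray: np.ndarray, hand_is_bright: bool = True) -> np.ndarray:
    """Hand mask from a global threshold at the mean intensity (assumed method)."""
    t = gray.mean()
    return gray > t if hand_is_bright else gray < t


def outline(mask: np.ndarray) -> np.ndarray:
    """Boundary pixels: foreground pixels with at least one background 4-neighbour."""
    padded = np.pad(mask, 1, constant_values=False)
    # A pixel is interior when its up, down, left and right neighbours are all foreground.
    interior = (
        padded[:-2, 1:-1] & padded[2:, 1:-1] & padded[1:-1, :-2] & padded[1:-1, 2:]
    )
    return mask & ~interior
```

A uniform background makes a single global threshold plausible, which may be why the embodiment drops the complex-background images. A complex background would need a stronger segmentation step.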
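Step (3) names LBP features without fixing the variant. As a hedged illustration, the sketch below computes the basic 3×3, 8-neighbour LBP code, where each neighbour greater than or equal to the centre pixel contributes one bit. It then summarises the image as a normalised 256-bin histogram of codes. The patent may use a different radius, uniform patterns, or block-wise histograms. The function names are hypothetical.

```python
import numpy as np

# Offsets of the 8 neighbours, clockwise from the top-left; neighbour k sets bit k.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_image(gray: np.ndarray) -> np.ndarray:
    """Basic 3x3 LBP code for every interior pixel (the 1-pixel border is dropped)."""
    g = gray.astype(np.float64)
    h, w = g.shape
    center = g[1:-1, 1:-1]
    codes = np.zeros((h - 2, w - 2), dtype=np.int64)
    for k, (dy, dx) in enumerate(OFFSETS):
        # Shifted view so that neighbour[i, j] is the (dy, dx) neighbour of center[i, j].
        neighbour = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << k
    return codes


def lbp_histogram(gray: np.ndarray) -> np.ndarray:
    """Normalised 256-bin histogram of LBP codes, usable as a texture feature vector."""
    codes = lbp_image(gray)
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()
```

Histogramming discards where each code occurs. This makes the feature tolerant to small shifts of the hand, but it also loses layout. That is one reason a shape cue such as the CSS corners of step (4) is a natural complement.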
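Step (4) uses CSS corners. A full curvature scale space detector finds curvature extrema at a coarse scale and tracks them down to finer scales. The sketch below keeps only the single-scale core of that idea. It assumes the outline has already been traced into an ordered, closed list of (x, y) points. It smooths the coordinates with a periodic Gaussian, computes the curvature κ = (x′y″ − y′x″) / (x′² + y′²)^(3/2), and reports local maxima of |κ| above a threshold. `sigma`, `threshold`, and all function names are assumptions rather than values from the source.

```python
import numpy as np


def gaussian_kernel(sigma: float) -> np.ndarray:
    """Normalised 1-D Gaussian kernel truncated at 3 sigma."""
    r = max(1, int(np.ceil(3 * sigma)))
    t = np.arange(-r, r + 1, dtype=np.float64)
    k = np.exp(-t**2 / (2 * sigma**2))
    return k / k.sum()


def smooth_closed(v: np.ndarray, sigma: float) -> np.ndarray:
    """Gaussian-smooth one coordinate of a closed contour, wrapping around its ends."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    return np.convolve(np.pad(v, r, mode="wrap"), k, mode="valid")


def curvature(contour: np.ndarray, sigma: float) -> np.ndarray:
    """Curvature of the smoothed closed contour given as an N x 2 array of (x, y)."""
    x = smooth_closed(contour[:, 0].astype(np.float64), sigma)
    y = smooth_closed(contour[:, 1].astype(np.float64), sigma)
    # Periodic central differences for the first and second derivatives.
    dx = (np.roll(x, -1) - np.roll(x, 1)) / 2
    dy = (np.roll(y, -1) - np.roll(y, 1)) / 2
    ddx = np.roll(x, -1) - 2 * x + np.roll(x, 1)
    ddy = np.roll(y, -1) - 2 * y + np.roll(y, 1)
    denom = np.maximum((dx**2 + dy**2) ** 1.5, 1e-12)  # guard against repeated points
    return (dx * ddy - dy * ddx) / denom


def css_corners(contour: np.ndarray, sigma: float = 3.0, threshold: float = 0.05) -> np.ndarray:
    """Indices of contour points where |curvature| is a local maximum above threshold."""
    k = np.abs(curvature(contour, sigma))
    is_peak = (k > np.roll(k, 1)) & (k >= np.roll(k, -1)) & (k > threshold)
    return np.flatnonzero(is_peak)
```

For example, consider a square outline sampled at unit spacing. Its straight sides have zero curvature after smoothing, and the curvature peaks at the four corner points. Raising `sigma` suppresses small contour noise at the cost of rounding off closely spaced corners. The multi-scale tracking of the full CSS method is designed to resolve this trade-off.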
