Method for many-core loop partitioning based on multi-version code generation

A multi-version code generation technique in the computer field that addresses the ineffective, low utilization of on-chip storage, which limits the achievable acceleration.



Embodiment Construction

[0010] To make the present invention clearer and easier to understand, it is described in detail below with reference to specific embodiments and the accompanying drawings.

[0011] In the present invention, the many-core processor consists of a control core and an array of computing cores, each of which has its own cache. The high-speed cache local to each computing core is used as on-chip storage and exchanges data with main memory through direct memory access (DMA).
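
As a rough illustration of the memory model in [0011], the following Python sketch simulates one computing core that stages a block of data from main memory into its on-chip storage, computes on it there, and writes the result back. It is a minimal sketch under stated assumptions: DMA transfers are modeled as plain list copies, and `LocalStore`, `dma_get`, `dma_put`, `OnChipOverflow` and the 8-byte element size are hypothetical names and values, not the interface of any real many-core toolchain.

```python
from dataclasses import dataclass, field
from typing import Dict, List

ELEM_BYTES = 8  # assumed element size (e.g. one double)


class OnChipOverflow(Exception):
    """Raised when a buffer would exceed the core's on-chip storage."""


@dataclass
class LocalStore:
    """Hypothetical model of the cache a computing core uses as on-chip storage."""
    capacity_bytes: int
    buffers: Dict[str, List[float]] = field(default_factory=dict)

    def used_bytes(self) -> int:
        return sum(len(b) * ELEM_BYTES for b in self.buffers.values())

    def alloc(self, name: str, n_elems: int) -> List[float]:
        # Refuse any buffer that would not fit next to the ones already allocated.
        if self.used_bytes() + n_elems * ELEM_BYTES > self.capacity_bytes:
            raise OnChipOverflow(f"{name!r} ({n_elems} elements) does not fit")
        self.buffers[name] = [0.0] * n_elems
        return self.buffers[name]

    def free(self, name: str) -> None:
        del self.buffers[name]


def dma_get(local: List[float], main_mem: List[float], offset: int) -> None:
    """Simulated DMA read: main memory -> on-chip buffer (copied in place)."""
    local[:] = main_mem[offset:offset + len(local)]


def dma_put(main_mem: List[float], local: List[float], offset: int) -> None:
    """Simulated DMA write: on-chip buffer -> main memory."""
    main_mem[offset:offset + len(local)] = local


def scale_block(store: LocalStore, src: List[float], dst: List[float],
                offset: int, n: int, factor: float) -> None:
    """Stage n elements on chip, scale them there, and write them back."""
    buf = store.alloc("buf", n)
    try:
        dma_get(buf, src, offset)
        for i in range(n):
            buf[i] *= factor  # computation touches only on-chip data
        dma_put(dst, buf, offset)
    finally:
        store.free("buf")  # release on-chip storage for the next block
```

For example, with `LocalStore(capacity_bytes=64)` (room for eight 8-byte elements), a 10-element array has to be processed as two blocks of 8 and 2 elements, and requesting a 9-element block raises `OnChipOverflow`. This capacity limit is what makes the choice of block size matter.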

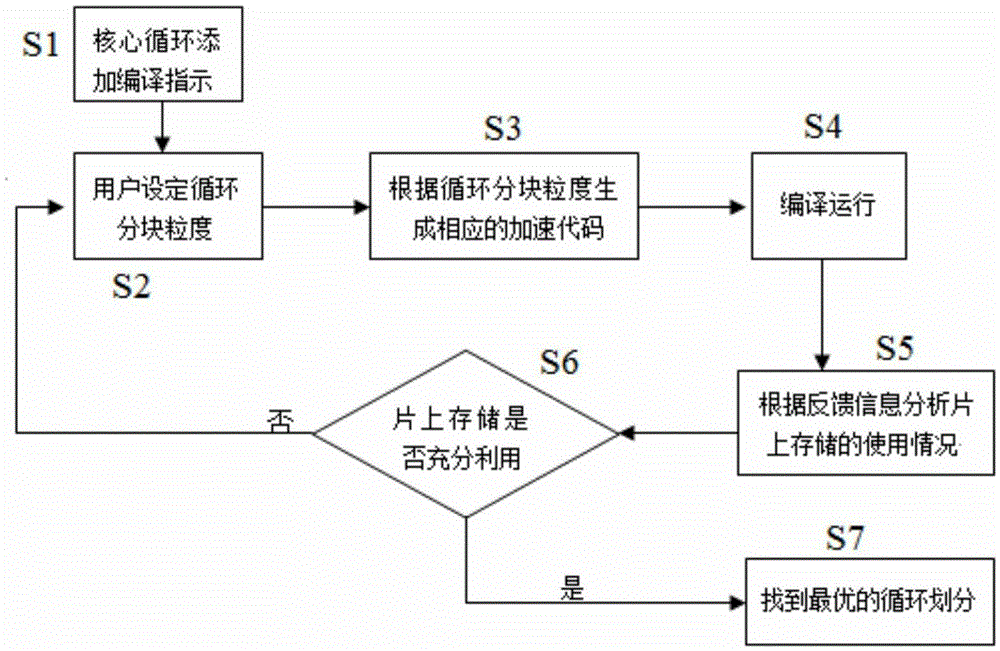

[0012] The invention proposes a method that uses a pragma to instruct a parallel compiler how to block many-core loops. When the compiler performs the parallel transformation of a many-core loop, it determines the loop-block granularity from the value specified in the pragma and generates the corresponding parallel code (different granularity of the loop block generates different...
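
The pragma-guided blocking in [0012] can be sketched as follows. This is a minimal Python model, not the patented compiler: the pragma spelling `#pragma many_core block(N)` is invented for illustration, as are `parse_block_pragma`, `block_loop`, `generate_versions` and `select_version`. The sketch reads the block granularity from the pragma and splits the iteration space into blocks dealt out round-robin to the computing cores. As one possible reading of "multi-version code generation", it also prepares one version per candidate granularity and picks the largest block whose working set fits in on-chip storage; that selection rule is an assumption.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical pragma spelling; the source does not give the real syntax.
PRAGMA_RE = re.compile(r"#pragma\s+many_core\s+block\((\d+)\)")

Schedule = Dict[int, List[Tuple[int, int]]]  # core id -> list of [start, end) blocks


def parse_block_pragma(line: str) -> int:
    """Return the loop-block granularity requested by the (hypothetical) pragma."""
    m = PRAGMA_RE.search(line)
    if m is None:
        raise ValueError(f"no block pragma in {line!r}")
    return int(m.group(1))


def block_loop(n_iters: int, block: int, n_cores: int) -> Schedule:
    """Split range(n_iters) into blocks of `block` iterations and deal them
    out to the computing cores round-robin."""
    if block <= 0:
        raise ValueError("block granularity must be positive")
    schedule: Schedule = {core: [] for core in range(n_cores)}
    for k, start in enumerate(range(0, n_iters, block)):
        schedule[k % n_cores].append((start, min(start + block, n_iters)))
    return schedule


def generate_versions(n_iters: int, n_cores: int,
                      candidates: List[int]) -> Dict[int, Schedule]:
    """Assumed multi-version step: one blocked version per candidate granularity."""
    return {b: block_loop(n_iters, b, n_cores) for b in candidates}


def select_version(versions: Dict[int, Schedule], bytes_per_iter: int,
                   local_store_bytes: int) -> int:
    """Assumed rule: pick the largest granularity whose block fits on chip."""
    fitting = [b for b in versions if b * bytes_per_iter <= local_store_bytes]
    if not fitting:
        raise ValueError("no version fits in on-chip storage")
    return max(fitting)


if __name__ == "__main__":
    block = parse_block_pragma("#pragma many_core block(4)")
    print(block_loop(10, block, n_cores=2))
    # {0: [(0, 4), (8, 10)], 1: [(4, 8)]}
    versions = generate_versions(1000, 4, [16, 32, 64, 128])
    print(select_version(versions, bytes_per_iter=16, local_store_bytes=1024))
    # 64
```

Choosing the largest block that still fits is meant to reflect the stated goal of raising on-chip storage utilization: with 16 bytes per iteration and 1024 bytes of on-chip storage, a 64-iteration block fills the storage exactly, while a 128-iteration block would overflow it.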
