Perceptual stereoscopic video coding method based on a disparity just-noticeable-difference model

A technology in the areas of stereoscopic video coding and error models, applied in the field of video processing; it addresses problems such as reduced coding efficiency in coding software.


Embodiment Construction

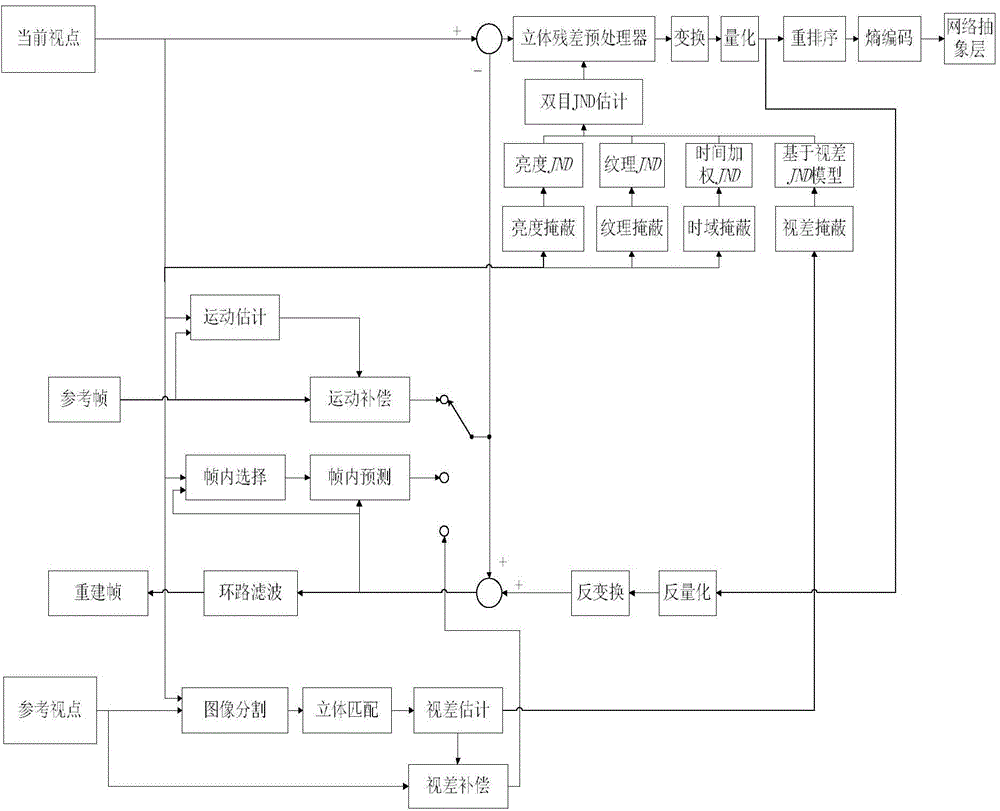

[0081] As shown in Figure 1, the implementation steps of the present invention are as follows:

[0082] Step 1. Disparity estimation

[0083] 1a) Read in each frame image $I_{iL}$ and $I_{iR}$ of the left and right viewpoints of the binocular stereo video, and segment (preprocess) them with a mean-shift color segmentation method to obtain the images $I'_{iL}$ and $I'_{iR}$;

[0084] (1a1) Perform mean-shift filtering on each frame image of the left and right viewpoints to obtain the information on the convergence points of all subspaces;

[0085] (1a2) Obtain the segmented regions by merging the clusters of pixels that mean-shift filtering assigns to the same domain;
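Steps (1a1) and (1a2) can be illustrated with a minimal sketch, assuming a flat (uniform) kernel in the joint spatial-range domain. The function names `mean_shift_filter` and `segment`, the bandwidths `hs` and `hr`, and the iteration limits are hypothetical choices, and the merging rule for (1a2) is simplified here to joining 4-adjacent pixels whose converged colors lie within `hr` of each other. This is not the patent's exact implementation.

```python
import math
from collections import deque


def mean_shift_filter(img, hs=2.0, hr=20.0, max_iter=20, eps=0.1):
    """Return the convergence point (y, x, color) reached from every pixel.

    img is a list of rows of (r, g, b) tuples. Flat kernel (an assumption):
    a pixel contributes to the mean if it lies within spatial radius hs
    and color radius hr of the current point.
    """
    h, w = len(img), len(img[0])
    modes = [[None] * w for _ in range(h)]
    for y0 in range(h):
        for x0 in range(w):
            yc, xc = float(y0), float(x0)
            cc = [float(v) for v in img[y0][x0]]
            for _ in range(max_iter):
                sy = sx = 0.0
                sc = [0.0] * len(cc)
                n = 0
                for y in range(max(0, int(yc - hs)), min(h, int(yc + hs) + 1)):
                    for x in range(max(0, int(xc - hs)), min(w, int(xc + hs) + 1)):
                        if (y - yc) ** 2 + (x - xc) ** 2 > hs * hs:
                            continue  # outside the spatial window
                        c = img[y][x]
                        if math.dist(c, cc) > hr:
                            continue  # outside the range (color) window
                        sy += y
                        sx += x
                        for k, v in enumerate(c):
                            sc[k] += v
                        n += 1
                if n == 0:
                    break
                ny, nx, nc = sy / n, sx / n, [v / n for v in sc]
                shift = math.dist((ny, nx, *nc), (yc, xc, *cc))
                yc, xc, cc = ny, nx, nc
                if shift < eps:
                    break  # converged to a mode
            modes[y0][x0] = (yc, xc, tuple(cc))
    return modes


def segment(modes, hr=20.0):
    """Label regions: 4-adjacent pixels with converged colors within hr merge."""
    h, w = len(modes), len(modes[0])
    labels = [[-1] * w for _ in range(h)]
    count = 0
    for sy in range(h):
        for sx in range(w):
            if labels[sy][sx] != -1:
                continue
            labels[sy][sx] = count
            queue = deque([(sy, sx)])
            while queue:  # breadth-first flood fill of one region
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w and labels[ny][nx] == -1
                            and math.dist(modes[y][x][2], modes[ny][nx][2]) < hr):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
            count += 1
    return labels, count
```

For example, a 4×6 image whose left half is red and right half is blue converges to two color modes and yields two segments. A production system would more likely use an optimized library routine than this pure-Python loop.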

[0086] 1b) Perform stereo matching on $I'_{iL}$ and $I'_{iR}$ to obtain the disparity $d(x,y)$ between the left and right viewpoints, as follows:

[0087] (1b1) Local stereo matching gives:

[0088] d(x,y)=a x+...
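The formula in step (1b1) is truncated in the available text, so it is not reproduced here. As a generic illustration of local stereo matching only, the following sketch uses sum-of-absolute-differences (SAD) block matching with winner-take-all disparity selection on grayscale images, with the left view as reference. The function name `sad_disparity` and the parameters `max_disp` and `radius` are hypothetical; this swapped-in technique is not claimed to be the patent's matching rule.

```python
def sad_disparity(left, right, max_disp=4, radius=1):
    """Winner-take-all SAD block matching (illustrative assumption).

    left, right: lists of rows of grayscale intensities. A point at column x
    in the left image is searched at column x - d in the right image.
    Returns a per-pixel integer disparity map.
    """
    h, w = len(left), len(left[0])
    disp = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best_cost, best_d = float("inf"), 0
            for d in range(0, min(max_disp, x) + 1):
                cost = 0
                for dy in range(-radius, radius + 1):
                    for dx in range(-radius, radius + 1):
                        # clamp coordinates at the image borders
                        yy = min(max(y + dy, 0), h - 1)
                        xl = min(max(x + dx, 0), w - 1)
                        xr = min(max(x + dx - d, 0), w - 1)
                        cost += abs(left[yy][xl] - right[yy][xr])
                if cost < best_cost:  # keep the lowest-cost disparity
                    best_cost, best_d = cost, d
            disp[y][x] = best_d
    return disp
```

With a textured left image and a right image shifted two columns to the left, interior pixels recover a disparity of 2. Plain block matching is fast but blurs depth edges, which is one reason segment-based preprocessing such as step 1a is often combined with it.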
