Interaction method and device of touch screen interface

A touch screen interface interaction technology, applied in the fields of instruments, electrical digital data processing, and input/output processes of data processing, which can solve problems such as cumbersome operation of graphic objects.

Examples

Embodiment 1

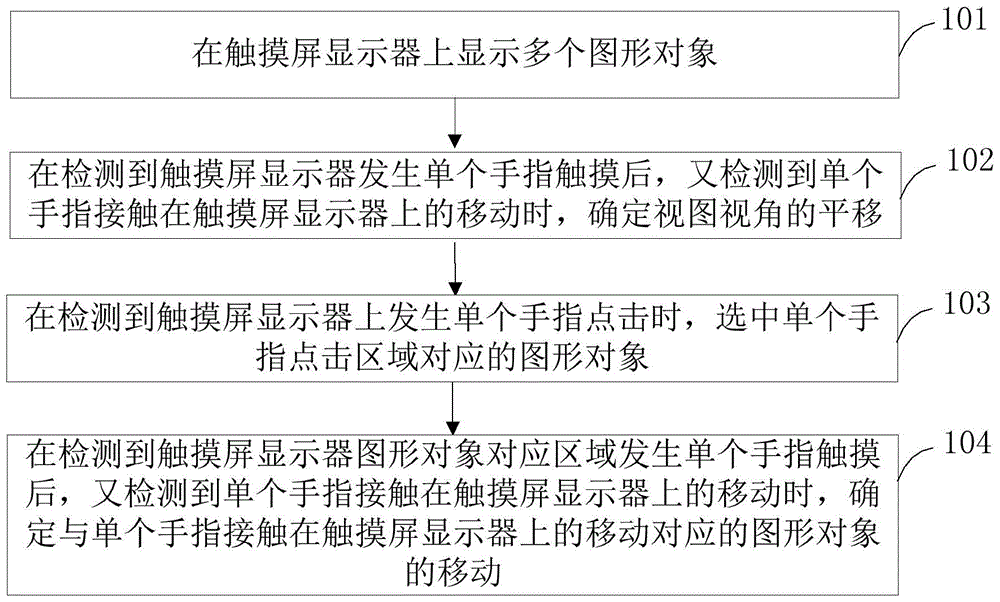

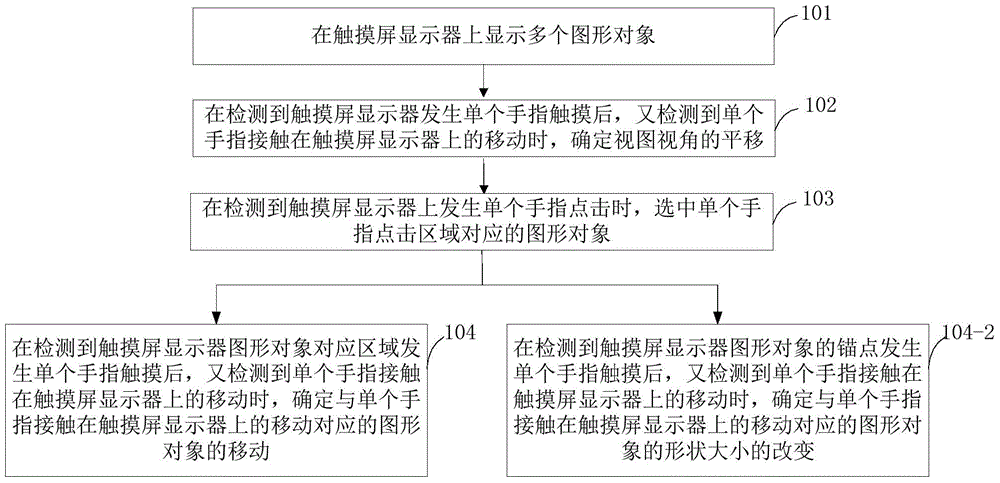

[0031] Figure 1 shows the implementation process of the touch screen interface interaction method provided by this embodiment. For convenience of description, only the parts related to this embodiment are shown. The details are as follows:

[0032] In step 101, a plurality of graphic objects are displayed on a touch screen display. The touch screen display shows a portion of the system view at a scaled ratio.
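As an illustration of showing "a portion of the system view at a scaled ratio", the following is a minimal sketch of a viewport that maps between system-view coordinates and screen pixels. The `Viewport` class, its field names, the uniform scale factor, and the top-left origin are all assumptions made for this example; the source does not specify how the mapping is implemented.

```python
from dataclasses import dataclass


@dataclass
class Viewport:
    """Hypothetical view state: which part of the system view is on screen."""
    offset_x: float  # system-view x shown at the screen's left edge (assumed)
    offset_y: float  # system-view y shown at the screen's top edge (assumed)
    scale: float     # screen pixels per system-view unit (assumed uniform)
    width_px: int    # screen width in pixels
    height_px: int   # screen height in pixels

    def system_to_screen(self, x, y):
        """Map a system-view point to screen pixel coordinates."""
        return ((x - self.offset_x) * self.scale,
                (y - self.offset_y) * self.scale)

    def screen_to_system(self, sx, sy):
        """Map a screen pixel back to system-view coordinates."""
        return (sx / self.scale + self.offset_x,
                sy / self.scale + self.offset_y)

    def is_visible(self, x, y):
        """True if the system-view point falls inside the displayed portion."""
        sx, sy = self.system_to_screen(x, y)
        return 0 <= sx < self.width_px and 0 <= sy < self.height_px
```

With this representation, only objects for which `is_visible` holds would need to be drawn, and any touch position can be converted back to system-view coordinates before further processing.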

[0033] In step 102, when a single-finger touch on the touch screen display is detected, followed by movement of that single-finger contact across the display, a translation of the viewing angle is determined.
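The touch-then-move detection in step 102 can be sketched as a small tracker that accumulates a view offset while one finger stays in contact. This is only an illustrative sketch: the `PanTracker` class, its method names, and the convention that the displayed content follows the finger (so the view offset moves in the opposite direction) are assumptions, not details taken from the source.

```python
class PanTracker:
    """Hypothetical single-finger pan handler (names are illustrative only)."""

    def __init__(self, scale=1.0):
        self.scale = scale          # screen pixels per system-view unit (assumed)
        self.offset = (0.0, 0.0)    # current view offset in system-view units
        self._last = None           # last contact position, None when no finger is down

    def touch_down(self, x, y):
        """Record where the single finger first touches the screen."""
        self._last = (x, y)

    def touch_move(self, x, y):
        """Translate the view by the finger movement; return the pixel delta."""
        if self._last is None:
            # No preceding touch: a move alone does not translate the view.
            return (0.0, 0.0)
        dx = x - self._last[0]
        dy = y - self._last[1]
        self._last = (x, y)
        # Assumption: content follows the finger, so the offset moves the other way.
        self.offset = (self.offset[0] - dx / self.scale,
                       self.offset[1] - dy / self.scale)
        return (dx, dy)

    def touch_up(self):
        """End the gesture when the finger leaves the screen."""
        self._last = None
```

A caller would forward raw touch events to `touch_down`, `touch_move`, and `touch_up`, then redraw using the updated `offset`.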

[0034] In specific implementation, step 102 can be divided into the following steps:

[0035] A. Obtain the first coordinates, where the single finger leaves the touch screen display, and the second coordinates, where the single finger lands.

[0036] B. Determine the target area according to the first coordinates and the second coordinat...

Embodiment 2

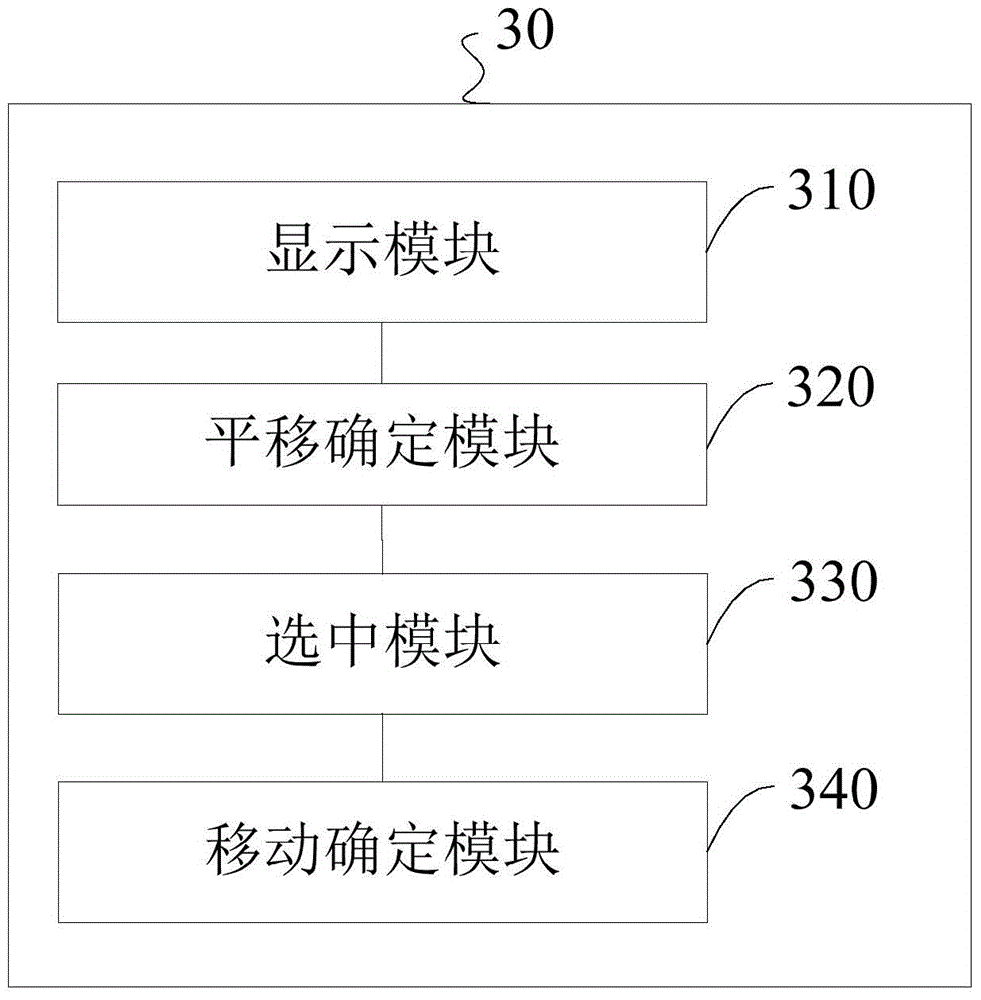

[0049] Embodiment 2 of the present invention provides a touch screen interface interaction device. As shown in Figure 3, the touch screen interface interaction device 30 includes a display module 310, a translation determination module 320, a selection module 330, and a movement determination module 340.

[0050] The display module 310 is configured to display multiple graphic objects on the touch screen display.

[0051] The translation determination module 320 is configured to determine the translation of the viewing angle when a single-finger touch on the touch screen display is detected, followed by movement of that single-finger contact across the display.

[0052] The selection module 330 is configured to select the graphic object corresponding to the area clicked by a single finger when a single-finger click is detected on the touch screen display.
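The behavior described for the selection module 330 amounts to a hit test: finding which graphic object lies under the clicked position. The sketch below assumes rectangular object bounds and assumes that objects later in the list are drawn on top. `GraphicObject` and `select_at` are hypothetical names introduced for illustration; the source does not describe the object geometry or the stacking order.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class GraphicObject:
    """Hypothetical graphic object with an axis-aligned bounding box."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        """True if the point lies inside this object's bounding box."""
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def select_at(objects: Sequence[GraphicObject],
              px: float, py: float) -> Optional[GraphicObject]:
    """Return the topmost object under the click, or None if nothing is hit."""
    # Assumption: later entries are drawn on top, so search from the end.
    for obj in reversed(objects):
        if obj.contains(px, py):
            return obj
    return None
```

For example, with two overlapping objects, a click inside the overlap selects the one listed last, and a click on empty space selects nothing.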

[0053] The movement determination module 340 is configured to determine the graphic corresponding to the mov...
