Recognition method and system based on dual-modal emotion fusion of voice and facial expression

A recognition method based on voice and facial expression, applied in the field of emotion recognition. It addresses problems such as poor facial expression recognition performance, which strongly affects the overall emotion recognition result, and the failure of existing methods to consider obstruction of the acoustic channel. The claimed effects include excellent spatial position and direction selectivity, reduced computation, good rotation invariance, and grayscale invariance.


Detailed Description of Embodiments

[0065] The principles and features of the present invention are described below with reference to the accompanying drawings. The examples given serve only to explain the present invention and are not intended to limit its scope.

[0066] In this embodiment, data from the eNTERFACE'05 audio-visual multimodal emotion database are used as the material, and the simulation platform is MATLAB R2015b.

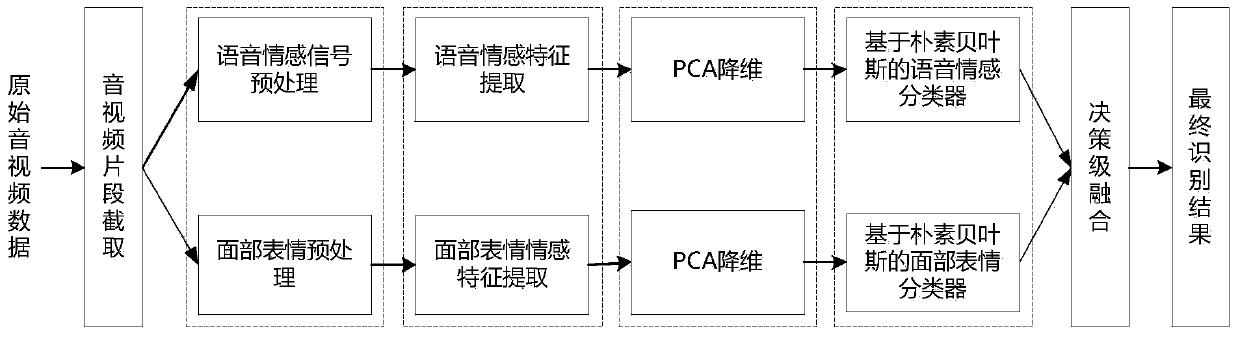

[0067] As shown in Figure 1, the recognition method based on bimodal emotion fusion of voice and facial expression comprises the following steps:

[0068] S1. Obtain the audio data and video data of the subject to be recognized;

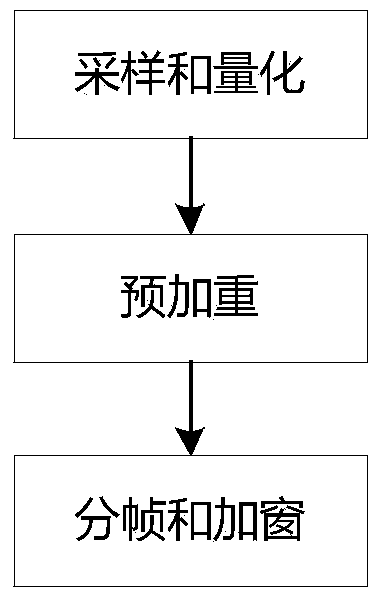

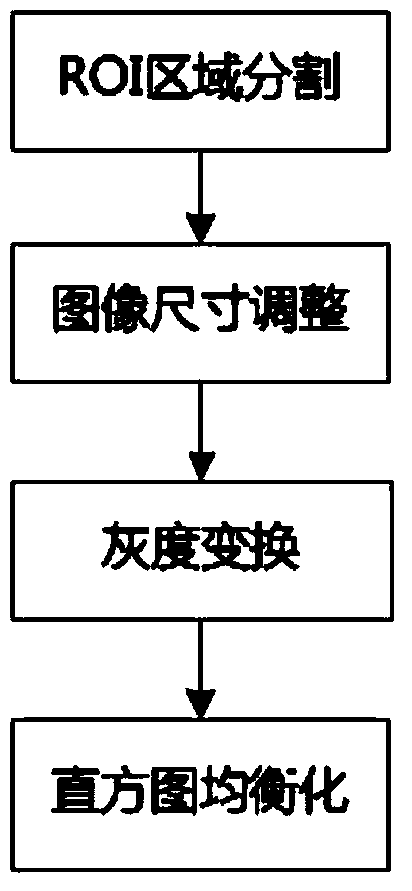

[0069] S2. Preprocess the audio data to obtain an emotional speech signal; extract facial expression images from the video data, segment the eye, nose, and mouth regions, and preprocess them to obtain images of the three regions in a unified standard format;

[0070] S3. Extract the voice emot...
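Step S2 says the audio is preprocessed into an emotional speech signal but does not list the operations in this excerpt. A common speech front end is pre-emphasis, followed by framing and a Hamming window. The sketch below assumes that pipeline. The function name `preprocess_speech` and the defaults (`alpha=0.97`, 25 ms frames, 10 ms hop) are hypothetical illustrative values, not parameters taken from the patent.

```python
import numpy as np


def preprocess_speech(signal, sample_rate, alpha=0.97, frame_ms=25.0, hop_ms=10.0):
    """Pre-emphasis, framing and Hamming windowing of a 1-D speech signal.

    ASSUMPTION: this front end and its default parameters are illustrative;
    the patent excerpt does not specify its audio preprocessing.
    Returns an array of shape (n_frames, frame_len).
    """
    x = np.asarray(signal, dtype=float)
    # Pre-emphasis y[n] = x[n] - alpha * x[n-1] boosts the high frequencies.
    emphasized = np.append(x[0], x[1:] - alpha * x[:-1])
    frame_len = int(round(sample_rate * frame_ms / 1000.0))
    hop = int(round(sample_rate * hop_ms / 1000.0))
    if len(emphasized) < frame_len:
        # Zero-pad a very short signal up to one full frame.
        emphasized = np.pad(emphasized, (0, frame_len - len(emphasized)))
    n_frames = 1 + (len(emphasized) - frame_len) // hop
    # Row i of the index matrix selects samples [i*hop, i*hop + frame_len).
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx]
    # The Hamming window tapers frame edges to reduce spectral leakage.
    return frames * np.hamming(frame_len)
```

For one second of audio at 16 kHz, the defaults give 400-sample frames with a 160-sample hop, so `preprocess_speech(np.sin(2 * np.pi * 440 * np.arange(16000) / 16000), 16000)` returns an array of shape `(98, 400)`. Per-frame features for step S3 would then be computed row by row.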
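Step S2 also segments the eye, nose, and mouth regions and normalizes them to "a unified standard". The excerpt does not say how the regions are located or what the standard is. The sketch below assumes the region boxes are already known, for example from a face landmark detector, which is not specified here. It assumes a fixed output size with nearest-neighbour resizing and histogram equalization for gray-level normalization. The box coordinates, the `(32, 64)` size, and the names `resize_nearest`, `equalize_hist`, and `extract_regions` are all hypothetical.

```python
import numpy as np


def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D image to (out_h, out_w)."""
    h, w = img.shape
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return img[rows[:, None], cols[None, :]]


def equalize_hist(img: np.ndarray) -> np.ndarray:
    """Histogram equalisation of a uint8 grayscale image."""
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]
    denom = cdf[-1] - cdf_min
    if denom == 0:  # constant image: nothing to equalise
        return img.copy()
    lut = np.round((cdf - cdf_min) / denom * 255).clip(0, 255).astype(np.uint8)
    return lut[img]


def extract_regions(face: np.ndarray, boxes: dict, size=(32, 64)) -> dict:
    """Crop each named region, resize it to a common size, and equalise it.

    ASSUMPTION: `boxes` maps a region name to (top, left, height, width) in
    pixels, supplied by some external detector not described in the excerpt.
    """
    out = {}
    for name, (top, left, h, w) in boxes.items():
        patch = face[top:top + h, left:left + w]
        out[name] = equalize_hist(resize_nearest(patch, *size))
    return out
```

With hypothetical boxes such as `{"eyes": (20, 10, 25, 80), "nose": (45, 35, 30, 30), "mouth": (80, 25, 25, 50)}` on a 120 x 100 grayscale face, all three outputs share the same 32 x 64 shape and a full 0 to 255 gray range. Later feature extraction can then treat them uniformly.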
