Cache data processing method, server and configuration device

A cache data processing technology applied in the fields of electronic digital data processing, memory systems, and memory address allocation/relocation. It addresses problems such as degraded server system performance, the inability to dynamically adjust the cache processing strategy, and the inability to adjust the eviction algorithm in real time, and achieves a dynamically adjustable cache processing strategy.

Examples

Embodiment 1

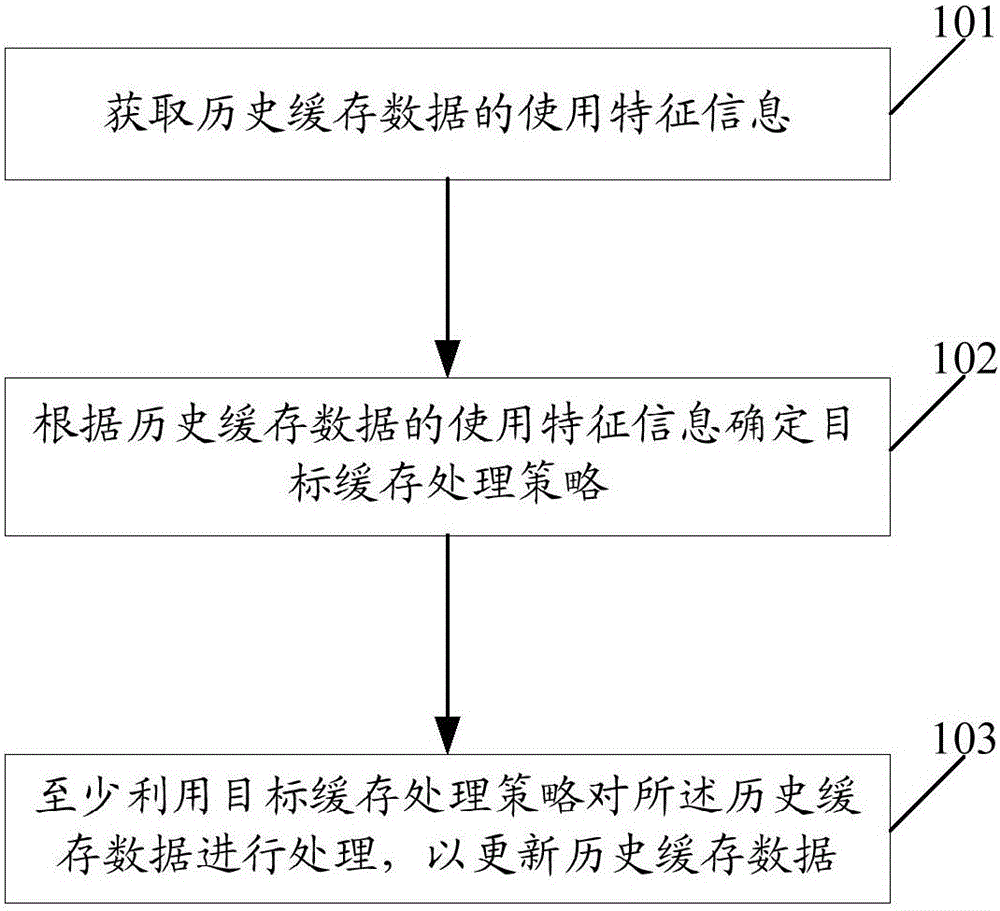

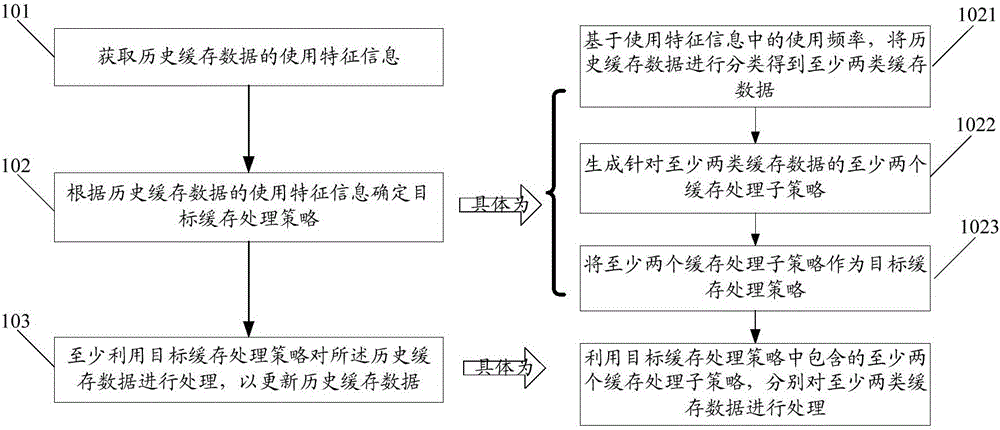

[0047] Figure 1 is a schematic flowchart of the cache data processing method in an embodiment of the present invention. As shown in Figure 1, the method includes:

[0048] Step 101: the server acquires usage characteristic information of historical cache data;

[0049] In this embodiment, the usage characteristic information includes at least the usage frequency of the historical cache data; it may further include a priority set for the historical cache data. Those skilled in the art will appreciate that, in practical applications, the usage characteristic information can be defined according to actual needs.

[0050] In practical applications, the step of obtaining the usage characteristic information of the historical cache data may specifically be performed as follows:

[0051] The server receives a service request sent by a terminal and counts the invocation characteristic information of the historical cache data called by...
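Because the acquisition step is truncated in the source, the following is only a minimal sketch, assuming that each service request from a terminal is modeled as the list of cache keys it reads and that the usage frequency is simply the number of times each historical cache entry was called. The names `UsageInfo`, `collect_usage_info`, and the optional `priorities` mapping are hypothetical and do not come from the patent.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Mapping, Optional


@dataclass
class UsageInfo:
    """Hypothetical usage characteristic record for one historical cache entry."""
    key: str
    frequency: int                  # number of times the entry was called
    priority: Optional[int] = None  # optional preset priority, if one was configured


def collect_usage_info(
    requests: Iterable[Iterable[str]],
    priorities: Optional[Mapping[str, int]] = None,
) -> dict[str, UsageInfo]:
    """Count how often each cache key is called across terminal service requests.

    Assumption: each request is represented by the cache keys it reads.
    """
    counts = Counter()
    for requested_keys in requests:
        counts.update(requested_keys)  # one call per key occurrence
    priorities = priorities or {}
    return {
        key: UsageInfo(key=key, frequency=freq, priority=priorities.get(key))
        for key, freq in counts.items()
    }


# Usage: three requests touching keys "a", "b", and "c".
info = collect_usage_info([["a", "b"], ["a"], ["a", "c"]], priorities={"b": 5})
print(info["a"].frequency, info["b"].priority)  # prints: 3 5
```

Keeping the optional priority next to the counted frequency mirrors the paragraph above: frequency is always present, while a preset priority is attached only where one has been configured.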

Embodiment 2

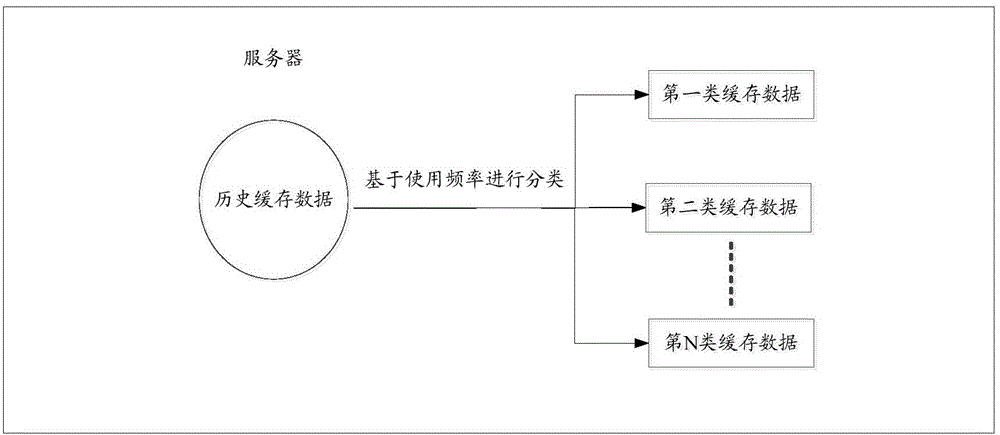

[0070] Building on the method described in Embodiment 1, in this embodiment the server may formulate a cache processing strategy for only part of the cached data, so that determining the target cache processing strategy does not place an excessive load on the server itself. Specifically, as shown in Figure 4, the server classifies the historical cache data into first-type cache data and second-type cache data based on the usage frequency in the usage characteristic information, where the usage frequency of the first-type cache data is higher than that of the second-type cache data. For example, the usage frequency of the first-type cache data is higher than a preset frequency, and the usage frequency of the second-type cache data is lower than the preset frequency. Furthermore, the server generates a target cache processing ...
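The frequency-based classification described above can be sketched as a single pass over the usage records. This is a hypothetical illustration: `UsageInfo`, `split_by_frequency`, and `preset_frequency` are names chosen here, and because the source only says the first type is above the preset frequency and the second type below it, placing entries exactly at the threshold in the second type is an assumption.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class UsageInfo:
    """Hypothetical usage record: cache key plus its observed usage frequency."""
    key: str
    frequency: int


def split_by_frequency(
    entries: Iterable[UsageInfo], preset_frequency: int
) -> tuple[list[UsageInfo], list[UsageInfo]]:
    """Split historical cache data into first-type and second-type cache data.

    Entries used more often than preset_frequency become first-type data;
    all others (including ties, by assumption) become second-type data.
    """
    first_type: list[UsageInfo] = []
    second_type: list[UsageInfo] = []
    for entry in entries:
        if entry.frequency > preset_frequency:
            first_type.append(entry)
        else:
            second_type.append(entry)
    return first_type, second_type


entries = [UsageInfo("a", 120), UsageInfo("b", 3), UsageInfo("c", 50)]
hot, cold = split_by_frequency(entries, preset_frequency=50)
print([e.key for e in hot], [e.key for e in cold])  # prints: ['a'] ['b', 'c']
```

Restricting strategy generation to one of the two groups is how this embodiment limits the server's own load; which group the truncated text targets is not visible in this excerpt.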

Embodiment 3

[0076] Figure 5 is the third schematic flowchart of the cache data processing method in an embodiment of the present invention. As shown in Figure 5, the method includes:

[0077] Step 501: the server acquires usage characteristic information of historical cache data;

[0078] In this embodiment, the usage characteristic information includes at least the usage frequency of the historical cache data; it may further include a priority set for the historical cache data. Those skilled in the art will appreciate that, in practical applications, the usage characteristic information can be defined according to actual needs.

[0079] In practical applications, the step of obtaining the usage characteristic information of the historical cache data may specifically be performed as follows:

[0080] The server receives a service request sent by a terminal and counts the invocation characteristic information of the historical cache data called by the...
