Integrated convolutional neural network-based gender recognition method

Convolutional neural network and neural network technology, applied in character and pattern recognition, instruments, computer components, etc. The method addresses the heavy workload and complex parameter tuning that degrade face gender recognition performance, and achieves good face gender recognition performance, reduced dependencies, and improved accuracy.
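The excerpt does not state how the outputs of the base classifiers in the integrated (ensemble) network are combined. As a minimal sketch, assuming each base classifier emits a hard "male"/"female" label and the ensemble fuses them by simple majority voting (a common fusion rule, not confirmed by the source), the integration step could look like this; `majority_vote` is a hypothetical helper name:

```python
from collections import Counter
from typing import Sequence


def majority_vote(predictions: Sequence[str]) -> str:
    """Fuse the gender labels predicted by M base classifiers for one face.

    Assumption (not from the patent text): each base classifier outputs a hard
    label such as "male" or "female", and the ensemble returns the most
    frequent label. On a tie, Counter.most_common keeps first-encountered
    order, so the label that appears first in `predictions` wins.
    """
    if not predictions:
        raise ValueError("need at least one base-classifier prediction")
    counts = Counter(predictions)
    return counts.most_common(1)[0][0]


# Example: three hypothetical base classifiers vote on one face image.
print(majority_vote(["male", "female", "male"]))
```

Choosing an odd M avoids ties for a two-class problem; soft voting over predicted probabilities would be an equally plausible alternative.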

Embodiment

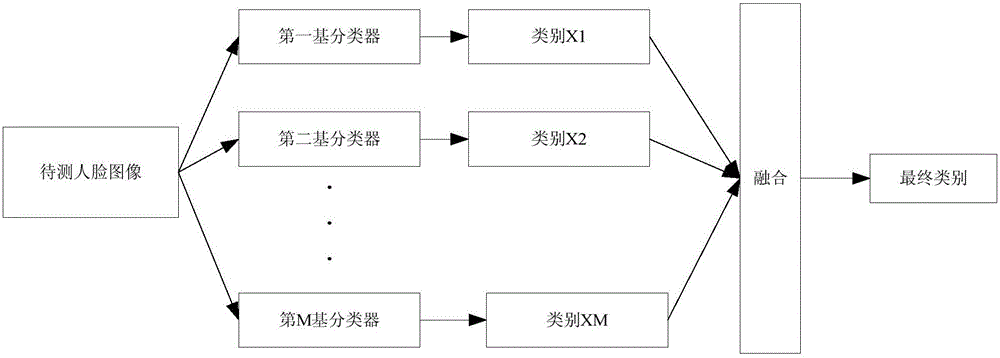

[0050] This embodiment discloses a gender recognition method based on an integrated convolutional neural network. As shown in Figure 1, the steps are as follows:

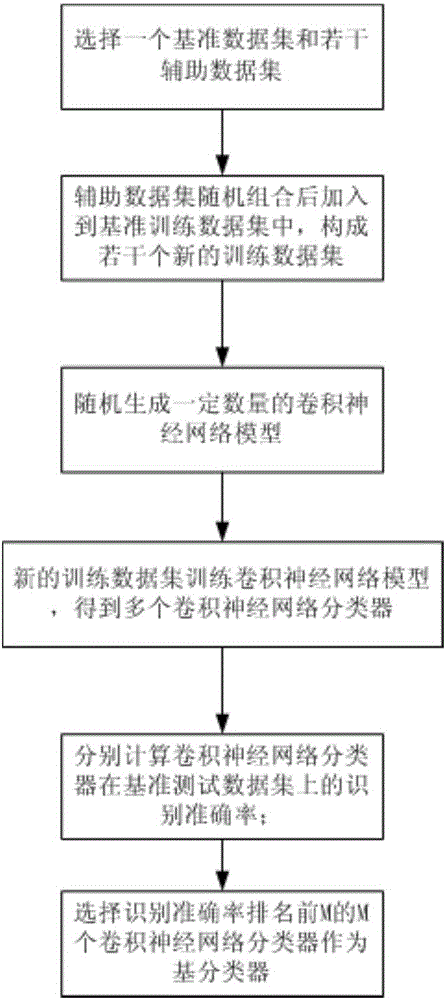

[0051] S1. First, several data sets are randomly combined to form several new training data sets; then M convolutional neural network classifiers trained on these new training data sets are selected as base classifiers, namely the first base classifier, the second base classifier, ..., and the Mth base classifier. As shown in Figure 2, the base classifiers in this step are obtained as follows:
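Step S1 can be sketched as two pure functions, under stated assumptions. The patent excerpt does not give the combination rule or the selection criterion, so this sketch assumes (hypothetically) that each new training set is the benchmark training set plus a random subset of the auxiliary data sets, and that the M base classifiers are the candidates with the highest accuracy on the benchmark test set. The function names and data-set labels are illustrative only; CNN training itself is outside the sketch.

```python
import random
from typing import Dict, List, Sequence


def random_training_sets(
    benchmark_train: str, auxiliary: Sequence[str], n_sets: int, seed: int = 0
) -> List[List[str]]:
    """Form n_sets new training sets by random combination (hypothetical rule).

    Each new set always contains the benchmark training set and adds a random
    subset (possibly empty) of the auxiliary data sets.
    """
    rng = random.Random(seed)  # seeded so the combinations are reproducible
    sets = []
    for _ in range(n_sets):
        k = rng.randint(0, len(auxiliary))  # number of auxiliary sets to merge
        chosen = rng.sample(list(auxiliary), k)
        sets.append([benchmark_train] + sorted(chosen))
    return sets


def select_base_classifiers(test_accuracy: Dict[str, float], m: int) -> List[str]:
    """Keep the M candidates with the highest benchmark-test accuracy (assumed criterion)."""
    ranked = sorted(test_accuracy, key=lambda name: test_accuracy[name], reverse=True)
    return ranked[:m]


# Usage with illustrative names and made-up accuracies (not results from the patent).
combos = random_training_sets("benchmark_train", ["aux_1", "aux_2"], n_sets=4)
print(select_base_classifiers({"cnn_a": 0.91, "cnn_b": 0.88, "cnn_c": 0.93}, m=2))
```

Keeping the benchmark training set in every combination means each base classifier still sees in-domain data, while the random auxiliary mix gives the ensemble diversity.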

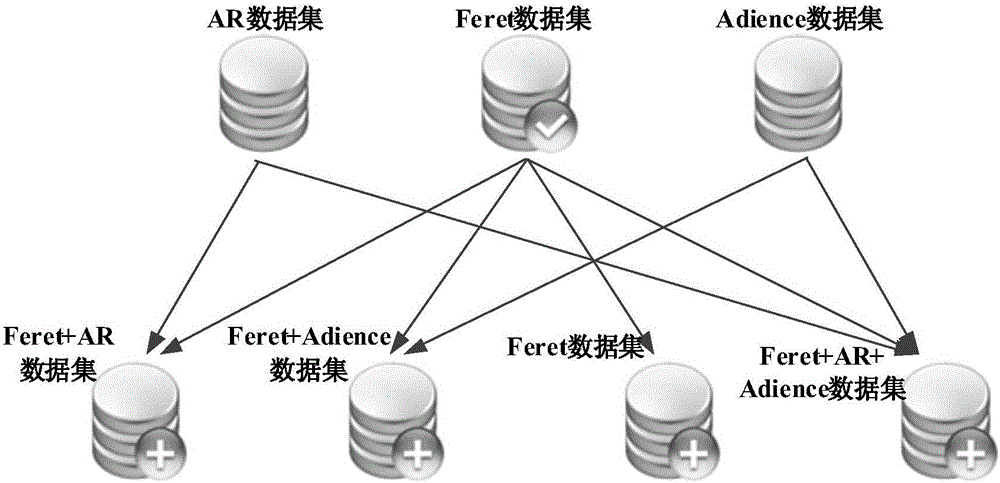

[0052] S11. Select a benchmark data set and several auxiliary data sets, and divide the benchmark data set into a benchmark training data set and a benchmark test data set. As shown in Figure 3, in this embodiment the Feret data set is selected as the benchmark data set, and the Adience data set and the AR da...
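The division of the benchmark data set in S11 can be illustrated with a seeded shuffle-and-split. The split ratio is not given in the excerpt; the 80/20 default below is an assumption, as is the hypothetical helper name `split_benchmark`. A gender-stratified split would be a reasonable refinement if the benchmark classes are imbalanced.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_benchmark(
    samples: Sequence[T], test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Split benchmark samples into (training set, test set).

    Assumption: a random, non-stratified split with a fixed seed; the 0.2 test
    fraction is illustrative and not taken from the patent.
    """
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be in (0, 1)")
    shuffled = list(samples)  # copy so the caller's sequence is untouched
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Usage: split 100 hypothetical sample IDs from the benchmark data set.
train_ids, test_ids = split_benchmark(list(range(100)), test_fraction=0.2, seed=1)
print(len(train_ids), len(test_ids))
```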
