Image processing method and electronic equipment

This invention relates to electronic devices and image processing technology and is applied in image communication, television, electrical components, and similar fields. It can solve problems such as "blind eyes", poor viewing angles, and the inability to adjust them, thereby achieving a good image effect.


Examples

Example 1

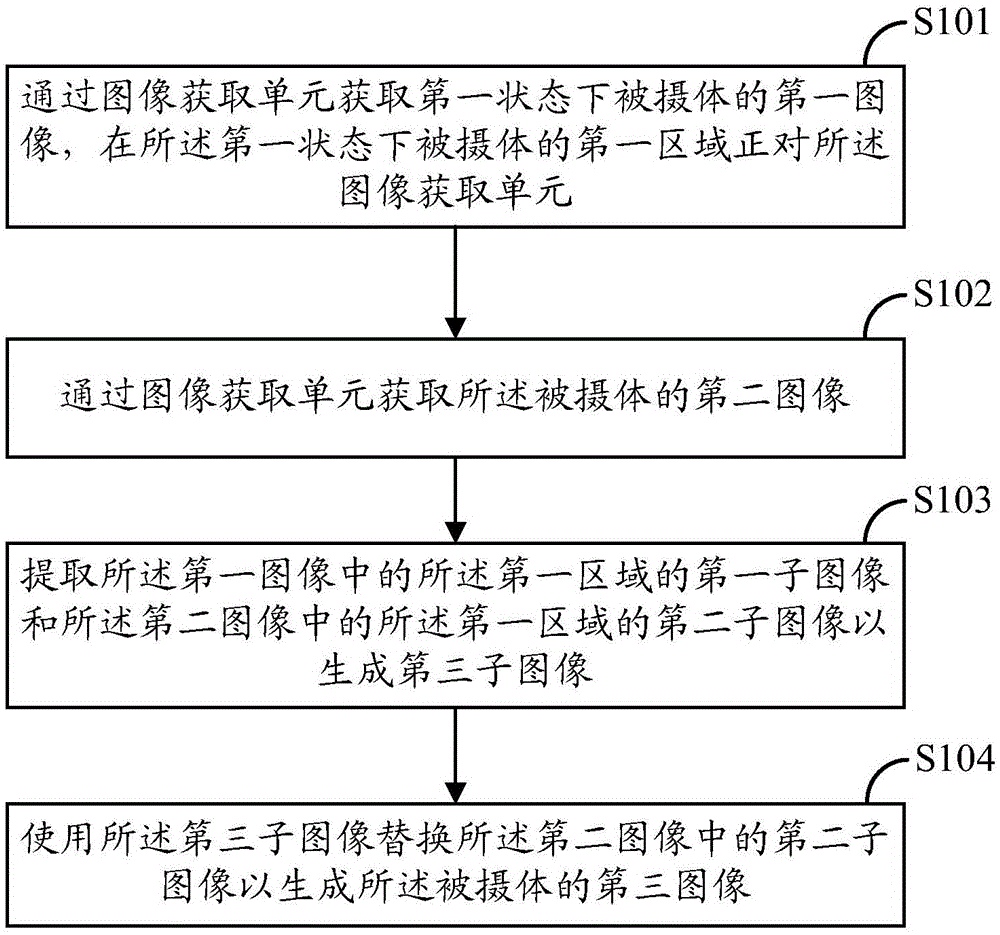

[0023] The image processing method according to the first embodiment of the present invention will be described below with reference to Figure 1 and Figures 2a-2d. Figure 1 is a flowchart illustrating the image processing method according to the first embodiment of the present invention. Figures 2a-2c are effect diagrams illustrating the image processing method according to the first embodiment of the present invention. The image processing method 100 according to the first embodiment of the present invention is applied to an electronic device, which may be any electronic device that has an image acquisition unit such as a camera. For example, the electronic device may be a desktop computer, a laptop computer, a tablet computer, a smartphone, or the like.

[0024] In addition, in this embodiment, the image processing method according to the first embodiment of the present invention will be described as...

Example 2

[0046] The image processing method according to the second embodiment of the present invention will be described below with reference to Figure 3 and Figures 4a-4d. Figure 3 is a flowchart illustrating the image processing method according to the second embodiment of the present invention. Figures 4a-4c are effect diagrams illustrating the image processing method according to the second embodiment of the present invention. The image processing method 200 according to the second embodiment of the present invention is applied to an electronic device, which may be any electronic device that has an image acquisition unit such as a camera. For example, the electronic device may be a desktop computer, a laptop computer, a tablet computer, a smartphone, or the like.

[0047] In addition, in this embodiment, it will be assumed that the electronic device is a notebook computer having a first camera subunit and a second ca...

Example 3

[0069] An electronic device 500 according to a fifth embodiment of the present invention is described below with reference to Figure 5.

[0070] The electronic device 500 includes:

[0071] an image acquisition unit 501 configured to acquire an image of a subject;

[0072] a control unit 502 configured to control the image acquisition unit to acquire a first image of the subject in a first state, in which a first region of the subject faces the image acquisition unit, and to control the image acquisition unit to acquire a second image of the subject;

[0073] a sub-image extraction unit 503 configured to extract a first sub-image of the first region in the first image and a second sub-image of the first region in the second image, so as to generate a third sub-image; and

[0074] an image synthesis unit 504 configured to replace the second sub-image in the second image with the third sub-image to generate a third image of the subject.
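The cooperation of units 501 to 504 can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patented implementation: images are assumed to be grayscale 2-D lists, the first region is assumed to be already located as a same-sized rectangle in each image (the excerpt does not say how it is detected), and the capture step of control unit 502 is not modeled, so the two images are simply passed in. The excerpt also does not specify how the third sub-image is generated from the first and second sub-images; the weighted blend below (`alpha`) is a hypothetical stand-in. `Region`, `extract_sub_image`, `generate_third_sub_image`, `synthesize`, and `process` are illustrative names only.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical representation: a grayscale image as a list of pixel rows.
Image = List[List[float]]


@dataclass(frozen=True)
class Region:
    """Axis-aligned rectangle locating the first region in one image (assumption)."""
    top: int
    left: int
    height: int
    width: int


def extract_sub_image(image: Image, region: Region) -> Image:
    """Crop the pixels inside `region` (the role of sub-image extraction unit 503)."""
    return [list(row[region.left:region.left + region.width])
            for row in image[region.top:region.top + region.height]]


def generate_third_sub_image(first_sub: Image, second_sub: Image,
                             alpha: float = 1.0) -> Image:
    """Hypothetical fusion rule: per-pixel weighted blend of the two sub-images.

    alpha = 1.0 keeps the first sub-image (captured while the region faced the
    camera) unchanged; smaller values mix in more of the second sub-image.
    """
    if len(first_sub) != len(second_sub) or any(
            len(a) != len(b) for a, b in zip(first_sub, second_sub)):
        raise ValueError("sub-images must have the same size")
    return [[alpha * a + (1.0 - alpha) * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(first_sub, second_sub)]


def synthesize(second_image: Image, third_sub: Image, region: Region) -> Image:
    """Paste `third_sub` over the second sub-image (the role of synthesis unit 504).

    Works on a copy, so the captured second image is left untouched.
    """
    result = [list(row) for row in second_image]
    for dy, row in enumerate(third_sub):
        for dx, value in enumerate(row):
            result[region.top + dy][region.left + dx] = value
    return result


def process(first_image: Image, second_image: Image, region_in_first: Region,
            region_in_second: Region, alpha: float = 1.0) -> Image:
    """Run extraction, fusion, and synthesis to produce the third image."""
    first_sub = extract_sub_image(first_image, region_in_first)
    second_sub = extract_sub_image(second_image, region_in_second)
    third_sub = generate_third_sub_image(first_sub, second_sub, alpha)
    return synthesize(second_image, third_sub, region_in_second)
```

For example, with a 4x4 first image filled with 9 and an all-zero second image, `process(first, second, Region(1, 1, 2, 2), Region(1, 1, 2, 2))` overwrites only the central 2x2 block of the second image with 9.0. Taking the region coordinates separately for each image allows for the subject moving between the two captures; a real device would additionally need to detect the region and align or resize the sub-images, which this sketch leaves out.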

[0075] Prefer...
