Video spatio-temporal retrieval method

A video spatio-temporal retrieval technology, applied in video data retrieval, video data query, special data processing applications, and similar fields. It addresses the problems that retrieval result sets are large, densely distributed in space, and difficult to analyze visually, and it achieves clear imaging and improved usability.

Embodiment Construction

[0039] The technical solution of the present invention is described in further detail below with reference to the accompanying drawings:

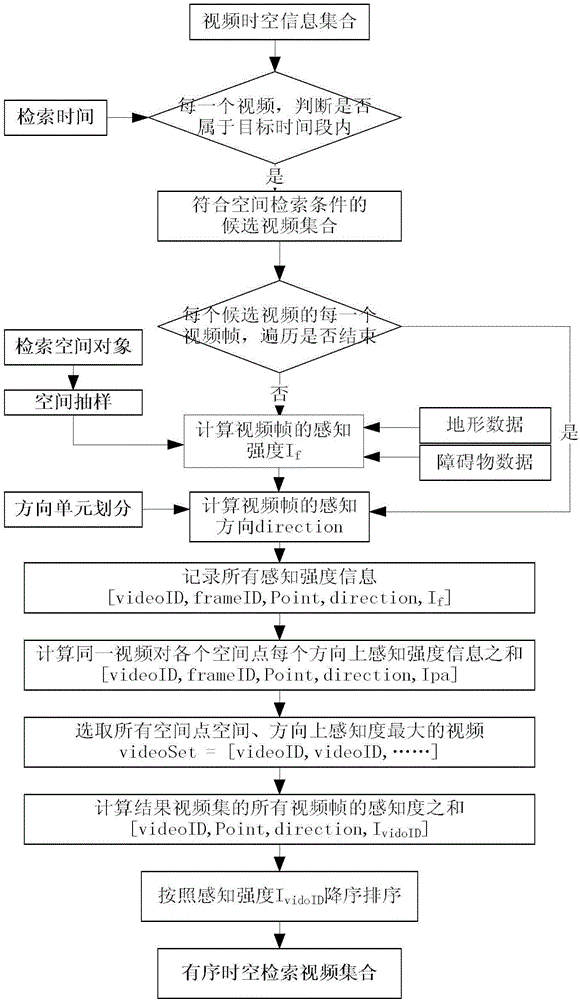

[0040] The basic steps of the video spatio-temporal retrieval method of the present invention are shown in Figure 1, specifically:

[0041] Step 1: Obtain the spatio-temporal information of the video:

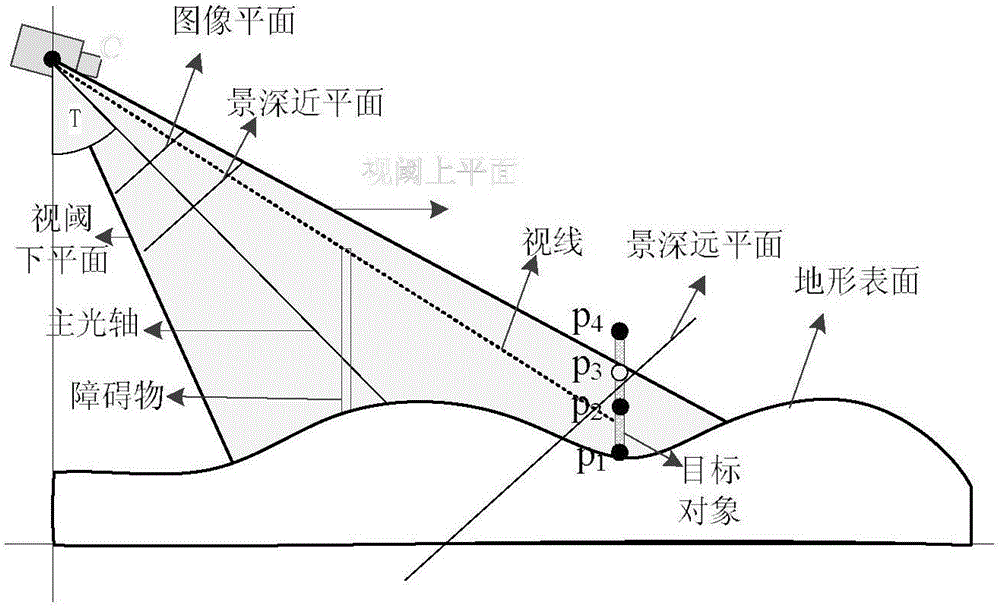

[0042] (1) Obtain the information of each video, including imaging chip size, image resolution, aperture diameter, focus distance, relative aperture, circle of confusion diameter, video shooting start time, and video shooting end time;

[0043] (2) Obtain the information of each video frame in each video, including the frame's shooting position (latitude and longitude coordinates, shooting height), shooting attitude (pitch angle, rotation angle), focal length, and shooting time;

[0044] (3) The information of each video, together with the corresponding information of each of its video frames, constitutes the complete spatio-temporal description information of the video.
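The record assembled in Step 1 can be pictured as one per-video structure that holds a list of per-frame structures. The following Python sketch is illustrative only and is not taken from the patent: the class names, field names, units, and the `add_frame` and `frames_between` helpers are all assumptions chosen to mirror the fields listed in sub-steps (1) and (2).

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Tuple


@dataclass
class VideoInfo:
    """Per-video fields from sub-step (1). Names and units are assumed."""
    chip_size_mm: Tuple[float, float]    # imaging chip width and height
    resolution_px: Tuple[int, int]       # image width and height in pixels
    aperture_diameter_mm: float
    focus_distance_m: float
    relative_aperture: float
    circle_of_confusion_mm: float
    start_time: datetime                 # video shooting start time
    end_time: datetime                   # video shooting end time


@dataclass
class FrameInfo:
    """Per-frame fields from sub-step (2). Names and units are assumed."""
    latitude_deg: float
    longitude_deg: float
    height_m: float                      # shooting height
    pitch_deg: float
    rotation_deg: float
    focal_length_mm: float
    shot_time: datetime


@dataclass
class VideoSpatioTemporalDescription:
    """Sub-step (3): video information plus the information of its frames."""
    video: VideoInfo
    frames: List[FrameInfo] = field(default_factory=list)

    def add_frame(self, frame: FrameInfo) -> None:
        # Hypothetical consistency check: a frame must fall inside the
        # shooting interval recorded for its video.
        if not (self.video.start_time <= frame.shot_time <= self.video.end_time):
            raise ValueError("frame time lies outside the video's shooting interval")
        self.frames.append(frame)

    def frames_between(self, t0: datetime, t1: datetime) -> List[FrameInfo]:
        # Return the frames shot within the closed interval [t0, t1].
        return [f for f in self.frames if t0 <= f.shot_time <= t1]
```

Keeping the per-frame position, attitude, and focal length separate from the per-video optics reflects the split in the text: the former vary frame by frame, while the latter are recorded once per video.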

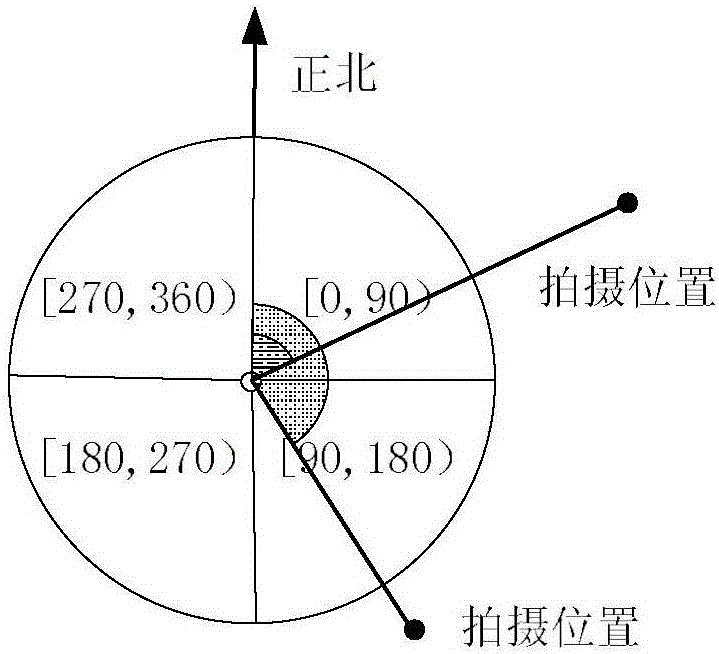

[0045]S...
