Spatial coherence feature-based quick identification method for human face expression of any pose

A technology for recognizing facial expressions in arbitrary poses, applied in the field of emotion recognition. It addresses two problems, a reduced recognition rate of the model and a lack of spatially coherent features, and improves both recognition rate and accuracy.

Embodiment Construction

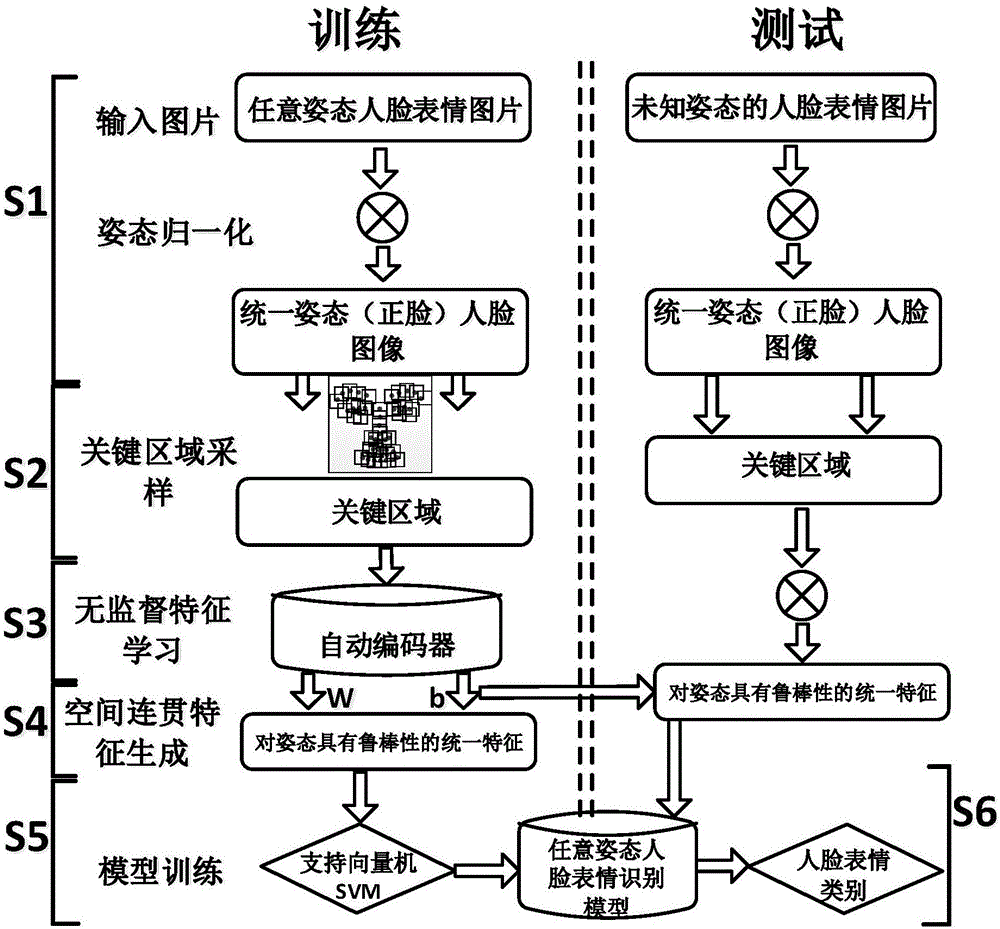

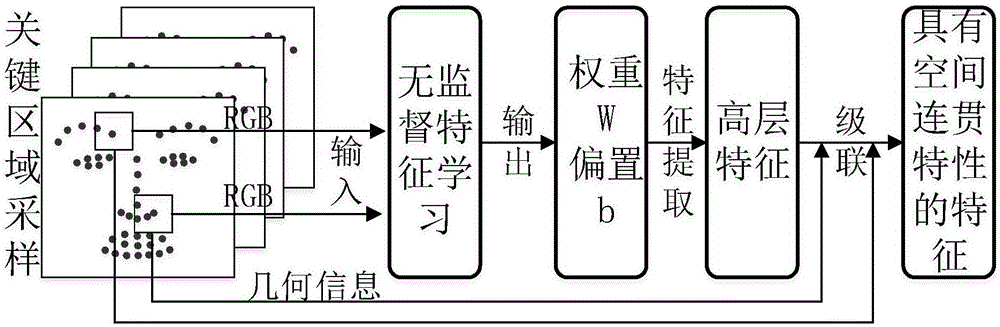

[0033] The present invention first normalizes the pose of the original image, synthesizing the frontal face image that corresponds to a face image of any pose, and then preprocesses the synthesized frontal face image by converting it to grayscale and normalizing its size. Next, key regions of the preprocessed frontal face image are sampled using a tree model, and an autoencoder, an unsupervised feature learning method, is trained on the sampled key regions to learn the mapping between input features and output features. This mapping is obtained by iteratively updating the reconstruction error function between the input and output features; training stops when the value of the reconstruction error function converges, and the weights and biases at that point constitute the final mapping. This mapping is then applied to all synthesized frontal face images to obtain unified front...
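The preprocessing and feature-learning steps in paragraph [0033] can be illustrated with a minimal pure-Python sketch. It rests on several assumptions that the text does not specify: grayscale conversion uses the ITU-R BT.601 luma weights, size normalization is a nearest-neighbour resize, the tree model is replaced by fixed hypothetical key-region centres (the tree model itself is not implemented), the autoencoder has one sigmoid hidden layer trained by full-batch gradient descent on a squared reconstruction error, and "convergence" means the error changes by less than a threshold `tol` between epochs. Pose normalization and frontal-face synthesis are not shown. All function and parameter names (`to_grayscale`, `resize_nearest`, `crop_patch`, `train_autoencoder`, `encode`, `tol`, and so on) are hypothetical.

```python
import math
import random

# --- Preprocessing: grayscale conversion and size normalization --------------
def to_grayscale(rgb):
    """Map an H x W grid of (R, G, B) tuples (0-255) to gray levels in [0, 1]
    using the ITU-R BT.601 luma weights (an assumed choice)."""
    return [[(0.299 * r + 0.587 * g + 0.114 * b) / 255.0 for (r, g, b) in row]
            for row in rgb]

def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize so that every face image has the same size."""
    in_h, in_w = len(img), len(img[0])
    return [[img[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]

# --- Key-region sampling (the tree model itself is NOT implemented here) -----
def crop_patch(img, cy, cx, size):
    """Flattened size x size patch centred near (cy, cx), shifted inward if it
    would extend past the image border."""
    h, w = len(img), len(img[0])
    top = min(max(cy - size // 2, 0), h - size)
    left = min(max(cx - size // 2, 0), w - size)
    return [img[top + i][left + j] for i in range(size) for j in range(size)]

# --- Autoencoder: learn the mapping from input patches to features -----------
def _sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def _layer(x, W, b):
    """Fully connected layer with sigmoid activation: sigmoid(W x + b)."""
    return [_sigmoid(sum(w * v for w, v in zip(row, x)) + bias)
            for row, bias in zip(W, b)]

def train_autoencoder(samples, n_hidden, lr=0.5, tol=1e-7, max_epochs=500, seed=0):
    """Full-batch gradient descent on the mean squared reconstruction error.
    Stops when the error changes by less than `tol` between epochs, or after
    `max_epochs`. Returns encoder weights, encoder biases, and error history."""
    rng = random.Random(seed)
    n_in, n = len(samples[0]), len(samples)
    W1 = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
    W2 = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in range(n_in)]
    b1, b2 = [0.0] * n_hidden, [0.0] * n_in
    history = []
    for _ in range(max_epochs):
        gW1 = [[0.0] * n_in for _ in range(n_hidden)]
        gW2 = [[0.0] * n_hidden for _ in range(n_in)]
        gb1, gb2 = [0.0] * n_hidden, [0.0] * n_in
        loss = 0.0
        for x in samples:
            h = _layer(x, W1, b1)                      # encode
            y = _layer(h, W2, b2)                      # decode (reconstruct)
            loss += 0.5 * sum((yk - xk) ** 2 for yk, xk in zip(y, x))
            # Backpropagate the reconstruction error through both layers.
            d_out = [(yk - xk) * yk * (1 - yk) for yk, xk in zip(y, x)]
            d_hid = [sum(W2[k][j] * d_out[k] for k in range(n_in)) * h[j] * (1 - h[j])
                     for j in range(n_hidden)]
            for k in range(n_in):
                gb2[k] += d_out[k]
                for j in range(n_hidden):
                    gW2[k][j] += d_out[k] * h[j]
            for j in range(n_hidden):
                gb1[j] += d_hid[j]
                for i in range(n_in):
                    gW1[j][i] += d_hid[j] * x[i]
        history.append(loss / n)
        # Gradient step on every weight matrix and bias vector.
        for W, gW in ((W1, gW1), (W2, gW2)):
            for row, grow in zip(W, gW):
                for i in range(len(row)):
                    row[i] -= lr * grow[i] / n
        for b, gb in ((b1, gb1), (b2, gb2)):
            for i in range(len(b)):
                b[i] -= lr * gb[i] / n
        if len(history) > 1 and abs(history[-2] - history[-1]) < tol:
            break
    return W1, b1, history

def encode(x, W1, b1):
    """Apply the learned mapping (encoder weights and biases) to one patch."""
    return _layer(x, W1, b1)

if __name__ == "__main__":
    rng = random.Random(1)
    # Random-pixel stand-ins for synthesized frontal faces (not real data).
    faces = [[[tuple(rng.randrange(256) for _ in range(3)) for _ in range(32)]
              for _ in range(32)] for _ in range(4)]
    landmarks = [(8, 8), (8, 16), (16, 12)]  # hypothetical key-region centres
    images = [resize_nearest(to_grayscale(f), 24, 24) for f in faces]
    patches = [crop_patch(img, cy, cx, 4) for img in images for cy, cx in landmarks]
    W1, b1, history = train_autoencoder(patches, n_hidden=6)
    features = [encode(p, W1, b1) for p in patches]
    print(f"{len(history)} epochs, error {history[0]:.4f} -> {history[-1]:.4f}")
```

Run as a script, the sketch trains on random-pixel stand-ins and prints the number of epochs and the reconstruction error before and after training. With real data, the patches would come from the pose-normalized frontal faces and from whichever key regions the tree model selects; only the encoder weights and biases are kept, mirroring the statement that they form the final mapping.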
