Image pixel labeling method based on deep convolutional neural network

A method applying deep convolutional neural network technology in the field of computer vision, addressing problems such as the difficulty of improving initial labels and the difficulty of learning the label-improvement task.

Embodiment Construction

[0023] It should be noted that, in the case of no conflict, the embodiments in the present application and the features in the embodiments can be combined with each other. The present invention will be further described in detail below in conjunction with the drawings and specific embodiments.

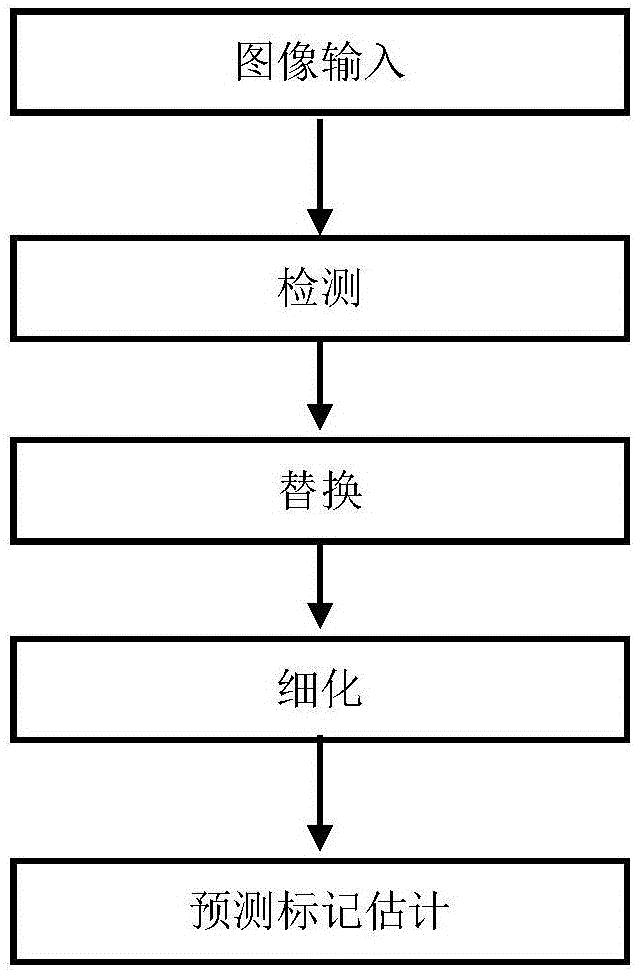

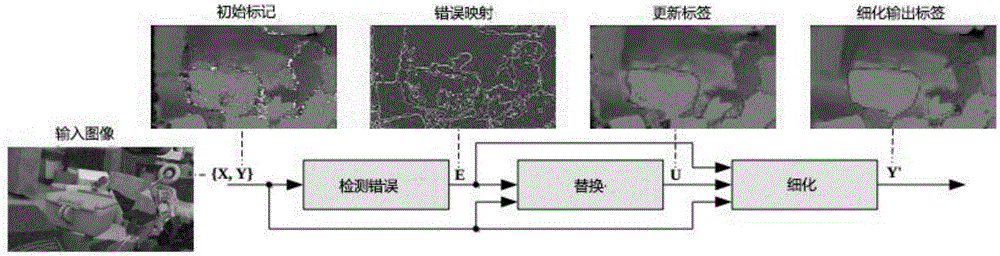

[0024] Figure 1 is a system flowchart of the image pixel labeling method based on a deep convolutional neural network in the present invention. It mainly comprises image input, detection, replacement, refinement, and predicted label estimation.

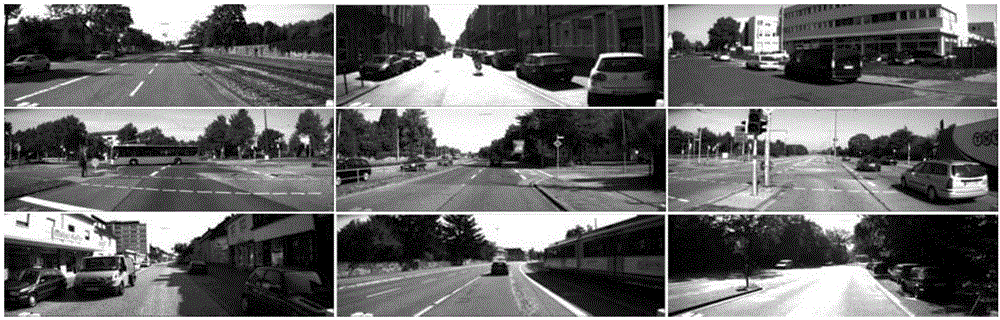

[0025] The image input uses a traffic scene set as the data set, which includes scene images of various types of vehicles driving on the road, at a resolution of 1392×512; the vehicle objects include cars, trucks, trams, etc. Let $X = \{x_i\}_{i=1}^{H \times W}$ represent an input image of size H×W, where $x_i$ is the i-th pixel of the image, and let a corresponding set of per-pixel values represent some initial label estimates for the input image.
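As an illustration of the notation in this paragraph, the following minimal sketch treats the image X as a flat sequence of H×W pixels and allocates a same-sized container for the initial label estimates. It rests on several assumptions not stated in the text: that 1392×512 means width × height, that pixels are indexed in row-major order, and that indexing is 0-based (the text counts from the i-th pixel without fixing an origin). The names `pixel_index`, `pixel_coords`, and `initial_labels` are hypothetical, and the pixel values are placeholders rather than real data.

```python
# Assumption: the stated resolution 1392x512 is width x height.
W, H = 1392, 512


def pixel_index(row, col, width=W):
    """Map (row, col) to a 0-based pixel position i, assuming row-major order."""
    return row * width + col


def pixel_coords(i, width=W):
    """Inverse of pixel_index: recover (row, col) from the flat position i."""
    return divmod(i, width)


# Placeholder grayscale image (all zeros); a real input would come from the data set.
image = [[0] * W for _ in range(H)]

# X as a flat sequence of pixels x_i, one entry per pixel.
X = [value for row in image for value in row]

# Hypothetical container for the initial label estimates: one value per pixel.
initial_labels = [0.0] * (H * W)
```

Under these assumptions, `X[pixel_index(r, c)]` and `initial_labels[pixel_index(r, c)]` refer to the same pixel, which is the correspondence the later detection, replacement, and refinement stages would rely on.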

[0026] Wherein, the detection detects the wrong...
