A method, device and terminal for realizing interactive image segmentation

An interactive image segmentation technique, applied in the field of image processing, that addresses problems such as unsatisfactory segmentation results.



Examples

Embodiment 1

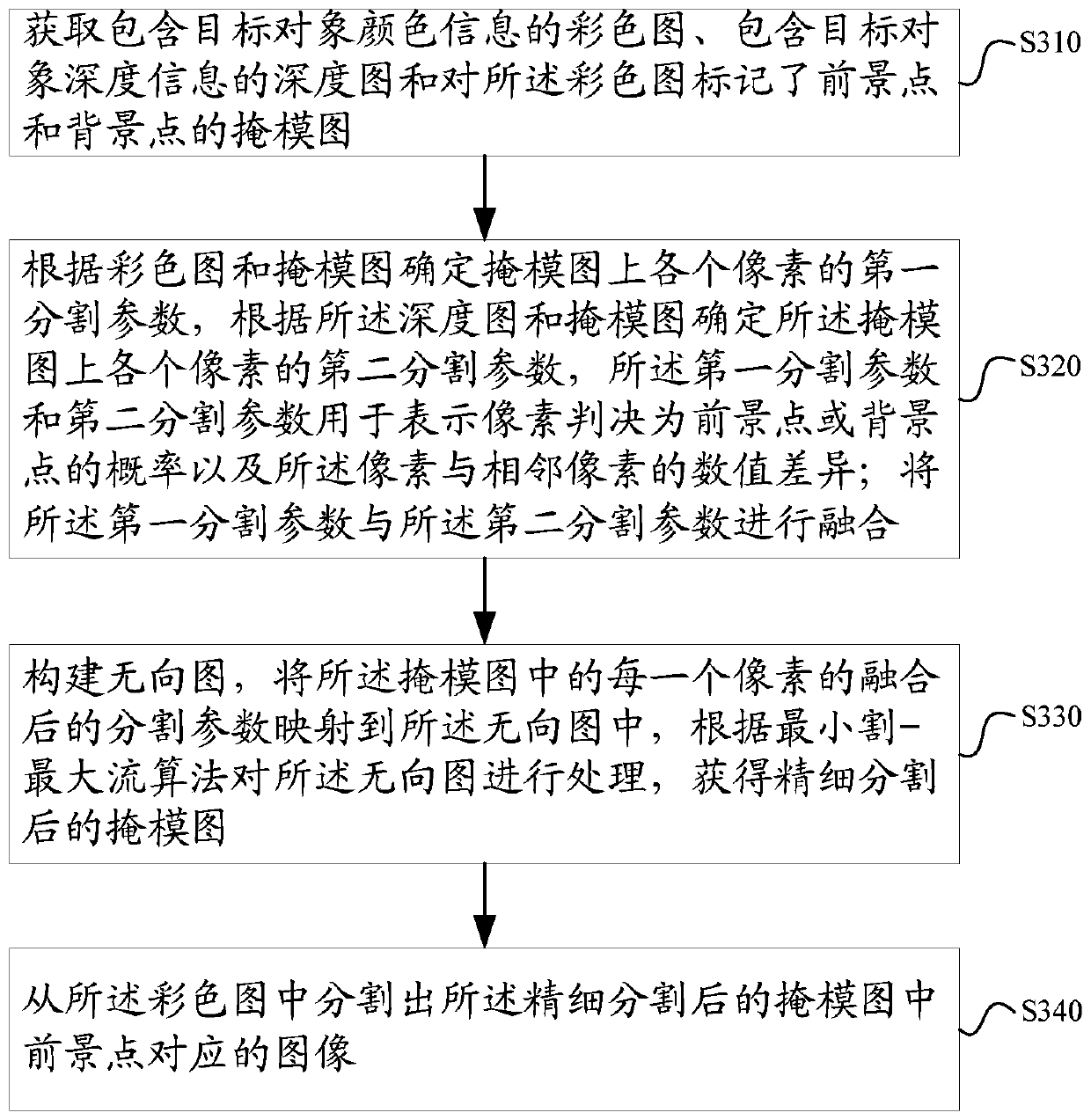

[0139] As shown in Figure 3, an embodiment of the present invention provides a method for realizing interactive image segmentation, including:

[0140] S310. Obtain a color map containing the color information of the target object, a depth map containing the depth information of the target object, and a mask map in which foreground points and background points are marked on the color map;

[0141] S320. Determine a first segmentation parameter for each pixel on the mask map according to the color map and the mask map, and determine a second segmentation parameter for each pixel on the mask map according to the depth map and the mask map. The first segmentation parameter and the second segmentation parameter each represent the probability that the pixel is determined to be a foreground point or a background point and the numerical difference between the pixel and its adjacent pixels; the first segmentation parameter and the second segmentation parameter ca...
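The excerpt does not say how the two segmentation parameters are computed. As a minimal sketch, assuming a graph-cut-style formulation, each channel (color reduced to gray here, or depth) can yield a per-pixel foreground probability estimated from the marked points, together with the numerical difference to each adjacent pixel. The function names, the label values, the Gaussian similarity model and its `sigma`, and the single-channel input are all illustrative assumptions, not the method claimed in the patent.

```python
import math

FG, BG, UNKNOWN = 1, 0, -1  # hypothetical mask labels (assumption)

def seed_mean(values, mask, label):
    """Mean channel value over the pixels marked with `label`."""
    picked = [values[r][c]
              for r in range(len(values))
              for c in range(len(values[0]))
              if mask[r][c] == label]
    return sum(picked) / len(picked)

def foreground_probability(values, mask, sigma=10.0):
    """Per-pixel foreground probability from an assumed Gaussian
    similarity to the mean of the foreground and background marks."""
    fg_mean = seed_mean(values, mask, FG)
    bg_mean = seed_mean(values, mask, BG)
    probs = []
    for row in values:
        out = []
        for v in row:
            d_fg = (v - fg_mean) ** 2 / (2 * sigma ** 2)
            d_bg = (v - bg_mean) ** 2 / (2 * sigma ** 2)
            # Logistic form of like_fg / (like_fg + like_bg); clamped for stability.
            z = max(-60.0, min(60.0, d_bg - d_fg))
            out.append(1.0 / (1.0 + math.exp(-z)))
        probs.append(out)
    return probs

def neighbor_differences(values):
    """Absolute difference between each pixel and its right/down neighbor."""
    rows, cols = len(values), len(values[0])
    diffs = {}
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                diffs[((r, c), (r, c + 1))] = abs(values[r][c] - values[r][c + 1])
            if r + 1 < rows:
                diffs[((r, c), (r + 1, c))] = abs(values[r][c] - values[r + 1][c])
    return diffs

def segmentation_parameter(values, mask, sigma=10.0):
    """Bundle both parts: (probability map, adjacent-pixel differences)."""
    return foreground_probability(values, mask, sigma), neighbor_differences(values)

# Toy 2x3 data: the left side resembles the foreground mark, the right the background mark.
gray = [[200, 198, 40], [202, 45, 42]]
depth = [[220, 215, 60], [218, 58, 55]]
mask = [[FG, UNKNOWN, BG], [UNKNOWN, UNKNOWN, UNKNOWN]]

first_param = segmentation_parameter(gray, mask)    # from color map + mask map
second_param = segmentation_parameter(depth, mask)  # from depth map + mask map
```

Running the same routine once on the color channel and once on the depth channel mirrors the text's pairing of a first and a second parameter; how the two are then combined is cut off in the excerpt and is not guessed at here.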

Embodiment 3

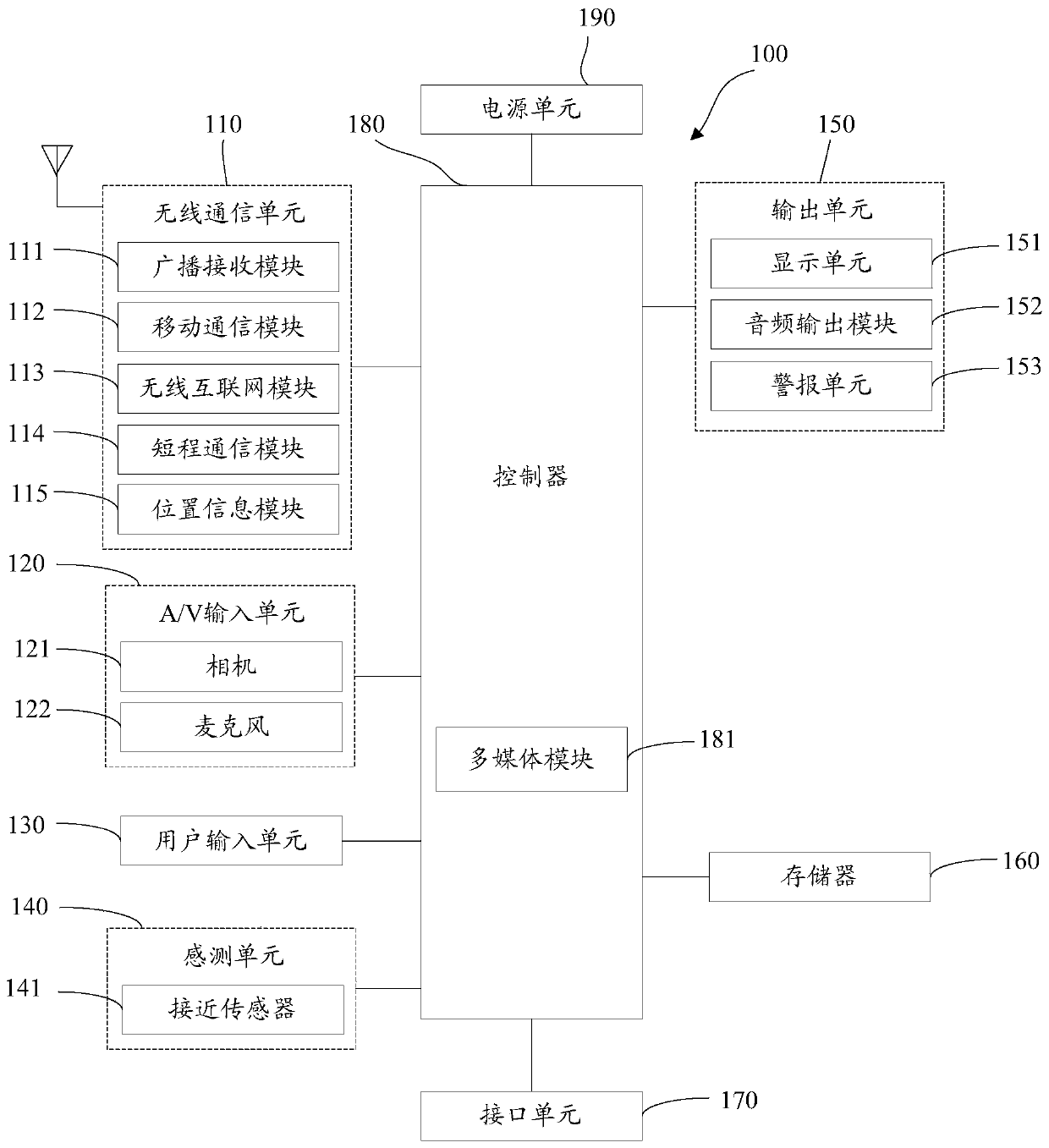

[0232] An embodiment of the present invention also provides a terminal, including the above-mentioned device for realizing interactive image segmentation.

Application Example 1

[0234] A method for implementing interactive image segmentation in this example includes the following steps:

[0235] Step S501, acquiring a color map containing the color information of the target object and a depth map containing the depth information of the target object;

[0236] As shown in Figure 5-a, the original image (color map) contains the target object "stapler", and the user has scribbled on the original image to indicate that the "stapler" should be segmented. As shown in Figure 5-b, the depth map is an image containing depth information and is the same size as the color map; in the depth map, darker regions were captured at a greater distance and lighter regions at a closer distance.

[0237] Step S502, obtaining a mask map initially marked with foreground points and background points for the color map;

[0238] As shown in Figure 5-c, the mask map is a picture initially marked with foreground points and backgroun...
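The inputs gathered in steps S501 and S502 can be pictured as three grids over the same pixel positions. The sketch below is illustrative only: the `SegmentationInput` class, the label values, the 0 to 255 depth range, and the requirement that the mask map also match the color map's size are assumptions. The source states only that the depth map is the same size as the color map and that lighter depth values correspond to closer distances.

```python
from dataclasses import dataclass
from typing import List, Tuple

FOREGROUND, BACKGROUND, UNMARKED = 1, 0, -1  # hypothetical label values

Pixel = Tuple[int, int, int]  # (R, G, B)

def grid_shape(grid):
    """Return (rows, cols) of a rectangular nested list."""
    return (len(grid), len(grid[0]) if grid else 0)

@dataclass
class SegmentationInput:
    color: List[List[Pixel]]  # color map containing the target object
    depth: List[List[int]]    # depth map: larger (lighter) value = closer
    mask: List[List[int]]     # mask map built from the user's scribbles

    def __post_init__(self):
        shape = grid_shape(self.color)
        if grid_shape(self.depth) != shape:
            raise ValueError("depth map must be the same size as the color map")
        if grid_shape(self.mask) != shape:  # assumption, not stated in the source
            raise ValueError("mask map must be the same size as the color map")

def is_closer(depth, a, b):
    """True if pixel a was captured closer than pixel b (lighter = closer)."""
    return depth[a[0]][a[1]] > depth[b[0]][b[1]]

def marked_pixels(mask, label):
    """Coordinates of all pixels carrying the given label."""
    return [(r, c)
            for r, row in enumerate(mask)
            for c, value in enumerate(row)
            if value == label]

sample = SegmentationInput(
    color=[[(180, 30, 30), (240, 240, 240)],
           [(175, 28, 32), (238, 241, 239)]],
    depth=[[230, 40],
           [225, 35]],
    mask=[[FOREGROUND, BACKGROUND],
          [UNMARKED, UNMARKED]],
)
```

For example, `is_closer(sample.depth, (0, 0), (0, 1))` compares a foreground-marked pixel with a background-marked one under the lighter-is-closer convention, and `marked_pixels(sample.mask, FOREGROUND)` lists the scribbled foreground points.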

