Multi-source multi-view transductive learning-based short video automatic tagging method and system

An automatic labeling technology based on multi-view learning, applied in the field of short video labeling, that addresses the lack of multi-source and multi-view feature fusion capabilities.

Embodiment Construction

[0101] The present invention is described below based on examples, but it should be noted that the present invention is not limited to these examples. In the following detailed description of the invention, some specific details are set forth. However, those skilled in the art can fully understand the present invention even where certain parts are not described in detail.

[0102] In addition, those of ordinary skill in the art should understand that the provided drawings are only for illustrating the objects, features and advantages of the present invention, and the drawings are not actually drawn to scale.

[0103] In addition, unless the context clearly requires otherwise, the words "include", "comprise" and similar words throughout the specification and claims should be interpreted in an inclusive sense rather than an exclusive or exhaustive sense; that is, in the sense of "including but not limited to".

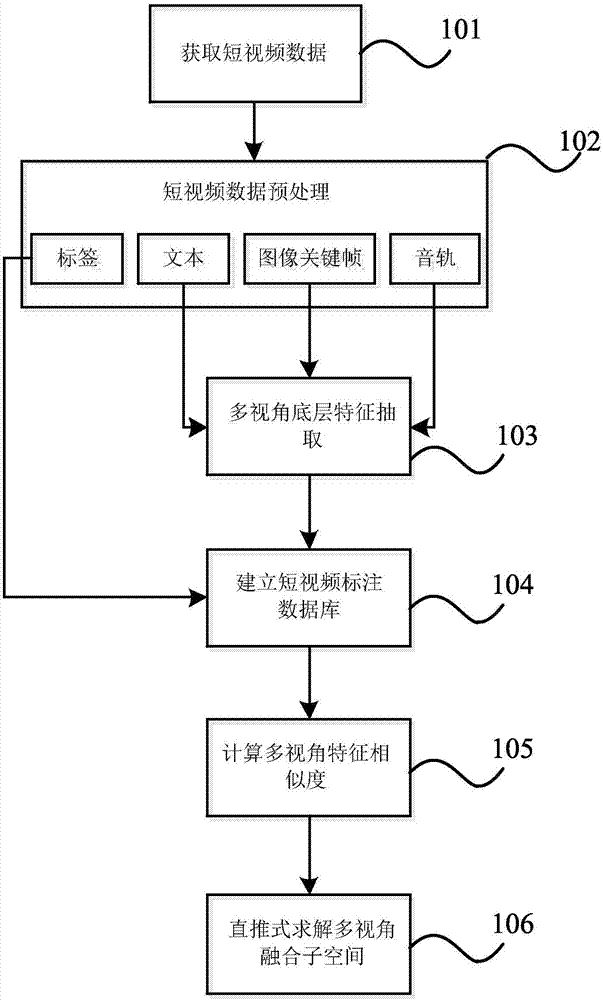

[0104] Figure 1 is a flowchart of a short vi...
