Index caching method and system based on off-heap memory

A technology relating to cache indexes and off-heap caching, applied in special data processing applications, instruments, electrical digital data processing, and similar fields, which removes the disk I/O bottleneck and thereby reduces performance bottlenecks.

Embodiment Construction

[0020] To make the objects, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the specific embodiments described here serve only to explain the present invention and do not limit it.

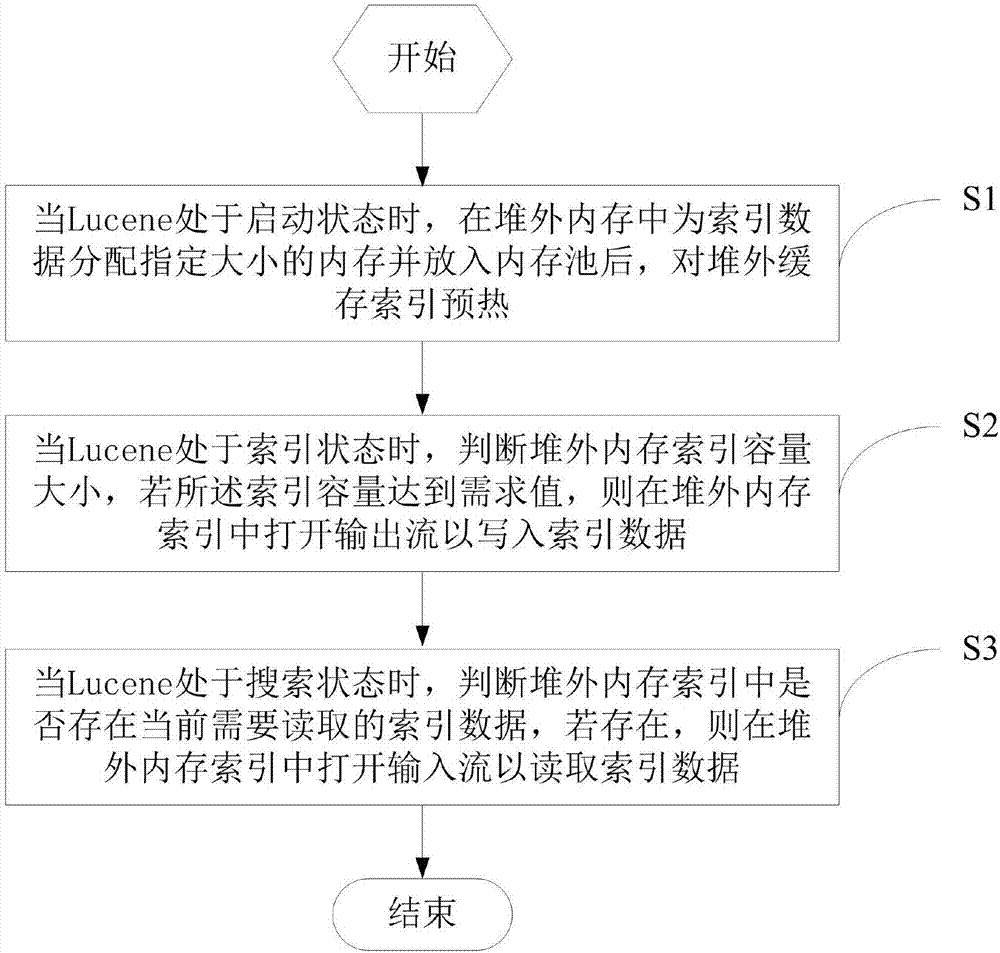

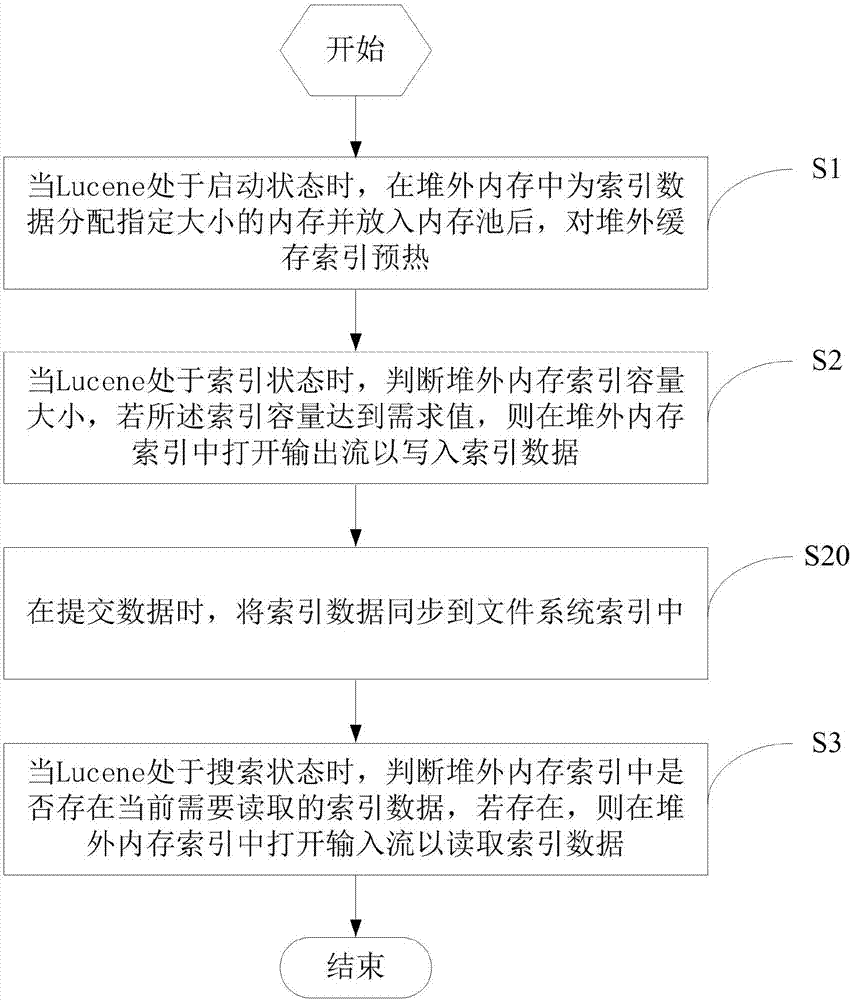

[0021] Referring to Figure 1, the first embodiment of the present invention discloses an index caching method based on off-heap memory. When the off-heap memory capacity is large enough, both reads and writes are served from memory, so neither involves disk interaction, which greatly reduces the performance bottleneck caused by disk I/O. The method includes:

[0022] S1. When Lucene starts up, allocate memory of a specified size for index data in off-heap memory, place it in the memory pool, and then warm up the off-heap cache index;
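
Step S1 can be illustrated with a minimal Java sketch. It is not the patented implementation: the class names `OffHeapBlockPool` and `OffHeapIndexCache`, the fixed block size, and the `"file:blockIndex"` key scheme are all assumptions made for illustration, and no Lucene API is used. The sketch only shows the general idea of pre-allocating direct (off-heap) buffers into a pool at startup and then warming the cache by copying index bytes into pooled blocks.

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical fixed-size pool of off-heap (direct) buffers, filled at startup. */
final class OffHeapBlockPool {
    private final ArrayDeque<ByteBuffer> free = new ArrayDeque<>();
    private final int blockSize;

    OffHeapBlockPool(int blockCount, int blockSize) {
        this.blockSize = blockSize;
        for (int i = 0; i < blockCount; i++) {
            // allocateDirect reserves memory outside the Java heap
            free.push(ByteBuffer.allocateDirect(blockSize));
        }
    }

    /** Returns a cleared block, or null when the pool is exhausted. */
    ByteBuffer acquire() {
        ByteBuffer block = free.poll();
        if (block != null) {
            block.clear();
        }
        return block;
    }

    void release(ByteBuffer block) { free.push(block); }

    int available() { return free.size(); }

    int blockSize() { return blockSize; }
}

/** Hypothetical cache that maps "file:blockIndex" keys to off-heap blocks. */
final class OffHeapIndexCache {
    private final OffHeapBlockPool pool;
    private final Map<String, ByteBuffer> blocks = new HashMap<>();

    OffHeapIndexCache(OffHeapBlockPool pool) { this.pool = pool; }

    /** Warm-up: copies index bytes into pooled blocks; returns the number of blocks cached. */
    int warmUp(String fileName, byte[] indexBytes) {
        int cached = 0;
        int size = pool.blockSize();
        for (int off = 0; off < indexBytes.length; off += size) {
            ByteBuffer block = pool.acquire();
            if (block == null) {
                break; // pool exhausted: the rest of the file stays uncached
            }
            int len = Math.min(size, indexBytes.length - off);
            block.put(indexBytes, off, len);
            block.flip(); // limit now marks the end of the valid data
            blocks.put(fileName + ":" + (off / size), block);
            cached++;
        }
        return cached;
    }

    /** Reads one byte at an absolute file position; returns -1 on a cache miss. */
    int readByte(String fileName, long position) {
        int size = pool.blockSize();
        ByteBuffer block = blocks.get(fileName + ":" + (position / size));
        int offset = (int) (position % size);
        if (block == null || offset >= block.limit()) {
            return -1;
        }
        return block.get(offset) & 0xFF;
    }
}
```

In this sketch a miss returns -1, which a caller would have to handle by falling back to disk. The source's observation that reads and writes stay in memory when the off-heap capacity is large enough corresponds to the case where the pool never runs out during warm-up.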

...
