Two-level gaze tracking method based on face orientation constraints

A gaze tracking technology constrained by face orientation, applied in the field of human-computer interaction, that can address problems such as the user's head shaking.

Examples

Embodiment 1

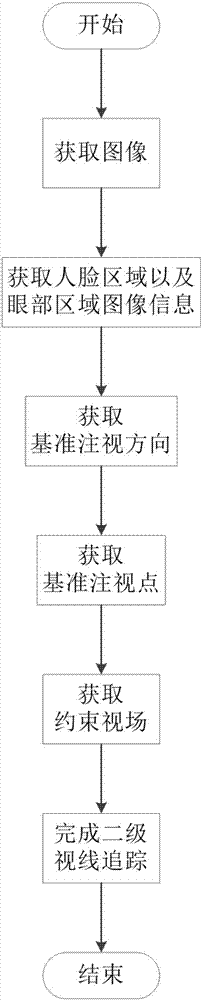

[0053] As shown in Figure 1, a two-level gaze tracking method based on face orientation constraints includes the following steps:

[0054] (1) Acquiring an image sequence through a non-invasive image acquisition unit;

[0055] Step (1) comprises the following sub-steps:

[0056] (1.1) Capture images of the user in real time through the camera and convert them into 256-level grayscale images.

[0057] (1.2) Adopt a color space reduction strategy: the color space is reduced to one thousandth of its original size, which cuts redundant computation.

[0058] (1.3) Use a bilateral filter to denoise the image while preserving edges.

[0059] (1.4) Apply gray-level equalization to enhance image contrast.
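Sub-steps (1.1) to (1.4) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the function names, the BT.601 luma weights, the filter parameters, and the quantization divisor of 10 are all hypothetical choices. In particular, the source does not say how the reduction to one thousandth is achieved; the sketch assumes each of three 8-bit channels is quantized to 26 levels, which leaves 26^3 = 17,576 colors out of 256^3 = 16,777,216, a ratio of roughly 1/955.

```python
import math

def to_gray(rgb_image):
    """(1.1) Convert rows of (r, g, b) tuples to 8-bit gray (assumed BT.601 weights)."""
    return [[int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
            for row in rgb_image]

def reduce_levels(value, divisor=10):
    """(1.2) Quantize one 8-bit channel value; the divisor of 10 is an assumption."""
    return (value // divisor) * divisor

def bilateral_filter(gray, radius=1, sigma_space=1.0, sigma_range=25.0):
    """(1.3) Smooth with weights that decay with both distance and intensity gap."""
    h, w = len(gray), len(gray[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            num = den = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        diff = gray[yy][xx] - gray[y][x]
                        ws = math.exp(-(dx * dx + dy * dy) / (2 * sigma_space ** 2))
                        wr = math.exp(-(diff * diff) / (2 * sigma_range ** 2))
                        num += ws * wr * gray[yy][xx]
                        den += ws * wr
            out[y][x] = int(round(num / den))
    return out

def equalize(gray):
    """(1.4) Histogram equalization over 256 gray levels."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:  # constant image: nothing to stretch
        return [row[:] for row in gray]
    lut = [max(0, round((c - cdf_min) * 255 / (total - cdf_min))) for c in cdf]
    return [[lut[v] for v in row] for row in gray]
```

Because the range weight collapses across large intensity gaps, a sharp step edge passes through `bilateral_filter` essentially unchanged while small fluctuations on either side are averaged out. A production system would more likely call an image library for these operations; the pure-Python loops here only make the arithmetic visible.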

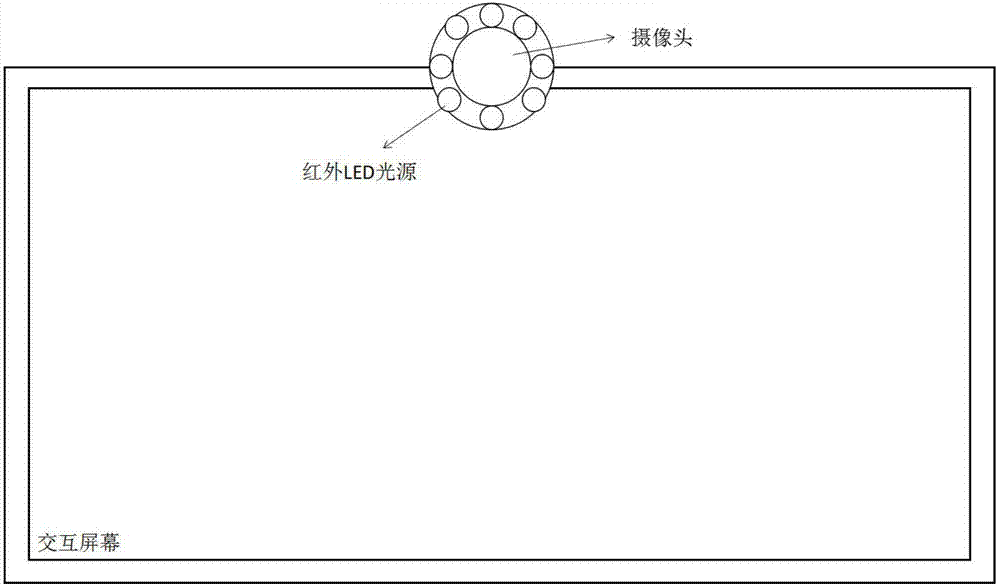

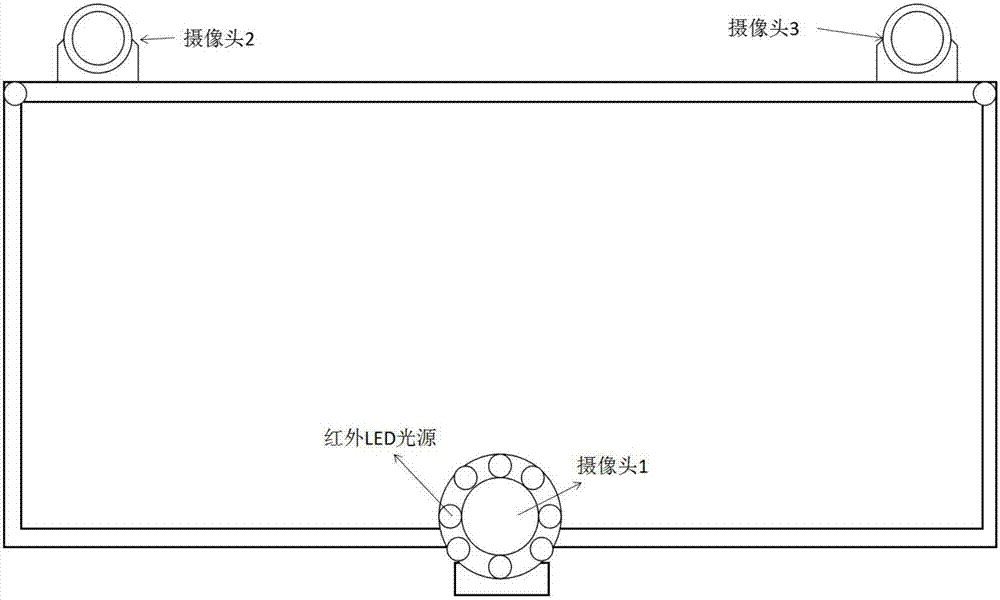

[0060] The face and eye image acquisition unit is a camera installed near the screen the user is watching. It photographs the user's face area and obtains images of the region around both eyes. The user does not need to wear any ...

Embodiment 2

[0098] This embodiment differs from Embodiment 1 in that step (6) comprises the following sub-steps:

[0099] (6.1) Using a machine learning method, detect within the face area the eye region that falls inside the constrained field of view;

[0100] (6.2) Obtain the iris position through the grayscale integral function, then segment the pupil edge within the eye region based on the Snake model, and locate the pupil center;

[0101] (6.3) Based on the relative position of the pupil center and the inner eye corner, construct the pupil center-inner corner eye movement vector (Δx, Δy);

[0102] (6.4) Estimate the gaze by classification: divide the constrained field of view into small intervals and encode them. The system loads a pre-trained artificial neural network, takes the user's current eye movement information and head pose as input, and outputs the user's current gaze hot spot.
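The coarse iris localization in (6.2) and the vector in (6.3) can be illustrated with a short sketch. It assumes the "grayscale integral function" means row and column sums of the eye patch, with the iris taken as the darkest row and column, and it assumes the vector points from the inner eye corner to the pupil center; the source states neither detail. The Snake-model refinement of the pupil edge is not shown, and all function names are hypothetical.

```python
def integral_projections(gray):
    """Return (row_sums, col_sums) for a grayscale eye patch given as a list of rows."""
    row_sums = [sum(row) for row in gray]
    col_sums = [sum(col) for col in zip(*gray)]
    return row_sums, col_sums

def darkest_point(gray):
    """Coarse iris estimate: the (x, y) of the minimum column and row projection."""
    row_sums, col_sums = integral_projections(gray)
    y = min(range(len(row_sums)), key=row_sums.__getitem__)
    x = min(range(len(col_sums)), key=col_sums.__getitem__)
    return x, y

def eye_movement_vector(pupil_center, inner_corner):
    """(dx, dy) from the inner eye corner to the pupil center (assumed sign convention)."""
    return (pupil_center[0] - inner_corner[0], pupil_center[1] - inner_corner[1])

# Toy 5x5 patch: bright background with one dark pixel at x=3, y=1.
patch = [[200] * 5 for _ in range(5)]
patch[1][3] = 20
iris = darkest_point(patch)
vector = eye_movement_vector(iris, inner_corner=(0, 2))
```

In this toy patch the dark pixel lowers the sums of row 1 and column 3, so the estimate lands on it. On real eye images the projection minimum only gives a starting point, which is why the text follows it with contour-based pupil segmentation.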

[0103] If the method of classification is adopted, further, the constrained field of view is even...
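To make the interval encoding used by the classification approach concrete, the sketch below maps a point in the constrained field of view to an integer class label and back. It assumes a uniform rectangular grid with row-major codes, which is only one possible partition; the grid size, the coordinate units, and the function names are hypothetical. The neural network that predicts the label from eye movement and head pose is not shown.

```python
def encode_gaze_cell(x, y, width, height, cols, rows):
    """Map a point inside the field of view to a row-major cell code."""
    if not (0 <= x < width and 0 <= y < height):
        raise ValueError("point lies outside the constrained field of view")
    col = int(x * cols / width)
    row = int(y * rows / height)
    return row * cols + col

def decode_gaze_cell(code, width, height, cols, rows):
    """Return the center (x, y) of the cell identified by code."""
    row, col = divmod(code, cols)
    return ((col + 0.5) * width / cols, (row + 0.5) * height / rows)

# Example: a 1920x1080 field of view split into a 4x3 grid (12 classes).
label = encode_gaze_cell(960, 540, 1920, 1080, cols=4, rows=3)
center = decode_gaze_cell(label, 1920, 1080, cols=4, rows=3)
```

With labels of this kind, a classifier has one output class per cell, and the decoded cell center can serve as the reported gaze hot spot. Finer grids give finer hot spots at the cost of more classes to train.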
