Action Recognition Method Based on Deep Nonnegative Matrix Factorization Under Time-Dependent Constraints

An action recognition method based on deep nonnegative matrix factorization under time-dependent constraints, applied in the field of image processing. It addresses the problem of ignoring the spatial and temporal characteristics of video and improves expressive ability.


Embodiment Construction

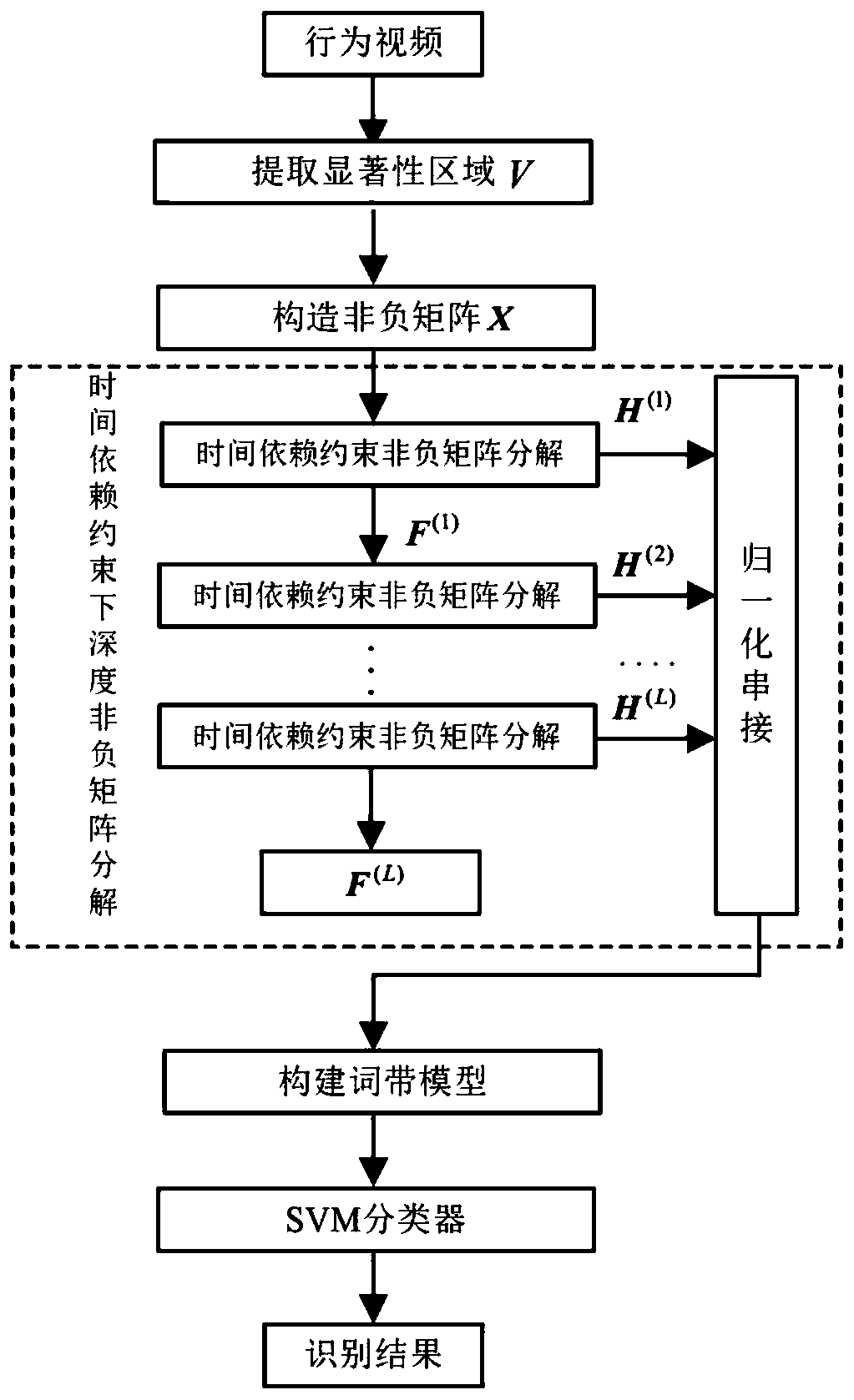

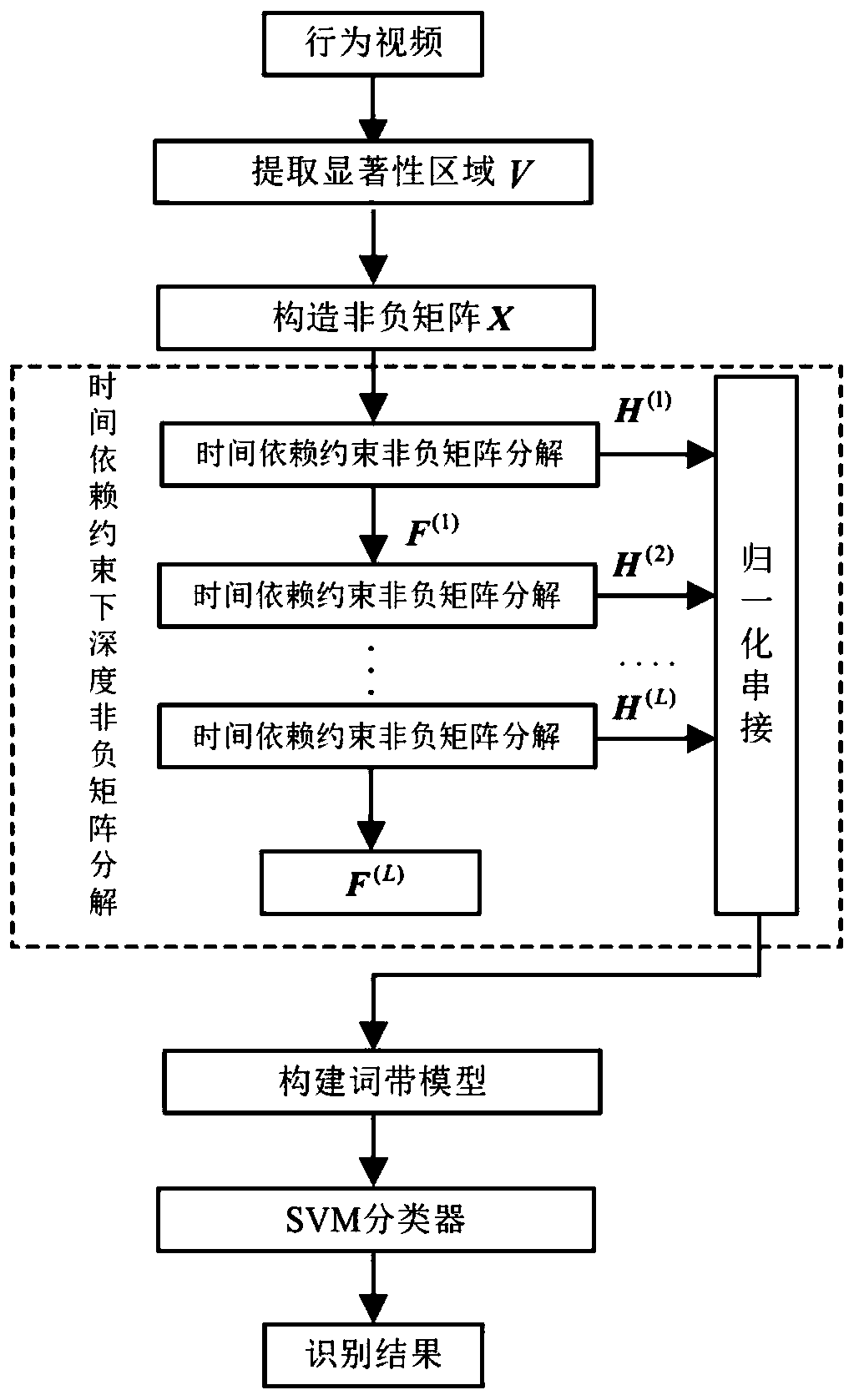

[0026] Referring to Figure 1, the implementation steps of the present invention are as follows:

[0027] Step 1: Extract the motion saliency region $V$ of the original video $O$.

[0028] (1a) Construct a 5×5 Gaussian filter and apply it to the original video $O=\{o_1,o_2,\ldots,o_i,\ldots,o_Z\}$ to obtain the filtered video $B=\{b_1,b_2,\ldots,b_i,\ldots,b_Z\}$, where $b_i$ denotes the $i$-th video frame after filtering, $i=1,2,\ldots,Z$;

[0029] (1b) Use the following formula to calculate the motion saliency region $v_i$ of the $i$-th video frame $o_i$:

[0030] $v_i = |mo_i - b_i|$,

[0031] where $mo_i$ is the pixel geometric mean of the $i$-th video frame $o_i$;

[0032] (1c) Repeat step (1b) for all frames in the video $O$ to obtain the motion saliency region of the entire video, $V=\{v_1,v_2,\ldots,v_i,\ldots,v_Z\}$.
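Steps (1a) to (1c) can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the function names are hypothetical, frames are assumed to be single-channel arrays stacked as `(Z, H, W)`, and the Gaussian standard deviation (`sigma=1.0`), the edge-replicating border handling, and the small offset `eps` that keeps the geometric mean defined for zero-valued pixels are all choices the text does not specify.

```python
import numpy as np


def gaussian_kernel(size=5, sigma=1.0):
    """Return a normalized size x size Gaussian kernel (sigma is an assumed value)."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()


def gaussian_filter_frame(frame, kernel):
    """Filter one 2-D frame; borders are edge-replicated (an assumption)."""
    k = kernel.shape[0]
    pad = k // 2
    padded = np.pad(frame.astype(np.float64), pad, mode="edge")
    h, w = frame.shape
    out = np.zeros((h, w), dtype=np.float64)
    # Accumulate the weighted, shifted copies of the padded frame.
    for dy in range(k):
        for dx in range(k):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def geometric_mean(frame, eps=1e-6):
    """Pixel geometric mean of a frame; eps avoids log(0) and is an assumption."""
    return float(np.exp(np.mean(np.log(frame.astype(np.float64) + eps))))


def motion_saliency(video, size=5, sigma=1.0):
    """Compute v_i = |mo_i - b_i| for every frame of a (Z, H, W) video."""
    kernel = gaussian_kernel(size, sigma)
    saliency = np.empty(video.shape, dtype=np.float64)
    for i, frame in enumerate(video):
        b_i = gaussian_filter_frame(frame, kernel)  # step (1a): filtered frame
        mo_i = geometric_mean(frame)                # scalar mean of frame o_i
        saliency[i] = np.abs(mo_i - b_i)            # step (1b): per-pixel saliency
    return saliency                                 # step (1c): V for the whole video
```

Calling `motion_saliency(video)` on an array of shape `(Z, H, W)` returns an array of the same shape holding $v_1,\ldots,v_Z$. For a frame of constant intensity the filtered frame equals the mean, so the saliency is close to zero everywhere.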

[0033] The saliency extraction method in this step comes from the article "Frequency-tuned Salient Region Detection" published by ...
