Neural machine translation method fusing multilingual encoded information

A technology in information encoding and machine translation, applied in the field of neural machine translation, that addresses problems such as low translation accuracy.



Examples

Specific Embodiment 1

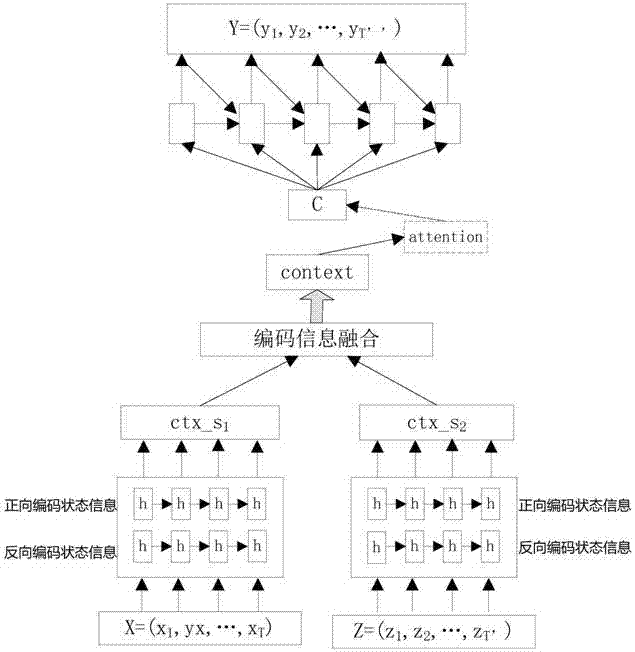

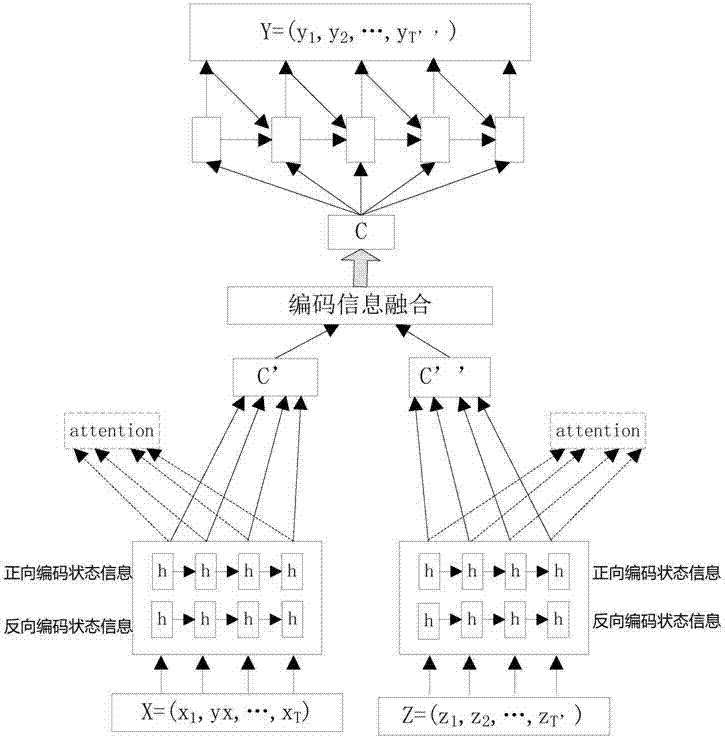

[0049] Specific Embodiment 1: This embodiment is described with reference to Fig. 1 and Fig. 2. The neural machine translation method fusing multilingual encoded information in this embodiment proceeds as follows:

[0050] Step 1. Use the tokenization script tokenizer.perl provided by the statistical machine translation platform Moses to tokenize the trilingual (Chinese and English to Japanese) parallel corpus to be processed. Then use BPE (Byte Pair Encoding; the learn_bpe.py script under the Nematus platform) to represent the tokenized trilingual parallel corpus as a sequence of subword symbols for each language, and use the build_dictionary.py script under the Nematus platform to build the source input language dictionaries dic_s1 and dic_s2 and the target language dictionary dic_t;
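To make the BPE step concrete, here is a minimal pure-Python sketch of the standard BPE merge-learning procedure and of segmenting a word with the learned merges. It is not the learn_bpe.py code: the names `learn_bpe`, `apply_bpe`, and `merge_symbols` are hypothetical, and the `</w>` end-of-word marker is an assumption of this sketch (the actual apply step marks word-internal boundaries with an `@@` separator instead).

```python
from collections import Counter

EOW = "</w>"  # assumed end-of-word marker


def merge_symbols(symbols, pair):
    """Return `symbols` with every adjacent occurrence of `pair` fused into one symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(tokens, num_merges):
    """Learn an ordered list of merge operations from a list of word tokens."""
    # Each distinct word starts as its characters plus the end-of-word marker.
    vocab = Counter({tuple(w) + (EOW,): f for w, f in Counter(tokens).items()})
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair; first seen wins ties
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_symbols(symbols, best)] += freq
        vocab = new_vocab
        merges.append(best)
    return merges


def apply_bpe(word, merges):
    """Segment one word by replaying the learned merges in order."""
    symbols = tuple(word) + (EOW,)
    for pair in merges:
        symbols = merge_symbols(symbols, pair)
    return list(symbols)
```

With `merges = learn_bpe("low low low lower lowest".split(), 3)`, the frequent word `low` collapses to the single symbol `low</w>`, while the rarer `lowest` is split into `low`, `e`, `s`, `t`, `</w>`. Replaying merges in learned order is what makes segmentation deterministic across training and test data.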

[0051] Step 2. Based on the source input language dictionary dic_s1, the sub-character sequence...
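Step 2 is truncated in this text, but Step 3 operates on word vectors $W$ and $W'$, which suggests each subword is mapped to its index in dic_s1 (or dic_s2) and then to an embedding vector. Under that assumption, a minimal NumPy sketch follows; `to_indices`, `embed`, the unknown-symbol fallback, and the embedding matrix are all hypothetical and not taken from the patent.

```python
import numpy as np


def to_indices(subwords, dictionary, unk_id):
    """Map each subword to its dictionary index, falling back to `unk_id` (assumed)."""
    return [dictionary.get(s, unk_id) for s in subwords]


def embed(indices, embedding_matrix):
    """Look up one row of the (vocab_size, d) embedding matrix per index -> shape (T, d)."""
    return embedding_matrix[np.asarray(indices, dtype=np.int64)]
```

For example, with a toy dictionary `{"<UNK>": 0, "low": 1, "er</w>": 2}`, the sequence `["low", "er</w>", "xyz"]` maps to `[1, 2, 0]`, and `embed` returns a `(3, d)` matrix whose rows play the role of $w_1, w_2, w_3$.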

Specific Embodiment 2

[0066] Specific Embodiment 2: This embodiment differs from Specific Embodiment 1 in that, in Step 3, a bidirectional recurrent encoder is built from a recurrent neural network with GRU units. The bidirectional recurrent encoder encodes the word vectors $W=(w_1,w_2,\ldots,w_T)$ and $W'=(w'_1,w'_2,\ldots,w'_{T'})$ to obtain the encoding vector ctx_s1 of $W$ and the encoding vector ctx_s2 of $W'$. The specific process is:

[0067] Step 3.1:

[0068] The bidirectional encoder computes the forward encoding state information of $W=(w_1,w_2,\ldots,w_T)$ by reading the word sequence in forward order.

[0069] The bidirectional encoder computes the reverse encoding state information of $W=(w_1,w_2,\ldots,w_T)$ by reading the word sequence in reverse order.

[0070] The bidirectional encoder computes, for $W'=(w'_1,w'_2,\ldots,w'_{T'})$, the forward encoding state informa...
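A minimal NumPy sketch of a GRU-based bidirectional encoder of the kind described in Step 3.1. It assumes the standard GRU gate equations and assumes the encoding vector at each position is the concatenation of the forward and backward states, as is common in attention-based NMT; the text here does not spell out either detail, nor whether $W$ and $W'$ share encoder parameters, so `GRU`, `bidirectional_encode`, and the random initialization are illustrative only.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GRU:
    """Single-layer GRU with standard update (z), reset (r) and candidate gates."""

    def __init__(self, input_dim, hidden_dim, rng, scale=0.1):
        # Index 0: update gate, 1: reset gate, 2: candidate state.
        self.W = rng.normal(0.0, scale, (3, hidden_dim, input_dim))
        self.U = rng.normal(0.0, scale, (3, hidden_dim, hidden_dim))
        self.b = np.zeros((3, hidden_dim))
        self.hidden_dim = hidden_dim

    def step(self, x, h):
        z = sigmoid(self.W[0] @ x + self.U[0] @ h + self.b[0])
        r = sigmoid(self.W[1] @ x + self.U[1] @ h + self.b[1])
        h_tilde = np.tanh(self.W[2] @ x + self.U[2] @ (r * h) + self.b[2])
        # New state interpolates between the previous state and the candidate.
        return (1.0 - z) * h + z * h_tilde

    def run(self, X):
        """Read the rows of X in order, returning all hidden states, shape (T, H)."""
        h = np.zeros(self.hidden_dim)
        states = []
        for x in X:
            h = self.step(x, h)
            states.append(h)
        return np.stack(states)


def bidirectional_encode(X, fwd, bwd):
    """Encode a (T, d) sequence into (T, 2H) annotations [forward ; backward]."""
    forward_states = fwd.run(X)                # reads w_1 ... w_T
    backward_states = bwd.run(X[::-1])[::-1]   # reads w_T ... w_1, realigned to positions
    return np.concatenate([forward_states, backward_states], axis=1)
```

Applied to each source language, e.g. `ctx_s1 = bidirectional_encode(W, fwd1, bwd1)` and `ctx_s2 = bidirectional_encode(W_prime, fwd2, bwd2)`, this yields one $2H$-dimensional annotation per position. Using separate encoders per language is an assumption of this sketch. Because each state is a convex combination of the previous state and a `tanh` output, every state component stays in $(-1, 1)$.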

Specific Embodiment 3

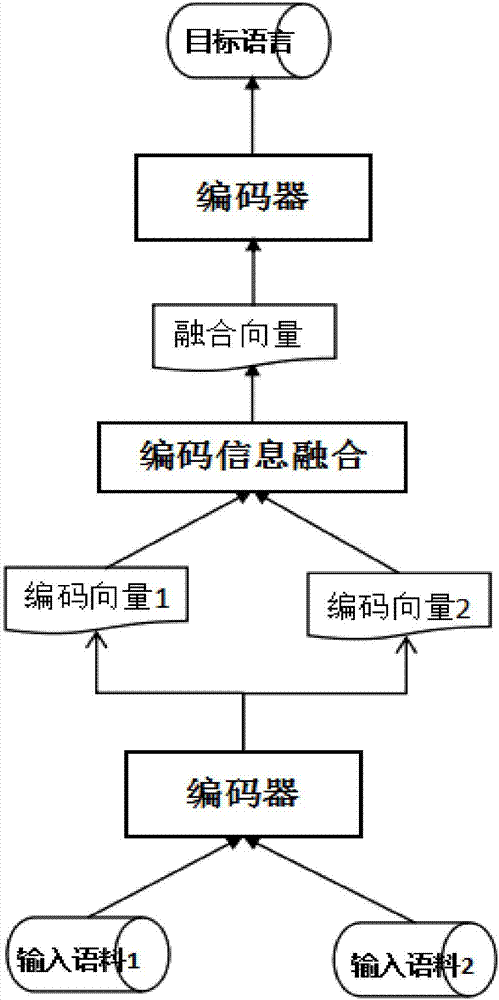

[0075] Specific Embodiment 3: This embodiment is described with reference to Fig. 1 and Fig. 3. The neural machine translation method fusing multilingual encoded information in this embodiment proceeds as follows:

[0076] Step 1): Use the tokenization script tokenizer.perl provided by the statistical machine translation platform Moses to tokenize the trilingual (Chinese and English to Japanese) parallel corpus to be processed. Then use BPE (Byte Pair Encoding; the learn_bpe.py script under the Nematus platform) to represent the tokenized trilingual parallel corpus as a sequence of subword symbols for each language, and use the build_dictionary.py script under the Nematus platform to build the source input language dictionaries dic_s1 and dic_s2 and the target language dictionary dic_t;
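The dictionary-building step can be approximated as follows: count subword frequencies across a tokenized, BPE-segmented corpus and assign integer indices in descending-frequency order after a few reserved symbols. This is a hedged sketch, not the Nematus build_dictionary.py script; the entries in `RESERVED` are placeholders that may not match the script version you use, and `build_dictionary`/`to_json` are hypothetical names. JSON is written with `ensure_ascii=False` so Chinese and Japanese subwords stay readable.

```python
import json
from collections import Counter

RESERVED = ["<EOS>", "<GO>", "<UNK>"]  # assumption: placeholder reserved symbols


def build_dictionary(lines):
    """Map each whitespace-separated subword to an index, most frequent first."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    # Descending frequency; ties broken alphabetically for reproducibility.
    ordered = sorted(counts, key=lambda w: (-counts[w], w))
    vocab = {sym: i for i, sym in enumerate(RESERVED)}
    for w in ordered:
        if w not in vocab:
            vocab[w] = len(vocab)
    return vocab


def to_json(vocab):
    """Serialize the dictionary, keeping non-ASCII subwords unescaped."""
    return json.dumps(vocab, ensure_ascii=False, indent=2)
```

Running `build_dictionary` separately on the two source-side files and on the target-side file would give the three dictionaries dic_s1, dic_s2, and dic_t.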

[0077] Step 2): Based on the source input language dictionary dic_s1, the subc...
