Translation model training method, translation method and system for sign language videos in specific scenes

A translation model and training method technology in the field of video natural language generation that addresses problems such as poor translation accuracy.


Embodiment Construction

[0078] The present invention is further described below with reference to the embodiments and the accompanying drawings.

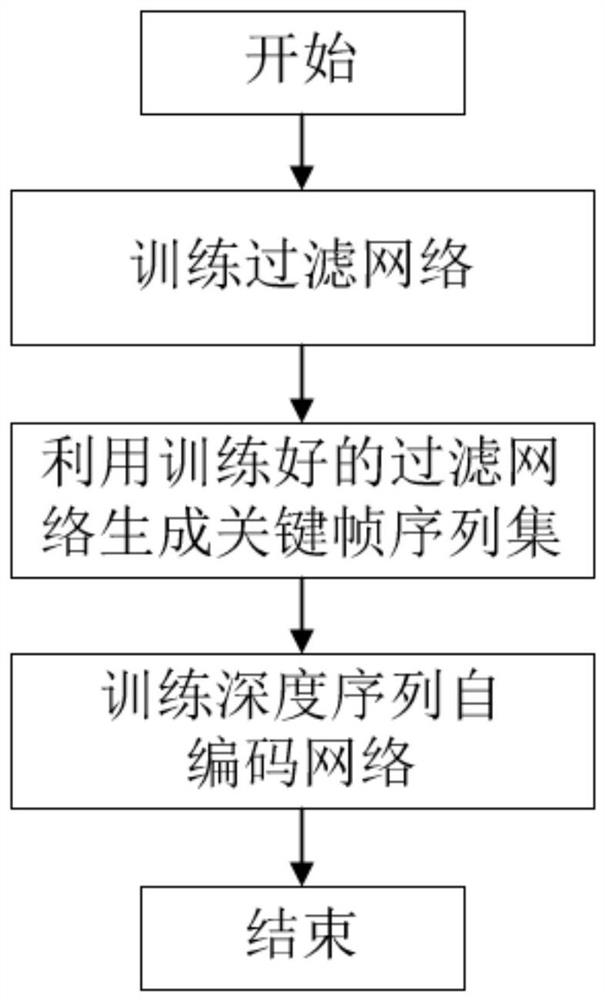

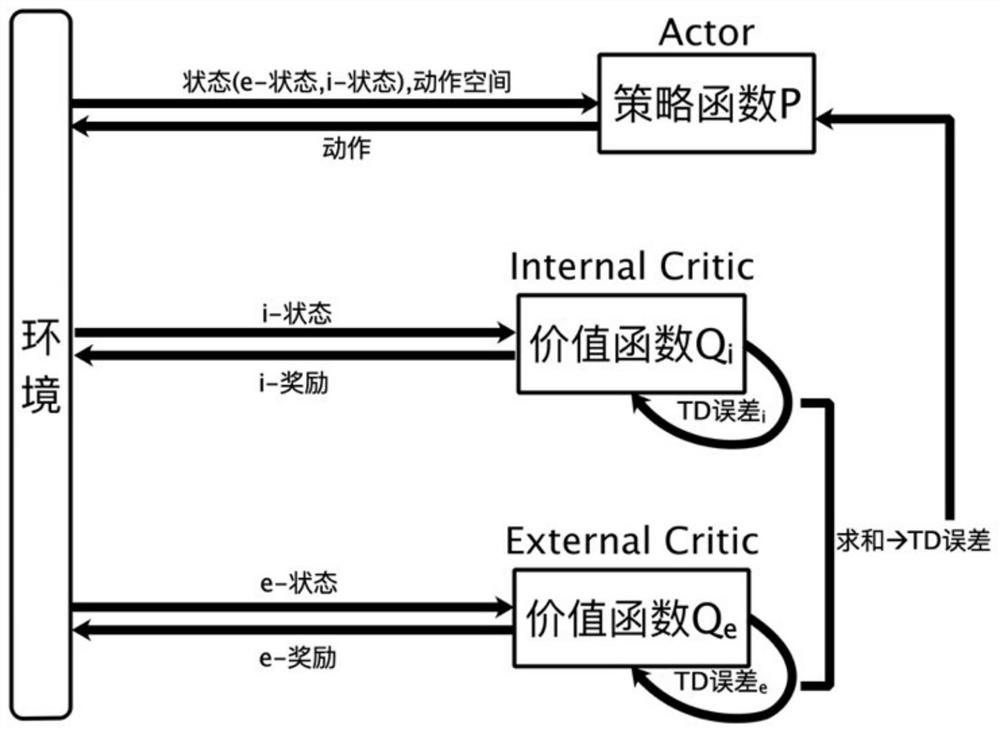

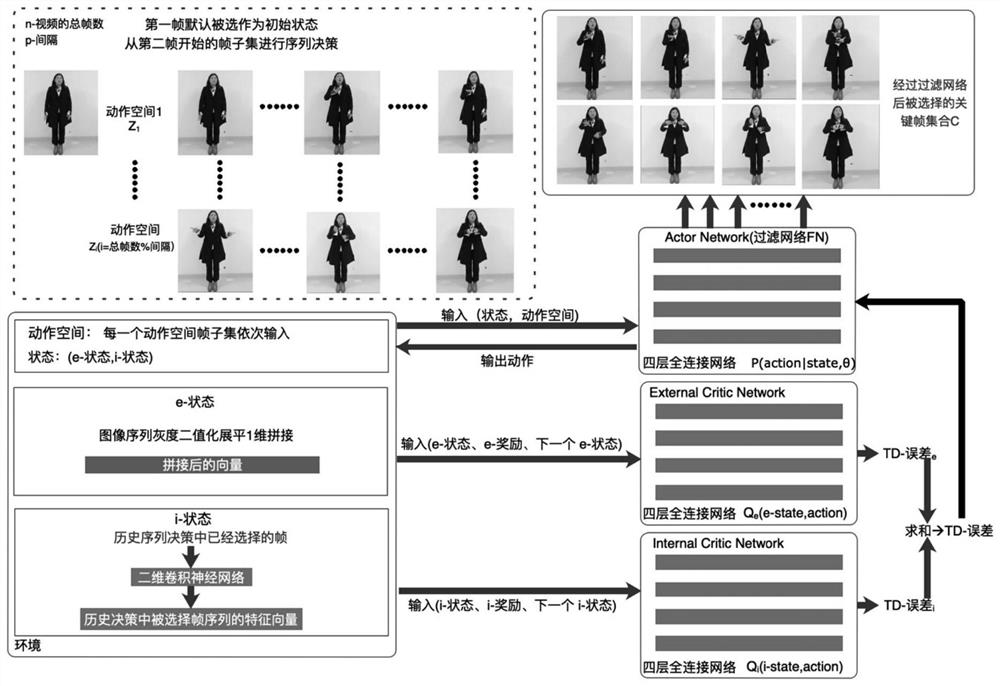

[0079] In the present invention, the sign language translation model comprises a filter network and a deep sequence autoencoder network. The filter network samples frames from the video to filter out the key-frame sequence. The deep sequence autoencoder network extracts features and, through an encoding-decoding process, translates the sign language video and generates its content text.
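The description does not say how the filter network decides which frames to keep. As a rough illustration of what "filtering out the key-frame sequence" means, the sketch below swaps in a simple, non-learned heuristic: a frame is kept only if its mean absolute pixel difference from the last kept frame exceeds a threshold. The functions `mean_abs_diff` and `select_key_frames`, the flattened-grayscale frame layout and the `threshold` parameter are all hypothetical and are not the patent's filter network.

```python
from typing import List, Sequence

# Hypothetical frame layout: one flattened grayscale frame as a sequence of floats.
Frame = Sequence[float]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def select_key_frames(frames: List[Frame], threshold: float) -> List[int]:
    """Return indices of the frames kept as key frames.

    The first frame is always kept. Each later frame is kept only if it
    differs from the most recently kept frame by more than `threshold`,
    so near-duplicate frames (a static hand) are dropped.
    """
    if not frames:
        return []
    kept = [0]
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i], frames[kept[-1]]) > threshold:
            kept.append(i)
    return kept


if __name__ == "__main__":
    video = [
        [0.0, 0.0, 0.0, 0.0],
        [0.0, 0.0, 0.0, 0.1],  # near-duplicate of frame 0
        [1.0, 1.0, 0.0, 0.0],  # hand moved
        [1.0, 1.0, 0.0, 0.0],  # static again
        [0.0, 1.0, 1.0, 1.0],  # new handshape
    ]
    print(select_key_frames(video, threshold=0.2))  # [0, 2, 4]
```

The selected indices would then index into the original video to form the shorter key-frame sequence passed to the encoder. A learned filter network, as the patent describes, would replace the fixed threshold with a trained scoring function.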

[0080] In this embodiment, a separate training data set is constructed for each public place. For example, for the station scene, a sign language data set for the station's barrier-free service windows is constructed with reference to the Chinese sign language data set CSL500. The data set has annotated a large vocabulary,...
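The excerpt says only that scene-specific data sets are built with reference to CSL500. One plausible way to derive a station-window subset is to keep only clips whose sign-level labels (glosses) fall mostly inside a vocabulary for that scene. The sketch below shows that idea. The `Sample` record layout, the `build_scene_dataset` function, the `min_coverage` parameter and the example vocabulary are all hypothetical and do not reflect the actual CSL500 format.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Sample:
    """One labelled sign language clip (hypothetical record layout)."""
    video_id: str
    sentence: str               # target text for translation
    glosses: Tuple[str, ...]    # sign-level vocabulary labels in the clip


def build_scene_dataset(samples: Iterable[Sample], scene_vocab: Set[str],
                        min_coverage: float = 1.0) -> List[Sample]:
    """Keep samples whose glosses lie (mostly) inside the scene vocabulary.

    min_coverage=1.0 keeps only clips made entirely of in-scene signs.
    Lower values tolerate a fraction of out-of-scene glosses. Clips with
    no gloss labels are skipped.
    """
    selected = []
    for s in samples:
        if not s.glosses:
            continue
        in_scene = sum(1 for g in s.glosses if g in scene_vocab)
        if in_scene / len(s.glosses) >= min_coverage:
            selected.append(s)
    return selected


if __name__ == "__main__":
    # Hypothetical vocabulary for a station's barrier-free service window.
    station_vocab = {"ticket", "train", "platform", "refund"}
    clips = [
        Sample("v1", "buy a ticket", ("buy", "ticket")),
        Sample("v2", "which platform is the train on", ("train", "platform")),
    ]
    print([s.video_id for s in build_scene_dataset(clips, station_vocab)])
```

Building one subset per scene (station, hospital, and so on) keeps each model's output vocabulary small. This is one way a scene-specific model could improve accuracy, though the excerpt does not state the authors' selection criterion.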


© 2025 PatSnap. All rights reserved.