Vision-based robot sorting method and system

A vision-based robot sorting method and system in the field of robotics and vision technology, addressing the problem that part-grasping methods cannot recognize and sort parts.

Examples

Embodiment 1

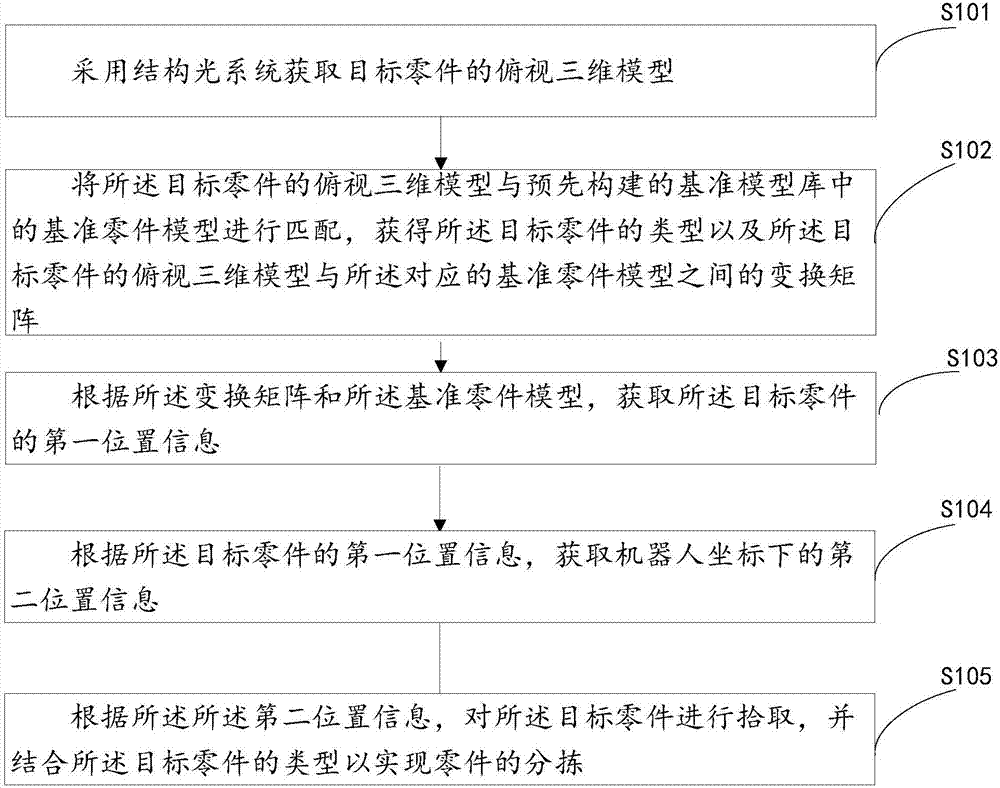

[0062] The present embodiment provides a vision-based robot sorting method, the method comprising:

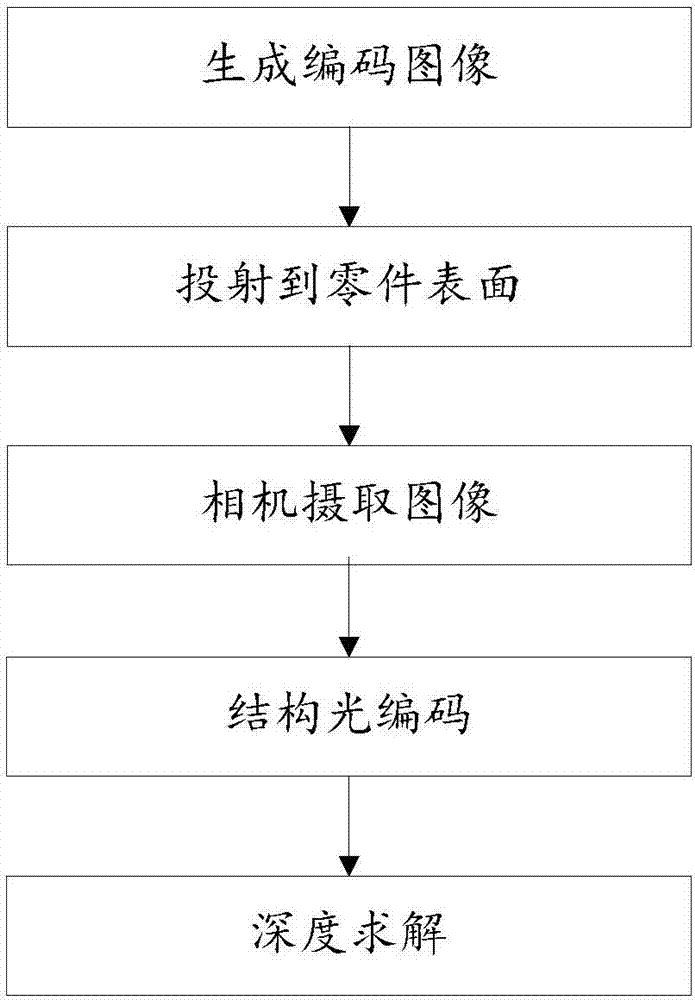

[0063] Step S101: Obtain a top-view 3D model of the target part by using a structured light system;

[0064] Step S102: Match the top-view 3D model of the target part with the reference part models in the pre-built reference model library to obtain the type of the target part and the transformation matrix between the top-view 3D model of the target part and the corresponding reference part model;

[0065] Step S103: Obtain first position information of the target part according to the transformation matrix and the reference part model;

[0066] Step S104: According to the first position information of the target part, obtain second position information in the robot coordinate system;

[0067] Step S105: According to the second position information, pick up the target part and sort it according to its type.
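Steps S103 and S104 can be illustrated with a minimal sketch. It assumes, beyond what the text states, that the transformation matrix from step S102 is a 4x4 homogeneous rigid transform mapping reference-model coordinates into the camera (structured light) frame, that the "first position information" is a grasp point defined on the reference model and expressed in that camera frame, and that the conversion to robot coordinates uses a fixed camera-to-robot matrix from a prior calibration. All names and numeric values below are hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def apply_transform(T: Matrix, p: Sequence[float]) -> List[float]:
    """Apply a 4x4 homogeneous rigid transform to a 3D point."""
    v = (p[0], p[1], p[2], 1.0)
    # Only the first three rows are needed for a rigid transform.
    return [sum(T[r][c] * v[c] for c in range(4)) for r in range(3)]


# Hypothetical result of step S102: reference model -> camera frame
# (90 degree rotation about z plus a translation, in metres).
T_MODEL_TO_CAMERA: Matrix = [
    [0.0, -1.0, 0.0, 0.10],
    [1.0, 0.0, 0.0, 0.20],
    [0.0, 0.0, 1.0, 0.50],
    [0.0, 0.0, 0.0, 1.0],
]

# Assumed calibration result: camera frame -> robot base frame
# (camera looking down, i.e. 180 degree rotation about x plus an offset).
T_CAMERA_TO_ROBOT: Matrix = [
    [1.0, 0.0, 0.0, 0.40],
    [0.0, -1.0, 0.0, 0.00],
    [0.0, 0.0, -1.0, 0.80],
    [0.0, 0.0, 0.0, 1.0],
]

# Hypothetical grasp point stored with the reference part model.
GRASP_POINT_ON_MODEL = (0.02, 0.0, 0.01)

# Step S103: first position information (camera frame).
first_position = apply_transform(T_MODEL_TO_CAMERA, GRASP_POINT_ON_MODEL)

# Step S104: second position information (robot coordinates).
second_position = apply_transform(T_CAMERA_TO_ROBOT, first_position)

print("first position (camera frame):", first_position)
print("second position (robot frame):", second_position)
```

Keeping the two transforms separate means the camera-to-robot matrix only has to be determined once, while the model-to-camera matrix is recomputed for every part.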

[0068] In the above method, since the t...

Embodiment 2

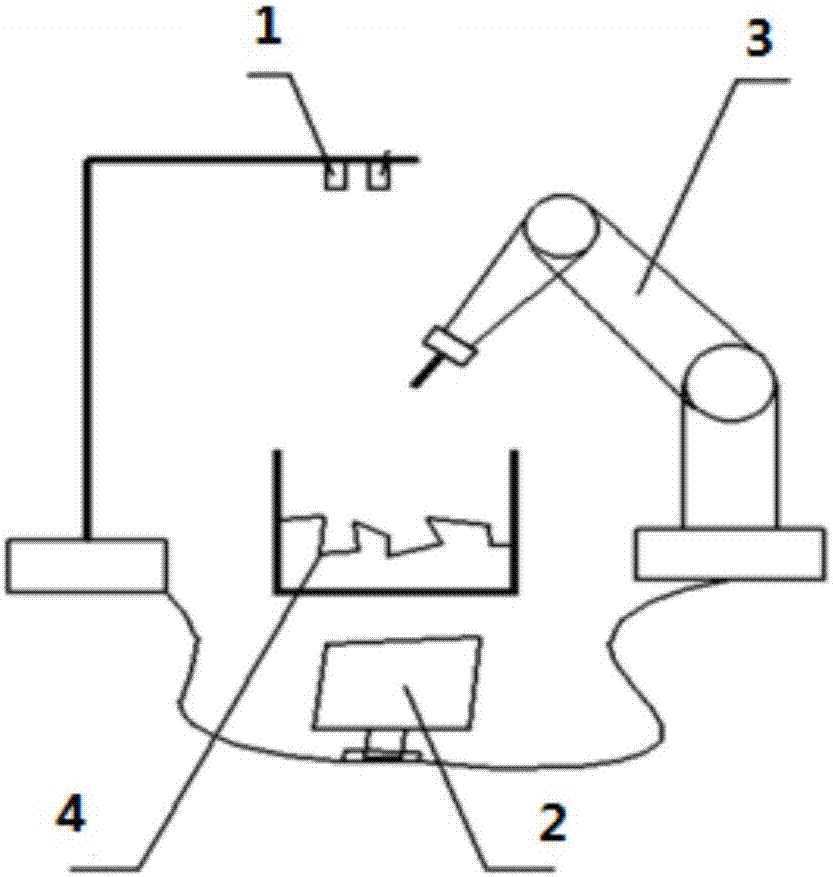

[0096] Based on the same inventive concept as Embodiment 1, Embodiment 2 of the present invention provides a vision-based robot sorting system. Referring to Figure 5, the system includes:

[0097] An acquiring module 201, configured to acquire the top-view three-dimensional model of the target part by using the structured light system;

[0098] A matching module 202, configured to match the top-view three-dimensional model of the target part with the reference part models in the pre-built reference model library, to obtain the type of the target part and the transformation matrix between the top-view three-dimensional model of the target part and the corresponding reference part model;

[0099] A first obtaining module 203, configured to obtain first position information of the target part according to the transformation matrix and the reference part model;

[0100] The second obtaining module 204 is used to obtain the second position information under t...
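The role of the matching module 202 (and of step S102) can be sketched as follows. The text shown here does not specify the matching algorithm, so this example substitutes a deliberately simple stand-in: each reference model is aligned to the target by centroid translation only, and the reference with the lowest symmetric nearest-neighbour RMS residual is selected. A practical system would also estimate rotation, for example with an ICP-style registration. The function names, the library contents, and the point sets are all hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def centroid(points: List[Point]) -> Point:
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def rms_residual(source: List[Point], target: List[Point]) -> float:
    """RMS distance from each source point to its nearest target point."""
    total = 0.0
    for s in source:
        total += min(sum((s[i] - t[i]) ** 2 for i in range(3)) for t in target)
    return math.sqrt(total / len(source))


def match_part(target: List[Point], library: Dict[str, List[Point]]):
    """Return (part type, 4x4 reference-to-target transform, residual)."""
    best = None
    c_tgt = centroid(target)
    for part_type, reference in library.items():
        c_ref = centroid(reference)
        # Translation-only alignment (assumption: no rotation between models).
        shift = tuple(c_tgt[i] - c_ref[i] for i in range(3))
        moved = [(p[0] + shift[0], p[1] + shift[1], p[2] + shift[2])
                 for p in reference]
        # Symmetric residual so a partial overlap does not score as perfect.
        error = max(rms_residual(moved, target), rms_residual(target, moved))
        if best is None or error < best[0]:
            transform = [[1.0, 0.0, 0.0, shift[0]],
                         [0.0, 1.0, 0.0, shift[1]],
                         [0.0, 0.0, 1.0, shift[2]],
                         [0.0, 0.0, 0.0, 1.0]]
            best = (error, part_type, transform)
    return best[1], best[2], best[0]


# Hypothetical reference model library with two part types.
LIBRARY = {
    "bracket": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    "washer": [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)],
}

# Hypothetical scanned part: the bracket shifted by (0.5, -0.25, 0.125).
scanned = [(x + 0.5, y - 0.25, z + 0.125) for x, y, z in LIBRARY["bracket"]]

part_type, transform, residual = match_part(scanned, LIBRARY)
print(part_type, [row[3] for row in transform[:3]], residual)
```

Returning the part type and the transform together mirrors the text, where a single matching operation yields both the classification used for sorting and the matrix used for localization.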
