A Deep Neural Network Method Based on Spatial Fusion Pooling

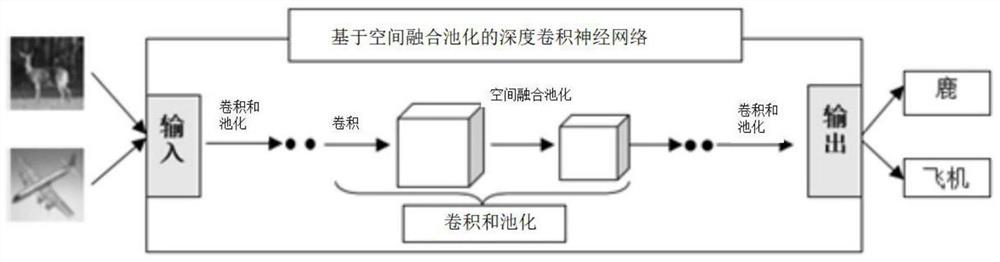

A deep neural network method based on spatial fusion pooling, applied in the fields of neural learning methods, biological neural network models, and neural architectures. It addresses the problem that the pooling layer cannot effectively extract deep-level features or reduce the number of feature channels. Its stated effects are reducing the number of spatial channels, improving classification efficiency and accuracy, and thereby promoting wide application.

Embodiment Construction

[0029] The present invention will be further described below in conjunction with the accompanying drawings.

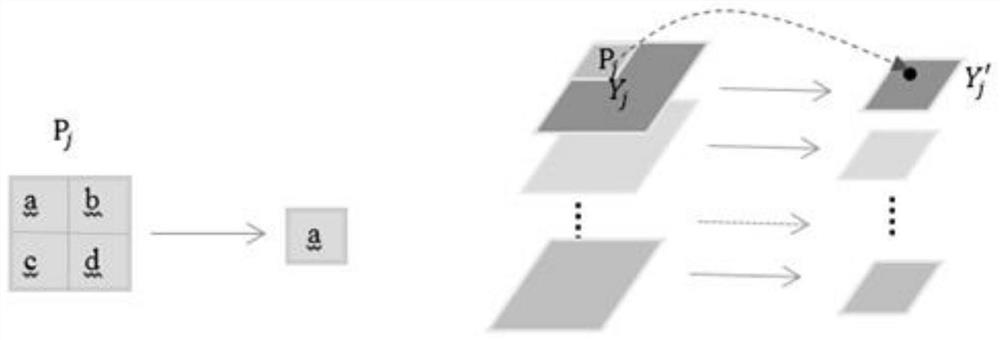

[0030] Figure 1 describes the traditional pooling operation. Traditional pooling operates on a single feature map: a value within a neighborhood $P_j$ of that feature map, such as $a$, is usually selected to replace the entire neighborhood $(a, b, c, d)$ as the pooling output. Its main function is to downsample within each channel, reducing the spatial dimensions and the computational complexity. However, because it ignores the information between channels, the extracted features have weak representational power, and deep-level features cannot be extracted.
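
The traditional per-channel pooling described in [0030] can be sketched in plain Python as follows. This is a minimal sketch assuming a 2×2 window, stride 2, and the maximum as the selection rule (the text only says that "a certain value" is chosen); the helper names `max_pool_2x2` and `pool_per_channel` are hypothetical. Each channel is pooled in isolation, so the spatial size halves while the channel count stays the same.

```python
from typing import List

FeatureMap = List[List[float]]


def max_pool_2x2(fmap: FeatureMap) -> FeatureMap:
    """Traditional 2x2 max pooling with stride 2 on ONE feature map.

    Each non-overlapping 2x2 neighborhood (a, b, c, d) is replaced by a
    single value, here its maximum (an assumed selection rule).
    Odd trailing rows/columns are dropped.
    """
    rows, cols = len(fmap), len(fmap[0])
    out: FeatureMap = []
    for i in range(0, rows - 1, 2):
        out_row = []
        for j in range(0, cols - 1, 2):
            neighborhood = (fmap[i][j], fmap[i][j + 1],
                            fmap[i + 1][j], fmap[i + 1][j + 1])
            out_row.append(max(neighborhood))
        out.append(out_row)
    return out


def pool_per_channel(channels: List[FeatureMap]) -> List[FeatureMap]:
    """Pool every channel independently; no cross-channel information is used."""
    return [max_pool_2x2(fm) for fm in channels]
```

For the 4×4 map `[[1, 2, 5, 6], [3, 4, 7, 8], [9, 1, 2, 0], [1, 1, 3, 4]]`, `max_pool_2x2` returns `[[4, 8], [9, 4]]`, and `pool_per_channel` on three channels still returns three channels.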

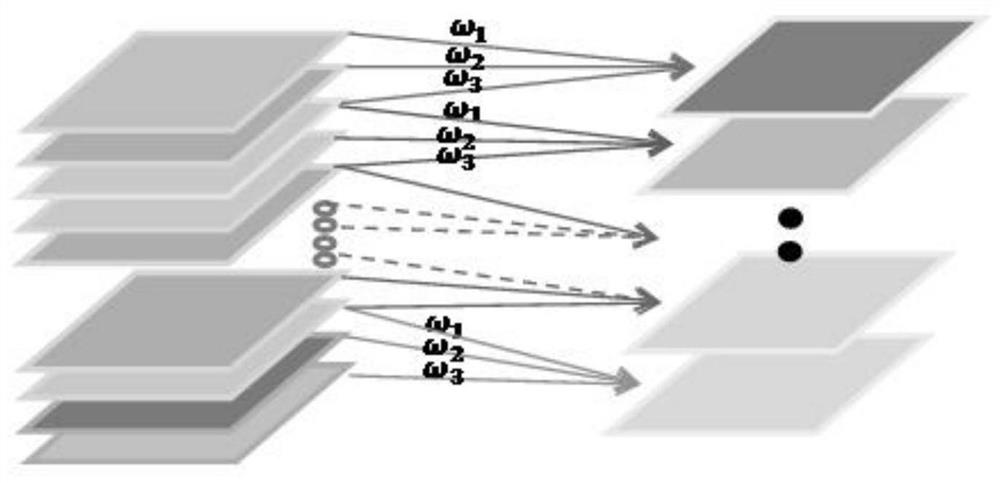

[0031] Figure 2 describes the spatial fusion pooling operation proposed in this patent, which makes full use of the information both between channels and within each channel, realizes the spatial fusion of information, and then extract...
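
Paragraph [0031] is truncated, so the patent's exact spatial fusion pooling operation is not recoverable here. As a purely hypothetical illustration of pooling that draws on information both between and within channels, the sketch below pools over blocks spanning `group` adjacent channels and a `window × window` spatial neighborhood. The function name, the grouping of consecutive channels, and the default `max` reducer are all assumptions, not the patent's method. Under these assumptions the output has `len(channels) // group` channels and each spatial side shrinks by `window`, which is one way a pooling step can reduce both channel count and spatial size.

```python
from typing import Callable, List, Sequence

FeatureMap = List[List[float]]


def fused_channel_spatial_pool(
    channels: List[FeatureMap],
    group: int = 2,
    window: int = 2,
    reduce: Callable[[Sequence[float]], float] = max,
) -> List[FeatureMap]:
    """HYPOTHETICAL joint pooling over (group x window x window) blocks.

    Not the patent's method: a generic stand-in in which each pooling
    window covers several consecutive channels as well as a spatial
    neighborhood. Odd trailing rows/columns are dropped.
    """
    if len(channels) % group != 0:
        raise ValueError("channel count must be divisible by group")
    rows, cols = len(channels[0]), len(channels[0][0])
    out: List[FeatureMap] = []
    for c0 in range(0, len(channels), group):
        block_channels = channels[c0:c0 + group]
        fused: FeatureMap = []
        for i in range(0, rows - window + 1, window):
            row = []
            for j in range(0, cols - window + 1, window):
                # Gather the same spatial neighborhood from every channel in the group.
                values = [fm[i + di][j + dj]
                          for fm in block_channels
                          for di in range(window)
                          for dj in range(window)]
                row.append(reduce(values))
            fused.append(row)
        out.append(fused)
    return out
```

With two channels `[[1, 2], [3, 4]]` and `[[0, 9], [1, 1]]`, the default call returns `[[[9]]]`: a single output channel drawn from both inputs. Passing `reduce=lambda v: sum(v) / len(v)` averages the eight values instead and gives `[[[2.625]]]`.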

