Pedestrian re-identification method and device based on global features and coarse granularity local features

A technique combining local features and global features, applied in the fields of image processing and identity recognition, that achieves pedestrian identity recognition with low computational complexity and improved efficiency

Examples

Embodiment 1

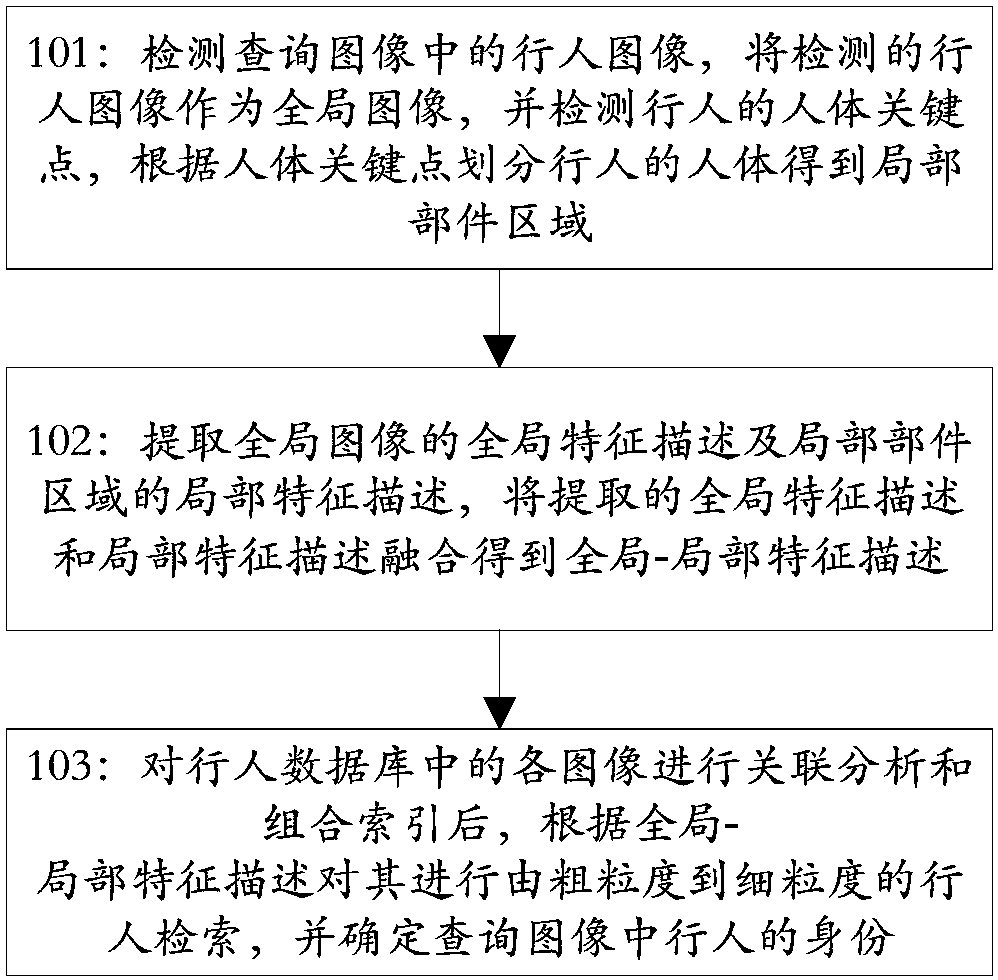

[0052] According to an embodiment of the present invention, a pedestrian re-identification method based on global features and coarse-grained local features is provided. As shown in Figure 1, the method includes:

[0053] Step 101: Detect the pedestrian in the query image and use the detected pedestrian image as the global image; detect the key points of the pedestrian's body, and divide the body according to those key points to obtain the local component area;
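
The key-point-based division in Step 101 can be illustrated with a minimal sketch. The source does not say which key points are detected or how many parts are formed, so the key point names, the three-part grouping in `PART_KEYPOINTS`, and the `margin` parameter below are all hypothetical choices made for illustration only.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]          # (x, y) in pixel coordinates
Box = Tuple[int, int, int, int]      # (left, top, right, bottom), right/bottom exclusive

# Hypothetical grouping of key points into coarse body parts.
# The source does not specify the key points or the number of parts.
PART_KEYPOINTS: Dict[str, List[str]] = {
    "head": ["nose", "left_shoulder", "right_shoulder"],
    "torso": ["left_shoulder", "right_shoulder", "left_hip", "right_hip"],
    "legs": ["left_hip", "right_hip", "left_ankle", "right_ankle"],
}


def part_boxes(
    keypoints: Dict[str, Point],
    image_size: Tuple[int, int],
    margin: float = 0.1,
) -> Dict[str, Box]:
    """Return one bounding box per coarse part, clipped to the image."""
    width, height = image_size
    boxes: Dict[str, Box] = {}
    for part, names in PART_KEYPOINTS.items():
        # Use only the key points that the detector actually returned.
        pts = [keypoints[n] for n in names if n in keypoints]
        if len(pts) < 2:
            continue  # too few points to span a region
        xs = [p[0] for p in pts]
        ys = [p[1] for p in pts]
        # Pad the tight box by a fraction of its own extent.
        pad_x = margin * (max(xs) - min(xs))
        pad_y = margin * (max(ys) - min(ys))
        left = max(0, int(min(xs) - pad_x))
        top = max(0, int(min(ys) - pad_y))
        right = min(width, int(max(xs) + pad_x) + 1)
        bottom = min(height, int(max(ys) + pad_y) + 1)
        boxes[part] = (left, top, right, bottom)
    return boxes
```

Each returned box could then be cropped from the global image and passed to the feature extractor as one local component area. Parts whose key points were not detected are simply skipped rather than guessed.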

[0054] Step 102: Extract the global feature description of the global image and the local feature description of the local component area, and fuse the extracted global and local feature descriptions to obtain a global-local feature description;
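
One simple way to picture the fusion in Step 102 is a weighted concatenation of normalized feature vectors. The source does not state the fusion rule, so this is a sketch under assumptions: the function names, the L2 normalization, and the `global_weight` parameter are hypothetical and are not taken from the patent.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length; an all-zero vector stays all-zero."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0.0:
        return [0.0 for _ in vec]
    return [v / norm for v in vec]


def fuse_features(
    global_feat: Sequence[float],
    local_feats: Sequence[Sequence[float]],
    global_weight: float = 0.5,
) -> List[float]:
    """Concatenate weighted, normalized global and local descriptors.

    Assumed fusion rule (not from the source): the global descriptor gets
    `global_weight`, and the remaining weight is split evenly across parts.
    """
    fused = [global_weight * v for v in l2_normalize(global_feat)]
    if local_feats:
        local_weight = (1.0 - global_weight) / len(local_feats)
        for feat in local_feats:
            fused.extend(local_weight * v for v in l2_normalize(feat))
    # Normalize again so fused descriptors are comparable by distance.
    return l2_normalize(fused)
```

Normalizing each descriptor before concatenation keeps a high-magnitude part feature from dominating the fused vector; other fusion rules (for example, learned weights) would fit the same interface.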

[0055] Step 103: After performing association analysis and combined indexing on each image in the pedestrian database, perform pedestrian retrieval from coarse-grained to fine-grained according to the ...
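
The rest of Step 103 is truncated in the source, so the following is only a generic illustration of what coarse-to-fine retrieval can look like, not the patented procedure. It assumes each gallery entry stores a cheap coarse descriptor and a more detailed fine descriptor; the function names, the Euclidean distance, and the `shortlist_size` and `top_k` parameters are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Features = Tuple[Sequence[float], Sequence[float]]  # (coarse, fine)


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def coarse_to_fine_search(
    query_coarse: Sequence[float],
    query_fine: Sequence[float],
    gallery: Dict[str, Features],
    shortlist_size: int = 10,
    top_k: int = 5,
) -> List[Tuple[str, float]]:
    """Shortlist by coarse distance, then re-rank the shortlist by fine distance."""
    # Coarse stage: rank every gallery entry with the cheap descriptor.
    coarse_ranked = sorted(
        gallery, key=lambda pid: euclidean(query_coarse, gallery[pid][0])
    )
    shortlist = coarse_ranked[:shortlist_size]
    # Fine stage: only the shortlist is compared with the detailed descriptor.
    scored = [(pid, euclidean(query_fine, gallery[pid][1])) for pid in shortlist]
    scored.sort(key=lambda item: item[1])
    return scored[:top_k]
```

The point of the two stages is cost: the detailed comparison runs on at most `shortlist_size` candidates instead of the whole database, at the risk of dropping a true match that the coarse stage ranks poorly.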

Embodiment 2

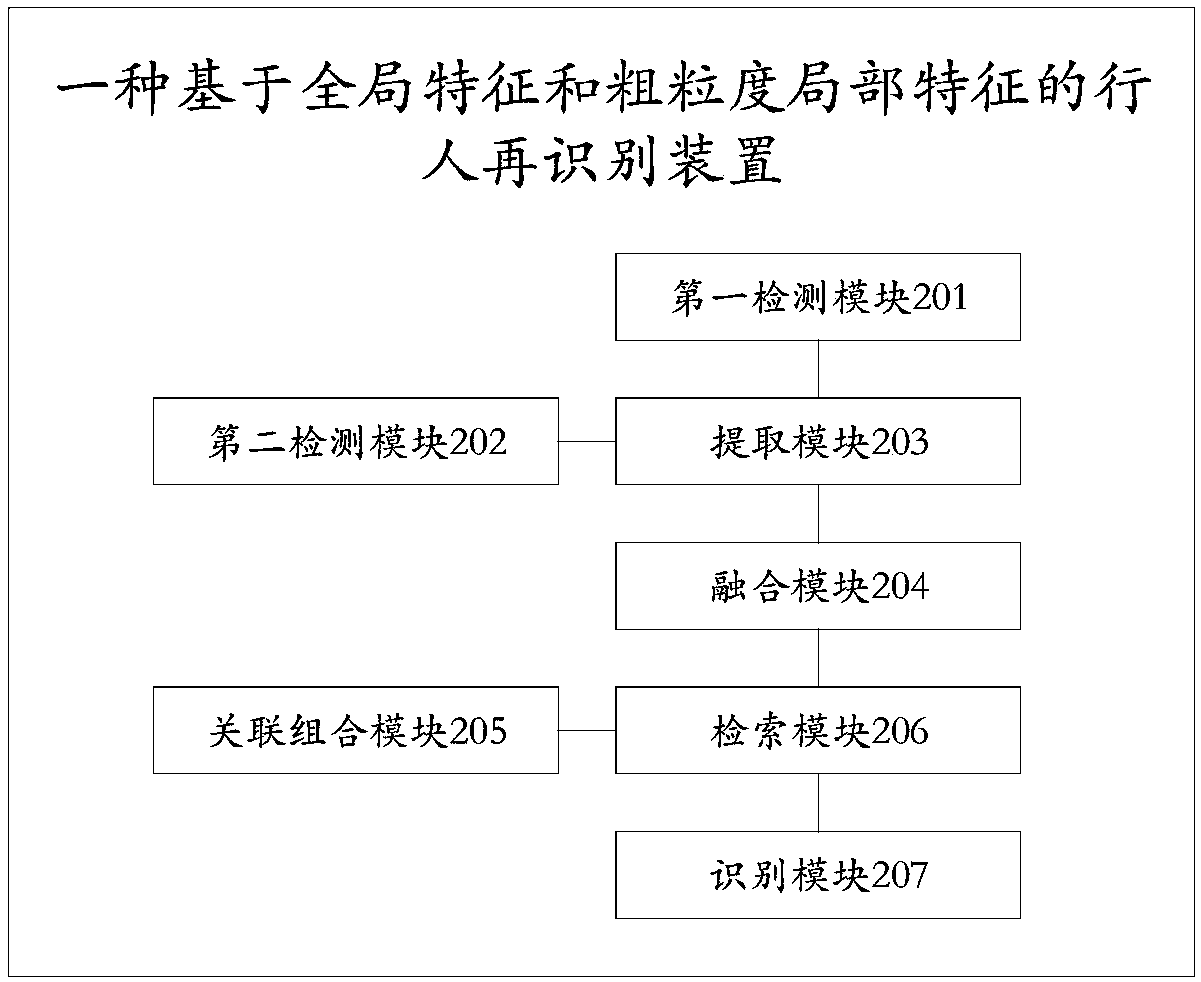

[0084] According to an embodiment of the present invention, a pedestrian re-identification device based on global features and coarse-grained local features is provided. As shown in Figure 2, the device includes:

[0085] A first detection module 201, configured to detect the pedestrian image in the query image and use the detected pedestrian image as the global image;

[0086] A second detection module 202, configured to detect the key points of the pedestrian's body in the query image, and divide the body according to those key points to obtain the local component area;

[0087] An extraction module 203, configured to extract the global feature description of the global image obtained by the first detection module 201, and the local feature description of the local component area obtained by the second detection module 202;

[0088] The fusion module 204 is used to fuse the global feature description and the local feature description extracted b...
