Mobile-terminal AR method based on monocular VIO (visual-inertial odometry)

A monocular technique for mobile terminals, applied in the field of AR. It addresses three problems: results that depend on the environment, poor results, and the difficulty of accurately estimating the camera pose. The stated effects are good results, stable results, and resistance to deviation.



Detailed Description of the Embodiments

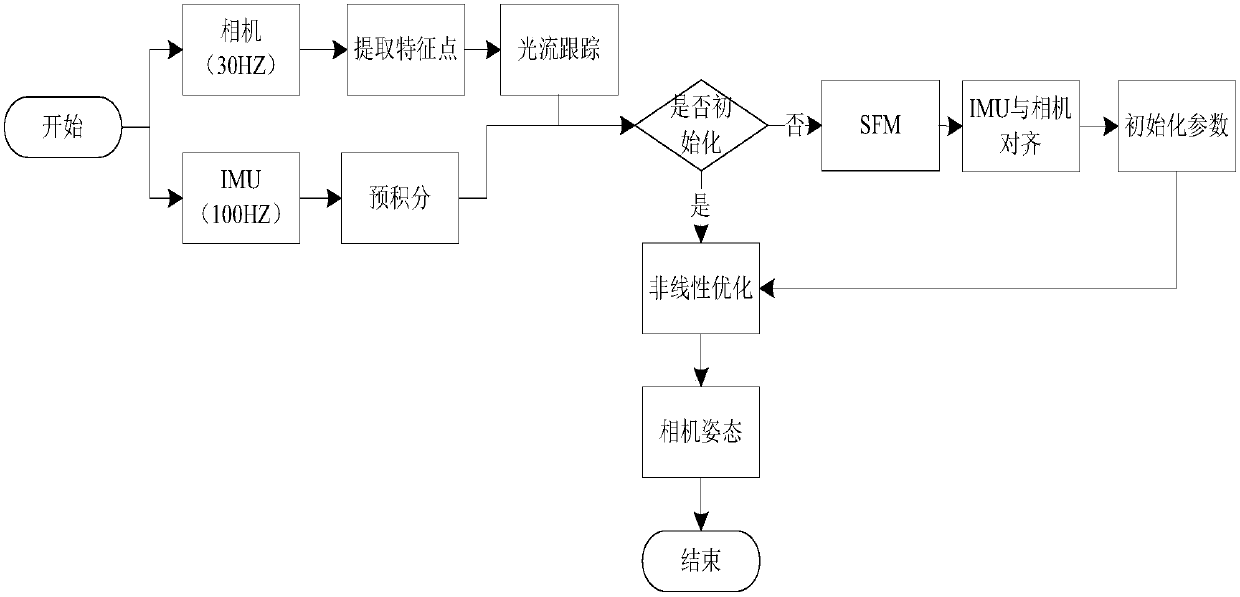

[0029] As shown in Figure 1, the present invention provides a mobile-terminal AR method based on monocular VIO, comprising the following steps:

[0030] Step 1: a camera and an IMU perform image acquisition and inertial data acquisition, respectively; the acquisition frequency of the IMU is higher than that of the camera.
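As a minimal sketch of how the two streams from Step 1 might be aligned, the following groups IMU samples by the camera frame interval they fall in. The 10 Hz camera and 100 Hz IMU rates, the integer millisecond timestamps, and the function name `group_imu_by_frame` are illustrative assumptions, not values taken from the patent.

```python
from bisect import bisect_right

def group_imu_by_frame(frame_ts, imu_ts):
    """Group IMU timestamps into the intervals (t_k, t_{k+1}] between frames.

    frame_ts and imu_ts are sorted integer timestamps (milliseconds, assumed).
    Returns one list of IMU timestamps per pair of consecutive frames.
    """
    groups = []
    for t0, t1 in zip(frame_ts, frame_ts[1:]):
        lo = bisect_right(imu_ts, t0)  # first sample strictly after t0
        hi = bisect_right(imu_ts, t1)  # one past the last sample <= t1
        groups.append(imu_ts[lo:hi])
    return groups

# Hypothetical rates: camera at 10 Hz, IMU at 100 Hz.
frame_ts = [0, 100, 200]
imu_ts = list(range(0, 201, 10))
groups = group_imu_by_frame(frame_ts, imu_ts)
```

Because the IMU runs faster than the camera, every frame pair receives several inertial samples; here each of the two intervals holds ten.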

[0031] Step 2: extract feature points from each image frame acquired by the camera, track each feature point with the optical flow method, and set each successfully tracked frame as a key frame.
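The text names only "the optical flow method" without specifying a variant. As an illustration, the sketch below assumes a single-window, single-iteration Lucas-Kanade step, applied to a synthetic Gaussian blob rather than a real camera image. The helper names `make_blob` and `lk_step`, the window size, and the test shift are all hypothetical.

```python
import math

def make_blob(size, cx, cy, sigma=3.0):
    """Synthetic grayscale image: a Gaussian blob centred at (cx, cy)."""
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(size)] for y in range(size)]

def lk_step(img0, img1, px, py, half=5):
    """One Lucas-Kanade step for the patch centred at pixel (px, py).

    Solves the 2x2 normal equations G d = b, built from central-difference
    spatial gradients of img0 and the temporal difference img1 - img0.
    Returns (dx, dy), or None if the patch has too little texture.
    """
    gxx = gxy = gyy = bx = by = 0.0
    for y in range(py - half, py + half + 1):
        for x in range(px - half, px + half + 1):
            ix = (img0[y][x + 1] - img0[y][x - 1]) / 2.0
            iy = (img0[y + 1][x] - img0[y - 1][x]) / 2.0
            it = img1[y][x] - img0[y][x]
            gxx += ix * ix
            gxy += ix * iy
            gyy += iy * iy
            bx -= ix * it
            by -= iy * it
    det = gxx * gyy - gxy * gxy
    if abs(det) < 1e-12:
        return None  # untrackable patch: gradient matrix is singular
    dx = (gyy * bx - gxy * by) / det
    dy = (gxx * by - gxy * bx) / det
    return dx, dy

# The feature sits at (10, 10) in frame 0 and moves by (+0.3, -0.2) pixels.
img0 = make_blob(21, 10.0, 10.0)
img1 = make_blob(21, 10.3, 9.8)
dx, dy = lk_step(img0, img1, 10, 10)
```

A single step recovers small sub-pixel shifts only approximately. Practical trackers typically iterate this step and run it over an image pyramid to handle larger motion.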

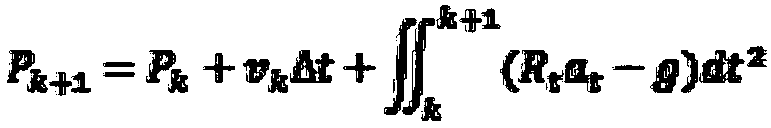

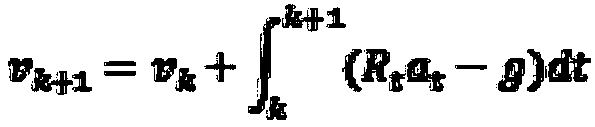

[0032] Step 3: because the sampling frequency of the IMU is much higher than the rate at which the camera acquires images, multiple sets of IMU data are usually obtained between two consecutive image frames. These sets of IMU data are therefore pre-integrated to calculate the IMU position and velocity corresponding to the two image frames:

[0033]

[0034]

[0035] In the formula, P_k and P_{k+1} indicate the posit...
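The formulas in [0033] and [0034] are not reproduced in this text, so the following is only a sketch of the general idea in Step 3, not the patent's equations. It propagates position and velocity across the IMU samples between two frames using the standard constant-acceleration update. It assumes each acceleration is already expressed in the world frame with gravity and bias removed, and it omits the gyroscope and rotation entirely. The name `propagate` and the sample values are hypothetical.

```python
def propagate(p, v, imu_samples):
    """Integrate IMU samples between two frames.

    p, v: position and velocity at frame k, as 3-tuples.
    imu_samples: list of (dt, accel) pairs, where accel is a 3-tuple that is
    ASSUMED to be in the world frame with gravity and bias already removed.
    Returns position and velocity at frame k+1.
    """
    for dt, a in imu_samples:
        # Position uses the velocity from before this sample's update.
        p = tuple(pi + vi * dt + 0.5 * ai * dt * dt
                  for pi, vi, ai in zip(p, v, a))
        v = tuple(vi + ai * dt for vi, ai in zip(v, a))
    return p, v

# Ten hypothetical samples at 100 Hz with 1 m/s^2 acceleration along x.
samples = [(0.01, (1.0, 0.0, 0.0))] * 10
p_next, v_next = propagate((0.0, 0.0, 0.0), (0.0, 0.0, 0.0), samples)
```

With constant acceleration over 0.1 s this matches the closed-form result: a position of 0.5 · a · t² = 0.005 m and a velocity of a · t = 0.1 m/s along x.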


© 2025 PatSnap. All rights reserved.