Method for measuring saliency of an image target

A measurement method applied in the field of image target saliency measurement. It addresses problems such as inconsistency with visual saliency and inaccurate saliency values for the salient factors, and achieves the effects of avoiding inaccurate calculation, making the calculation more comprehensive, and acquiring parameters accurately.


Examples

Embodiment 1

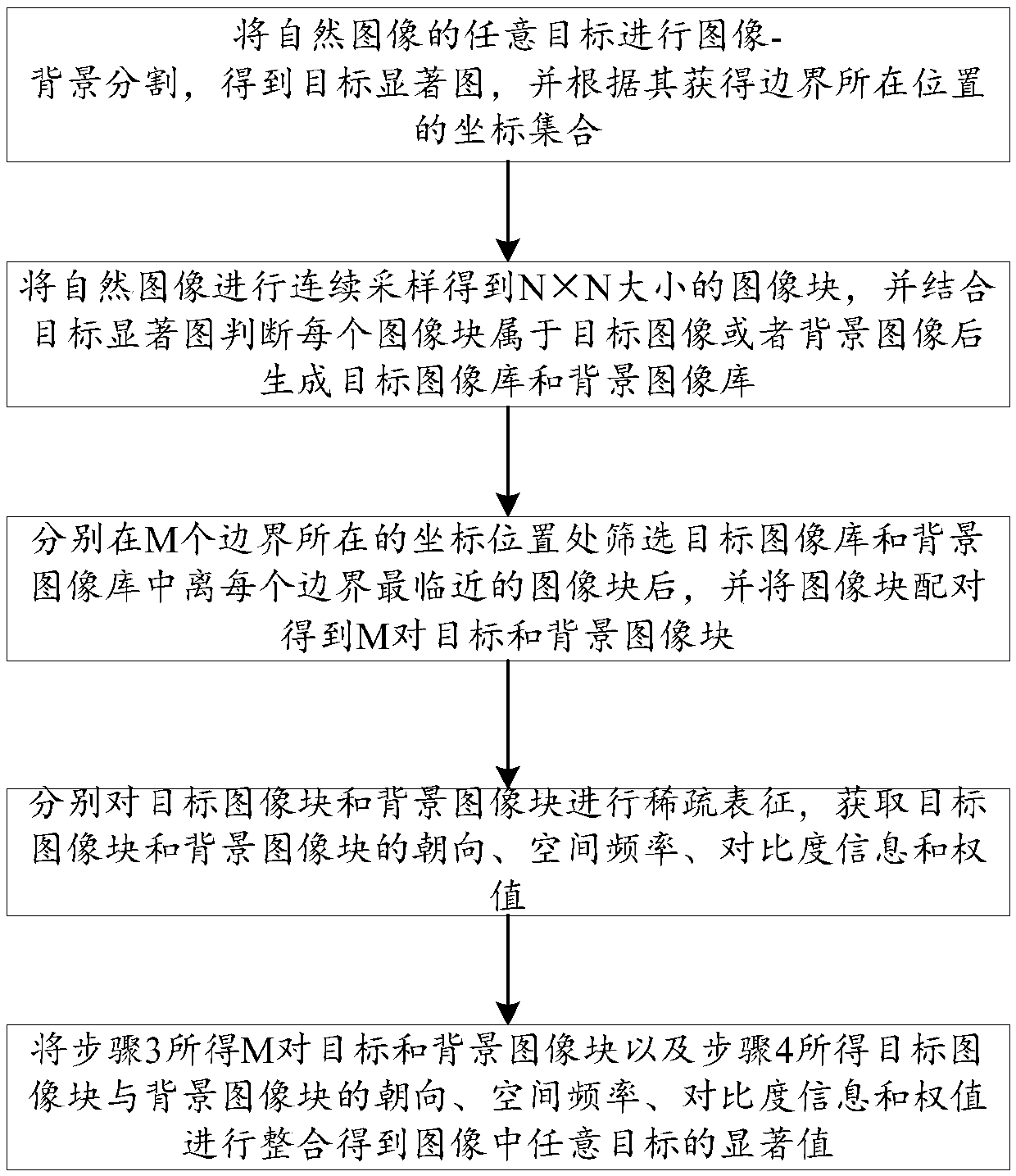

[0032] A method for measuring the saliency of an image target, comprising the steps of:

[0033] Step 1: Perform target/background segmentation on any target in a natural image to obtain the target saliency map, and derive from it the coordinate set of the boundary positions;

[0034] Step 2: Sample the natural image continuously to obtain image blocks of size N×N, use the target saliency map to determine whether each image block belongs to the target image or the background image, and build the target image library and the background image library accordingly;

[0035] Step 3: At each of the M boundary coordinate positions, select from the target image library and the background image library the image blocks closest to the boundary, and pair them to obtain M pairs of target and background image blocks;

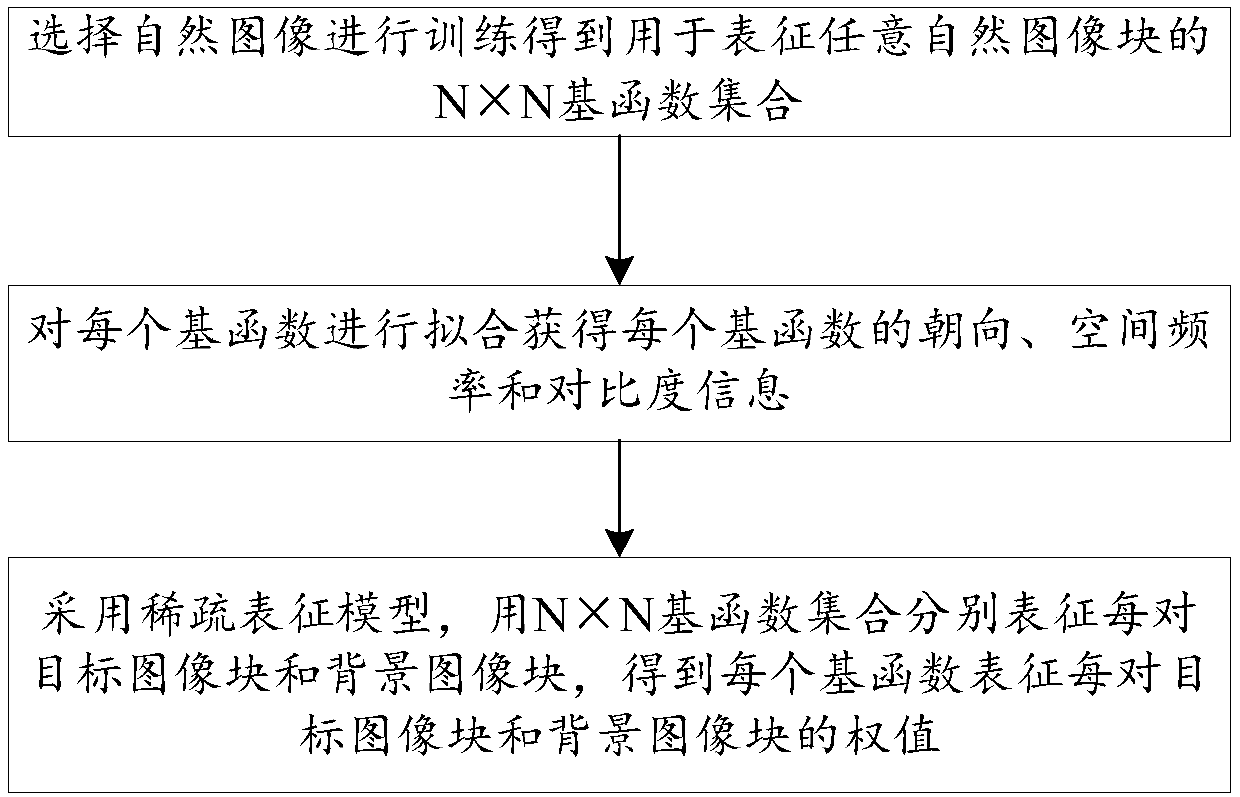

[0036] Step 4: Sparsely represent the target image block and the background image block respectively, and obtain the orientation, ...
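Step 2 above can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the function name `build_block_libraries`, the `stride` parameter, and the rule that a block counts as target only if every mask pixel is 255 (and as background only if every mask pixel is 0, with mixed blocks skipped) are all assumptions, since the text does not state how blocks are sampled or classified.

```python
import numpy as np

def build_block_libraries(image, saliency, n=16, stride=16):
    """Split `image` into n x n blocks and sort them using the saliency map.

    Assumptions (not stated in the source): blocks lie on a regular grid
    with the given stride; a block is 'target' if all mask pixels are 255,
    'background' if all are 0; blocks that mix values are skipped.
    Each library entry is ((row, col) start coordinate, block pixels).
    """
    target, background = [], []
    height, width = saliency.shape
    for y in range(0, height - n + 1, stride):
        for x in range(0, width - n + 1, stride):
            mask = saliency[y:y + n, x:x + n]
            block = image[y:y + n, x:x + n]
            if np.all(mask == 255):
                target.append(((y, x), block))
            elif np.all(mask == 0):
                background.append(((y, x), block))
    return target, background
```

Storing the starting coordinate next to each block keeps the information needed later to find the blocks nearest a boundary position.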

Embodiment 2

[0047] When N is 16,

[0048] (1) Given any natural image, manually perform target/background segmentation on any target to obtain a binarized saliency map in which the target region is marked 255, the background region is marked 0, and boundary pixels take values between the two; from this target saliency map, obtain the coordinate set of the boundary locations;

[0049] (2) Sample the original natural image continuously to obtain 16×16 image blocks; using the target saliency map, judge whether each image block belongs to the target image or the background image, save it into the target image library or the background image library accordingly, and record its starting coordinate position;

[0050] (3) For each boundary, set M coordinate positions, then screen the target image library and the background image library for the image blocks closest to the boundary at those positions and pair them to obtain M pairs of target and background images;

[0051] (4...
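Steps (1) and (3) of this embodiment might look like the sketch below. Reading boundary pixels as those with values strictly between 0 and 255 follows step (1); everything else is an assumption made for illustration: the function names, choosing the M positions by even spacing along the boundary coordinate list, and measuring closeness as Euclidean distance from a boundary point to a block centre.

```python
import numpy as np

def boundary_coordinates(saliency):
    """Return (row, col) coordinates of pixels strictly between 0 and 255."""
    return np.argwhere((saliency > 0) & (saliency < 255))

def pair_blocks(boundary, target_starts, background_starts, m, n=16):
    """Pair the nearest target and background block at m boundary positions.

    Assumptions (not stated in the source): the m positions are evenly
    spaced along the boundary coordinate list, and 'closest' means the
    smallest Euclidean distance from the boundary point to a block centre.
    Returns a list of (target_index, background_index) tuples.
    """
    boundary = np.asarray(boundary, dtype=float)
    # Block centres, computed from the stored starting coordinates.
    t_centers = np.asarray(target_starts, dtype=float) + (n - 1) / 2.0
    b_centers = np.asarray(background_starts, dtype=float) + (n - 1) / 2.0
    idx = np.linspace(0, len(boundary) - 1, m).round().astype(int)
    pairs = []
    for point in boundary[idx]:
        t = int(np.argmin(np.linalg.norm(t_centers - point, axis=1)))
        b = int(np.argmin(np.linalg.norm(b_centers - point, axis=1)))
        pairs.append((t, b))
    return pairs
```

The function returns indices rather than pixel data, so the same pairing can be reused with whatever per-block features step (4) computes.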
