Distributed cache live migration

This cache and cache-element technology, applied in memory systems, memory architecture access and allocation, instruments, and related fields, addresses the high latency of data operations and reduces bandwidth overhead.

Embodiment Construction

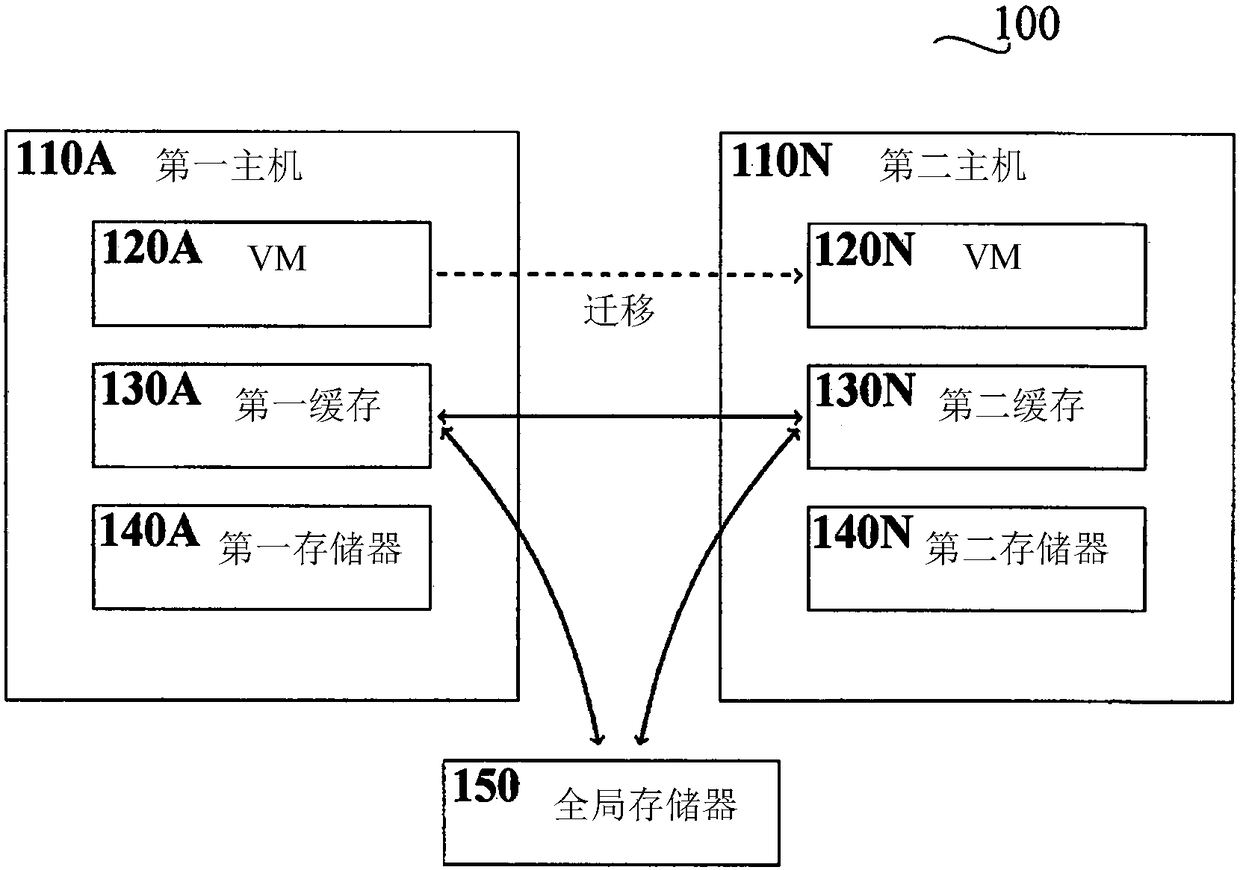

[0046] Figure 1 shows a first host 110A, also referred to as the original host, and a second host 110N, also referred to as the destination host.

[0047] The first host 110A is shown with VM 120A, but it may include one or more other VMs (not depicted). The first host 110A also includes a first cache 130A and a first memory 140A. The first cache 130A may be distributed among multiple hosts, and it may hold data related not only to VM 120A but also to one or more different VMs. Data from the first memory 140A may be cached in the first cache 130A. The first cache 130A may also contain data cached by a different cache and/or data associated with a VM other than VM 120A. Figure 1 also shows global storage 150, which may be local to the first host 110A or remote from it.
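The arrangement in paragraph [0047] can be pictured with a small data model. The following is only a minimal sketch under stated assumptions, not the patented implementation: the class names `Cache` and `Host`, the `(vm_id, block)` key layout, and the read-through lookup order in `read` are all hypothetical and are not taken from the source. It illustrates one way a single cache could hold entries for more than one VM while being filled from the first memory 140A or from global storage 150.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Cache:
    """Hypothetical stand-in for a cache such as 130A.

    Entries are keyed by (vm_id, block) so that data belonging to
    several VMs can live in the same cache (an assumed layout).
    """
    entries: Dict[Tuple[str, int], bytes] = field(default_factory=dict)

    def put(self, vm_id: str, block: int, data: bytes) -> None:
        self.entries[(vm_id, block)] = data

    def get(self, vm_id: str, block: int) -> Optional[bytes]:
        return self.entries.get((vm_id, block))

    def entries_for(self, vm_id: str) -> Dict[int, bytes]:
        """Return only the cached blocks that belong to one VM."""
        return {blk: data for (vm, blk), data in self.entries.items() if vm == vm_id}


@dataclass
class Host:
    """Hypothetical stand-in for a host such as 110A."""
    name: str
    vms: List[str] = field(default_factory=list)
    cache: Cache = field(default_factory=Cache)
    local_memory: Dict[int, bytes] = field(default_factory=dict)  # e.g. 140A

    def read(self, vm_id: str, block: int, global_storage: Dict[int, bytes]) -> bytes:
        """Read-through lookup (assumed order): cache, local memory, global storage."""
        cached = self.cache.get(vm_id, block)
        if cached is not None:
            return cached
        data = self.local_memory.get(block)
        if data is None:
            data = global_storage[block]  # raises KeyError if the block is unknown
        self.cache.put(vm_id, block, data)
        return data


# Usage: one host, two VMs sharing the same cache.
host_a = Host(name="110A", vms=["120A", "other-vm"], local_memory={0: b"local"})
global_storage_150 = {1: b"global"}
host_a.read("120A", 0, global_storage_150)
host_a.read("other-vm", 1, global_storage_150)
```

Tagging each entry with its VM is a design choice made here only for illustration: it makes it straightforward to pick out the entries that belong to a single VM, such as VM 120A, from a cache shared with other VMs.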

[0048] The caching media discussed herein include, but are not limited to, SSD, RAM, and flash memory.

[0049] The global storage 150 may be a cluster of storage arrays, such as...
