Method, device and electronic device for determining rendering objects in a virtual scene

A technology for determining rendered objects in a virtual scene, applied in 3D image processing, instruments, and computing. It addresses problems such as reduced model accuracy, lower image rendering quality, and degraded visual effects in 3D scenes, with the effects of saving resource overhead and reducing the amount of calculation.


Detailed Description of the Embodiments

[0074] The technical solutions in the embodiments of the present invention are described below with reference to the accompanying drawings.

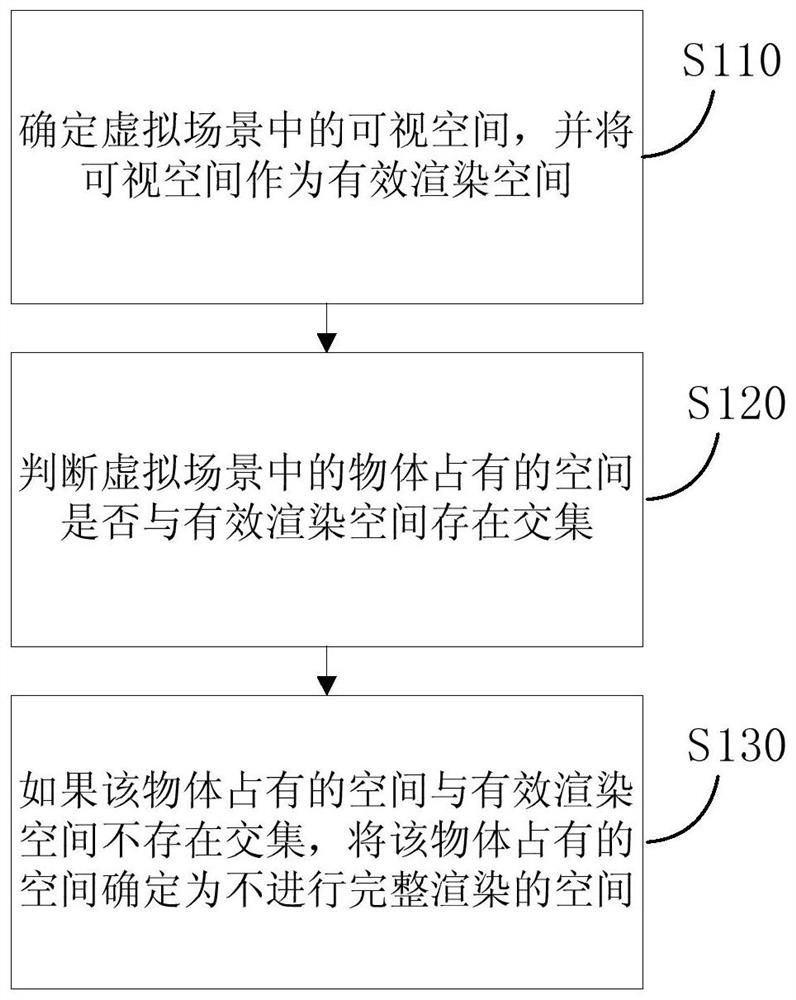

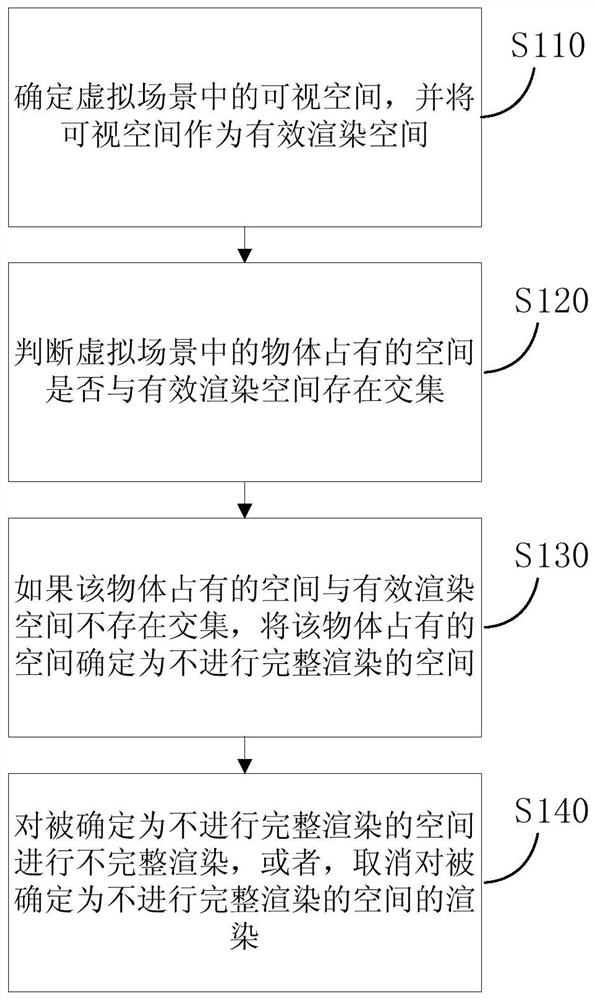

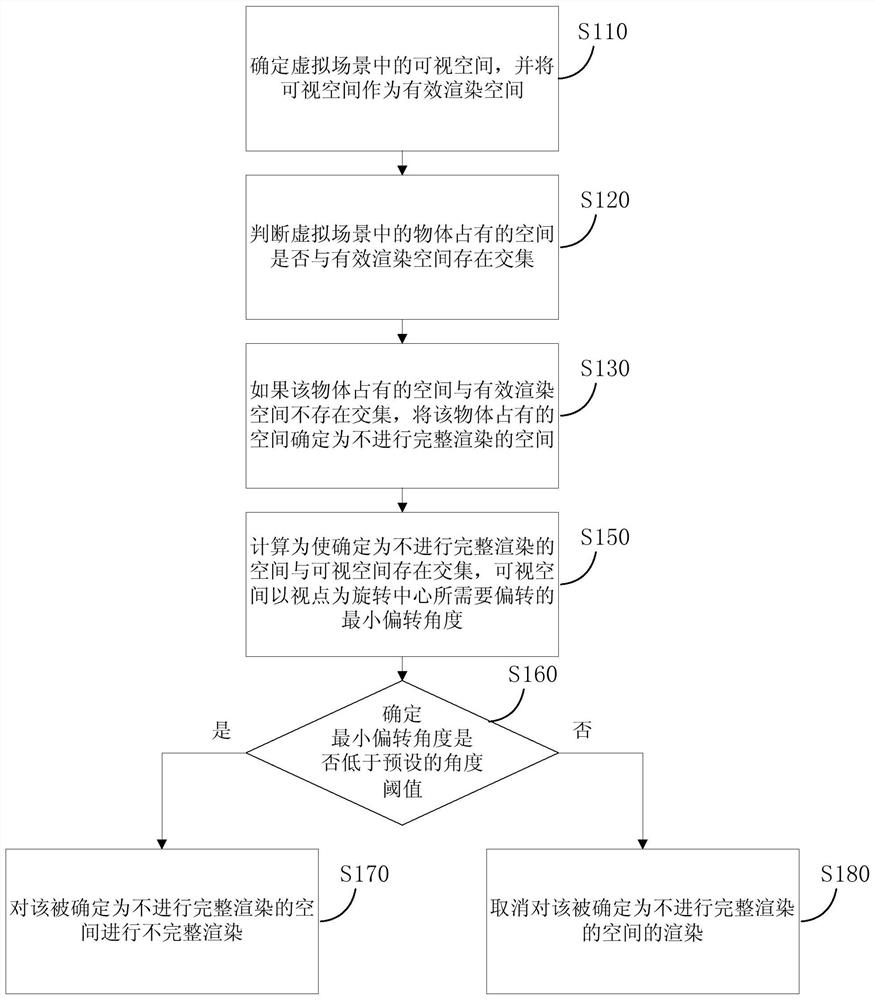

[0075] Referring to Figure 1, Figure 1 is a schematic flowchart of a method for determining rendered objects in a virtual scene according to an embodiment of the present invention. The method may include the following steps:

[0076] S110. Determine a visible space in the virtual scene, and use the visible space as an effective rendering space.

[0077] The visible space is the spatial region of the virtual scene that the user can see at the current moment. In this embodiment, the virtual reality program may designate a position in the virtual scene as a viewpoint that simulates the position of the user's eyes. The observation space visible from the viewpoint is then determined according to the position of the viewpoint and the field of view (Field Of View, FOV for...
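Determining the visible space from a viewpoint position and an FOV is commonly done with a view-frustum test. The Python below is a minimal sketch under assumptions not stated in the source: the visible space is modelled as a symmetric perspective frustum bounded by hypothetical near and far distances, each object is approximated by a bounding sphere, and the names `Viewpoint`, `sphere_in_view`, and `visible_objects` are illustrative only. It is not presented as the patent's own implementation.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)


@dataclass
class Viewpoint:
    """Hypothetical viewpoint: simulated eye position plus FOV parameters."""
    position: Vec3
    forward: Vec3     # viewing direction
    up: Vec3          # approximate up direction (must not be parallel to forward)
    fov_y_deg: float  # vertical field of view in degrees (assumed symmetric)
    aspect: float     # width / height of the view
    near: float       # assumed near clipping distance
    far: float        # assumed far clipping distance


def sphere_in_view(vp: Viewpoint, center: Vec3, radius: float) -> bool:
    """Conservative test: returns False only if the bounding sphere is certainly
    outside the frustum; spheres near frustum corners may still return True."""
    f = normalize(vp.forward)
    r = normalize(cross(f, vp.up))  # camera right axis
    u = cross(r, f)                 # camera up axis, orthogonal to f and r
    d = sub(center, vp.position)
    x, y, z = dot(d, r), dot(d, u), dot(d, f)  # sphere center in camera coordinates

    # Near and far planes along the viewing direction.
    if z + radius < vp.near or z - radius > vp.far:
        return False

    # Side planes through the viewpoint: |x| <= tan_x * z and |y| <= tan_y * z.
    tan_y = math.tan(math.radians(vp.fov_y_deg) / 2.0)
    tan_x = tan_y * vp.aspect
    for coord, t in ((x, tan_x), (-x, tan_x), (y, tan_y), (-y, tan_y)):
        # Signed distance from the center to the side plane; positive is outside.
        if (coord - t * z) / math.sqrt(1.0 + t * t) > radius:
            return False
    return True


def visible_objects(vp: Viewpoint, objects: List[Tuple[str, Vec3, float]]) -> List[str]:
    """Keep only the objects whose bounding sphere may intersect the visible space."""
    return [name for name, center, radius in objects if sphere_in_view(vp, center, radius)]
```

For example, with a viewpoint at the origin looking along -z with a 90° vertical FOV, an object centered at (0, 0, -10) is kept, while one centered at (0, 0, 10) behind the viewpoint is discarded. Bounding spheres are chosen here only because they make the plane test a single distance comparison; tighter bounding volumes would cull more objects at a higher per-object cost.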
