Systems and methods for robust large-scale machine learning

A machine learning technique, classified under machine learning, neural learning methods, and computing based on specific mathematical models, that addresses two problems: the high cost of scaling out on commodity servers and the limited scaling of I/O bandwidth.

Embodiment Construction

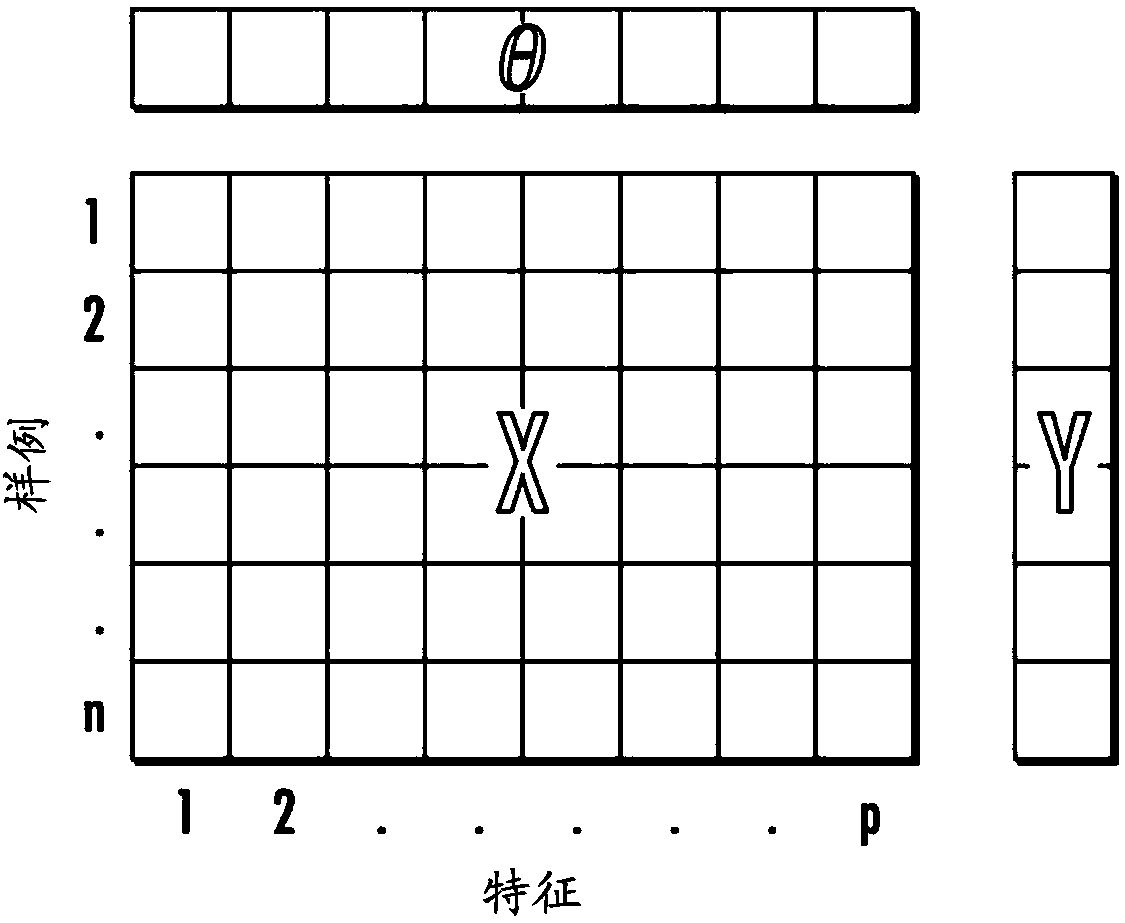

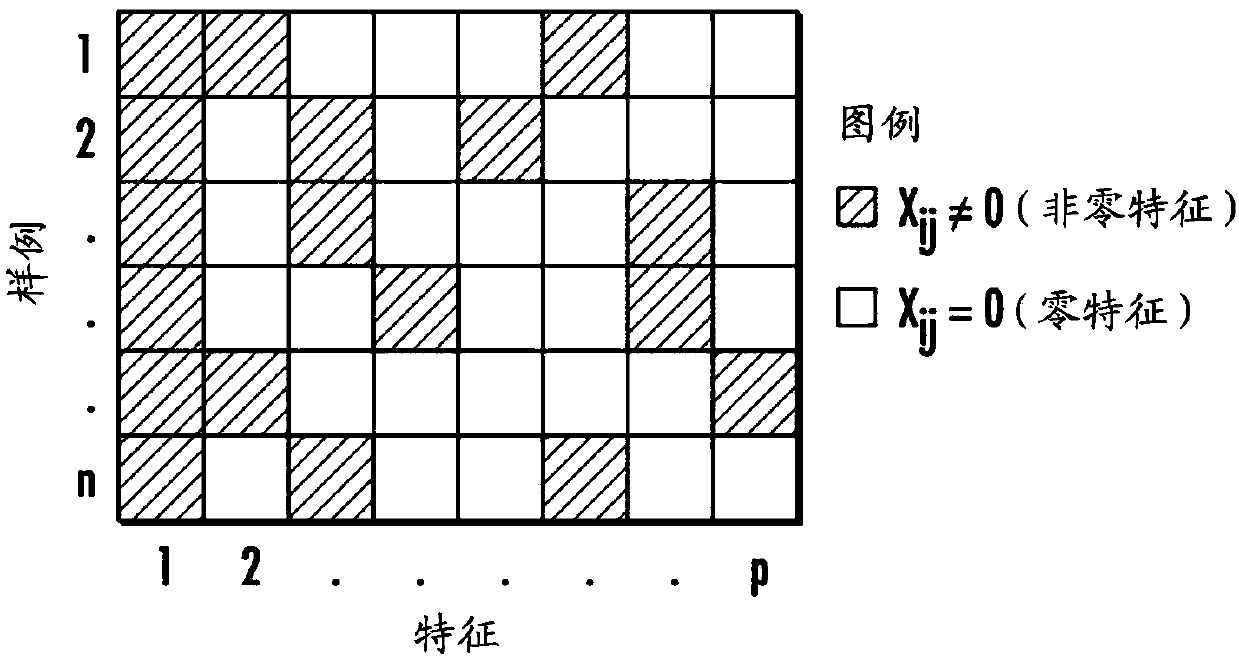

[0022] Overview of the Disclosure

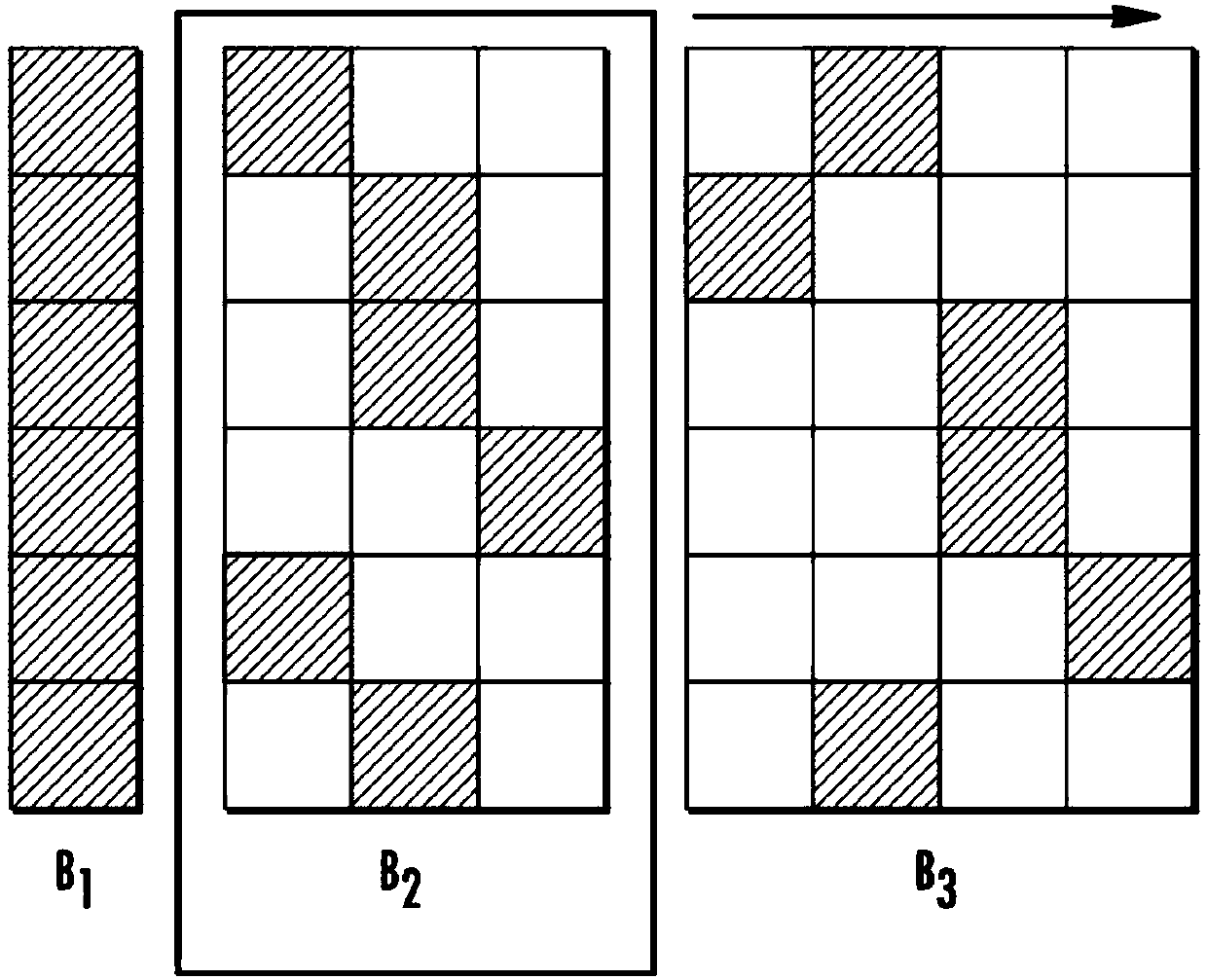

[0023] In general, the present disclosure provides systems and methods for robust large-scale machine learning. In particular, it provides a new scalable coordinate descent (SCD) algorithm for generalized linear models that overcomes the scaling problems outlined in the Background section above. The SCD algorithm described herein is highly robust: it has the same convergence behavior regardless of how far it is scaled out and regardless of the computing environment. This allows SCD to scale to tens of thousands of cores and makes it well suited to distributed computing environments, such as cloud environments built on low-cost commodity servers.

[0024] In particular, by using a natural partitioning of the parameters into blocks, updates can be performed in parallel, one block at a time, without compromising convergence. In fact, for many real-world problems, SCD has the same convergence behavior as the popu...
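The block-wise update described in [0024] can be illustrated with a minimal sketch in plain Python. This is not the disclosed implementation. It assumes a squared-error loss and assumes that each block is "pure", meaning every example has at most one non-zero feature within the block, so the per-coordinate steps inside a block do not interact. The excerpt above does not state that condition. The names `block_coordinate_descent` and `is_pure` and the toy data are hypothetical and chosen only for illustration.

```python
def is_pure(X, block):
    """Assumed condition: each row has at most one non-zero feature in the block."""
    return all(sum(1 for j in block if row[j] != 0) <= 1 for row in X)


def block_coordinate_descent(X, y, blocks, l2=0.0, epochs=50):
    """Least-squares coordinate descent that updates one block of features at a time."""
    n_rows, n_cols = len(X), len(X[0])
    w = [0.0] * n_cols
    residual = list(y)  # residual = y - X @ w, and w starts at zero

    for _ in range(epochs):
        for block in blocks:
            # Step 1: compute every step in the block from the same residual snapshot.
            # The steps are independent of each other, so this loop is the part
            # that could be spread across workers.
            steps = {}
            for j in block:
                grad = sum(X[i][j] * residual[i] for i in range(n_rows))
                curv = sum(X[i][j] ** 2 for i in range(n_rows))
                if curv + l2 > 0.0:
                    steps[j] = (grad - l2 * w[j]) / (curv + l2)
            # Step 2: apply all steps, then move on to the next block.
            for j, delta in steps.items():
                w[j] += delta
                for i in range(n_rows):
                    residual[i] -= delta * X[i][j]
    return w


# Toy data: two one-hot encoded categorical variables, one block per variable.
X = [
    [1, 0, 1, 0],
    [1, 0, 0, 1],
    [0, 1, 1, 0],
    [0, 1, 0, 1],
]
y = [3.0, 4.0, 5.0, 6.0]
blocks = [[0, 1], [2, 3]]

w = block_coordinate_descent(X, y, blocks)
predictions = [sum(x_ij * w_j for x_ij, w_j in zip(row, w)) for row in X]
```

When every block is pure, applying all steps of a block at once gives the same result as applying them one after another, which is why the within-block work can run in parallel in this sketch without changing the outcome.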
