Video perception-fused multi-task synergetic recognition method and system

A technology integrating video perception with recognition methods, applicable to character and pattern recognition, instruments, computer components, and similar fields.

Examples

Embodiment 1

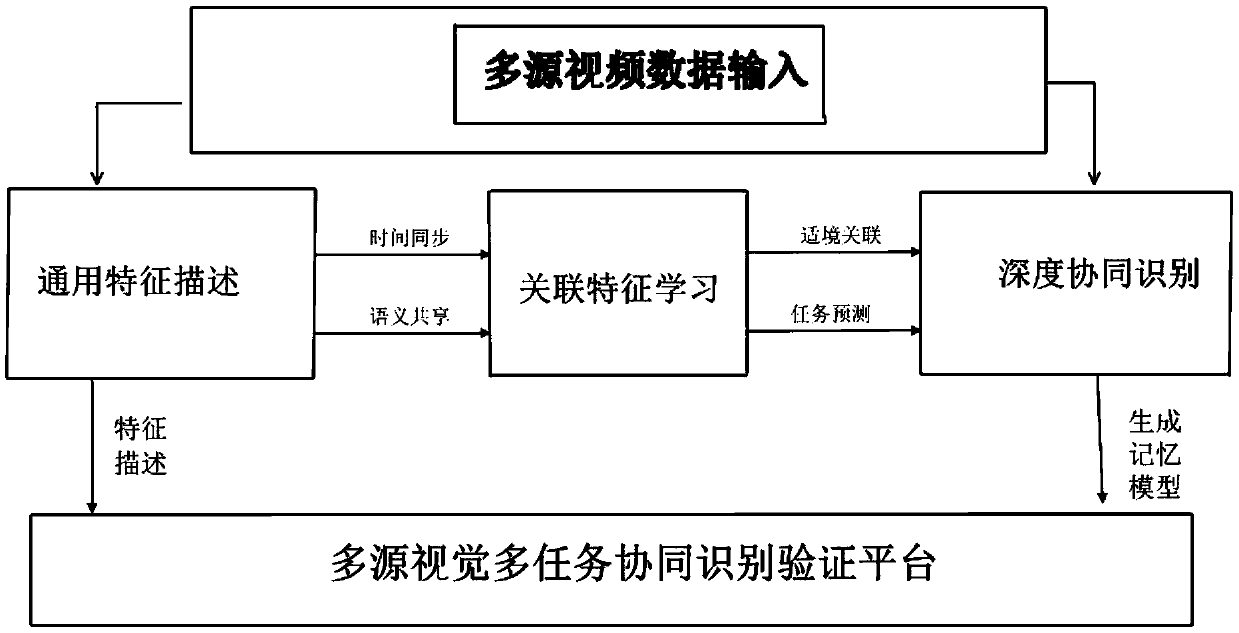

[0085] As shown in Figure 1, Embodiment 1 of the present invention provides a multi-task cooperative recognition system fused with video perception. The system includes a general feature extraction module, a collaborative feature learning module, and a deep collaborative recognition module.
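The three-module structure of Embodiment 1 can be pictured as a simple composition in which each module's output feeds the next. The following is a minimal, hypothetical Python illustration. The class name `CooperativeRecognitionSystem`, its callable fields, and the plain-list feature vectors are assumptions made for illustration only, not the patent's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Vector = List[float]


@dataclass
class CooperativeRecognitionSystem:
    """Hypothetical wiring of the three modules named in Embodiment 1."""

    # General feature extraction: per-source features -> one general description.
    extract_general: Callable[[Dict[str, Vector]], Vector]
    # Collaborative feature learning: general description -> per-task features.
    learn_collaborative: Callable[[Vector], Dict[str, Vector]]
    # Deep collaborative recognition: per-task features -> per-task result.
    recognize: Callable[[Dict[str, Vector]], Dict[str, str]]

    def run(self, sources: Dict[str, Vector]) -> Dict[str, str]:
        general = self.extract_general(sources)
        per_task = self.learn_collaborative(general)
        return self.recognize(per_task)


if __name__ == "__main__":
    # Trivial stand-in modules, used only to show the data flow.
    system = CooperativeRecognitionSystem(
        extract_general=lambda s: [sum(v) / len(v) for v in zip(*s.values())],
        learn_collaborative=lambda g: {"action": g, "person": [x * 2 for x in g]},
        recognize=lambda t: {k: "positive" if sum(v) > 0 else "negative" for k, v in t.items()},
    )
    print(system.run({"rgb": [1.0, -0.5], "depth": [0.2, 0.1]}))
```

Keeping each module as a replaceable callable mirrors the separation of extraction, collaborative learning, and recognition, so any one stage can be swapped without touching the others.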

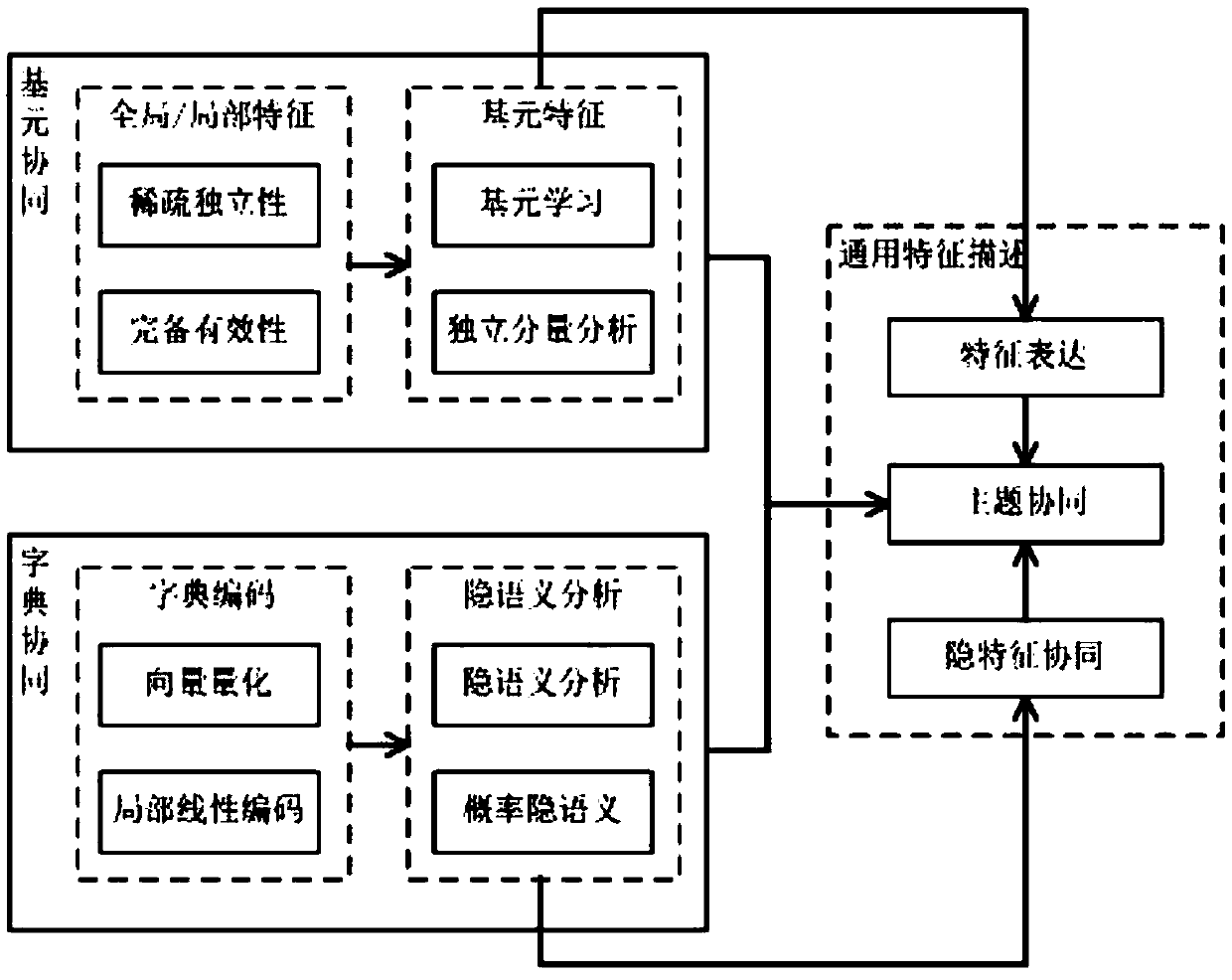

[0086] The general feature extraction module is configured to draw on the biological visual perception mechanism to build a shared semantic description under which the features of multi-source heterogeneous video data can cooperate, thereby obtaining a general feature description of the multi-source heterogeneous video data;
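One possible reading of a "shared semantic description" for heterogeneous sources is a common embedding space. Each source keeps its own native feature length, and a source-specific projection maps it into the shared space, where the projected features are fused. This Python sketch assumes fixed linear projections and mean fusion. The function names and the fusion rule are hypothetical, and the sketch does not model the biological visual perception mechanism itself.

```python
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]  # rows = shared dimensions, columns = source feature dimensions


def project(weights: Matrix, x: Vector) -> Vector:
    """Linear map W @ x from a source's native space into the shared space."""
    if any(len(row) != len(x) for row in weights):
        raise ValueError("projection width must match source feature length")
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def general_feature(sources: Dict[str, Vector], projections: Dict[str, Matrix]) -> Vector:
    """Average the per-source projections into one general description.

    Sources may have different native lengths (heterogeneous data); each has its
    own projection so that all of them land in the same shared dimension.
    """
    projected = [project(projections[name], x) for name, x in sources.items()]
    dim = len(projected[0])
    if any(len(p) != dim for p in projected):
        raise ValueError("all projections must target the same shared dimension")
    return [sum(p[i] for p in projected) / len(projected) for i in range(dim)]


if __name__ == "__main__":
    # A 3-dim "rgb" feature and a 2-dim "audio" feature, both mapped to 2 dims.
    projections = {"rgb": [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]], "audio": [[0.0, 1.0], [1.0, 0.0]]}
    sources = {"rgb": [2.0, 4.0, 6.0], "audio": [1.0, 3.0]}
    print(general_feature(sources, projections))
```

In a learned system the projection matrices would be trained rather than fixed. They are hard-coded here only to keep the example self-contained.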

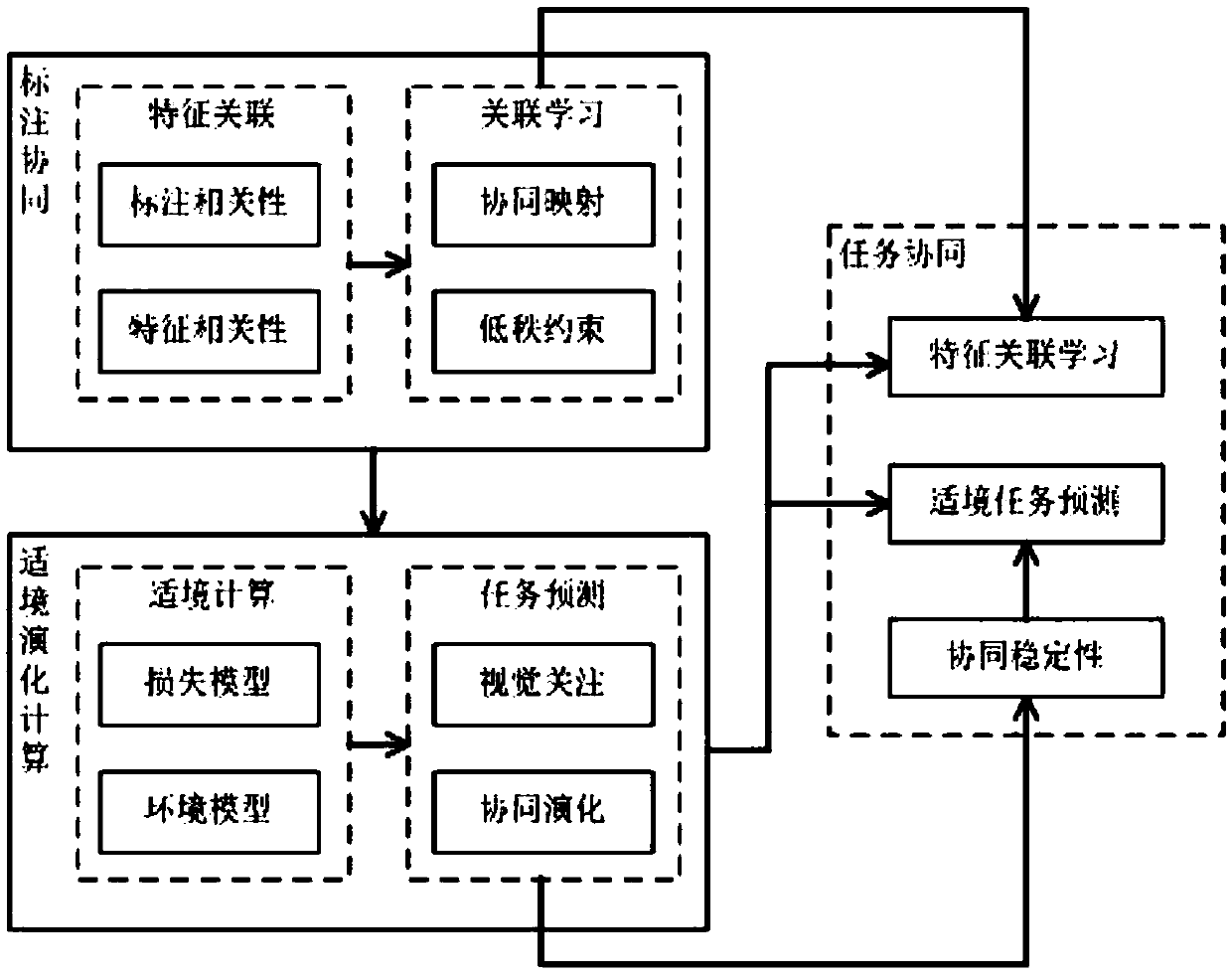

[0087] The collaborative feature learning module is configured to apply adaptive computing theory to establish feature association learning and task prediction for task coordination, thereby realizing a context-aware mechanism for predicting associated tasks;
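This excerpt does not spell out the adaptive computing mechanism. As a hedged stand-in, this sketch uses context-conditioned soft attention across tasks. Each task's feature is re-mixed from all task features, weighted by how relevant they are under the current context. The names `associate_tasks` and `context`, and the choice of dot-product attention, are assumptions and not taken from the patent.

```python
import math
from typing import Dict, List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax (subtracts the max score before exponentiating)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def associate_tasks(task_feats: Dict[str, Vector], context: Vector) -> Dict[str, Vector]:
    """For each task, mix the features of all tasks using context-dependent weights.

    The query for task t is its own feature plus the context vector, so the same
    task can attend to different related tasks depending on the input context.
    """
    names = list(task_feats)
    out: Dict[str, Vector] = {}
    for t in names:
        query = [q + c for q, c in zip(task_feats[t], context)]
        weights = softmax([dot(query, task_feats[u]) for u in names])
        dim = len(task_feats[t])
        out[t] = [sum(w * task_feats[u][i] for w, u in zip(weights, names)) for i in range(dim)]
    return out


if __name__ == "__main__":
    feats = {"action": [1.0, 0.0], "person": [0.0, 1.0]}
    # A context pointing toward the "person" direction pulls "action" toward it.
    print(associate_tasks(feats, context=[0.0, 10.0]))
```

Because the weights depend on the context vector, the association between tasks changes from input to input, which is the sense in which this sketch is "context-aware".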

[0088] The deep collaborative recognition module is configured to incorporate long-term dependencies and propose a visual multi-task de...
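Paragraph [0088] is truncated, so its specific design is unknown. This sketch only illustrates the general idea it names: carrying long-term temporal dependence across video frames into several task outputs. It assumes a leaky running state (an exponential moving average) as a simplified stand-in for a recurrent network, plus one linear head per task. The names `temporal_state`, `multi_task_heads`, and the `keep` parameter are hypothetical.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def temporal_state(frames: Sequence[Vector], keep: float = 0.9) -> Vector:
    """Leaky running state over per-frame features.

    h_t = keep * h_{t-1} + (1 - keep) * x_t, starting from h_0 = 0.
    A larger `keep` lets older frames influence the state for longer.
    """
    if not frames:
        raise ValueError("need at least one frame")
    if not 0.0 <= keep < 1.0:
        raise ValueError("keep must be in [0, 1)")
    state = [0.0] * len(frames[0])
    for x in frames:
        state = [keep * h + (1.0 - keep) * xi for h, xi in zip(state, x)]
    return state


def multi_task_heads(state: Vector, heads: Dict[str, Vector]) -> Dict[str, float]:
    """One linear score per task, all read from the same shared temporal state."""
    return {task: sum(w * h for w, h in zip(weights, state)) for task, weights in heads.items()}


if __name__ == "__main__":
    frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
    state = temporal_state(frames, keep=0.5)
    print(multi_task_heads(state, {"action": [1.0, 0.0], "scene": [0.0, 1.0]}))
```

Sharing one temporal state across all heads is what couples the tasks here. A real system would more likely use a learned recurrent or attention-based encoder in place of the fixed moving average.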

Embodiment 2

[0100] Embodiment 2 of the present invention provides a multi-task recognition method for fusing multi-source video perception data using the system described in Embodiment 1. The method comprises the steps of:

[0101] First, drawing on the biological visual perception mechanism, a shared semantic description for the feature collaboration of multi-source heterogeneous video data is established, and a general feature description of the multi-source heterogeneous video data is obtained.

