Multi-source image fusion method based on discriminant dictionary learning and morphological decomposition

A technique based on dictionary learning and morphological components, applied in character and pattern recognition, instruments, computer components, and related fields, that addresses problems not considered by existing image fusion methods

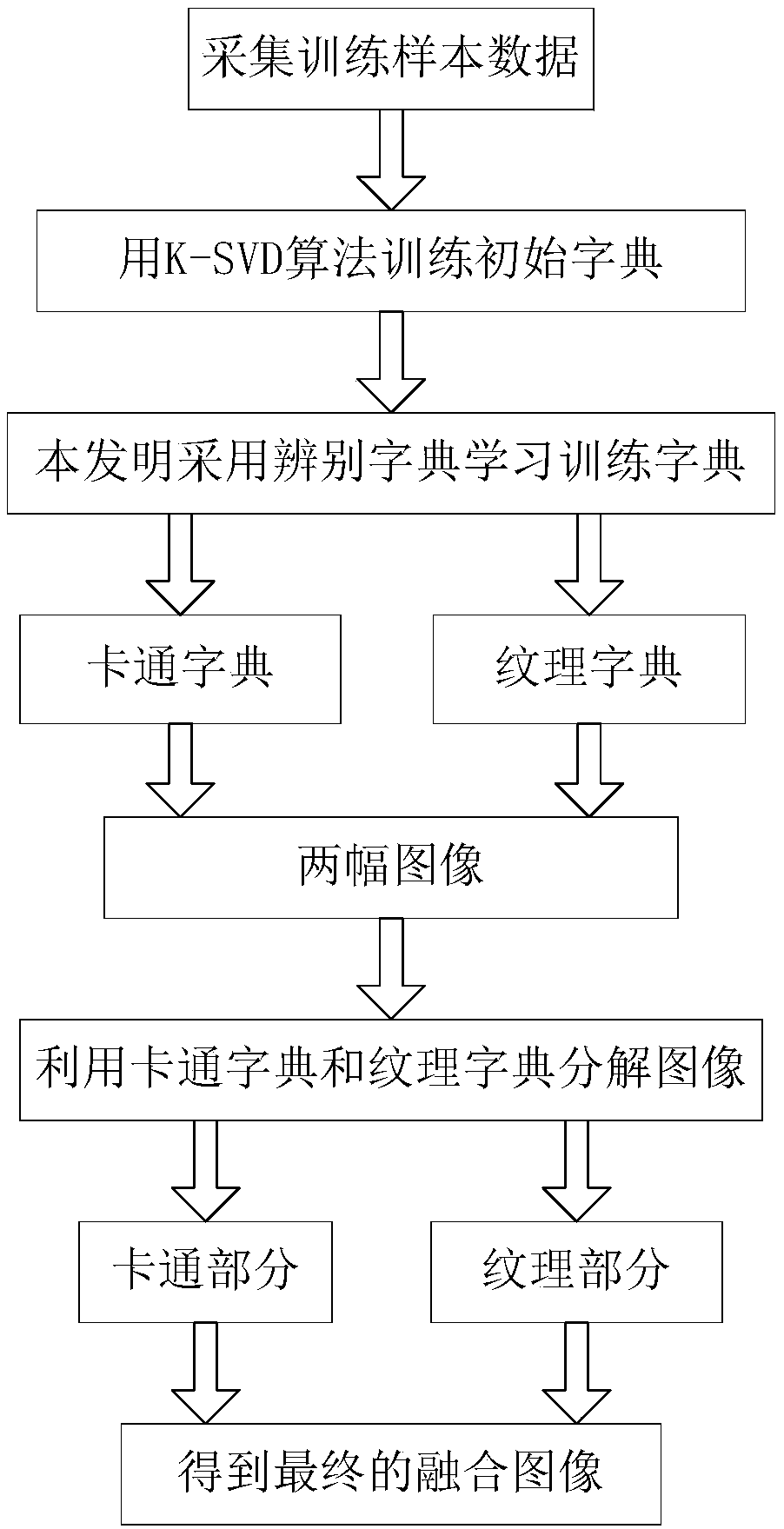

Examples

Embodiment 1

[0078] Embodiment 1: Medical image fusion

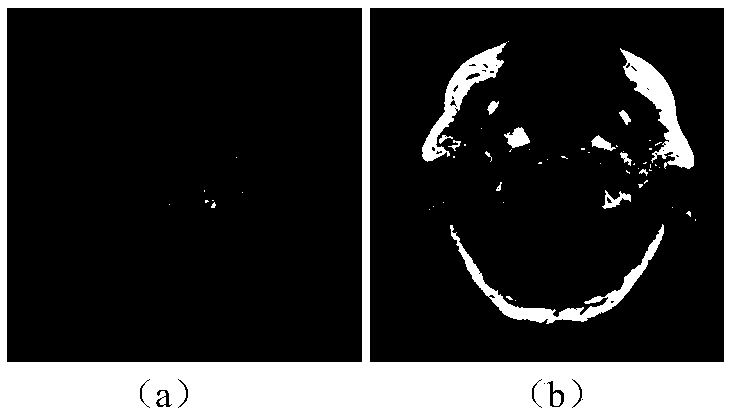

[0079] In the first set of experiments, we performed fusion experiments on the pair of multimodal medical images shown in Figure 2(a) and (b), where Figure 2(a) is an MR-T1 image and Figure 2(b) is an MR-T2 image. As Figure 2(a) and (b) show, because of the difference in weighting, the MR-T1 and MR-T2 images contain a large amount of complementary information. If this information can be combined into a single fused image, it will greatly benefit doctors' diagnosis and treatment, as well as subsequent image processing such as image classification, segmentation, and target recognition.

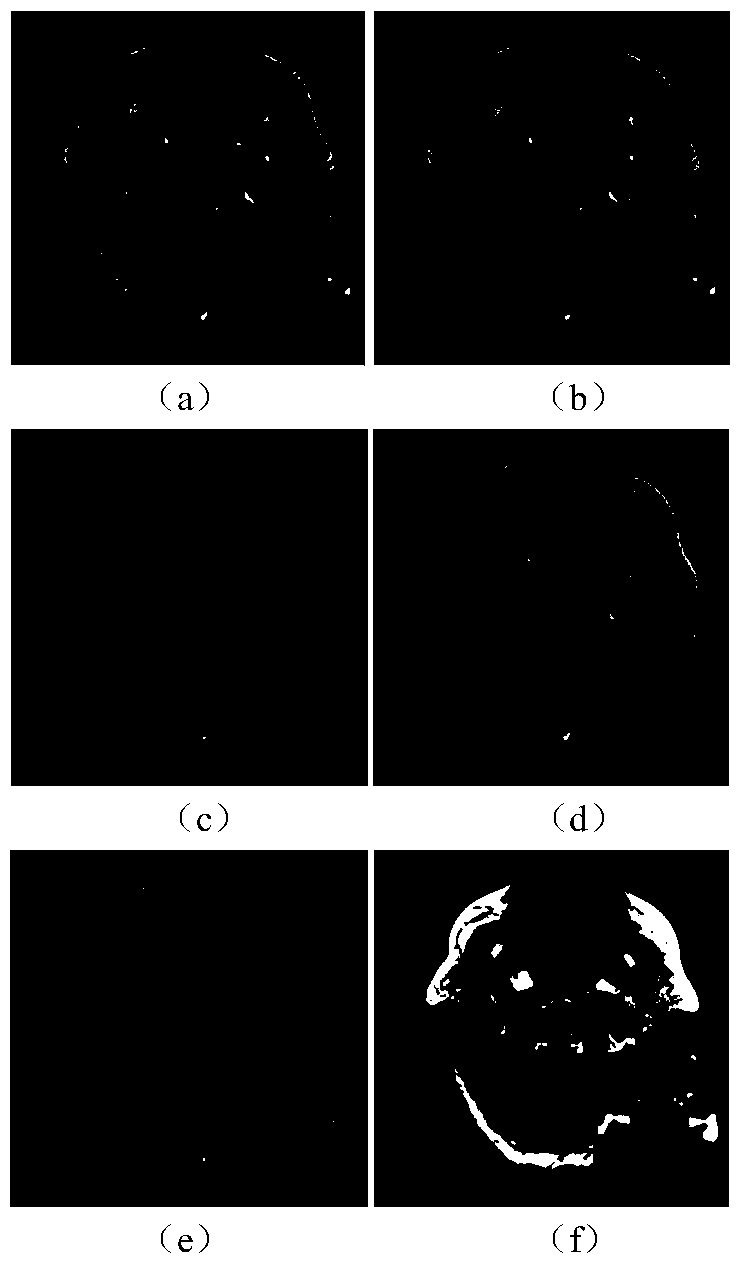

[0080] Figure 3(a)-(f) show, in order, the fusion results of NSCT, NSCT-SR, Kim's, Zhu-KSVD, ASR and the method proposed in this paper. It can be seen that the fusion methods differ in how well they preserve image edge detail. Among them, the NSCT-based fusion method can effective...

Embodiment 2

[0083] Embodiment 2: Multi-focus image fusion

[0084] In the second set of experiments, we performed fusion experiments on the pair of multi-focus images shown in Figure 4(a) and (b). As Figure 4(a) and (b) show, when the camera lens is focused on a particular object, that object is imaged clearly, while objects far from the focal plane appear blurred. In practice, however, computer vision and image processing tasks such as target segmentation, image classification, and target recognition need an image in which all targets are clear. This problem can usually be solved by multi-focus image fusion. The method proposed in this paper can be used to fuse not only medical images but also multi-focus images.
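To make the multi-focus idea concrete, the following is a minimal sketch of a naive baseline, not the discriminant dictionary learning method of this patent. It assumes two registered grayscale images of equal size and, for each pixel, keeps the source whose neighbourhood has the larger Laplacian energy (a common sharpness proxy). The names `local_energy`, `fuse_multifocus`, and the `radius` parameter are hypothetical and chosen only for illustration.

```python
import numpy as np


def local_energy(img, radius=1):
    """Sum of squared 4-neighbour Laplacian responses in a square window."""
    img = img.astype(np.float64)
    h, w = img.shape
    # Discrete Laplacian with edge replication at the borders.
    p = np.pad(img, 1, mode="edge")
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    sq = lap ** 2
    # Accumulate the squared response over a (2*radius+1)^2 window.
    q = np.pad(sq, radius, mode="edge")
    energy = np.zeros((h, w))
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            energy += q[dy:dy + h, dx:dx + w]
    return energy


def fuse_multifocus(a, b, radius=1):
    """Per pixel, keep the source image that looks sharper locally."""
    mask = local_energy(a, radius) >= local_energy(b, radius)
    return np.where(mask, a, b)
```

A pixel-wise selection rule like this tends to produce artifacts near the boundary between focused and defocused regions, which is one reason transform-domain and sparse-representation methods such as those compared in this embodiment are used instead.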

[0085] Figure 5(a)-(f) show a visual comparison of the fusion results of NSCT, NSCT-SR, Kim's, Zhu-KSVD, ASR and our me...

Embodiment 3

[0088] Embodiment 3: Fusion of infrared and visible light images

[0089] In the third set of experiments, we applied the different methods to the infrared and visible light images shown in Figure 6(a) and (b), where Figure 6(a) is an infrared image and Figure 6(b) is a visible light image. These source images show that visible light images clearly capture the background details of the scene but cannot clearly image thermal targets (such as pedestrians and vehicles); conversely, infrared images clearly capture thermal targets (such as pedestrians and vehicles) but cannot clearly image background that is not at high temperature. Obtaining an image in which both the background and the thermal targets are clear plays an important role in target tracking, recognition, segmentation, and detection.
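As an illustration of how complementary infrared and visible content can be combined, here is a minimal two-scale sketch. It is a generic baseline under stated assumptions, not the morphological decomposition or dictionary learning of this patent: each registered grayscale image is split into a smooth base layer (box blur) and a detail layer (the residual), the base layers are averaged, and the detail with the larger magnitude is kept at each pixel. The names `box_blur`, `fuse_two_scale`, and `radius` are hypothetical.

```python
import numpy as np


def box_blur(img, radius=2):
    """Mean filter over a (2*radius+1)^2 window with edge replication."""
    img = img.astype(np.float64)
    h, w = img.shape
    k = 2 * radius + 1
    p = np.pad(img, radius, mode="edge")
    out = np.zeros((h, w))
    for dy in range(k):
        for dx in range(k):
            out += p[dy:dy + h, dx:dx + w]
    return out / (k * k)


def fuse_two_scale(ir, vis, radius=2):
    """Average the base layers; keep the stronger detail at each pixel."""
    base_ir, base_vis = box_blur(ir, radius), box_blur(vis, radius)
    det_ir, det_vis = ir - base_ir, vis - base_vis
    base = 0.5 * (base_ir + base_vis)
    detail = np.where(np.abs(det_ir) >= np.abs(det_vis), det_ir, det_vis)
    return base + detail
```

Averaging the base layers is the simplest possible choice and can reduce the contrast of thermal targets; it is used here only to keep the sketch short.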

[0090] The fused images obtained by the different methods are shown in Figure 7(a)-(f). Figure 7(a) and (b) are the fusion r...
