Human action recognition and its neural network generation method, device and electronic equipment

A human action recognition and neural network technique, applied in the field of human action recognition and neural network generation, that addresses the problem of poor action recognition capability.

Examples

Embodiment 1

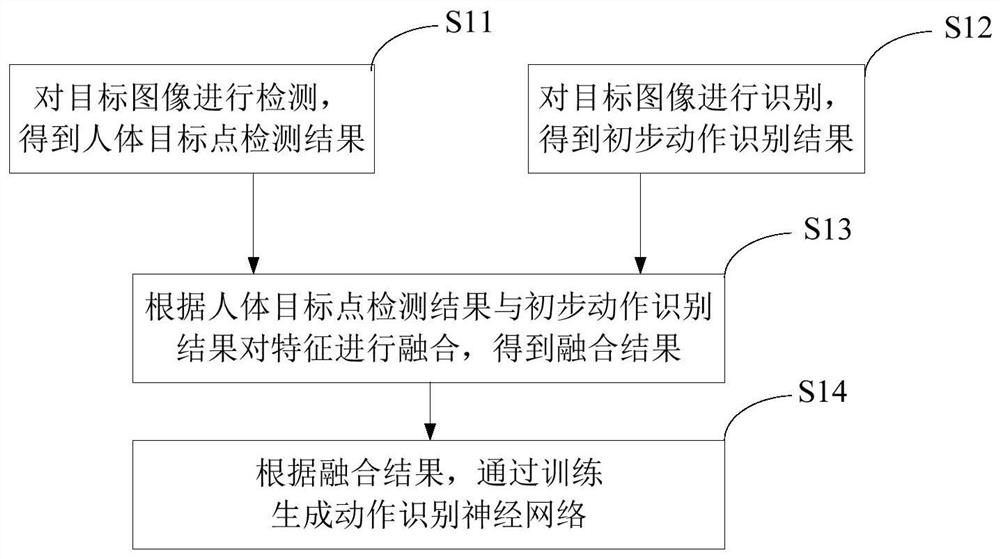

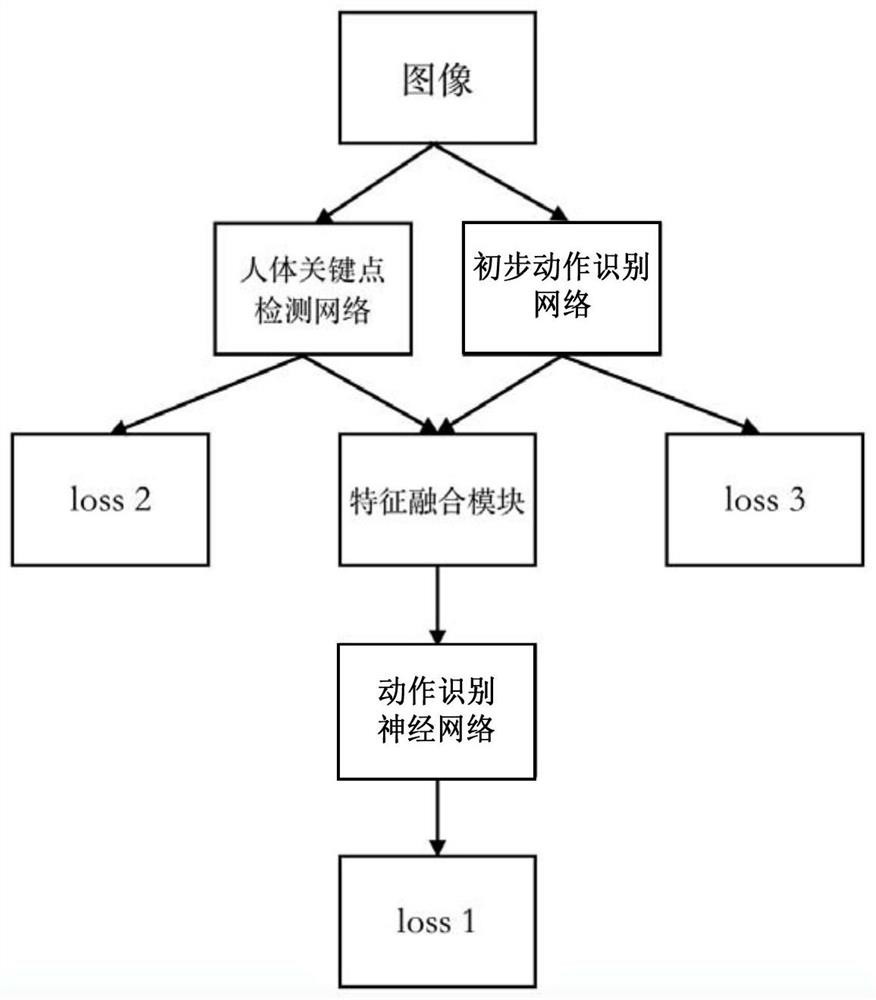

[0061] An embodiment of the present invention provides a neural network generation method for human action recognition that fuses human body key point information. As shown in Figure 1, the neural network generation method includes:

[0062] S11: Detect the target image, and obtain the detection result of the human target point.

[0063] The target image may be a dynamic video, a still picture, or the like, acquired by an image acquisition device such as an ordinary camera or a depth camera. The human target point detection result may include position information of several key points of the human body and angle information between those key points.
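To illustrate how angle information might be derived from key point positions, the following is a minimal sketch, not the method of the patent. It assumes 2D key points given as (x, y) pairs and computes the angle at a middle point from the two segments meeting there. The function name `joint_angle` and the sample coordinates are hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at point b between segments b->a and b->c.

    a, b, c are (x, y) key point positions. This layout is an assumption
    made for illustration; the source does not specify a data format.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(v1[0], v1[1])
    n2 = math.hypot(v2[0], v2[1])
    if n1 == 0 or n2 == 0:
        raise ValueError("coincident key points; angle is undefined")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


# Hypothetical coordinates for three key points (e.g. shoulder, elbow, wrist).
shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
angle = joint_angle(shoulder, elbow, wrist)
```

With these sample points the two segments are perpendicular, so the computed angle is a right angle. A depth camera would supply 3D positions, in which case the same dot-product formula applies with a third coordinate.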

[0064] In this embodiment, the target image on which action recognition is to be performed is detected first; that is, the target image is detected before it is formally input into the action recognition neural network...

Embodiment 2

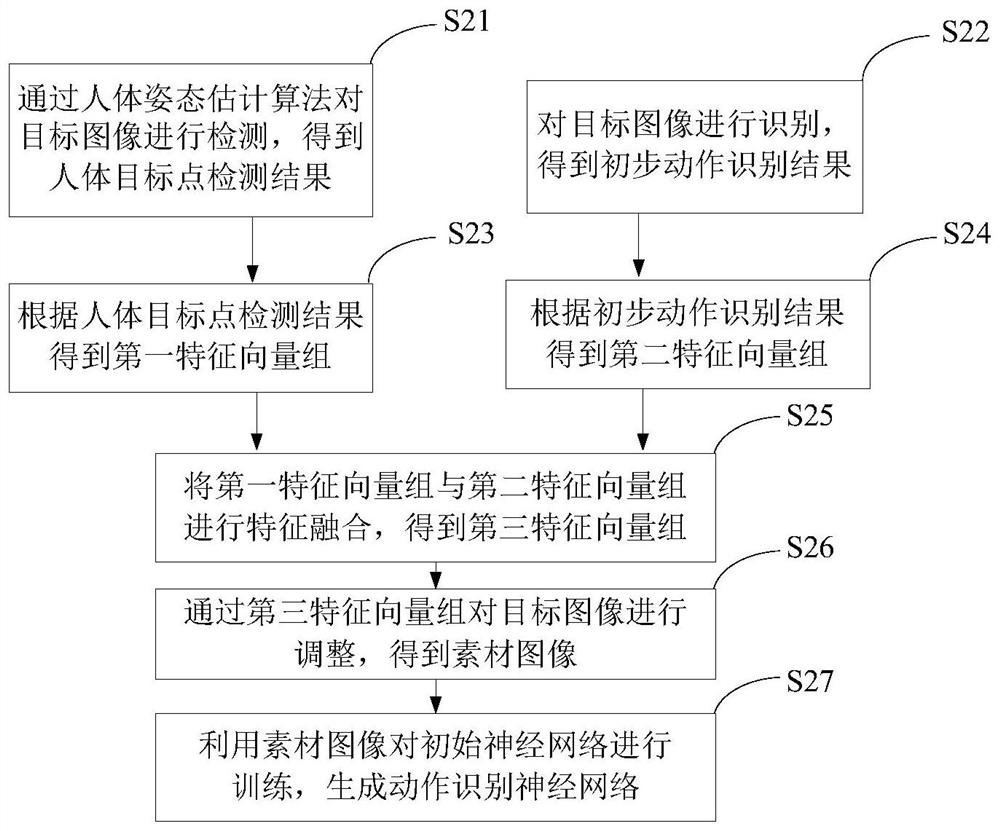

[0077] An embodiment of the present invention provides a neural network generation method for human action recognition that fuses human body key point information. As shown in Figure 2, the neural network generation method includes:

[0078] S21: Detect the target image through the human body pose estimation algorithm, and obtain the detection result of the human body target point.

[0079] In this step, the target image is detected and recognized using human body pose estimation to obtain the human target point detection result. The detection result includes at least one of: position information of human body joint points, angle information of human body joint points, position information of key body parts, and angle information of key body points. For example, the human target points can be the top of the head, the neck, the left shoulder, the ...

Embodiment 3

[0108] An embodiment of the present invention provides a human action recognition method that fuses human body key point information. As shown in Figure 4, the human action recognition method includes:

[0109] S31: Detect the target image to obtain a human target point detection result.

[0110] S32: Recognize the target image to obtain a preliminary action recognition result.

[0111] S33: Fuse the features according to the human target point detection result and the preliminary action recognition result to obtain a fusion result.

[0112] S34: Generate an action recognition neural network through training according to the fusion result.

[0113] Preferably, steps S31, S32, S33 and S34 are implemented in the same way as in Embodiment 1 or Embodiment 2, and are not described again here.
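The fusion in step S33 can be pictured with a small sketch. The excerpt does not state which fusion operation is used, so simple concatenation is assumed here purely for illustration. The names `flatten_keypoints` and `fuse_features`, and all sample values, are hypothetical. Training (S34) is not shown.

```python
def flatten_keypoints(keypoints):
    """Flatten [(x, y), ...] into [x0, y0, x1, y1, ...]."""
    return [coord for point in keypoints for coord in point]


def fuse_features(keypoints, preliminary_scores):
    """Fuse the two inputs by concatenation.

    Concatenation is an assumed fusion rule; the source only says that
    features are fused according to the human target point detection
    result (S31) and the preliminary action recognition result (S32).
    """
    return flatten_keypoints(keypoints) + list(preliminary_scores)


# Hypothetical S31 output: normalized positions of three key points.
keypoints = [(0.5, 0.1), (0.5, 0.3), (0.4, 0.3)]
# Hypothetical S32 output: preliminary scores for three action classes.
preliminary_scores = [0.7, 0.2, 0.1]

fused = fuse_features(keypoints, preliminary_scores)
```

In this sketch the fused vector holds the six key point coordinates followed by the three class scores. A real system might instead fuse intermediate feature maps, which the excerpt leaves open.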

[0114] S35: Recognize the target image through the motion recognit...
