Action recognition method and its neural network generation method, device and electronic equipment

A neural network and action recognition technology in the field of image recognition. It addresses the problem of poor action recognition performance and improves the stability and accuracy of action recognition.


Examples

Embodiment 1

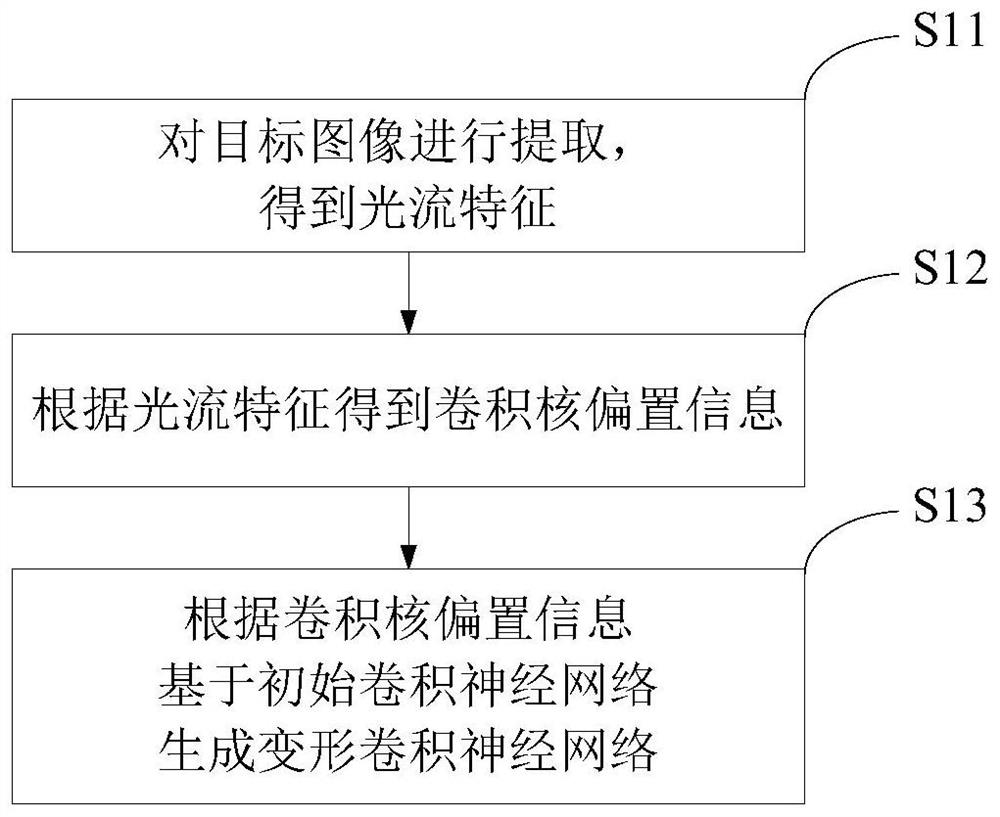

[0059] An embodiment of the present invention provides a neural network generation method for action recognition, namely a method for generating a variable convolution kernel neural network that fuses optical flow information. As shown in Figure 1, the method includes:

[0060] S11: Extract optical flow features from the target image.

[0061] The target image may be a dynamic video or a static picture acquired by an image acquisition device such as an ordinary camera or a depth camera. In this embodiment, optical flow feature information is first extracted from the target image input to the image recognition neural network, yielding the optical flow feature.
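The text does not say how the optical flow feature is computed. One common hand-crafted option, substituted here purely for illustration, is a magnitude-weighted histogram of flow directions (the idea behind HOF descriptors). The sketch assumes the flow field is already available as two grids `u` and `v`; the function name `flow_orientation_histogram` and the default of 8 bins are hypothetical choices, not taken from the patent.

```python
import math


def flow_orientation_histogram(u, v, num_bins=8):
    """Magnitude-weighted histogram of flow directions, L1-normalized.

    Hypothetical feature extractor (not the patent's method).
    u, v: 2-D lists (rows x cols) of horizontal / vertical flow components.
    """
    hist = [0.0] * num_bins
    for row_u, row_v in zip(u, v):
        for du, dv in zip(row_u, row_v):
            mag = math.hypot(du, dv)
            if mag == 0.0:
                continue  # static pixels carry no direction information
            angle = math.atan2(dv, du) % (2 * math.pi)  # map to [0, 2*pi)
            b = int(angle / (2 * math.pi) * num_bins) % num_bins
            hist[b] += mag  # faster motion contributes more weight
    total = sum(hist)
    # Normalize so the feature does not depend on the overall speed scale.
    return [h / total for h in hist] if total > 0 else hist
```

For a field in which every pixel moves one unit to the right, all weight falls in bin 0: `flow_orientation_histogram([[1.0, 1.0]], [[0.0, 0.0]])` returns `[1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]`.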

[0062] Note that optical flow is the instantaneous velocity of the pixel motion, on the observed imaging plane, of an object moving in space. Simply put, optical flow arises from the motion of the foreground object itself in the scene, the movement of the ca...
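The definition above is usually made precise through the brightness-constancy assumption: a moving point keeps its intensity between frames. The standard textbook derivation (not taken from this patent) linearizes that assumption with a first-order Taylor expansion, where $I_x, I_y, I_t$ are the partial derivatives of image intensity and $(u, v)$ is the flow at a pixel:

```latex
I(x, y, t) = I(x + \Delta x,\; y + \Delta y,\; t + \Delta t)
  \approx I(x, y, t) + I_x \Delta x + I_y \Delta y + I_t \Delta t
\;\Longrightarrow\;
I_x u + I_y v + I_t = 0,
\qquad u = \frac{\Delta x}{\Delta t},\quad v = \frac{\Delta y}{\Delta t}
```

This gives one equation in two unknowns per pixel (the aperture problem), so practical optical flow methods add a further constraint, such as constant flow within a local window or global smoothness of the flow field.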

Embodiment 2

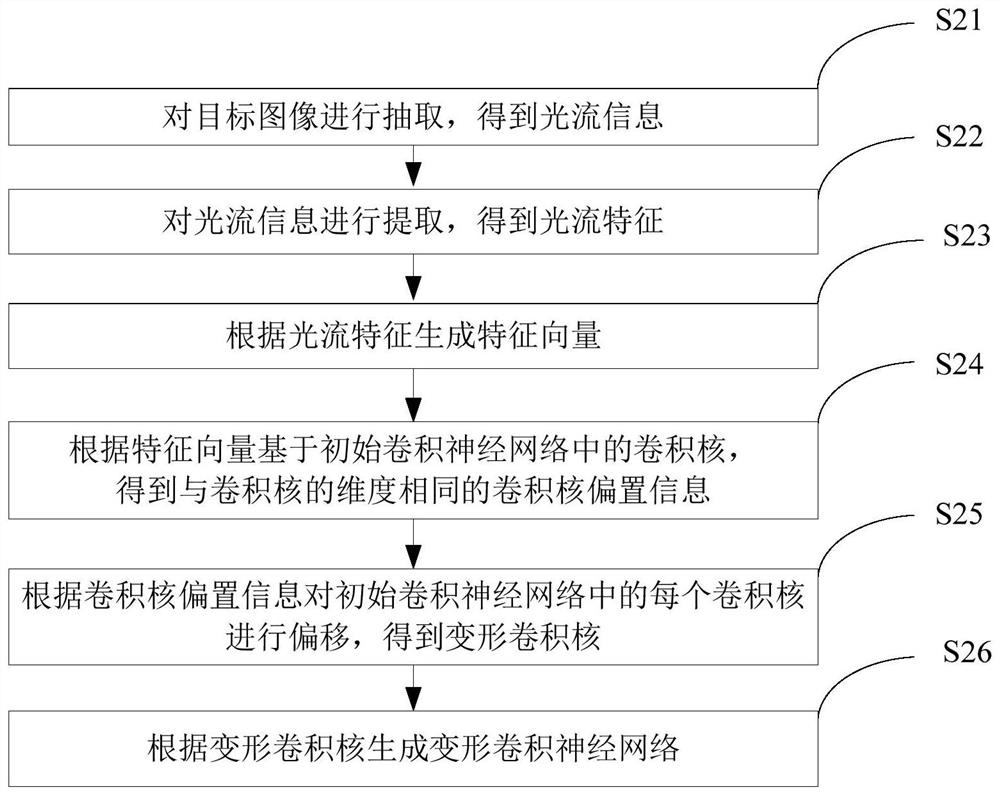

[0071] An embodiment of the present invention provides a neural network generation method for action recognition, namely a method for generating a variable convolution kernel neural network that fuses optical flow information. As shown in Figure 2, the method includes:

[0072] S21: Extract optical flow information from the target image.

[0073] In this embodiment, optical flow information is first extracted from the target image input to the image recognition neural network. Optical flow information expresses changes in the image; because it contains information about the target's motion, an observer can use it to determine how the target moves.

[0074] Preferably, the optical flow information is extracted from the target image using an optical flow method. Note that the optical flow method uses the changes of pixels in the image sequence in the time d...
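The patent leaves the choice of optical flow method open. As one classical example, swapped in here and not specified by the source, the Lucas–Kanade method assumes the flow is constant inside a small window and solves the constraint `I_x*u + I_y*v + I_t = 0` for all pixels of that window by least squares. The function name `lucas_kanade_window`, the nested-list frame layout, and the 3x3 default window are hypothetical; the sketch also assumes small motion and a window that stays away from the image border.

```python
def lucas_kanade_window(prev, curr, cx, cy, half=1):
    """Estimate the flow (u, v) at pixel (cx, cy) from two grayscale frames.

    prev, curr: 2-D lists indexed [y][x]. Spatial gradients use central
    differences on `prev`; the temporal gradient is curr - prev. The 2x2
    least-squares normal equations are solved over a (2*half+1)^2 window.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0  # dI/dx
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0  # dI/dy
            it = curr[y][x] - prev[y][x]                  # dI/dt
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        # Gradients in the window point in a single direction (or vanish).
        raise ValueError("window is ill-conditioned (aperture problem)")
    # [u, v]^T = -A^{-1} b with A = [[sxx, sxy], [sxy, syy]], b = [sxt, syt]
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v
```

For the bilinear test pattern `prev[y][x] = x * y` shifted one pixel to the right (`curr[y][x] = (x - 1) * y`), the estimate at (3, 3) is u = 1.0, v = 0.0. A frame whose gradient points in only one direction triggers the ill-conditioned branch, which is the aperture problem in practice.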

Embodiment 3

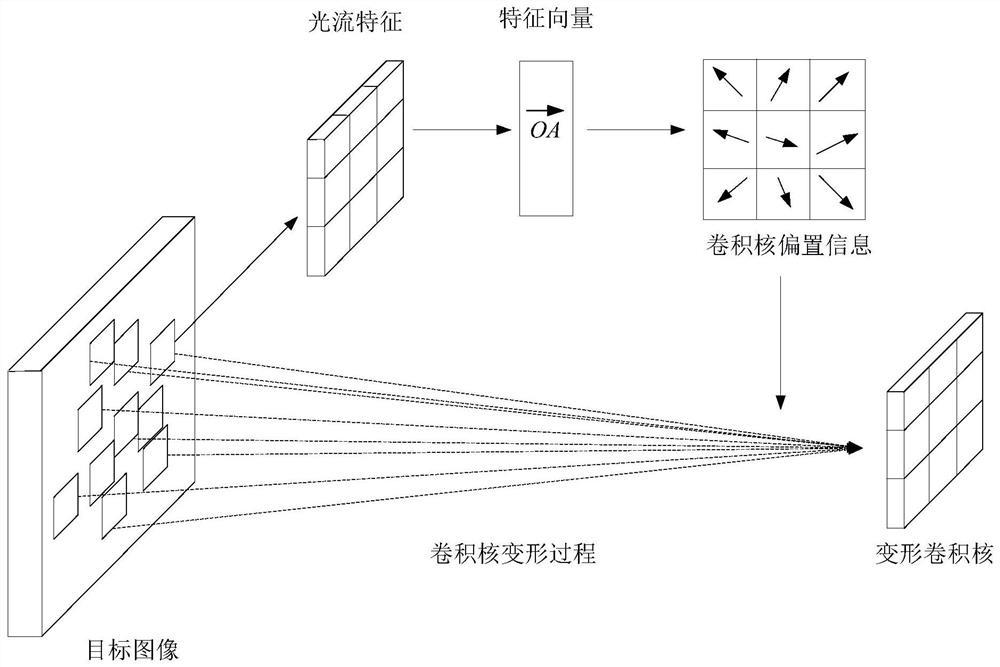

[0091] This embodiment provides an application example of the above neural network generation method for action recognition. In one implementation, the initial convolutional neural network is a two-dimensional convolutional neural network.

[0092] Preferably, the action recognition method based on the two-dimensional deformable convolution kernel neural network may include the following steps: first, extracting optical flow information from the target image; then extracting optical flow features from the optical flow information; then generating a feature vector; then, based on the feature vector and the two-dimensional convolution kernel in the two-dimensional convolutional neural network, obtaining a spatial-dimension offset vector and a temporal-dimension offset vector; after that, spatially offsetting the two-dimensional convolution kernel in the two-dimensional convolutional neural network according to the spatial-dimension offset vector to obtain the two-d...
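The paragraph describes shifting a 2-D convolution kernel by spatial and temporal offset vectors. A minimal sketch in the spirit of deformable convolution is shown below: each kernel tap is displaced by its own (dy, dx) offset and the input is read with bilinear interpolation, while a fractional temporal offset is handled by linear interpolation between neighboring frames. All function names, the one-offset-per-tap layout, and the interpolation scheme are assumptions, since the text is truncated before it specifies how the offsets are applied, and the offsets are taken as given rather than predicted from the feature vector.

```python
import math


def bilinear(img, y, x):
    """Sample img (2-D list, [y][x]) at fractional (y, x); zero outside."""
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0
    total = 0.0
    for yy, wy in ((y0, 1 - dy), (y0 + 1, dy)):
        for xx, wx in ((x0, 1 - dx), (x0 + 1, dx)):
            if 0 <= yy < len(img) and 0 <= xx < len(img[0]):
                total += wy * wx * img[yy][xx]
    return total


def deformable_conv_at(img, kernel, cy, cx, offsets):
    """One output value of a hypothetical 2-D deformable convolution.

    kernel: k x k weights (k odd). offsets: k*k (dy, dx) pairs in row-major
    tap order, added to the regular grid position before sampling.
    """
    k = len(kernel)
    r = k // 2
    out = 0.0
    for i in range(k):
        for j in range(k):
            dy, dx = offsets[i * k + j]
            out += kernel[i][j] * bilinear(img, cy + i - r + dy, cx + j - r + dx)
    return out


def temporal_sample(frames, t, cy, cx):
    """Read pixel (cy, cx) at fractional time index t in [0, len(frames) - 1]
    by linear interpolation between the two nearest frames."""
    t0 = math.floor(t)
    w = t - t0
    t1 = min(t0 + 1, len(frames) - 1)
    return (1 - w) * frames[t0][cy][cx] + w * frames[t1][cy][cx]
```

With all offsets set to zero, `deformable_conv_at` reduces to an ordinary 3x3 convolution; shifting every tap by half a pixel horizontally makes each sample the average of two horizontally adjacent pixels.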
