Engineering Computer Memory Integrated Management System

An integrated management technology in the field of computing, applicable to calculation, program control design, multi-program devices, and similar areas. It addresses problems such as reduced performance and increased cache errors, and achieves the effects of ensuring stability, reducing cache errors, and supporting a wide range of applications.

Examples

Embodiment 1

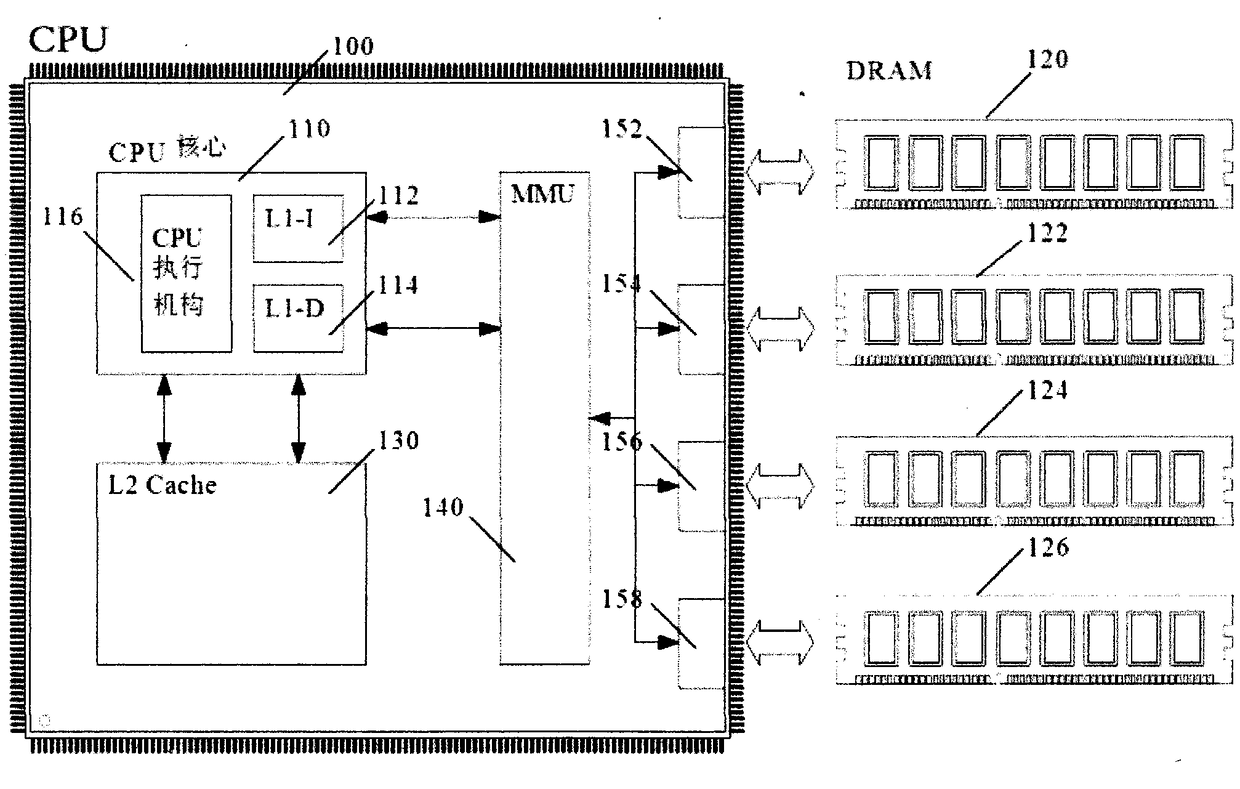

[0026] As shown in Figures 1 and 2, the CPU independent chip 100 in the CPU system of the present invention integrates a CPU core 110, a secondary cache 130, a memory access controller (MMU) 140, and four memory channels. The CPU core 110 contains a CPU execution mechanism 116, a first-level instruction cache 112 (i.e., L1-I Cache), and a first-level data cache 114 (i.e., L1-D Cache). The secondary cache 130 exchanges data directly with the CPU core 110. The four memory channels (memory channel one 152, memory channel two 154, memory channel three 156, and memory channel four 158) communicate with the MMU 140 and accept its management instructions.
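The component layout in paragraph [0026] can be sketched as a small data model. This is only an illustrative sketch: the class names, field names, and the `l1_for` and `managed_by_mmu` helpers are hypothetical and do not come from the patent. The integer values are the reference numerals used in the text. The routing of instruction accesses to cache 112 and data accesses to cache 114 is an assumption drawn from the cache names alone.

```python
from dataclasses import dataclass, field
from enum import Enum


class AccessKind(Enum):
    """Hypothetical label for the two kinds of first-level cache access."""
    INSTRUCTION = "instruction"
    DATA = "data"


@dataclass
class CpuCore:
    """CPU core 110: execution mechanism plus split first-level caches."""
    ref: int = 110
    execution_mechanism: int = 116
    l1_instruction_cache: int = 112  # L1-I Cache
    l1_data_cache: int = 114         # L1-D Cache

    def l1_for(self, kind: AccessKind) -> int:
        """Return the reference numeral of the L1 cache for this access kind.

        Assumption: instruction accesses use L1-I and data accesses use L1-D.
        """
        if kind is AccessKind.INSTRUCTION:
            return self.l1_instruction_cache
        return self.l1_data_cache


@dataclass
class CpuChip:
    """CPU independent chip 100 and the components integrated on it."""
    ref: int = 100
    core: CpuCore = field(default_factory=CpuCore)
    secondary_cache: int = 130  # exchanges data directly with the core
    mmu: int = 140              # memory access controller
    memory_channels: tuple = (152, 154, 156, 158)

    def managed_by_mmu(self) -> tuple:
        """Memory channels that accept management instructions from MMU 140."""
        return self.memory_channels


chip = CpuChip()
print(chip.core.l1_for(AccessKind.INSTRUCTION))
print(chip.managed_by_mmu())
```

Modeling the chip as nested records keeps the containment described in the text visible: the first-level caches belong to the core, while the secondary cache, the MMU, and the four memory channels sit at chip level.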

[0027] The MMU 140 exchanges data with the instruction and data filling mechanism of the CPU core 110. In Figure 1, the first-level cache of the CPU independent chip 100 adopts a separate storage structure for instructions and data: instructions are stored in the first-l...
