Neural network reinforcement learning method and system based on a fitness track

A reinforcement learning and neural network technology, applied to neural learning methods, biological neural network models, and related fields. It addresses the poor learning ability of reinforcement learning, limited computer storage, and the inability of existing methods to meet application needs, with the effect of improving generalization performance and speeding up convergence.

Pending Publication Date: 2019-04-05

CHINA PETROLEUM & CHEM CORP +1


AI Technical Summary

Problems solved by technology

[0002] Reinforcement learning is increasingly used in artificial intelligence, in areas including industrial production, elevator scheduling, and path planning. It can solve decision-making problems such as the optimization of stochastic or uncertain dynamic systems. As reinforcement learning develops and its applications expand, it must be combined with more and more techniques and algorithms, and the classic look-up table method can no longer meet these needs.

Traditional reinforcement learning algorithms store the value function for each state-action pair in a table for lookup. Computer storage is limited, however, and for problems with a continuous state space the table method cannot store such a large number of value-function entries.
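To make the storage argument concrete, the following minimal sketch counts the entries a look-up table would need if every state dimension were split into a fixed number of bins. The function names, the uniform binning, and the 8 bytes per stored value are illustrative assumptions and do not come from the patent text.

```python
def q_table_entries(bins_per_dim: int, state_dims: int, n_actions: int) -> int:
    """Number of Q values in a table over a uniformly discretized state space."""
    return (bins_per_dim ** state_dims) * n_actions


def q_table_gib(bins_per_dim: int, state_dims: int, n_actions: int,
                bytes_per_value: int = 8) -> float:
    """Approximate table size in GiB, assuming one stored number per entry."""
    entries = q_table_entries(bins_per_dim, state_dims, n_actions)
    return entries * bytes_per_value / 2 ** 30


if __name__ == "__main__":
    # The entry count grows exponentially with the number of state dimensions.
    for dims in (2, 4, 6, 8):
        print(dims, q_table_entries(100, dims, 4), round(q_table_gib(100, dims, 4), 3))
```

The count is exponential in the number of state dimensions, which is why a finer grid or a larger state vector quickly exceeds available memory.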

[0003] A common response to this problem is to discretize the continuous space into a finite set of separate states and then apply a classic reinforcement learning algorithm. This causes problems of its own: the discretized states may no longer have the Markov property, and hidden states that cannot be observed directly may be introduced. In that case the policy cannot converge, and the learning ability of reinforcement learning deteriorates.
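The loss of the Markov property can be seen in a toy example. In the sketch below, two continuous positions fall into the same discrete cell, yet the same action sends them to different cells, so the next discrete state depends on a position inside the cell that the discretized agent cannot observe. The bin width, the step size, and all names are hypothetical values chosen only for illustration.

```python
import math
from typing import Tuple

BIN_WIDTH = 1.0  # assumed width of each discrete cell
STEP = 0.3       # assumed displacement caused by the single available action


def to_bin(x: float) -> int:
    """Map a continuous position to the index of its discrete cell."""
    return math.floor(x / BIN_WIDTH)


def move(x: float) -> float:
    """Deterministic continuous dynamics: shift the position by STEP."""
    return x + STEP


def discrete_transition(x: float) -> Tuple[int, int]:
    """Return (cell before the action, cell after the action)."""
    return to_bin(x), to_bin(move(x))


if __name__ == "__main__":
    # Both positions start in cell 0, but they end in different cells.
    print(discrete_transition(0.1))
    print(discrete_transition(0.8))
```

From the agent's point of view, cell 0 followed by the same action sometimes leads to cell 0 and sometimes to cell 1, even though the underlying dynamics are deterministic.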

[0004] In addition, how quickly the neural network approximates the value function, and whether it reaches a global extremum, remain problems to be solved. Some existing algorithms tend to trap reinforcement learning in a local extremum, so the optimal decision cannot be obtained and learning fails.


Examples


Embodiment

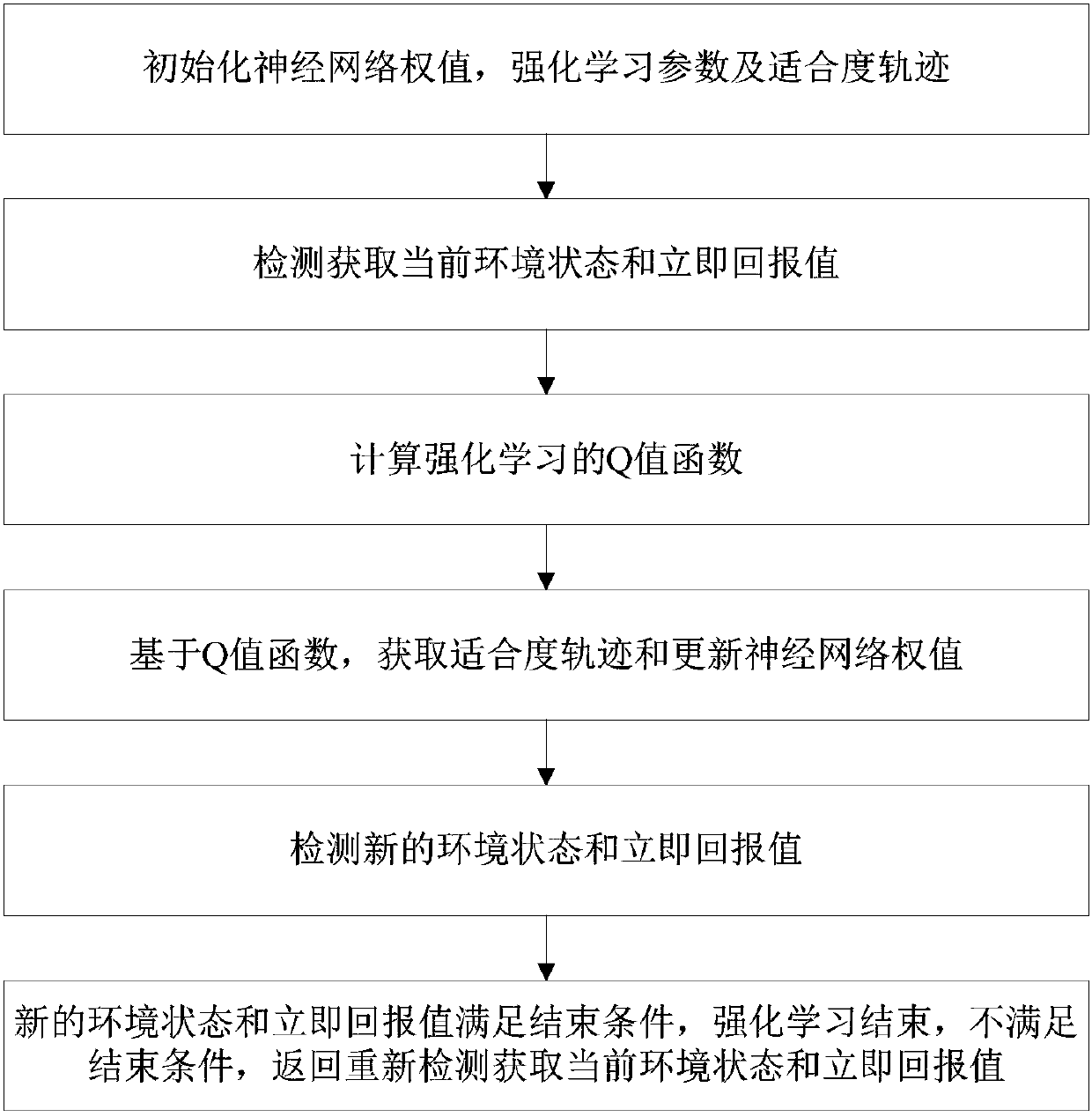

[0115] Figure 1 is a flowchart showing the steps of a neural network reinforcement learning method based on a fitness trajectory according to an exemplary embodiment of the present invention.

[0116] As shown in Figure 1, the neural network reinforcement learning method based on a fitness trajectory in this embodiment includes:

[0117] Initializing the neural network weights, the reinforcement learning parameters, and the fitness trajectory;
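The initialization step could be sketched as follows. This is only an illustration under stated assumptions: the "fitness trajectory" is treated here as what the reinforcement learning literature usually calls an eligibility trace (one value per weight, starting at zero), the network is reduced to a single layer of weights, and the class name, function name, and default parameter values are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class AgentState:
    """Hypothetical container for the quantities set up in the first step."""
    weights: List[List[float]]  # one row of network weights per action
    trace: List[List[float]]    # fitness trajectory, same shape as weights
    alpha: float = 0.05         # learning rate (assumed value)
    gamma: float = 0.95         # discount factor (assumed value)
    lam: float = 0.8            # trace decay rate (assumed value)
    epsilon: float = 0.1        # exploration rate (assumed value)


def init_agent(n_inputs: int, n_actions: int, seed: int = 0) -> AgentState:
    """Small random weights and an all-zero trace of matching shape."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-0.01, 0.01) for _ in range(n_inputs)]
               for _ in range(n_actions)]
    trace = [[0.0] * n_inputs for _ in range(n_actions)]
    return AgentState(weights=weights, trace=trace)
```

Keeping the trace the same shape as the weights means each weight has its own record of how recently it contributed to a decision.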


Abstract

The invention discloses a neural network reinforcement learning method and system based on a fitness track. The method comprises the following steps: initializing the neural network weights, the reinforcement learning parameters, and the fitness track; obtaining the current environment state and immediate return value; calculating the Q value function of reinforcement learning; acquiring the fitness track and updating the neural network weights; and detecting the new environment state and immediate return value. When the new environment state and immediate return value meet the end condition, reinforcement learning finishes; otherwise, the method returns to the step of obtaining the current environment state and immediate return value. The method solves the function approximation problem of reinforcement learning in continuous state spaces. The fitness track also stores the access path of correct state-action pairs already experienced, which improves the generalization performance of the neural network and ultimately speeds up convergence of the algorithm.
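The loop described in the abstract can be sketched as below. This is not the patented method: it is a minimal SARSA(λ)-style illustration that assumes the fitness track behaves like an accumulating eligibility trace, replaces the neural network with a single linear layer per action, and uses a made-up one-dimensional environment. Every name, hyperparameter, and reward value is an assumption.

```python
import math
import random

GAMMA, LAMBDA, ALPHA, EPSILON = 0.95, 0.8, 0.05, 0.1  # assumed hyperparameters
ACTIONS = (-0.1, 0.1)  # hypothetical actions: move left or right on a line


def features(s):
    """Hypothetical network input for a scalar state in [0, 1]."""
    return [1.0, s]


def q_value(w, s, a):
    """Single-layer network: one linear output unit per action."""
    return sum(wi * xi for wi, xi in zip(w[a], features(s)))


def env_step(s, a):
    """Toy environment (assumption): the episode ends on reaching s = 1."""
    s2 = min(max(s + ACTIONS[a], 0.0), 1.0)
    done = s2 >= 1.0 - 1e-9
    return s2, (1.0 if done else -0.01), done


def choose(w, s, rng):
    """Epsilon-greedy action selection over the current Q values."""
    if rng.random() < EPSILON:
        return rng.randrange(len(ACTIONS))
    return max(range(len(ACTIONS)), key=lambda a: q_value(w, s, a))


def train(episodes=200, max_steps=200, seed=0):
    rng = random.Random(seed)
    # Initialize the network weights (parameters are the constants above).
    w = [[rng.uniform(-0.01, 0.01) for _ in range(2)] for _ in ACTIONS]
    for _ in range(episodes):
        e = [[0.0, 0.0] for _ in ACTIONS]  # trace, reset for each episode
        s = 0.0                            # current environment state
        a = choose(w, s, rng)
        for _ in range(max_steps):
            s2, r, done = env_step(s, a)   # new state and immediate return
            a2 = choose(w, s2, rng)
            # Q values of the current and next state-action pairs, TD error.
            target = r if done else r + GAMMA * q_value(w, s2, a2)
            delta = target - q_value(w, s, a)
            # Decay the trace, add the gradient for the chosen action,
            # then update every weight in proportion to its trace.
            x = features(s)
            for b in range(len(ACTIONS)):
                for i in range(len(x)):
                    e[b][i] = GAMMA * LAMBDA * e[b][i] + (x[i] if b == a else 0.0)
                    w[b][i] += ALPHA * delta * e[b][i]
            if done:                       # end condition met
                break
            s, a = s2, a2                  # otherwise continue from new state
    return w
```

Calling `train()` returns the learned weight rows, and `q_value(w, s, a)` can then be evaluated at any continuous state without a table. Because the trace spreads each temporal-difference error over recently visited state-action pairs, one rewarding step updates the whole path that led to it, which is the intuition behind the faster convergence claimed above.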

Description

Technical field

[0001] The present invention relates to the technical field of machine learning, and more specifically to a neural network reinforcement learning method and system based on a fitness trajectory.

Background art

[0002] Reinforcement learning is increasingly used in artificial intelligence, in areas including industrial production, elevator scheduling, and path planning. It can solve decision-making problems such as the optimization of stochastic or uncertain dynamic systems. As reinforcement learning develops and its applications expand, it must be combined with more and more techniques and algorithms, and the classic look-up table method can no longer meet these needs. Traditional reinforcement learning algorithms store the value function for each state-action pair in a table for lookup. Computer storage is limited, however, and the value table method cannot store such a large numbe...


Application Information

IPC(8): G06N3/08

CPC: G06N3/08

Inventor: 王婷婷

Owner: CHINA PETROLEUM & CHEM CORP
