Compression method, system and terminal equipment of a deep neural network

A compression method, system and terminal equipment for a deep neural network, in the field of deep neural network compression. The technology addresses the problems of low classification accuracy and low computing efficiency, preserving classification accuracy while improving computing efficiency.


Examples

Embodiment 1

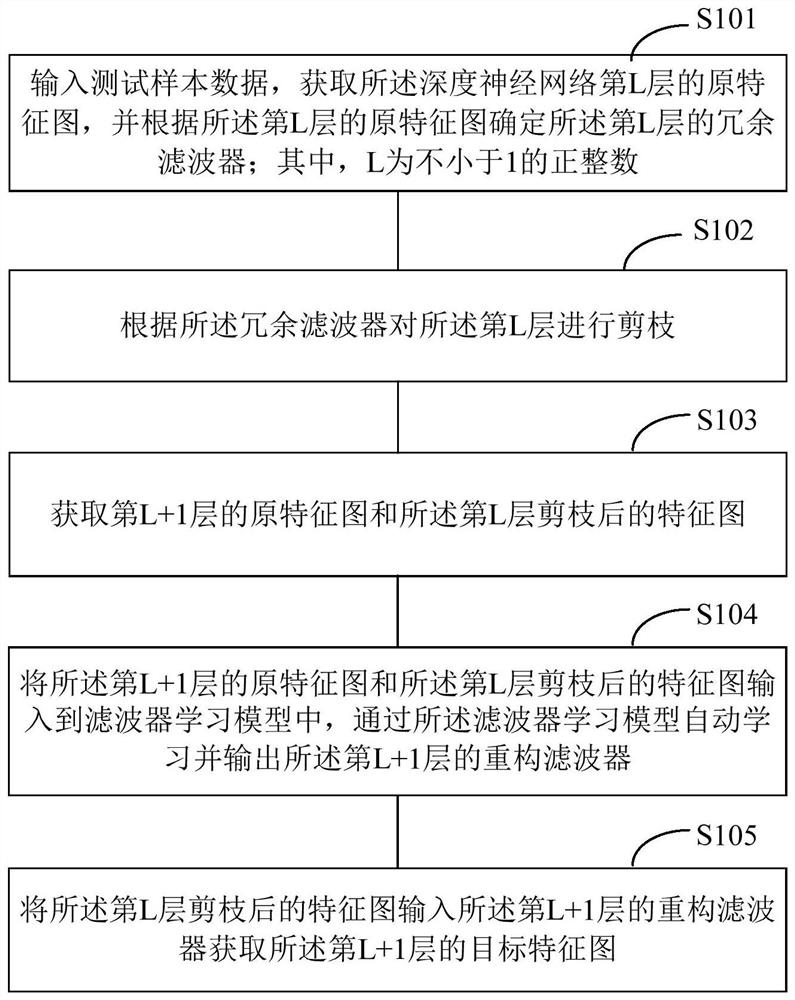

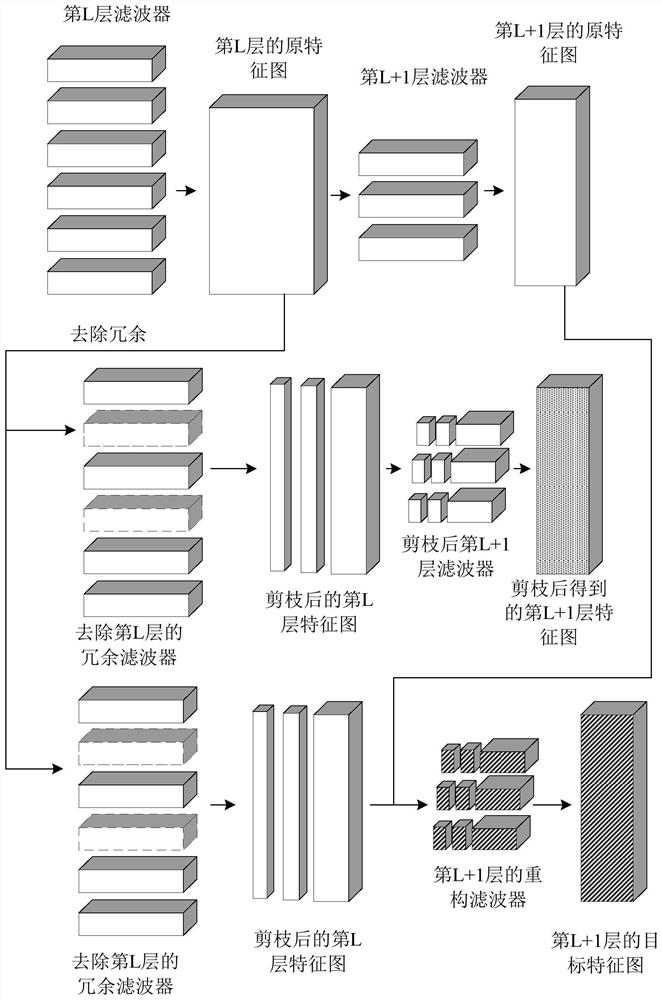

[0043] As shown in Figure 1, this embodiment provides a compression method for a deep neural network. The method is mainly used in audio and video processing equipment, face recognition equipment and other computer equipment that classifies, detects and segments audio, video and images. These devices may be general terminal devices, mobile terminal devices, embedded terminal devices or non-embedded terminal devices, which is not limited here. The compression method specifically includes:

[0044] Step S101: Input test sample data, obtain the original feature map of the L-th layer of the deep neural network, and determine the redundant filter of the L-th layer according to the original feature map of the L-th layer, where L is a positive integer not less than 1.

[0045] It should be noted that the test sample data is set to test the classification accuracy of the compressed deep neural network and the uncompressed de...

Embodiment 2

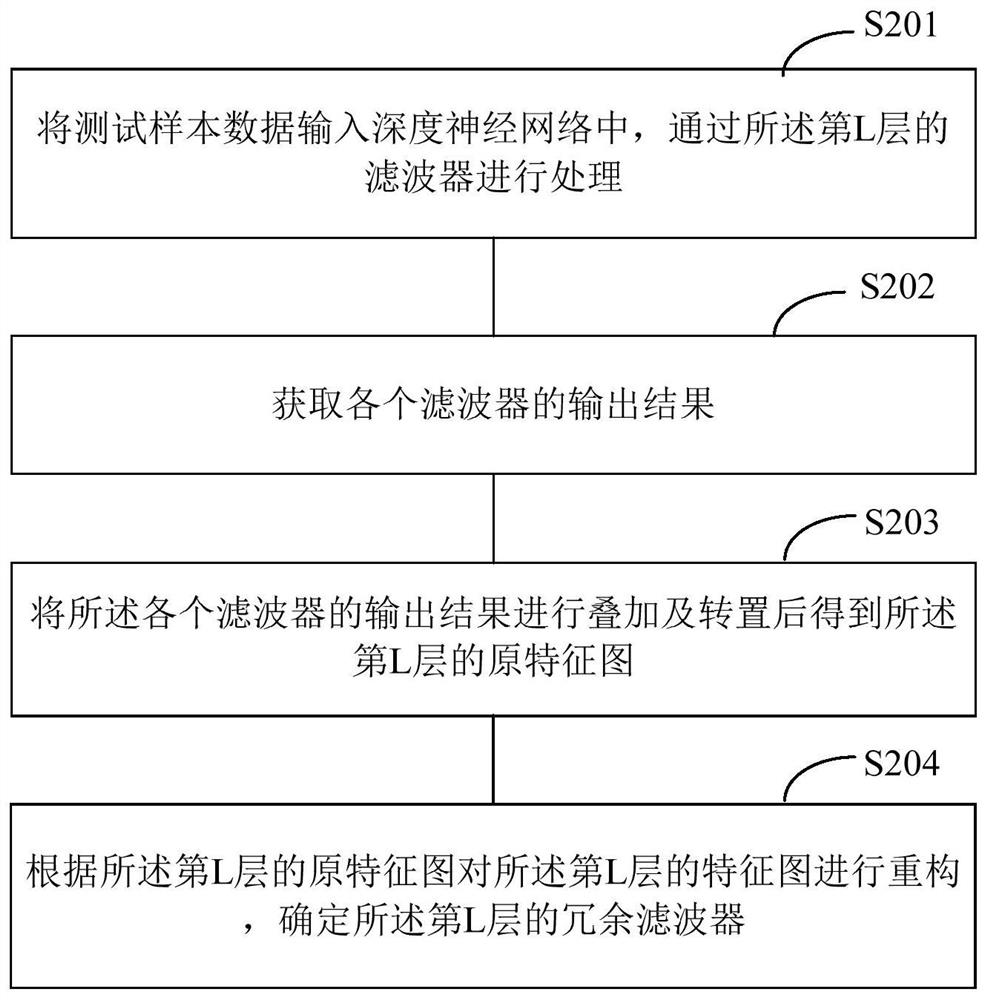

[0064] As shown in Figure 3, in this embodiment, step S101 in Embodiment 1 specifically includes:

[0065] Step S201: Input the test sample data into the deep neural network and process it through the filters of the L-th layer.

[0066] Step S202: Obtain the output results of each filter.

[0067] Step S203: Obtain the original feature map of the L-th layer after superimposing and transposing the output results of the filters.

[0068] In a specific application, the test sample data is input into the deep neural network. After the data is processed by the filters of the L-th layer, each filter produces its output result, and the original feature map of the L-th layer is obtained by superimposing and transposing these output results. For example, if the test sample data is 5000 test sample images, the 5000 test sample images are input into the deep neural network, and after passing through the n filters of the L-th layer, th...
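Steps S201 to S203 can be illustrated with a minimal NumPy sketch. It is not the patent's implementation: the helper names `apply_filter` and `layer_feature_map`, the single-channel inputs, the small array sizes and the output layout `(samples, filters, height, width)` are all assumptions chosen for illustration, since the excerpt does not state the exact shapes.

```python
import numpy as np

def apply_filter(images, kernel):
    """Valid 2-D cross-correlation of every image with one kernel.

    Hypothetical stand-in for one filter of the L-th layer.
    images: (N, H, W), kernel: (kh, kw) -> output: (N, H-kh+1, W-kw+1)
    """
    n, h, w = images.shape
    kh, kw = kernel.shape
    out = np.zeros((n, h - kh + 1, w - kw + 1))
    for i in range(out.shape[1]):
        for j in range(out.shape[2]):
            patch = images[:, i:i + kh, j:j + kw]
            out[:, i, j] = np.sum(patch * kernel, axis=(1, 2))
    return out

def layer_feature_map(images, kernels):
    # S201 / S202: pass the samples through each filter and keep every output.
    outputs = [apply_filter(images, k) for k in kernels]
    # S203: superimpose (stack) the outputs -> (n_filters, N, H', W'),
    # then transpose so that samples come first -> (N, n_filters, H', W').
    stacked = np.stack(outputs, axis=0)
    return np.transpose(stacked, (1, 0, 2, 3))

# Assumed toy sizes: 8 samples of 6x6 pixels, 4 filters of 3x3.
rng = np.random.default_rng(0)
images = rng.standard_normal((8, 6, 6))
kernels = rng.standard_normal((4, 3, 3))
fmap = layer_feature_map(images, kernels)
print(fmap.shape)  # (8, 4, 4, 4)
```

In this layout, channel `k` of the feature map is exactly the output of filter `k`, which is the correspondence the later pruning steps rely on.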

Embodiment 3

[0076] As shown in Figure 4, in this embodiment, step S102 in Embodiment 1 specifically includes:

[0077] Step S301: Use the redundant filter to find its corresponding channel.

[0078] In a specific application, since the redundant filter corresponds to a channel in the feature map, the redundant channel can be found through the redundant filter.

[0079] Step S302: Prune the redundant filter from the filters of the L-th layer.

[0080] Step S303: Prune the channel corresponding to the redundant filter from the original feature map of the L-th layer to obtain the pruned feature map of the L-th layer.

[0081] In a specific application, the redundant filter is pruned from the filters of the L-th layer, and the channel corresponding to the redundant filter is pruned from the original feature map of the L-th layer, which completes the pruning process. The result obtained is the filter of the prun...
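A minimal NumPy sketch of steps S301 to S303 follows. It assumes that the filters are stored along axis 0 of a weight array and that the feature map has the layout `(samples, channels, height, width)`, with channel `k` produced by filter `k`. The function name `prune_layer` and the example indices are hypothetical, and how the redundant filters are selected is outside the scope of this sketch.

```python
import numpy as np

def prune_layer(filters, feature_map, redundant):
    """Drop redundant filters and their matching feature-map channels.

    filters:     (n_filters, kh, kw)      assumed weight layout
    feature_map: (N, n_filters, H, W)     assumed feature-map layout
    redundant:   indices of the filters judged redundant
    """
    # S301: filter k produces channel k, so the same indices select both.
    redundant = sorted(set(redundant))
    # S302: remove the redundant filters from the layer's filters.
    pruned_filters = np.delete(filters, redundant, axis=0)
    # S303: remove the corresponding channels from the original feature map.
    pruned_fmap = np.delete(feature_map, redundant, axis=1)
    return pruned_filters, pruned_fmap

# Assumed toy data: 4 filters of 3x3, 2 samples with 5x5 outputs.
filters = np.arange(4 * 3 * 3, dtype=float).reshape(4, 3, 3)
feature_map = np.arange(2 * 4 * 5 * 5, dtype=float).reshape(2, 4, 5, 5)
pruned_filters, pruned_fmap = prune_layer(filters, feature_map, redundant=[1, 3])
print(pruned_filters.shape, pruned_fmap.shape)  # (2, 3, 3) (2, 2, 5, 5)
```

`np.delete` returns new arrays, so the original filters and feature map stay available for comparing the network before and after pruning.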
